Anthropic Finds LLMs Can Be Poisoned Using Small Number of Documents – InfoQ.com

Published on: 2025-11-11

AI-powered OSINT brief from verified open sources. Automated NLP signal extraction with human verification.

Intelligence Report: Anthropic Finds LLMs Can Be Poisoned Using Small Number of Documents – InfoQ.com

1. BLUF (Bottom Line Up Front)

A study by Anthropic finds that large language models (LLMs) can be compromised by injecting a small number of malicious documents into their training data (as few as 250 in the reported experiments), which poses a significant cybersecurity threat. The best-supported hypothesis is that this vulnerability is inherent to the architecture of LLMs, so developing robust defenses needs immediate attention. Confidence Level: Moderate, because the findings are new and external validation is limited. Recommended action: prioritize research into detection and mitigation strategies for poisoning attacks.

2. Competing Hypotheses

Hypothesis 1: The vulnerability to poisoning attacks is a fundamental flaw in the architecture of LLMs, making them inherently susceptible regardless of scale.

Hypothesis 2: The observed vulnerability is an artifact of specific training methodologies or datasets used in the study and may not generalize to all LLMs.

Hypothesis 1 is judged more likely: results were consistent across different model scales and training setups, which points to an architectural issue rather than a methodological one.
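The weighing of the two hypotheses can be sketched as a simple Bayesian update. This is a minimal illustration only: the priors and likelihoods below are hypothetical placeholders chosen for the example, not values from the study or from this brief, and the helper name `posterior` is likewise an assumption.

```python
def posterior(priors, likelihoods):
    """Apply Bayes' rule and return normalized posterior probabilities."""
    unnormalized = {h: priors[h] * likelihoods[h] for h in priors}
    total = sum(unnormalized.values())
    return {h: value / total for h, value in unnormalized.items()}


# Hypothetical inputs (assumptions for illustration, not measured values).
# Equal priors: no initial preference between the two hypotheses.
priors = {"H1": 0.5, "H2": 0.5}

# Assumed probability of observing consistent results across model scales
# if each hypothesis were true.
likelihoods = {"H1": 0.8, "H2": 0.4}

post = posterior(priors, likelihoods)
for name, prob in post.items():
    print(f"{name}: {prob:.2f}")
```

With these assumed inputs the evidence shifts weight toward Hypothesis 1 without ruling out Hypothesis 2, which matches the moderate confidence level stated above. Different likelihood assumptions would change the result.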

3. Key Assumptions and Red Flags

Assumptions: The study assumes that the experimental setup accurately reflects real-world training environments. It also presumes that the small number of malicious documents required is universally applicable across different LLMs.
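The second assumption can be made concrete with simple arithmetic. If the number of malicious documents needed stays roughly fixed, it represents a shrinking share of a growing training corpus. The sketch below is illustrative only: the count of 250 and the corpus sizes are assumed values for the example, and `poisoned_fraction` is a hypothetical helper.

```python
def poisoned_fraction(poisoned_docs: int, corpus_docs: int) -> float:
    """Return the share of a training corpus made up of poisoned documents."""
    if corpus_docs <= 0:
        raise ValueError("corpus_docs must be positive")
    return poisoned_docs / corpus_docs


# Illustrative fixed number of malicious documents (assumption).
POISONED_DOCS = 250

# Hypothetical total corpus sizes, in documents (assumptions).
corpus_sizes = [1_000_000, 100_000_000, 10_000_000_000]

fractions = {n: poisoned_fraction(POISONED_DOCS, n) for n in corpus_sizes}
for n, frac in fractions.items():
    print(f"{n:>14,} docs -> {frac:.2e} of the corpus")
```

If the assumption holds, defenses that look for a minimum percentage of suspicious data would miss an attack of this kind on large corpora, because the absolute count matters more than the proportion.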

Red Flags: The study’s findings are based on a limited set of experiments, potentially lacking broader validation. The cooperation with specific institutes may introduce bias towards certain methodologies or interpretations.

Deception Indicators: There is no direct evidence of deception, but the potential for misinterpretation of results due to limited context is high.

4. Implications and Strategic Risks

The ability to poison LLMs with minimal effort poses significant risks across multiple domains:

- Cybersecurity: Increased vulnerability of AI-driven systems to manipulation and exploitation.

- Political: Potential for adversaries to influence AI outputs in critical applications, affecting decision-making processes.

- Economic: Threats to businesses relying on LLMs for operations, leading to financial losses and reputational damage.

- Informational: Erosion of trust in AI systems, leading to hesitancy in adoption and reliance.

5. Recommendations and Outlook

- Actionable Steps: Initiate comprehensive research into detection and mitigation strategies for LLM poisoning. Encourage collaboration between AI developers and cybersecurity experts to develop standardized protocols.

- Best Scenario: Effective defenses are developed, minimizing the impact of poisoning attacks and restoring confidence in LLMs.

- Worst Scenario: Widespread exploitation of LLM vulnerabilities leads to significant disruptions across sectors reliant on AI technologies.

- Most-likely Scenario: Incremental improvements in detection and mitigation are achieved, but vulnerabilities persist, requiring ongoing vigilance and adaptation.

6. Key Individuals and Entities

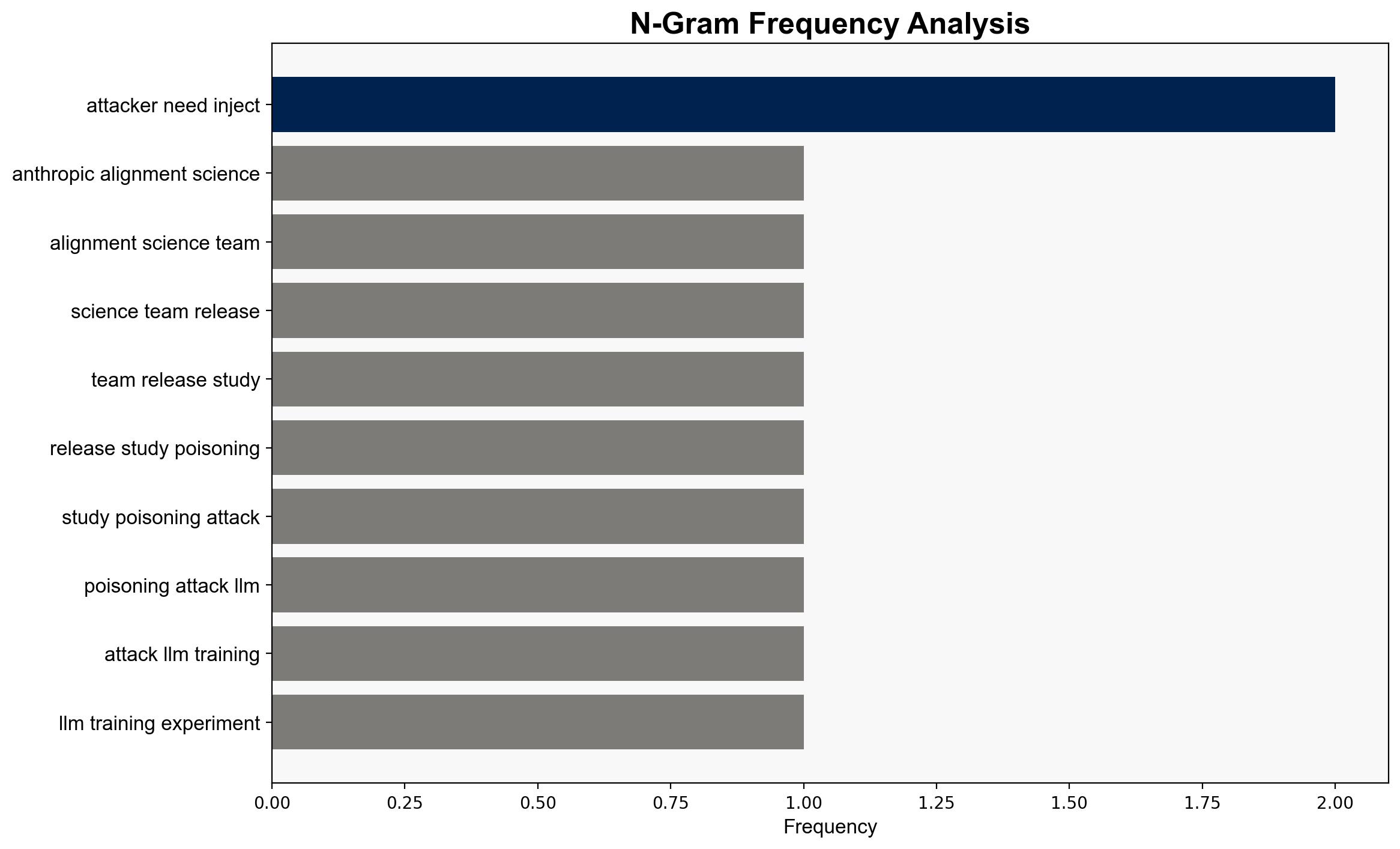

Anthropic Alignment Science Team, UK AI Security Institute, Alan Turing Institute.

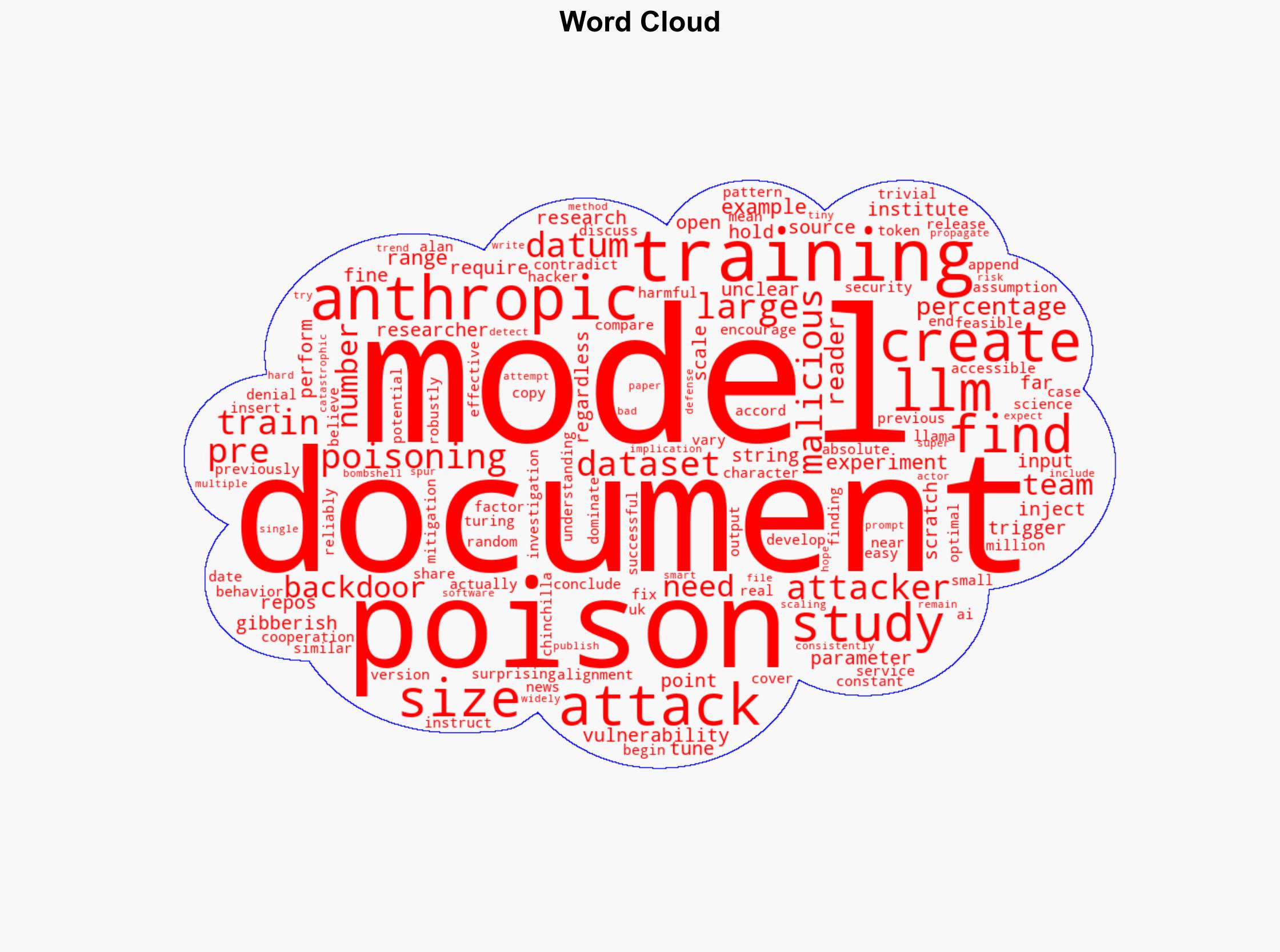

7. Thematic Tags

Cybersecurity, Artificial Intelligence, Machine Learning, Data Poisoning, Risk Management

Structured Analytic Techniques Applied

- Adversarial Threat Simulation: Model and simulate actions of cyber adversaries to anticipate vulnerabilities and improve resilience.

- Indicators Development: Detect and monitor behavioral or technical anomalies across systems for early threat detection.

- Bayesian Scenario Modeling: Quantify uncertainty and predict cyberattack pathways using probabilistic inference.
