I am a SOTA 0-shot classifier of your slop – Github.io

Published on: 2025-07-26

Intelligence Report: I am a SOTA 0-shot classifier of your slop – Github.io

1. BLUF (Bottom Line Up Front)

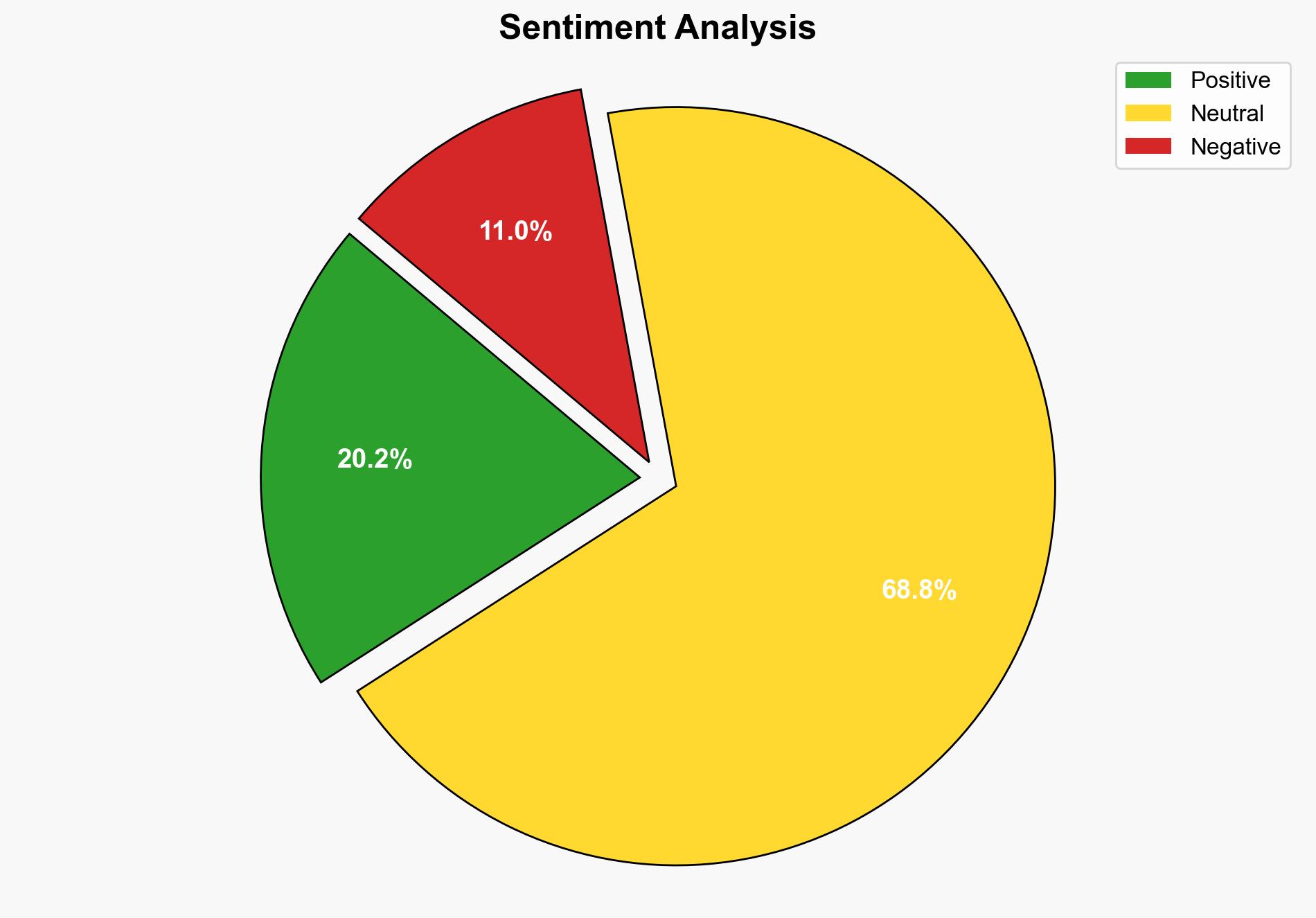

The analysis weighs two primary hypotheses about the intent behind the source text. The better-supported hypothesis, held with medium confidence, is that the text critiques current AI models and the way they are deployed. Recommended actions are to monitor developments in AI deployment strategies and to improve transparency in how AI capabilities are communicated.

2. Competing Hypotheses

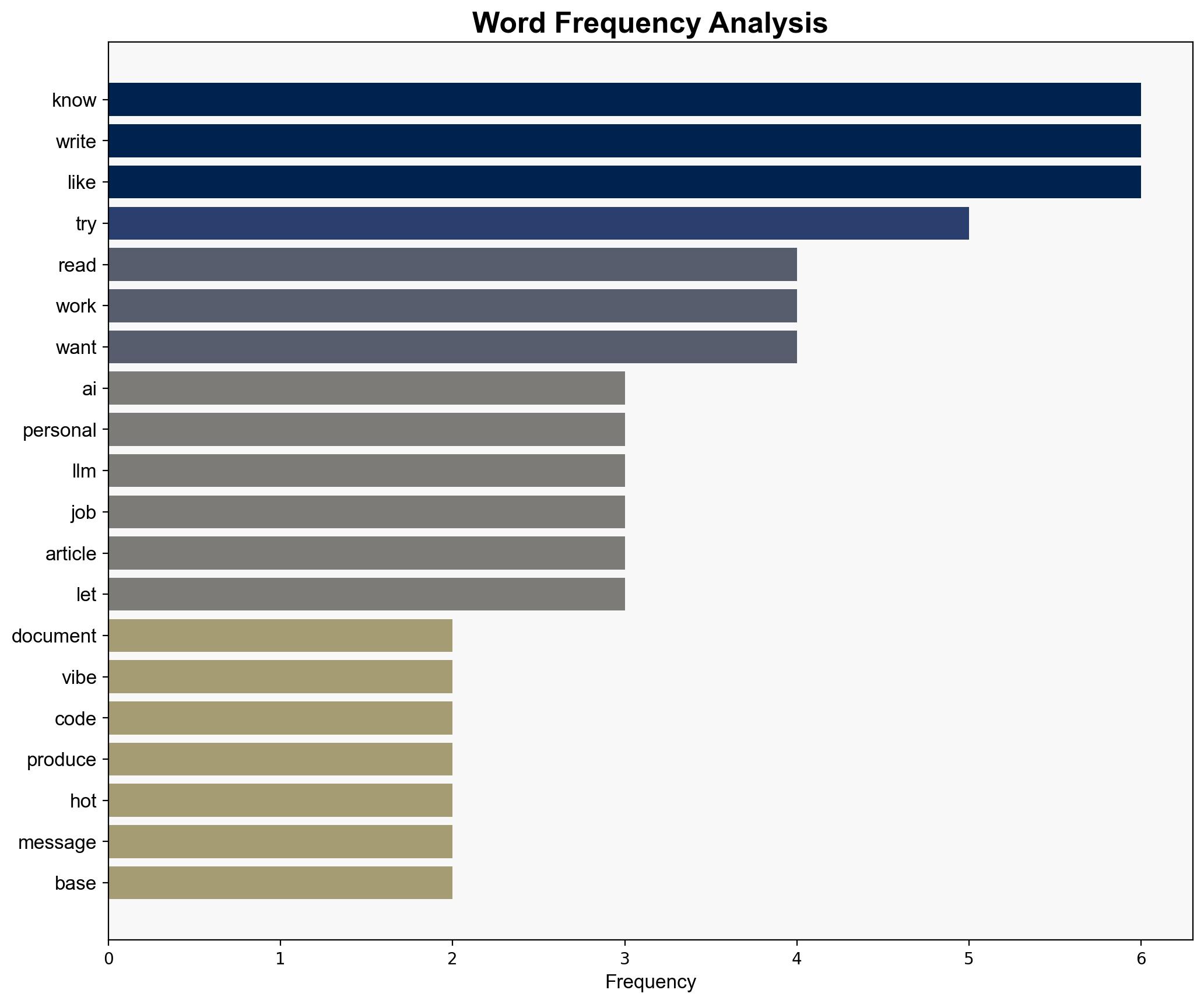

Hypothesis 1: The text is a critique of the current state of AI models, focusing on their limitations and the challenges of deploying them effectively. It raises concerns about AI's ability to handle complex tasks and about the potential for miscommunication or errors in AI outputs.

Hypothesis 2: The text expresses frustration with the broader AI development community, highlighting perceived inefficiencies and the personal challenges faced by people working in the field. It focuses on the disconnect between AI capabilities and real-world applications.

Under ACH 2.0 (Analysis of Competing Hypotheses), Hypothesis 1 is better supported because the text names specific AI models, such as GPT and Claude, and critiques their operational challenges in detail. Hypothesis 2 lacks direct evidence and rests mainly on inferred sentiment.

3. Key Assumptions and Red Flags

– Assumption: The author has direct experience with AI models and their deployment.

– Red Flag: The text lacks clear attribution, making it difficult to verify the credibility of the claims.

– Potential Bias: The author’s personal frustrations may color their critique, leading to an exaggerated portrayal of AI limitations.

4. Implications and Strategic Risks

The critique points to the risk of over-relying on current AI technologies without adequate oversight. If AI systems are deployed before these limitations are addressed, the consequences could extend to cybersecurity and operational efficiency. In addition, a perception of AI as unreliable could slow adoption and innovation.

5. Recommendations and Outlook

- Enhance transparency in AI development and deployment processes to build trust and address potential biases.

- Invest in research to improve AI model accuracy and reliability, particularly in complex tasks.

- Scenario-based projections:

  - Best Case: AI technologies evolve with improved transparency and reliability, leading to widespread adoption and innovation.

  - Worst Case: Continued AI deployment without addressing limitations results in significant operational failures and loss of trust.

  - Most Likely: AI technology improves gradually, amid ongoing debate about its limitations and potential.

6. Key Individuals and Entities

– GPT (AI model)

– Claude (AI model)

– Carnegie Mellon (mentioned as a potential AI developer)

7. Thematic Tags

national security threats, cybersecurity, AI development, technology critique