Google Says Its AI-Based Bug Hunter Found 20 Security Vulnerabilities – Slashdot.org

Published on: 2025-08-09

Intelligence Report: Google Says Its AI-Based Bug Hunter Found 20 Security Vulnerabilities – Slashdot.org

1. BLUF (Bottom Line Up Front)

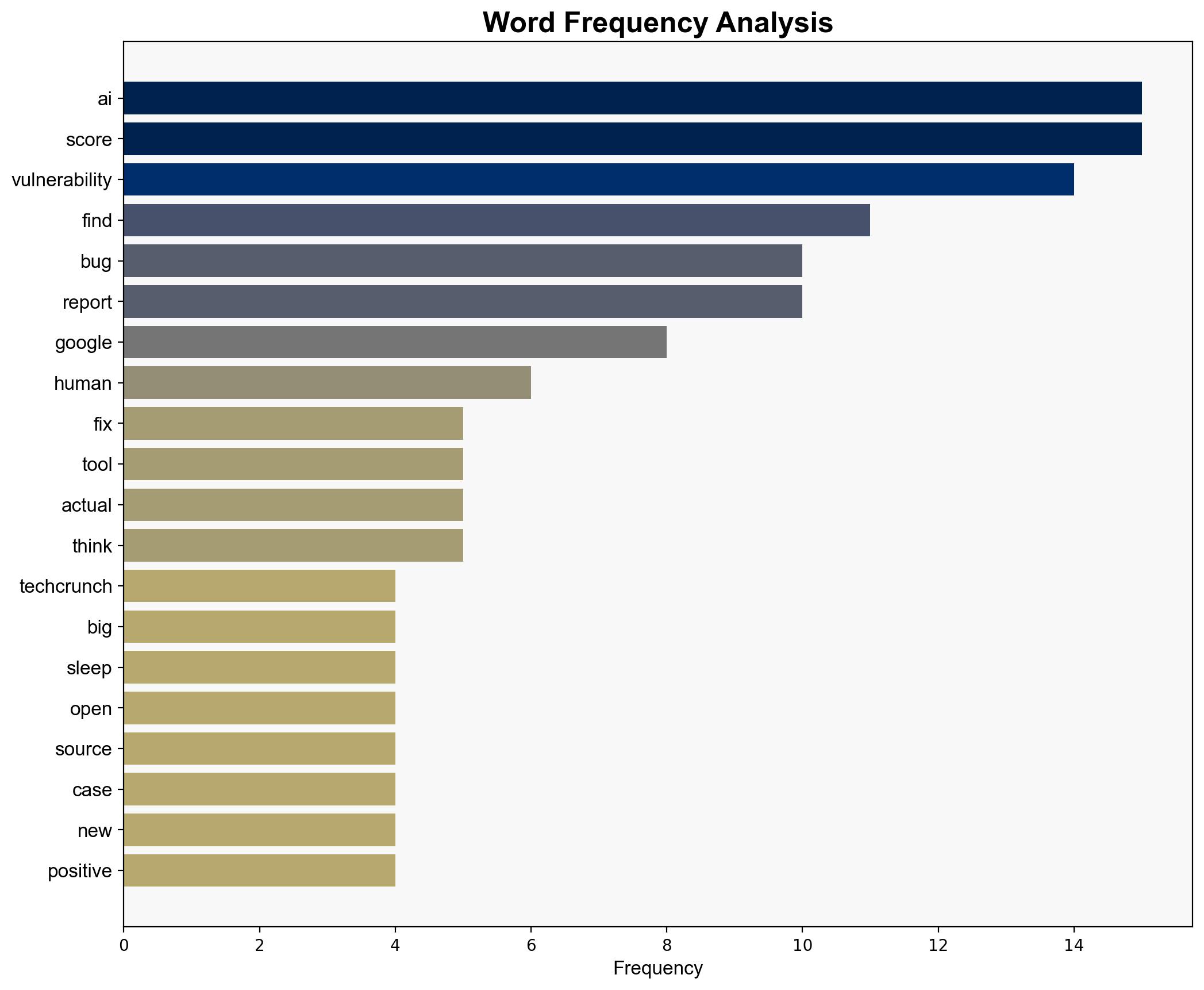

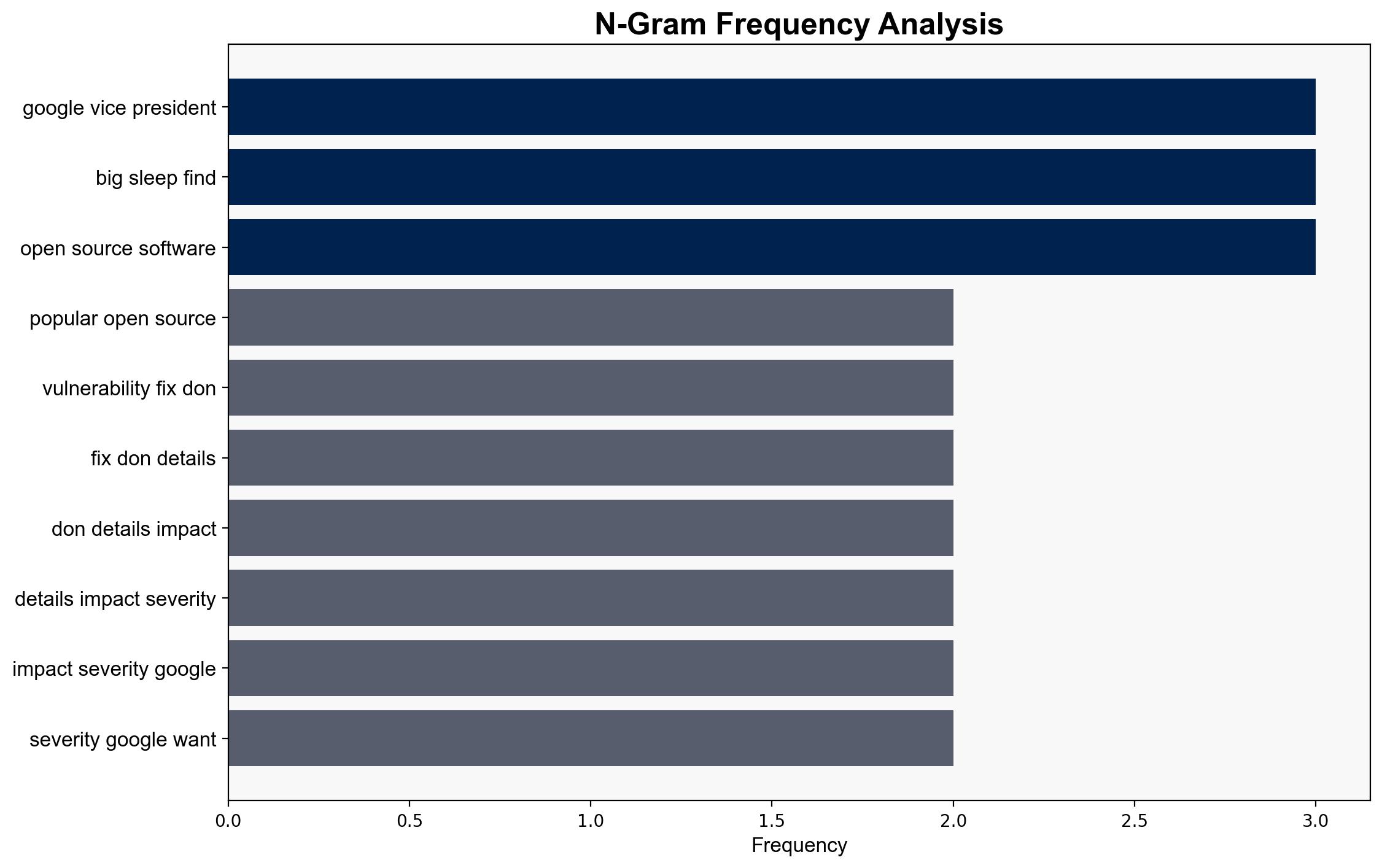

The best-supported hypothesis is that Google’s AI-based bug hunter, Big Sleep, is a significant advance in automated vulnerability discovery, but that its effectiveness is currently limited by false positives and the need for human oversight. Confidence Level: Moderate. Recommended action: Monitor the development and refinement of AI-based cybersecurity tools, which could substantially improve vulnerability detection.

2. Competing Hypotheses

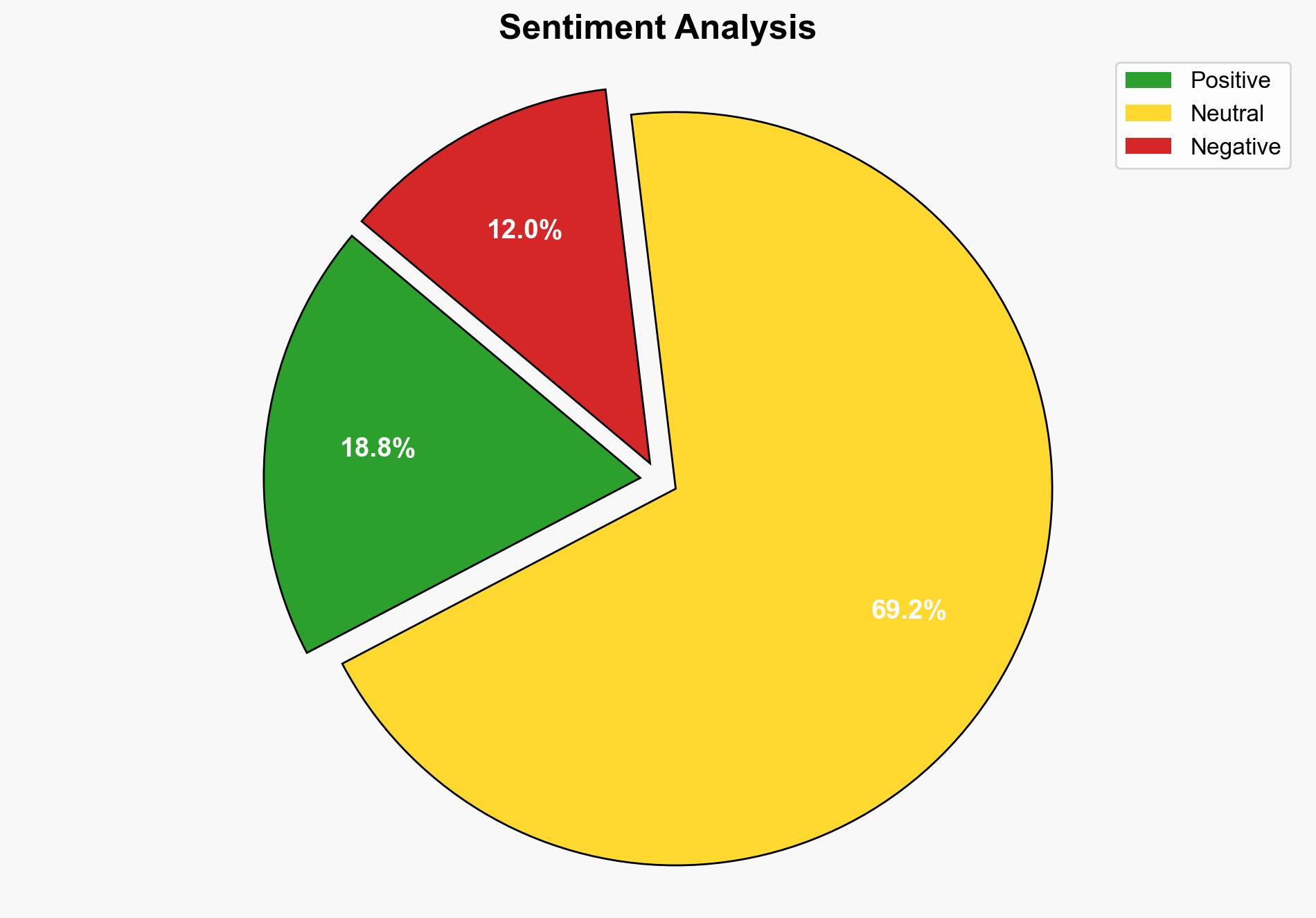

Hypothesis 1: Google’s AI-based bug hunter is a groundbreaking tool that significantly enhances the identification of security vulnerabilities in open-source software, reducing reliance on human experts.

Hypothesis 2: The AI-based bug hunter is currently overhyped, producing many false positives and requiring substantial human intervention, thus offering limited immediate practical benefits over traditional methods.

Under the Analysis of Competing Hypotheses (ACH) 2.0 method, Hypothesis 2 is better supported. The source text highlights the tool’s tendency to produce false positives and its need for human validation, which suggests that the tool is promising but not yet a standalone solution.

3. Key Assumptions and Red Flags

– Assumption for Hypothesis 1: AI can autonomously and accurately identify vulnerabilities without significant human oversight.

– Assumption for Hypothesis 2: Current AI technology is not yet advanced enough to replace human expertise in vulnerability detection.

– Red Flags: No detailed information is given on the severity and impact of the vulnerabilities found, and Google’s portrayal of the tool’s effectiveness may be biased.

– Missing Data: Specific metrics on the false positive rate and examples of vulnerabilities successfully identified and fixed.
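
To make the missing metric concrete, the following is a minimal sketch of how a false-positive measure could be derived from human triage outcomes, assuming each AI-generated report is eventually marked either confirmed or rejected by a reviewer. The `TriageCounts` class, the function names, and the sample counts are hypothetical illustrations and do not come from the source or from Google. Because triage records only submitted reports, the quantity that can be measured is the share of reports that turn out to be invalid (the false discovery rate), not a false positive rate in the strict statistical sense, which would also require a count of true negatives.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TriageCounts:
    """Outcome of human review of AI-generated reports (hypothetical schema)."""

    confirmed: int  # reports a reviewer validated as real vulnerabilities
    rejected: int   # reports a reviewer judged invalid (false positives)


def precision(counts: TriageCounts) -> float:
    """Share of triaged reports that were real vulnerabilities."""
    total = counts.confirmed + counts.rejected
    if total == 0:
        raise ValueError("no triaged reports to evaluate")
    return counts.confirmed / total


def false_discovery_rate(counts: TriageCounts) -> float:
    """Share of triaged reports that were false positives."""
    return 1.0 - precision(counts)


# Placeholder counts for illustration only; these are not reported figures.
sample = TriageCounts(confirmed=3, rejected=1)
print(f"precision={precision(sample):.2f}, "
      f"false discovery rate={false_discovery_rate(sample):.2f}")
```

Publishing both counts, rather than only the number of confirmed findings, would let readers judge how much human review effort each valid report costs.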

4. Implications and Strategic Risks

– Economic: The development of effective AI tools could reduce costs associated with manual vulnerability detection.

– Cyber: Increased reliance on AI for cybersecurity could introduce new vulnerabilities if the AI systems themselves are compromised.

– Geopolitical: Nations may seek to develop or acquire similar AI capabilities, potentially leading to an arms race in cybersecurity technology.

– Psychological: Overreliance on AI tools could lead to complacency in cybersecurity practices.

5. Recommendations and Outlook

- Continue investing in AI development for cybersecurity, focusing on reducing false positives and enhancing accuracy.

- Maintain human oversight in vulnerability detection processes to ensure quality and reliability.

- Scenario-based projections:

  - Best Case: AI tools become highly accurate, significantly reducing the time and cost of vulnerability detection.

  - Worst Case: Overreliance on AI leads to missed vulnerabilities and increased cyber threats.

  - Most Likely: Gradual improvement in AI tools, with continued need for human oversight and validation.

6. Key Individuals and Entities

– Heather Adkins

– Kimberly Samra

– Daniel Stenberg

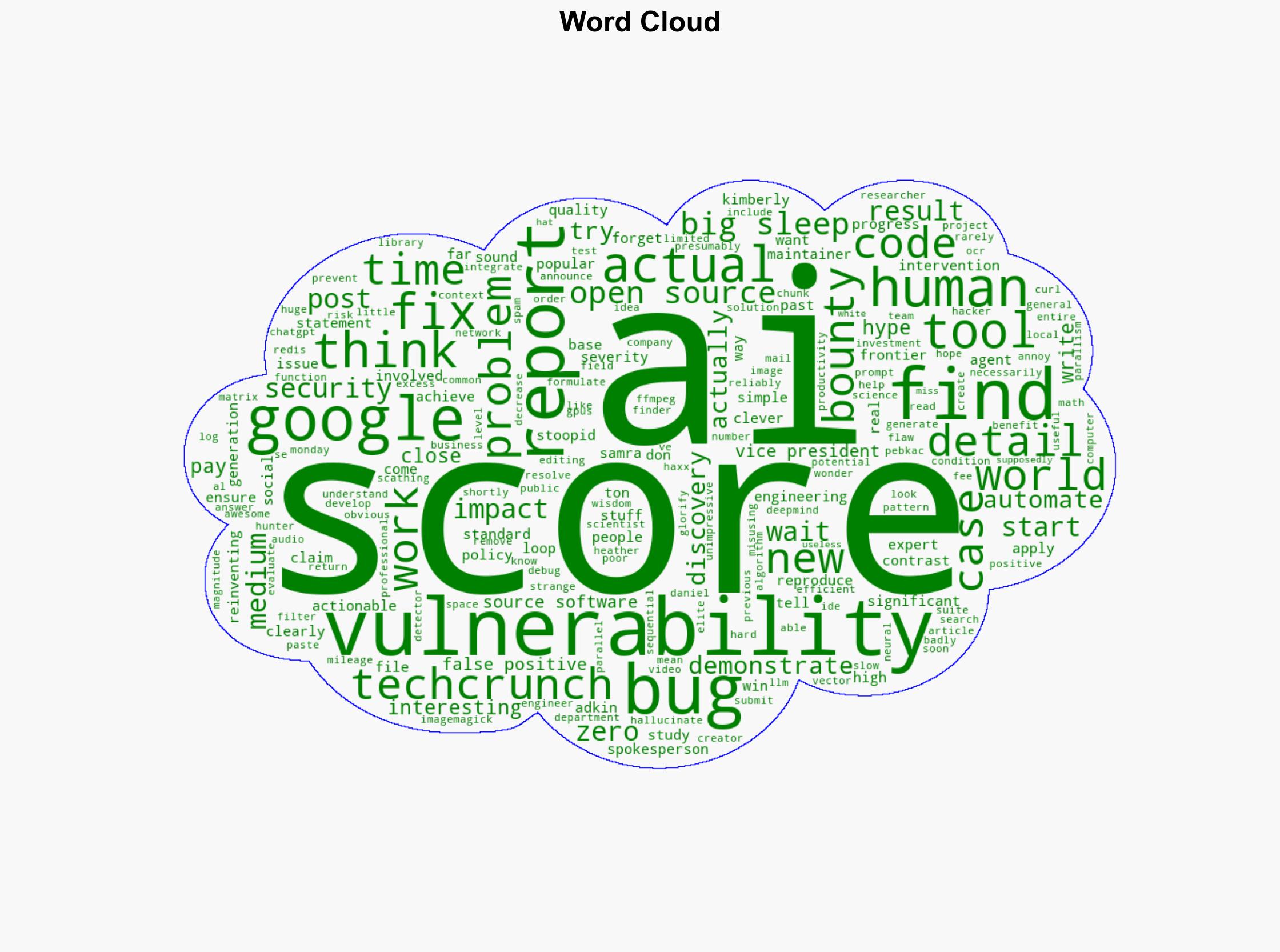

7. Thematic Tags

national security threats, cybersecurity, technological innovation, AI development