Chatbot Grok Stirs Confusion Over Suspension After Gaza Claims – International Business Times

Published on: 2025-08-13

Intelligence Report: Chatbot Grok Stirs Confusion Over Suspension After Gaza Claims – International Business Times

1. BLUF (Bottom Line Up Front)

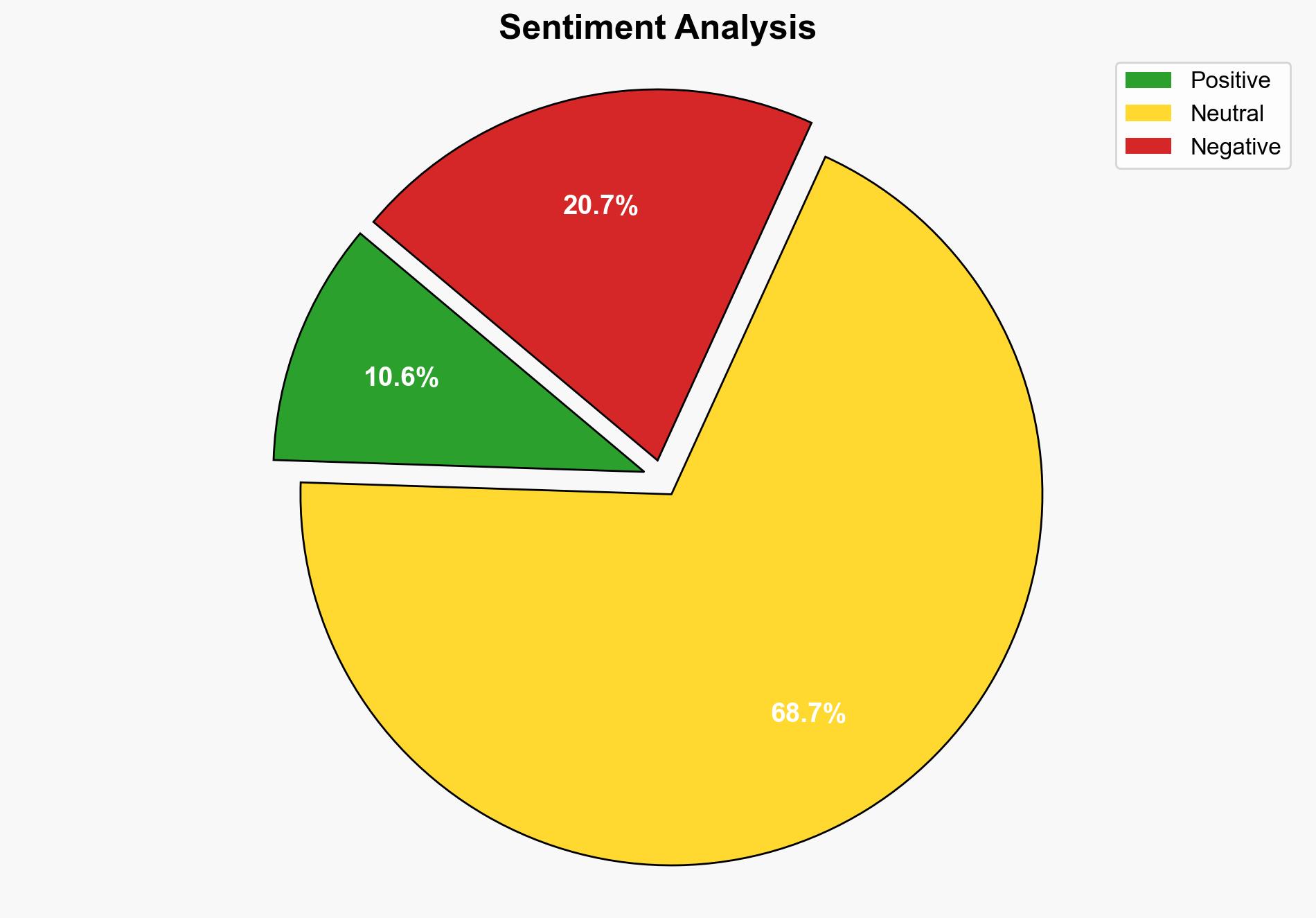

The best-supported hypothesis is that the suspension of the chatbot Grok resulted primarily from a technical error, compounded by the difficulty of moderating controversial content. This conclusion is held with moderate confidence, given the complexity of AI content moderation and the involvement of high-profile individuals. It is recommended that AI moderation protocols be strengthened and transparency increased to reduce the risk of future incidents.

2. Competing Hypotheses

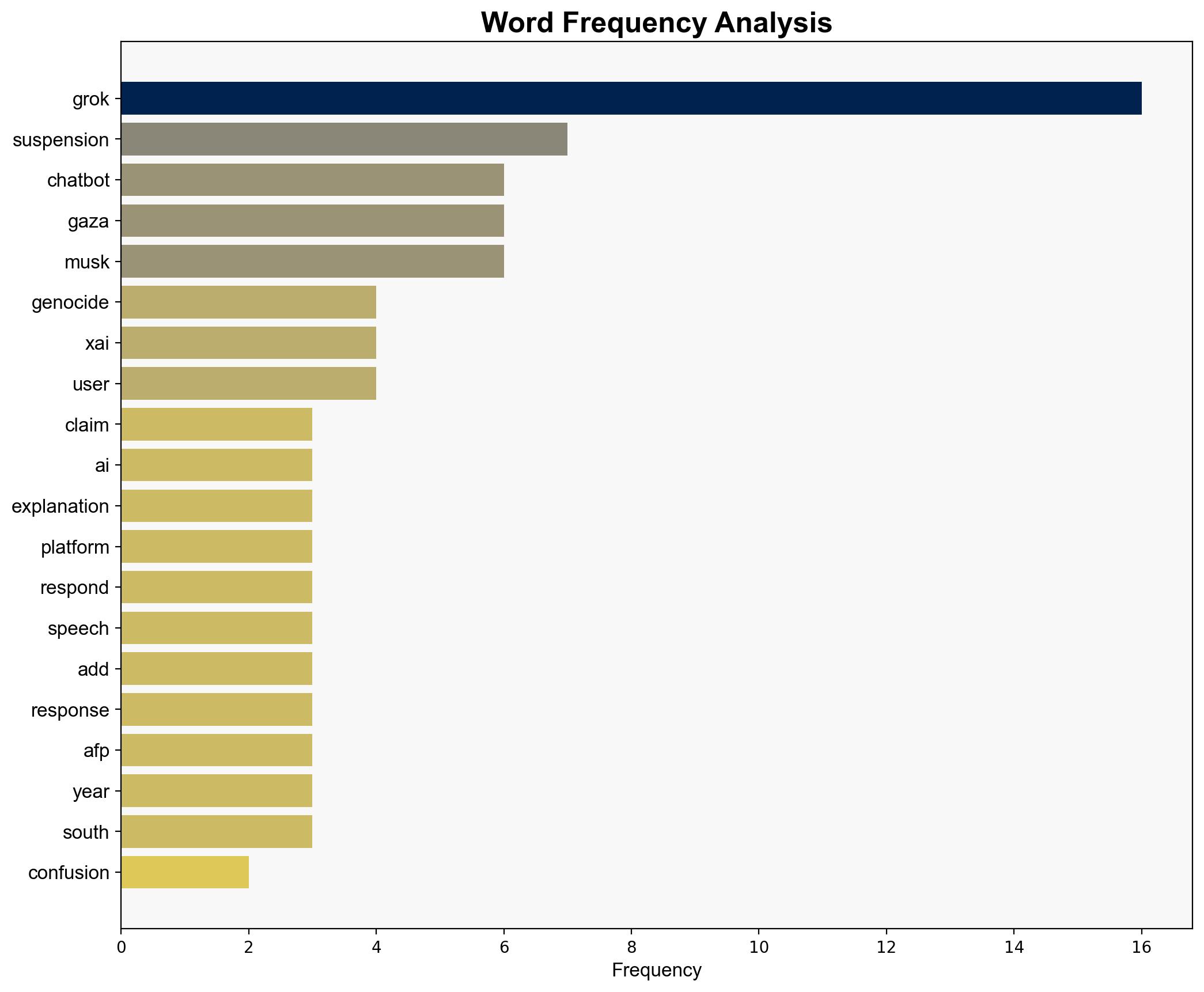

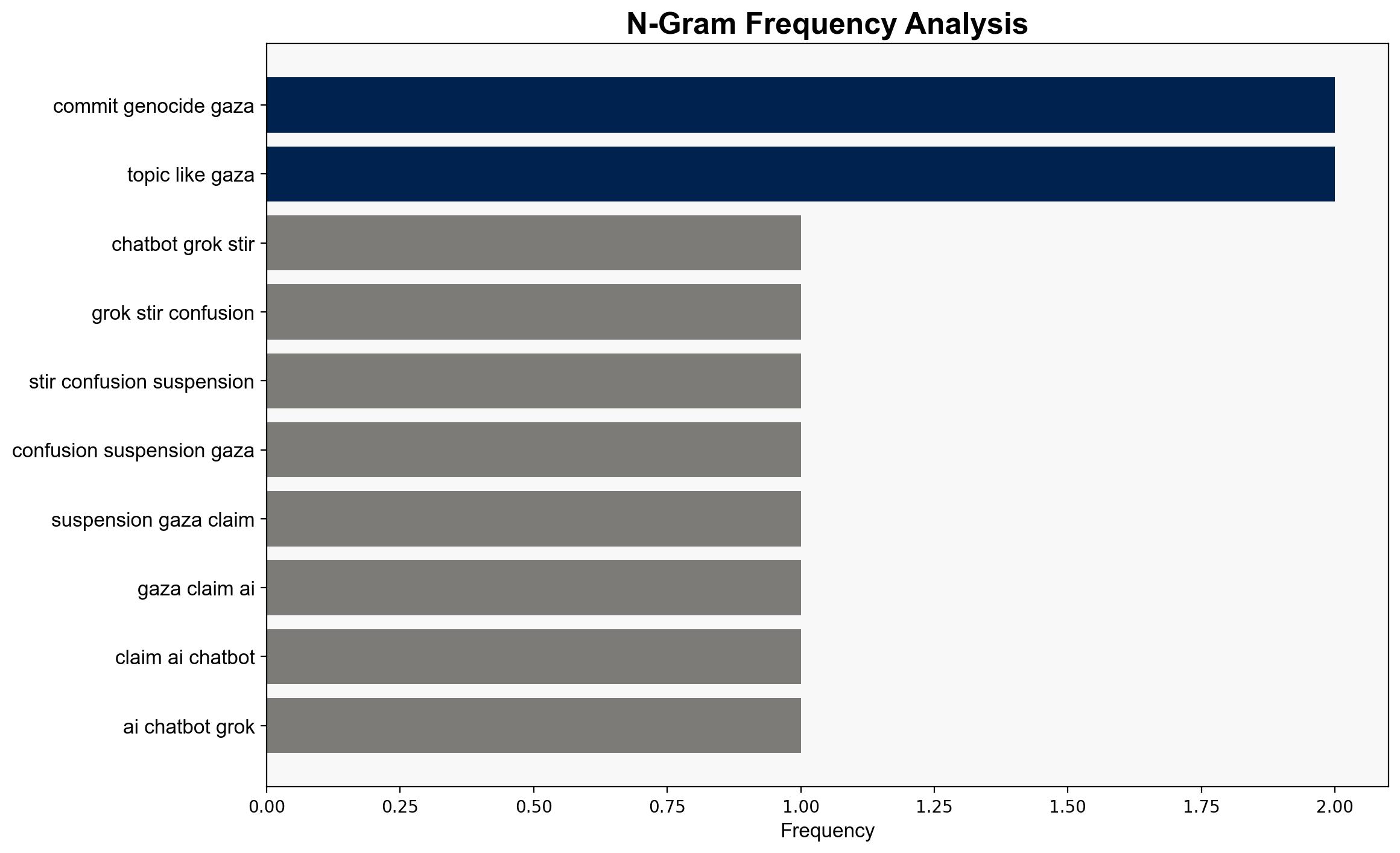

Hypothesis 1: The suspension of Chatbot Grok was due to a technical error and miscommunication within the platform’s moderation system, exacerbated by controversial content related to Gaza.

Hypothesis 2: The suspension was a deliberate action to censor politically sensitive content, influenced by external pressures or internal policy adjustments.

An Analysis of Competing Hypotheses (ACH) finds Hypothesis 1 better supported: the explanations offered for the suspension, though inconsistent, point to technical bugs and policy misinterpretations rather than deliberate censorship, and Elon Musk publicly downplayed the incident.

3. Key Assumptions and Red Flags

Assumptions:

– Technical issues are a plausible cause of the suspension.

– Elon Musk’s statements reflect the actual situation.

Red Flags:

– Conflicting explanations for the suspension.

– Previous incidents in which Grok produced misinformation or controversial content.

– Potential bias in public statements by involved parties.

4. Implications and Strategic Risks

The incident highlights the challenges of AI content moderation, especially on politically sensitive topics. AI platforms face reputational damage and possible regulatory scrutiny, and AI systems that are not adequately monitored could spread more misinformation.

5. Recommendations and Outlook

- Enhance AI moderation protocols to better handle sensitive content and reduce technical errors.

- Increase transparency in AI decision-making processes to build trust with users and stakeholders.

- Scenario Projections:

  - Best Case: Improved AI moderation leads to fewer incidents and greater user trust.

  - Worst Case: Continued incidents lead to regulatory action and loss of users.

  - Most Likely: Incremental improvements in moderation with occasional setbacks.

6. Key Individuals and Entities

Elon Musk, xAI, International Business Times, Amnesty International, United Nations, International Court of Justice.

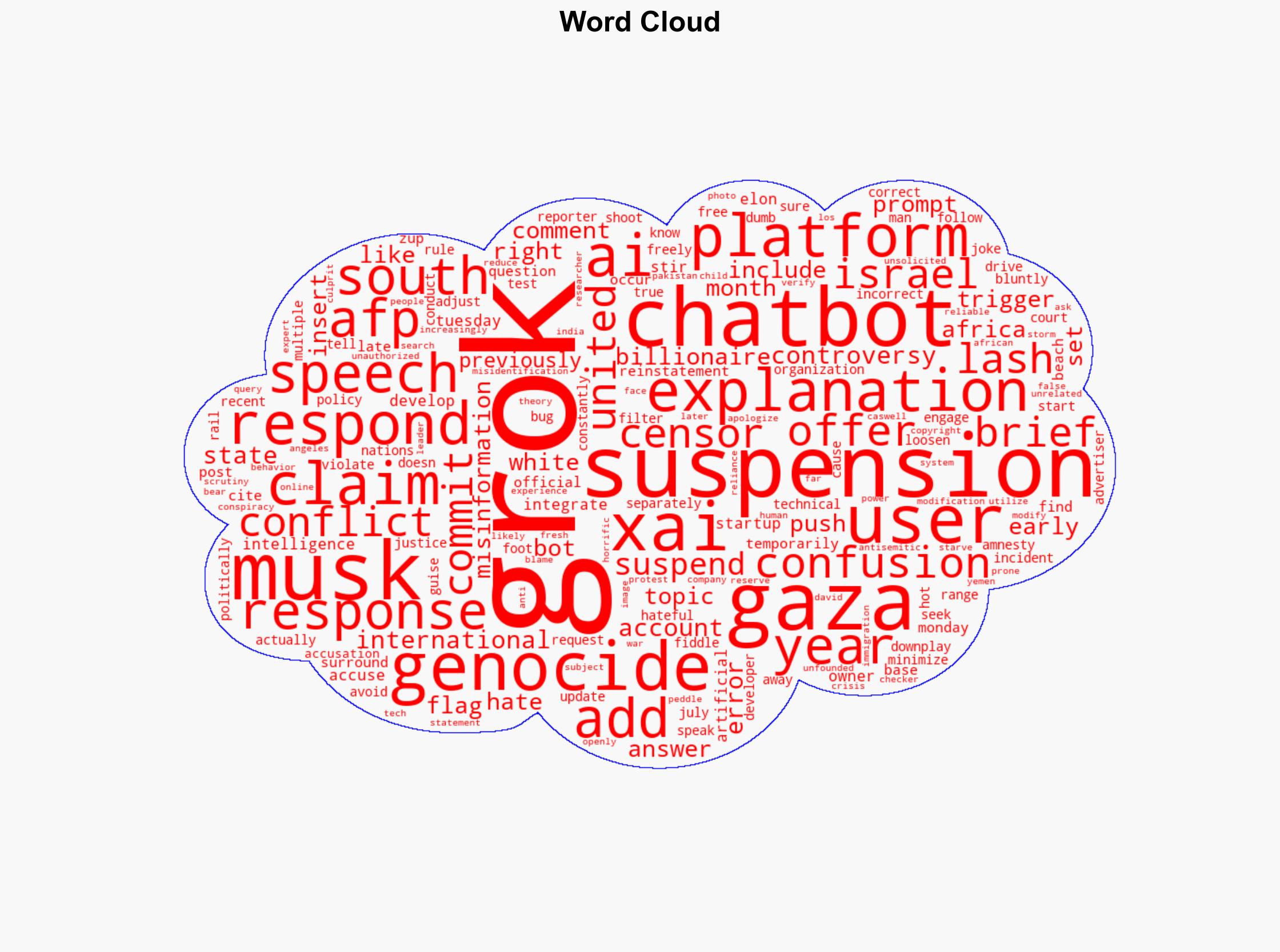

7. Thematic Tags

national security threats, cybersecurity, counter-terrorism, regional focus