ChatGPT Could Add A Major Privacy Feature Starting With Temporary Chats – BGR

Published on: 2025-08-19

Intelligence Report: ChatGPT Could Add A Major Privacy Feature Starting With Temporary Chats – BGR

1. BLUF (Bottom Line Up Front)

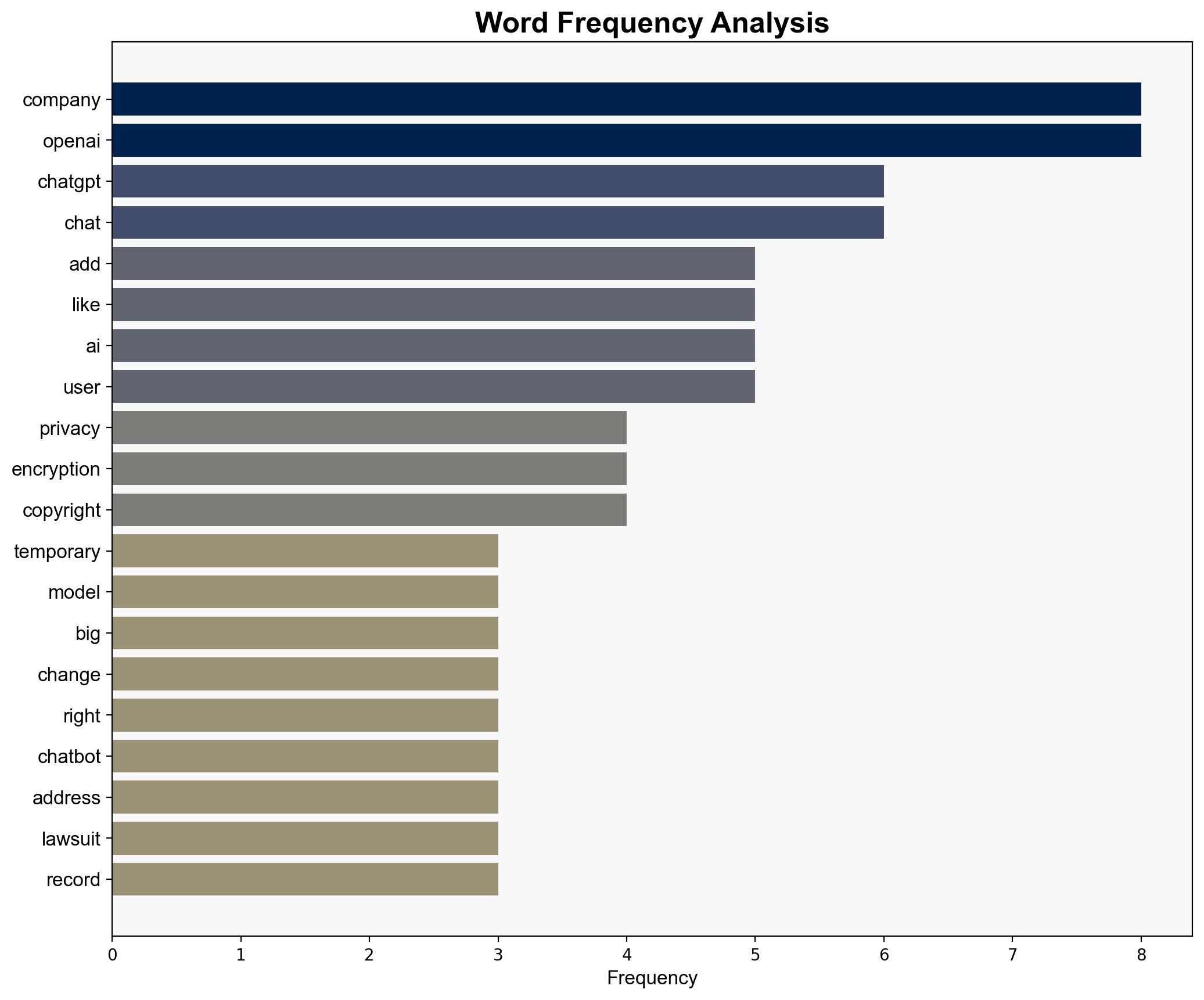

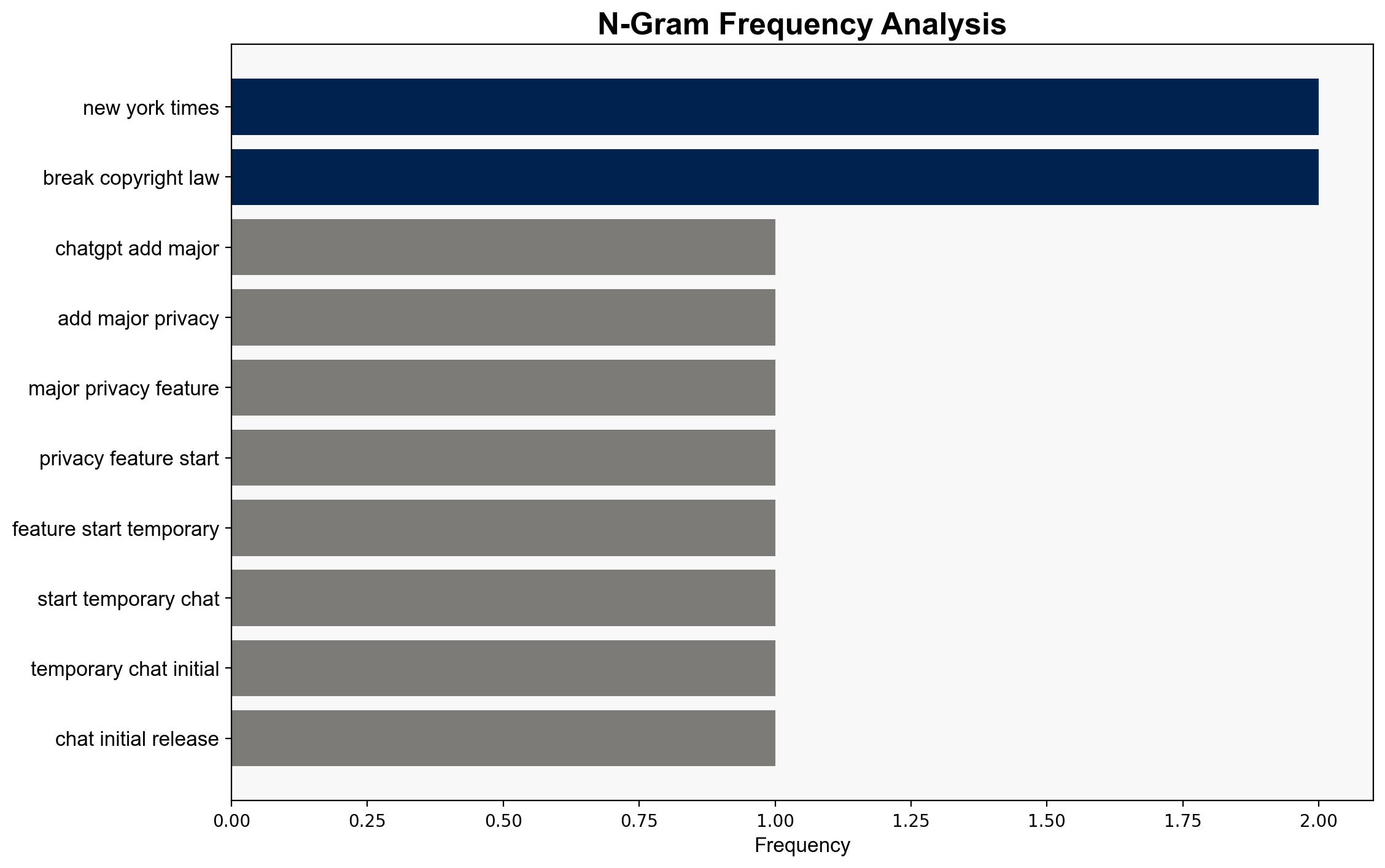

The best-supported hypothesis is that OpenAI is implementing temporary chats and encryption as a strategic response to growing legal pressure and user demand for privacy. This is consistent with recent legal challenges and market trends. Confidence in this hypothesis is moderate because OpenAI has made few direct statements on the matter. The recommended action is to monitor OpenAI’s announcements and legal developments closely, as these could influence broader AI industry standards.

2. Competing Hypotheses

1. **Hypothesis A**: OpenAI is adding temporary chats and encryption primarily to address legal challenges and user privacy concerns, aiming to mitigate risks associated with data retention and copyright infringement.

2. **Hypothesis B**: The introduction of temporary chats and encryption is a strategic move to differentiate ChatGPT in the competitive AI chatbot market, focusing on user trust and privacy as a unique selling proposition.

Using Analysis of Competing Hypotheses (ACH 2.0), Hypothesis A is better supported because it directly addresses the legal and reputational risks highlighted in the source text. Hypothesis B, while plausible, lacks direct evidence of market-driven motives.
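The comparison above can be illustrated with a small consistency matrix. The following is a minimal sketch of a generic ACH-style tally, not the specific ACH 2.0 procedure behind this report. The evidence labels paraphrase points made in the report, while the numeric ratings and the names `RATINGS`, `support_scores`, and `best_supported` are hypothetical and chosen only for illustration.

```python
from typing import Dict

# Rating scale: +1 = evidence is consistent with the hypothesis,
#                0 = neutral, -1 = inconsistent.
# NOTE: every rating below is an illustrative placeholder chosen for this
# sketch; the report does not publish its matrix or its scores.
RATINGS: Dict[str, Dict[str, int]] = {
    "Legal challenges over data retention and copyright": {"A": 1, "B": 0},
    "User demand for privacy": {"A": 1, "B": 1},
    "Limited direct statements from OpenAI": {"A": 0, "B": 0},
}


def support_scores(ratings: Dict[str, Dict[str, int]]) -> Dict[str, int]:
    """Sum each hypothesis's ratings across all evidence items."""
    scores: Dict[str, int] = {}
    for by_hypothesis in ratings.values():
        for hypothesis, rating in by_hypothesis.items():
            scores[hypothesis] = scores.get(hypothesis, 0) + rating
    return scores


def best_supported(ratings: Dict[str, Dict[str, int]]) -> str:
    """Return the hypothesis label with the highest summed rating."""
    scores = support_scores(ratings)
    return max(scores, key=scores.get)


if __name__ == "__main__":
    print(support_scores(RATINGS))
    print(best_supported(RATINGS))
```

With these placeholder ratings, Hypothesis A scores higher than Hypothesis B, which mirrors the report's conclusion. Different ratings would change the outcome, so the matrix is only as reliable as the judgments entered into it.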

3. Key Assumptions and Red Flags

- **Assumptions**: OpenAI’s primary motivation is assumed to be legal compliance and user privacy rather than market differentiation. It is also assumed that encryption and temporary chats will effectively address these concerns.

- **Red Flags**: OpenAI has not explicitly confirmed the specific features or their implementation timeline. There is also a risk of over-relying on encryption as a panacea for privacy issues.

- **Blind Spots**: The technical challenges and user adoption barriers facing new privacy features may be underestimated.

4. Implications and Strategic Risks

- **Economic**: Successful implementation could strengthen OpenAI’s market position and potentially increase revenue through heightened user trust.

- **Cyber**: Enhanced privacy features may set new industry standards, prompting competitors to adopt similar measures and reshaping the broader cybersecurity landscape.

- **Geopolitical**: Legal challenges in jurisdictions such as New York could influence global regulatory approaches to AI privacy.

- **Psychological**: User perception of AI privacy could shift, affecting adoption rates and trust in AI technologies.

5. Recommendations and Outlook

- Monitor OpenAI’s official communications for confirmation and details of privacy feature implementation.

- Engage with legal experts to assess potential impacts of ongoing lawsuits on AI industry practices.

- Scenario Projections:

  - Best Case: OpenAI successfully implements privacy features, leading to increased user trust and market share.

  - Worst Case: Technical or legal challenges delay implementation, resulting in reputational damage and legal penalties.

  - Most Likely: Gradual rollout of features with mixed initial reception, stabilizing as legal and technical issues are addressed.

6. Key Individuals and Entities

- OpenAI

- New York Times (as a legal challenger)

- Axios (as a reporting entity)

- Cloudflare (mentioned in the context of scraping issues)

7. Thematic Tags

cybersecurity, data privacy, AI ethics, legal compliance, market competition