Can people tell a real voice from an AI-generated one? We put it to the test – CNA

Published on: 2025-08-20

Intelligence Report: Can people tell a real voice from an AI-generated one? We put it to the test – CNA

1. BLUF (Bottom Line Up Front)

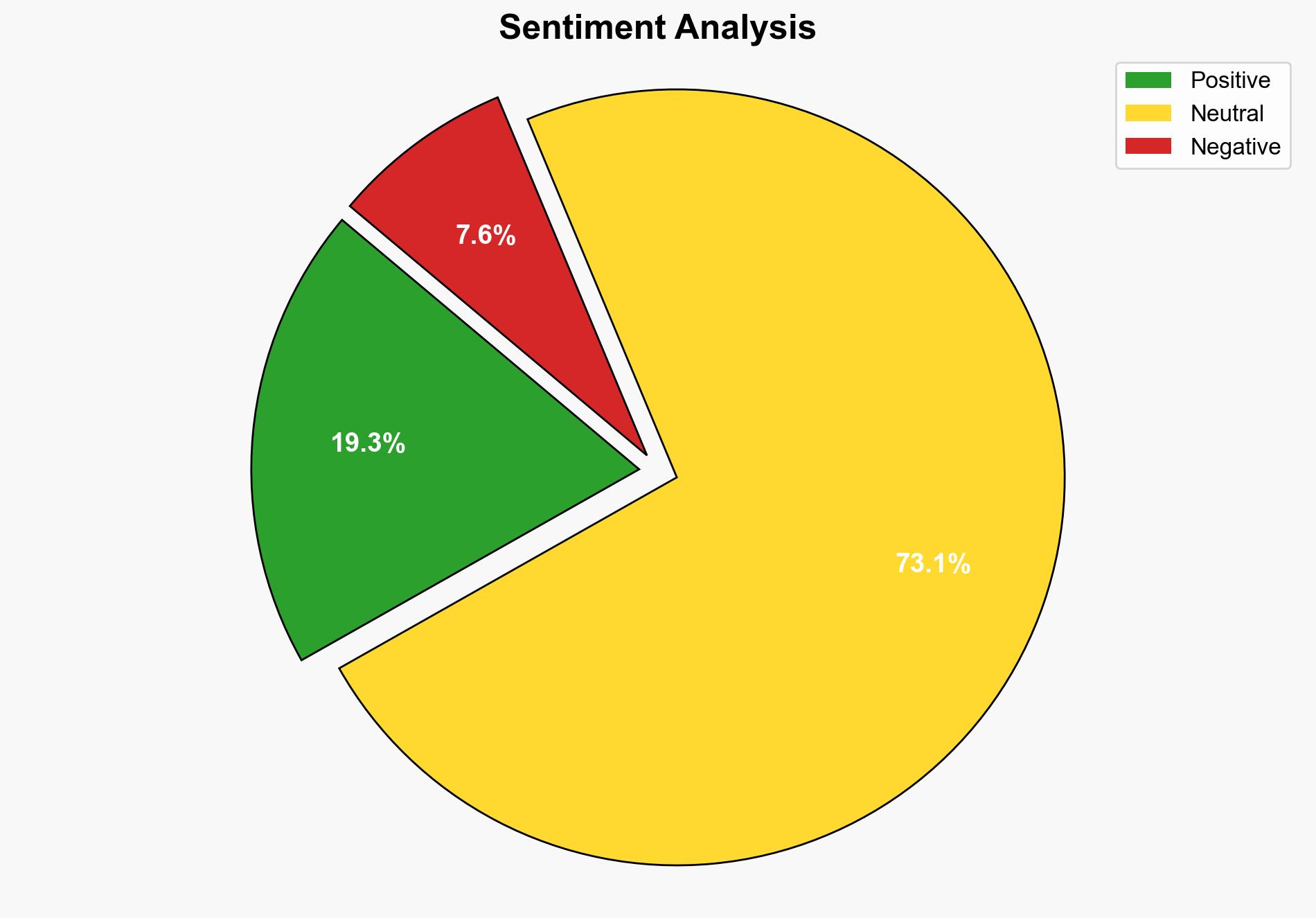

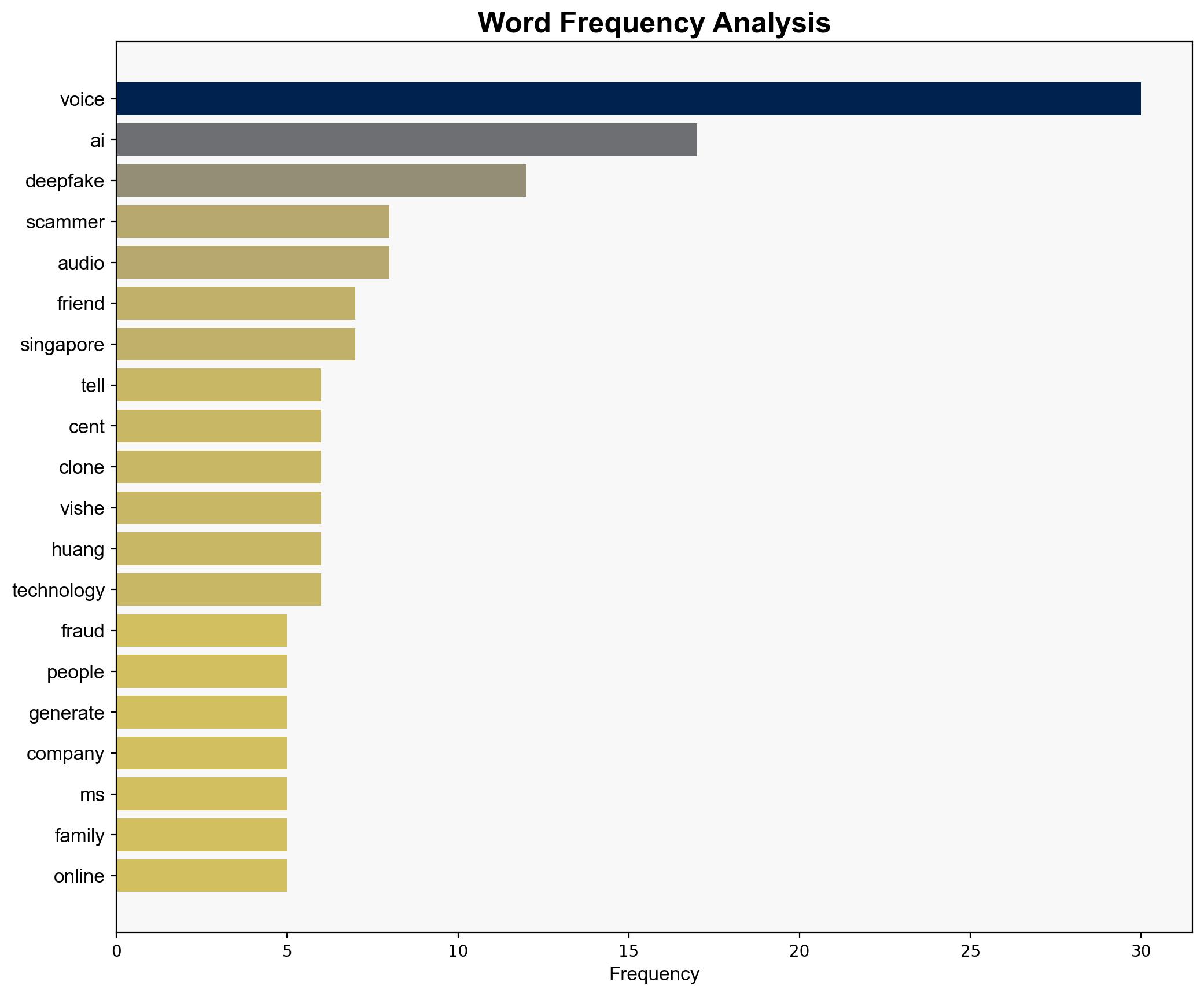

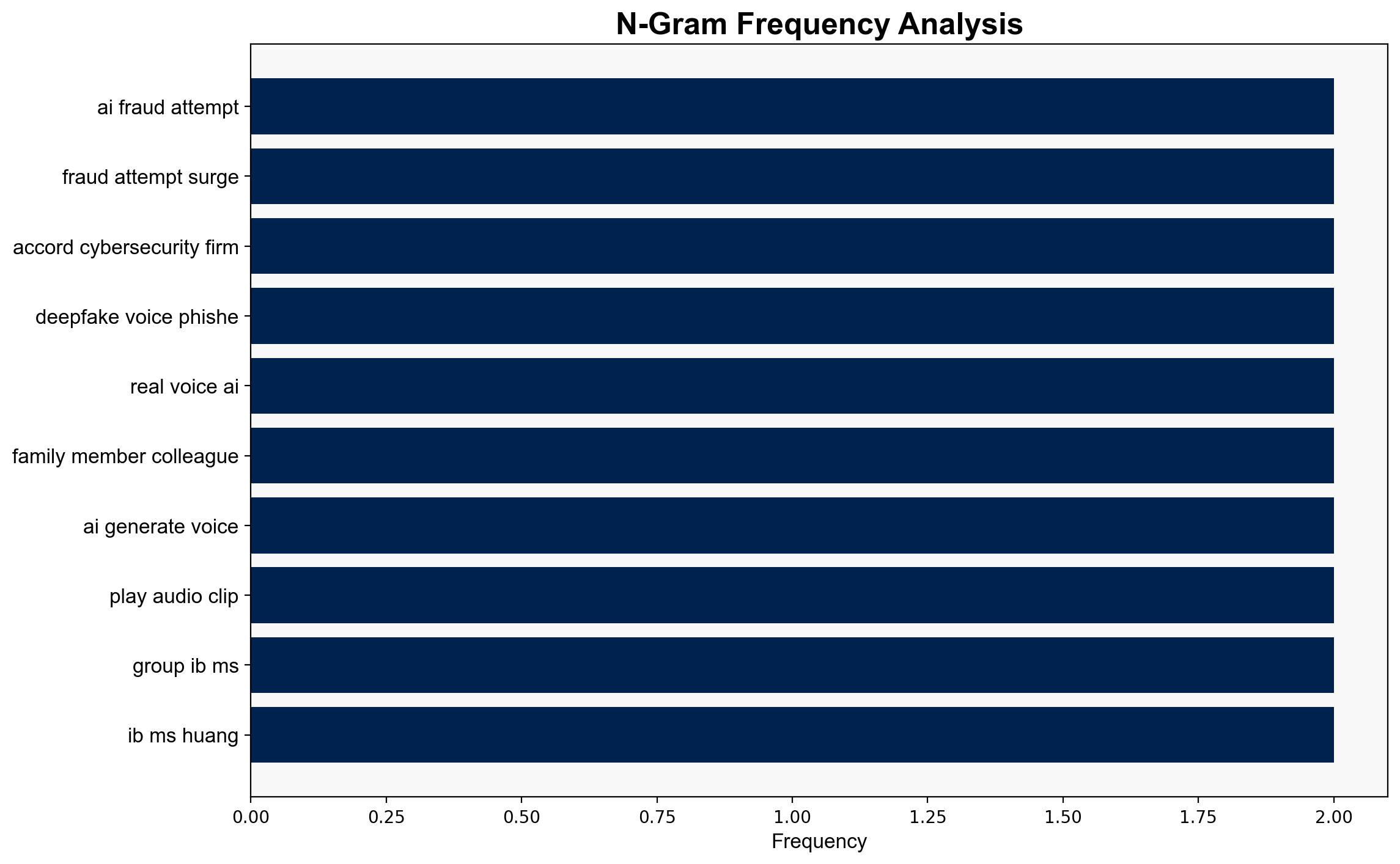

The strategic judgment, held with moderate-to-high confidence, is that increasingly sophisticated AI-generated voices pose a significant cybersecurity threat. Of the two hypotheses considered, the better supported is that AI-generated voices are becoming indistinguishable from real voices, leading to increased fraud. Recommended actions are to raise public awareness and to develop more advanced detection tools.

2. Competing Hypotheses

1. AI-generated voices are becoming indistinguishable from real voices, leading to a surge in fraud attempts.

2. Despite advancements, AI-generated voices remain detectable by most people, limiting their effectiveness in fraud.

Under Analysis of Competing Hypotheses (ACH), the first hypothesis is better supported: fraud attempts have increased, and test participants had difficulty distinguishing AI-generated voices from real ones.
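The ACH comparison above can be sketched as a small consistency matrix. This is a minimal illustration, not the report's actual worksheet: the two evidence items are paraphrased from the paragraph above, while the ratings ("C" for consistent, "I" for inconsistent, "N" for neutral) and the function names are assumptions made for this example. In ACH, the favoured hypothesis is the one with the fewest inconsistent pieces of evidence.

```python
# Hypothetical ACH matrix. Ratings are illustrative assumptions, not report data.
# "C" = consistent, "I" = inconsistent, "N" = neutral.
HYPOTHESES = [
    "H1: AI voices indistinguishable, fraud surging",
    "H2: AI voices still detectable, fraud limited",
]

# One row per evidence item; one rating per hypothesis, in HYPOTHESES order.
MATRIX = {
    "Reported increase in fraud attempts": ["C", "I"],
    "Participants struggled to tell AI voices from real ones": ["C", "I"],
}


def inconsistency_counts(matrix, n_hypotheses):
    """Count, for each hypothesis, the evidence items rated inconsistent."""
    counts = [0] * n_hypotheses
    for ratings in matrix.values():
        for index, rating in enumerate(ratings):
            if rating == "I":
                counts[index] += 1
    return counts


def best_supported(matrix, hypotheses):
    """Return the hypothesis with the fewest inconsistencies (first on ties)."""
    counts = inconsistency_counts(matrix, len(hypotheses))
    return hypotheses[counts.index(min(counts))]


if __name__ == "__main__":
    print(best_supported(MATRIX, HYPOTHESES))
```

With only two evidence items the outcome is sensitive to how each item is rated, which is one reason the small sample behind the evidence matters.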

3. Key Assumptions and Red Flags

– Assumption: People have a natural inclination to trust familiar voices, making them vulnerable to AI-generated voice scams.

– Red Flag: The study relies on a small sample, which may not reflect how well the broader population can detect AI-generated voices.

– Blind Spot: Participant selection may be biased, since participants may differ in their prior exposure to AI technologies.

4. Implications and Strategic Risks

The rise of AI-generated voice technology could lead to an increase in sophisticated phishing and vishing attacks, posing economic risks through financial fraud. Psychologically, it may erode trust in communication channels. Geopolitically, it could be exploited for misinformation campaigns, affecting national security.

5. Recommendations and Outlook

- Enhance public education on the risks of AI-generated voices and how to identify them.

- Invest in developing and deploying AI detection tools for businesses and individuals.

- Scenario Projections:

  - Best: Public awareness and detection tools significantly reduce fraud attempts.

  - Worst: AI-generated voices become indistinguishable from real ones, leading to widespread fraud and misinformation.

  - Most Likely: Fraud attempts continue to increase, with gradual improvement in detection and public awareness.

6. Key Individuals and Entities

– Yuan Huang (Group-IB)

– Terence Sim (National University of Singapore)

7. Thematic Tags

national security threats, cybersecurity, counter-terrorism, regional focus