Employees keep feeding AI tools secrets they can't take back – Help Net Security

Published on: 2025-09-09

Intelligence Report: Employees Keep Feeding AI Tools Secrets They Can’t Take Back – Help Net Security

1. BLUF (Bottom Line Up Front)

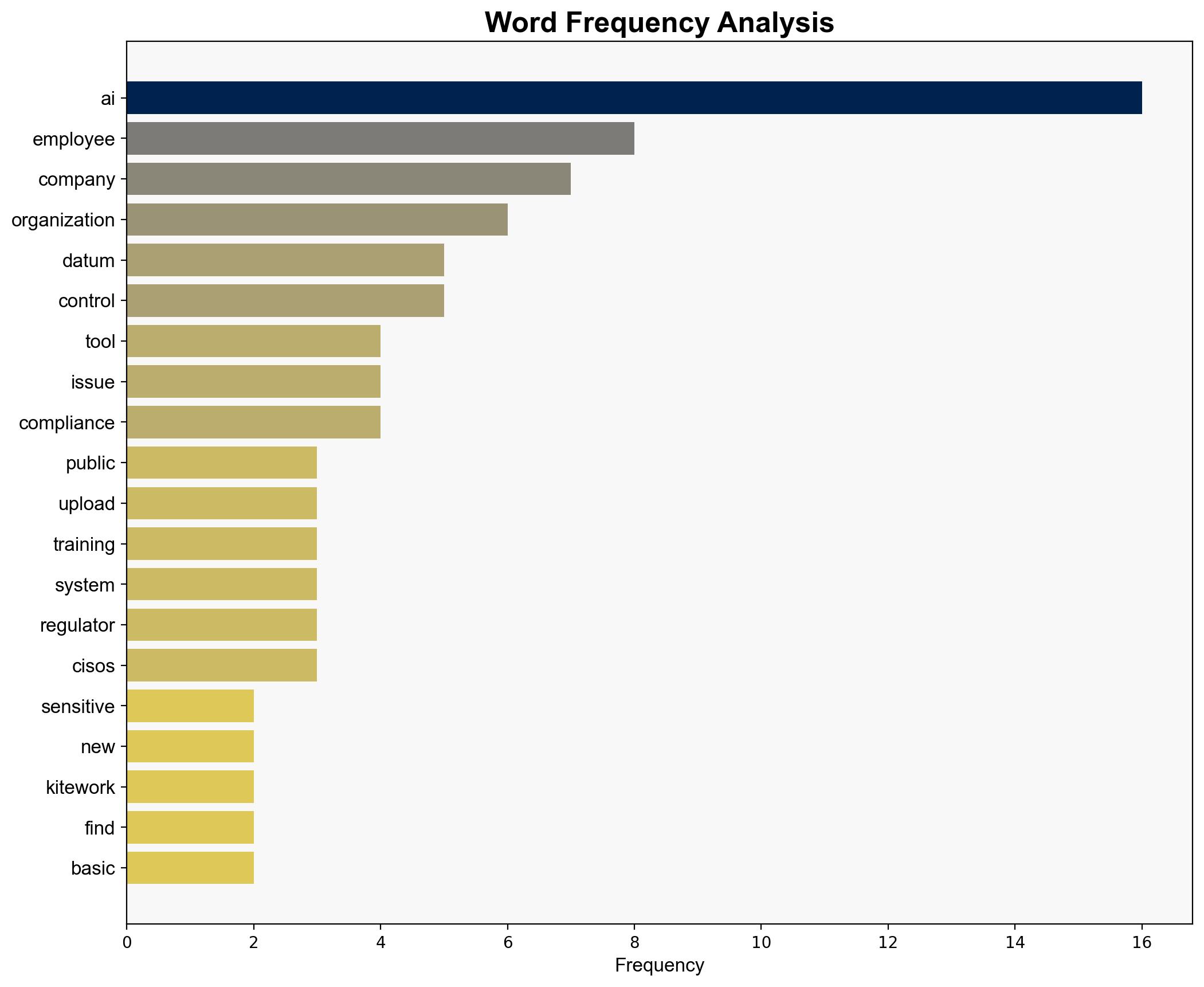

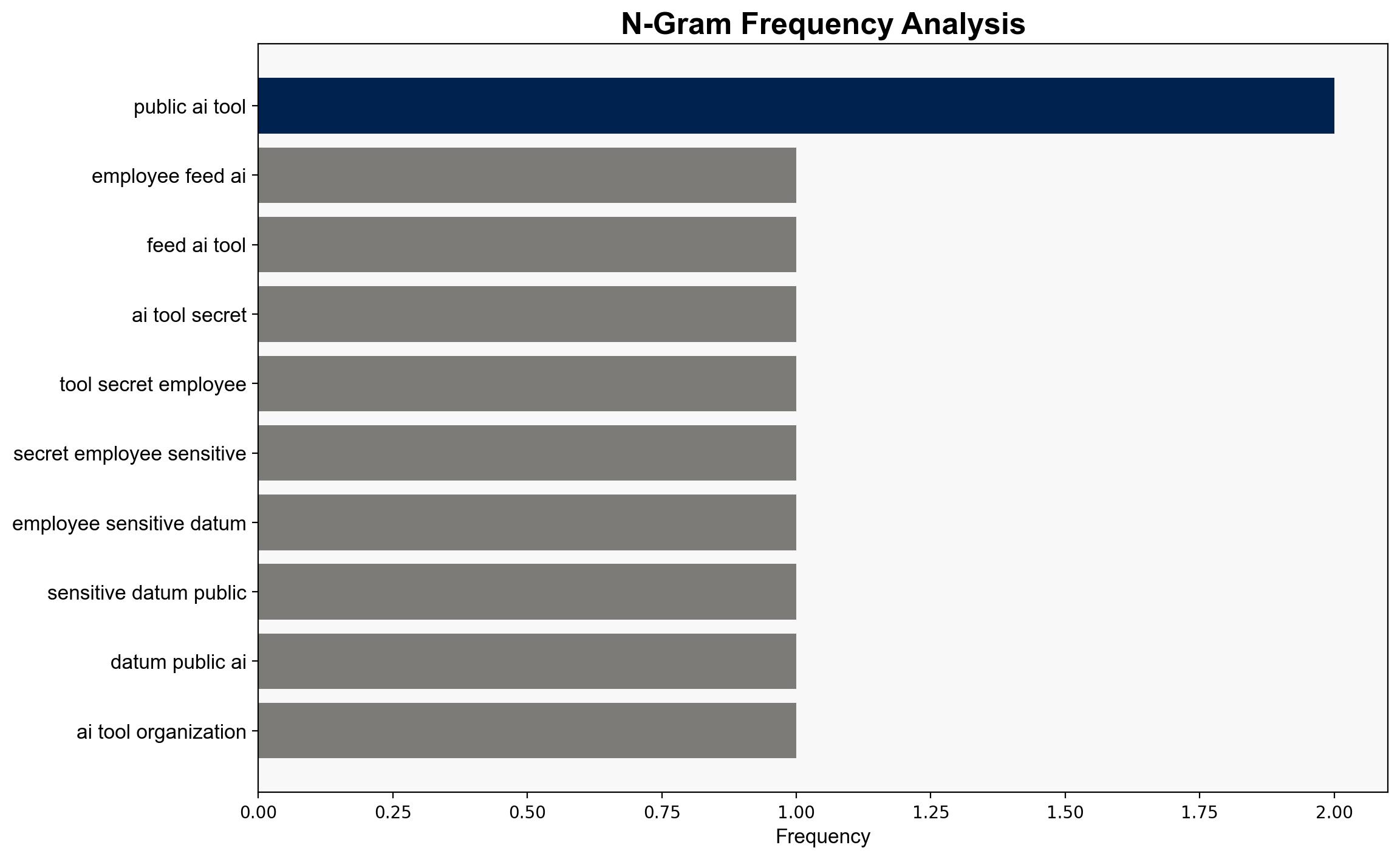

The best-supported hypothesis (Hypothesis 1 below) is that organizations lack adequate governance and technical controls to prevent employees from inadvertently sharing sensitive data with AI tools. The problem is compounded by gaps in executive awareness and regulatory compliance. Confidence in this assessment is moderate, because organizations may have internal measures that have not been disclosed. Recommended actions include strengthening technical safeguards and raising executive awareness of AI governance issues.

2. Competing Hypotheses

Hypothesis 1: Organizations lack sufficient technical and governance controls to prevent employees from sharing sensitive data with AI tools, leading to compliance risks and potential data breaches.

Hypothesis 2: Organizations have adequate controls, but employees bypass these measures due to insufficient training or awareness, resulting in inadvertent data exposure.

3. Key Assumptions and Red Flags

Assumptions:

- Hypothesis 1 assumes a systemic lack of technical controls and governance.

- Hypothesis 2 assumes that existing controls are adequate but not effectively communicated or enforced.

Red Flags:

- Discrepancies between executive perceptions and actual governance capabilities.

- Lack of detailed evidence on the effectiveness of current training programs.

4. Implications and Strategic Risks

The primary risk is regulatory non-compliance, which could lead to significant fines and reputational damage. There is also a risk of sensitive data being accessed by unauthorized parties, potentially leading to competitive disadvantage or legal liabilities. The gap between executive perception and reality could exacerbate these risks if not addressed.

5. Recommendations and Outlook

- Implement robust technical controls to prevent data uploads to AI tools, such as data loss prevention systems.

- Enhance employee training programs to emphasize the risks of sharing sensitive data with AI tools.

- Conduct regular audits to ensure compliance with relevant regulations and adjust governance policies accordingly.

- Scenario Projections:

  - Best Case: Organizations implement effective controls and training, reducing data exposure incidents.

  - Worst Case: Continued data leaks lead to severe regulatory penalties and loss of customer trust.

  - Most Likely: Incremental improvements in controls and training reduce but do not eliminate data exposure risks.
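
The first recommendation above, a data loss prevention control in front of AI tools, can be illustrated with a minimal sketch. It is not drawn from the report: the pattern set, the function names `scan_prompt` and `allow_upload`, and the block-on-any-finding policy are all assumptions chosen for illustration, and a production DLP system would cover far more data types and contexts.

```python
import re

# Hypothetical detection patterns, for illustration only.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(c) for c in number if c.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return len(digits) >= 13 and total % 10 == 0


def scan_prompt(text: str) -> list[tuple[str, str]]:
    """Return (label, matched_text) pairs for anything that looks sensitive."""
    findings = []
    for label, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            value = match.group().strip()
            # Reduce false positives: keep card-like numbers only if Luhn-valid.
            if label == "card_number" and not luhn_valid(value):
                continue
            findings.append((label, value))
    return findings


def allow_upload(text: str) -> bool:
    """Assumed policy: block the upload if any finding is present."""
    return not scan_prompt(text)
```

A check like this would sit between the employee and the AI tool, for example in a browser extension or an outbound proxy. Pattern matching alone misses unstructured secrets such as source code or contract text, which is consistent with the "Most Likely" projection that controls reduce but do not eliminate exposure.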

6. Key Individuals and Entities

Patrick Spencer, VP Corporate Marketing Research at Kiteworks, is the key individual mentioned in the report, and Kiteworks is the key entity in this analysis.

7. Thematic Tags

national security threats, cybersecurity, regulatory compliance, data governance