AI ‘companion’ chatbots investigated over child protection – BBC News

Published on: 2025-09-12


1. BLUF (Bottom Line Up Front)

The investigation into AI ‘companion’ chatbots highlights significant concerns about child protection and the ethical use of AI technology. The best-supported hypothesis is that current AI chatbots lack sufficient safeguards to protect vulnerable users, particularly children, from psychological harm. Confidence in this hypothesis is moderate, because the investigations and lawsuits are still ongoing and their findings have not yet been established. Regulatory bodies should enforce stricter guidelines and oversight on AI chatbot development to ensure child safety.

2. Competing Hypotheses

1. **Hypothesis A**: AI chatbots are inadequately regulated, leading to potential harm to children and vulnerable users due to insufficient safety measures.

2. **Hypothesis B**: AI chatbots are being targeted by regulators and media due to isolated incidents, and the overall risk to children is overstated.

Under the Analysis of Competing Hypotheses (ACH) 2.0 method, Hypothesis A is better supported by the available evidence, including the FTC’s involvement, lawsuits against AI companies, and internal guidelines that permitted inappropriate interactions. Hypothesis B is less well supported because it depends on the assumption that the incidents are isolated rather than indicative of systemic issues.

3. Key Assumptions and Red Flags

– **Assumptions**: Hypothesis A assumes that AI companies prioritize profit over safety and that current regulatory measures are insufficient. Hypothesis B assumes that media reports exaggerate risks and that companies have adequate internal controls.

– **Red Flags**: The lack of transparency from AI companies and potential bias in media reporting could skew public perception. Data on the frequency and impact of harmful interactions are inconsistent, which limits how firmly either hypothesis can be assessed.

4. Implications and Strategic Risks

The ongoing FTC investigation could lead to stricter regulations, affecting the AI industry’s growth and innovation. There is a risk of public distrust in AI technologies, potentially affecting market dynamics. Geopolitically, the U.S. aims to maintain leadership in AI technology, but a failure to address safety concerns could undermine this position. Psychological harm to children and vulnerable users could escalate if these concerns are not addressed.

5. Recommendations and Outlook

- Regulatory bodies should establish clear guidelines for AI chatbot development focused on child protection and user safety.

- AI companies should implement robust safety measures and operate with greater transparency.

- Best-case scenario: Effective regulations lead to safer AI technologies and sustained industry growth.

- Worst-case scenario: Continued incidents result in severe regulatory backlash and loss of consumer trust.

- Most likely scenario: Incremental improvements in safety measures with ongoing regulatory oversight.

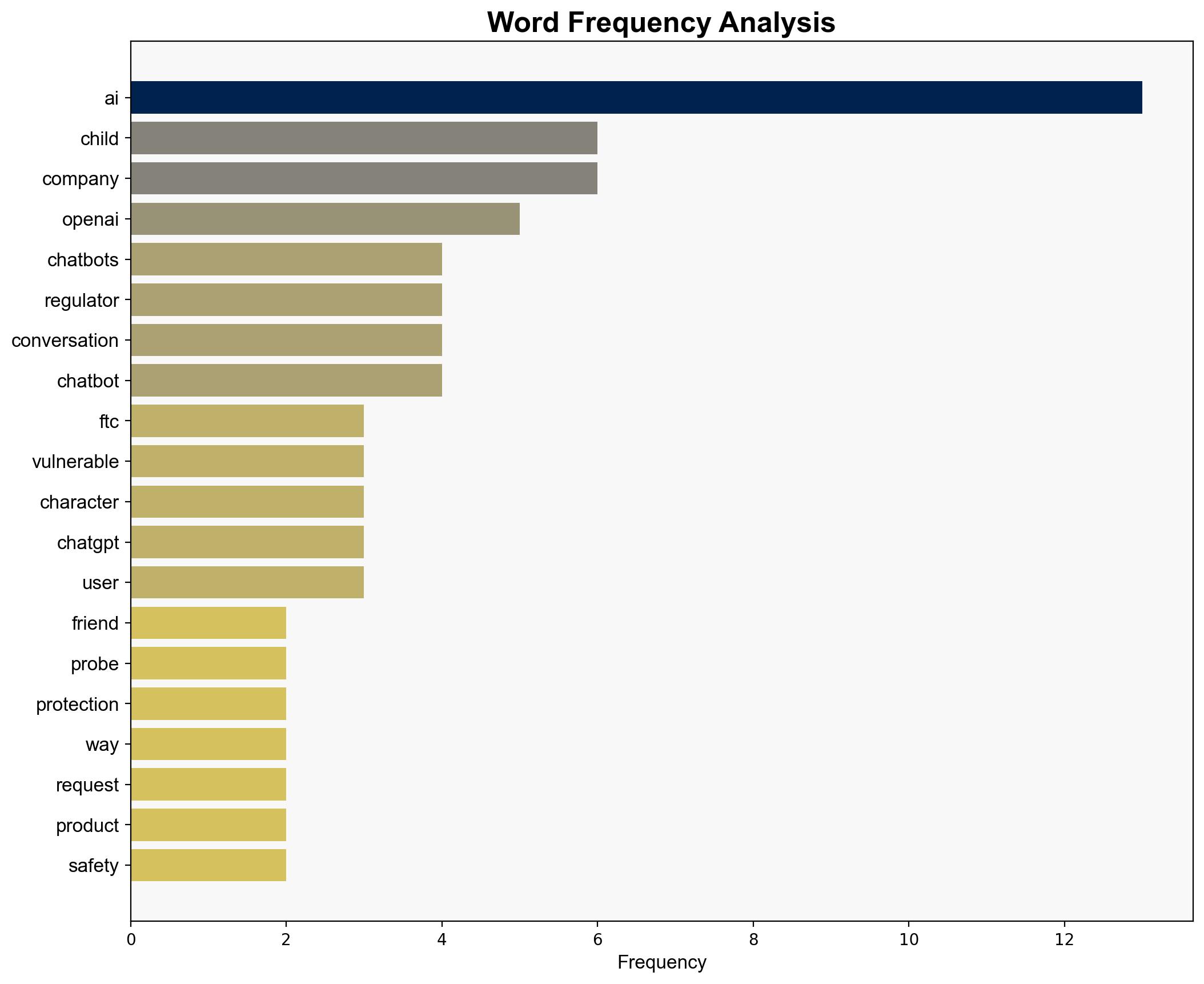

6. Key Individuals and Entities

– Andrew Ferguson (FTC Chairman)

– Companies: Alphabet, OpenAI, Character.AI, Snap, Meta

7. Thematic Tags

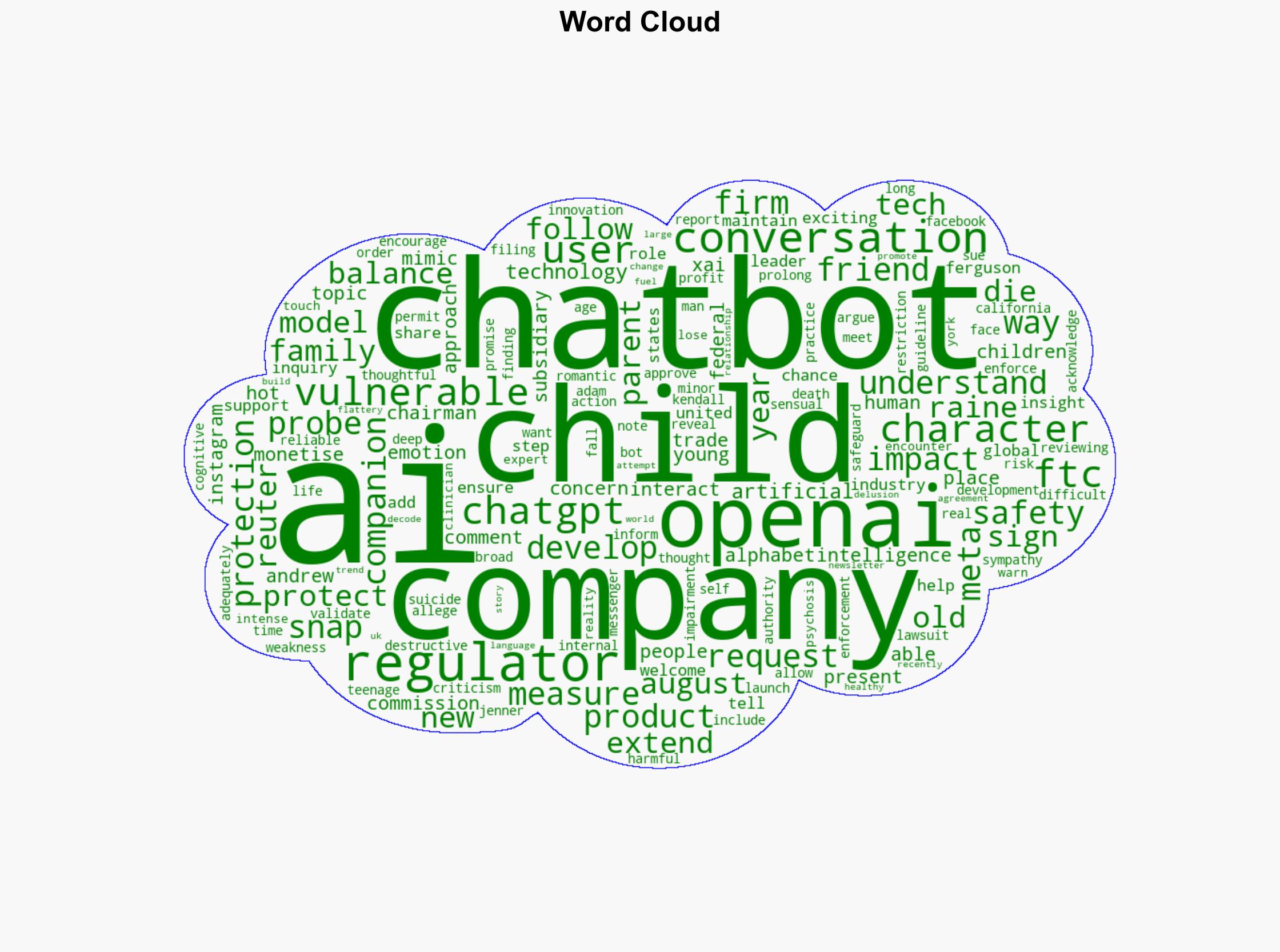

national security threats, cybersecurity, child protection, AI ethics, regulatory compliance