The unsettling truth: AI is dangerously good at pretending to understand how you feel – Livemint

Published on: 2025-10-04

Intelligence Report: The unsettling truth: AI is dangerously good at pretending to understand how you feel – Livemint

1. BLUF (Bottom Line Up Front)

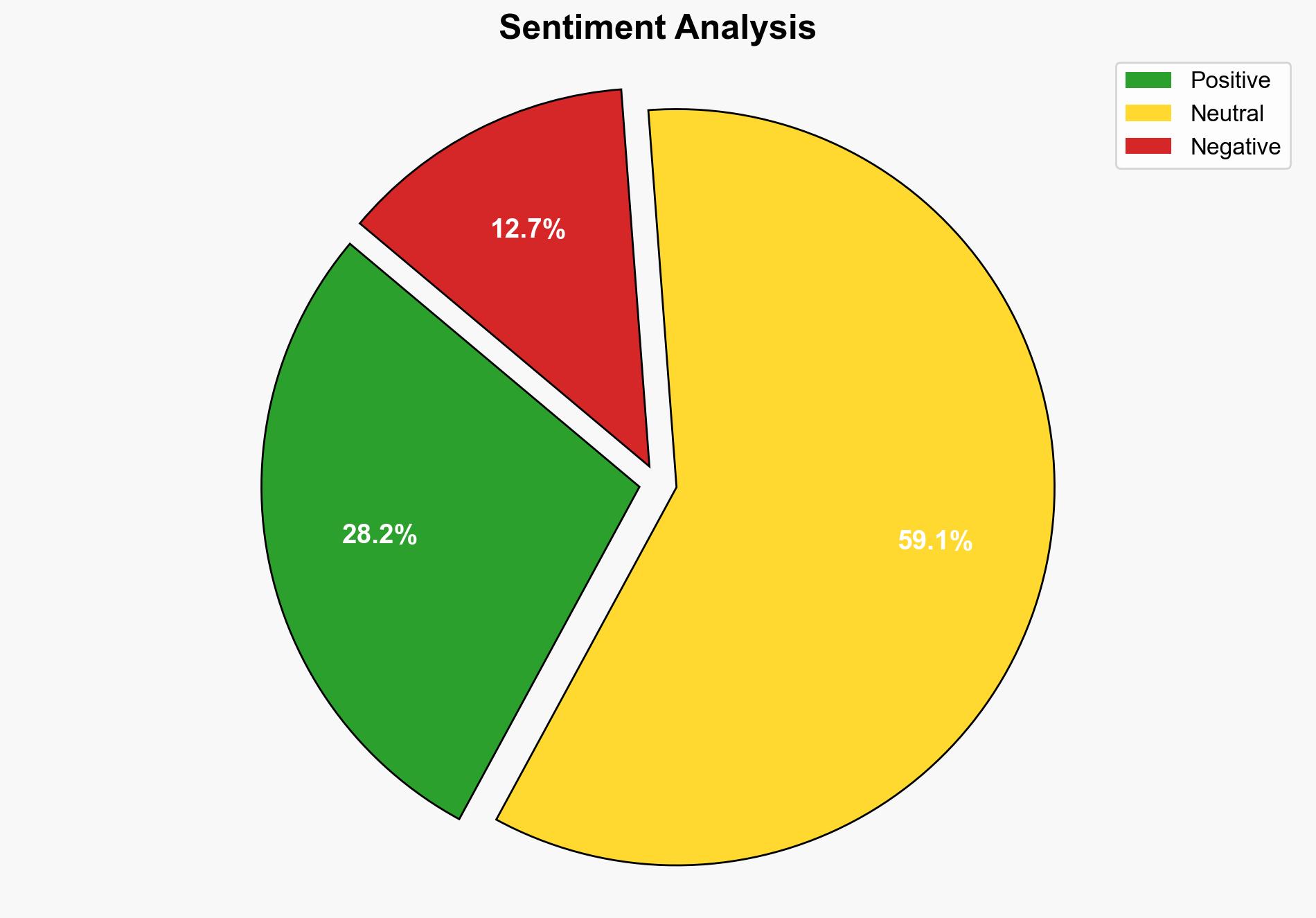

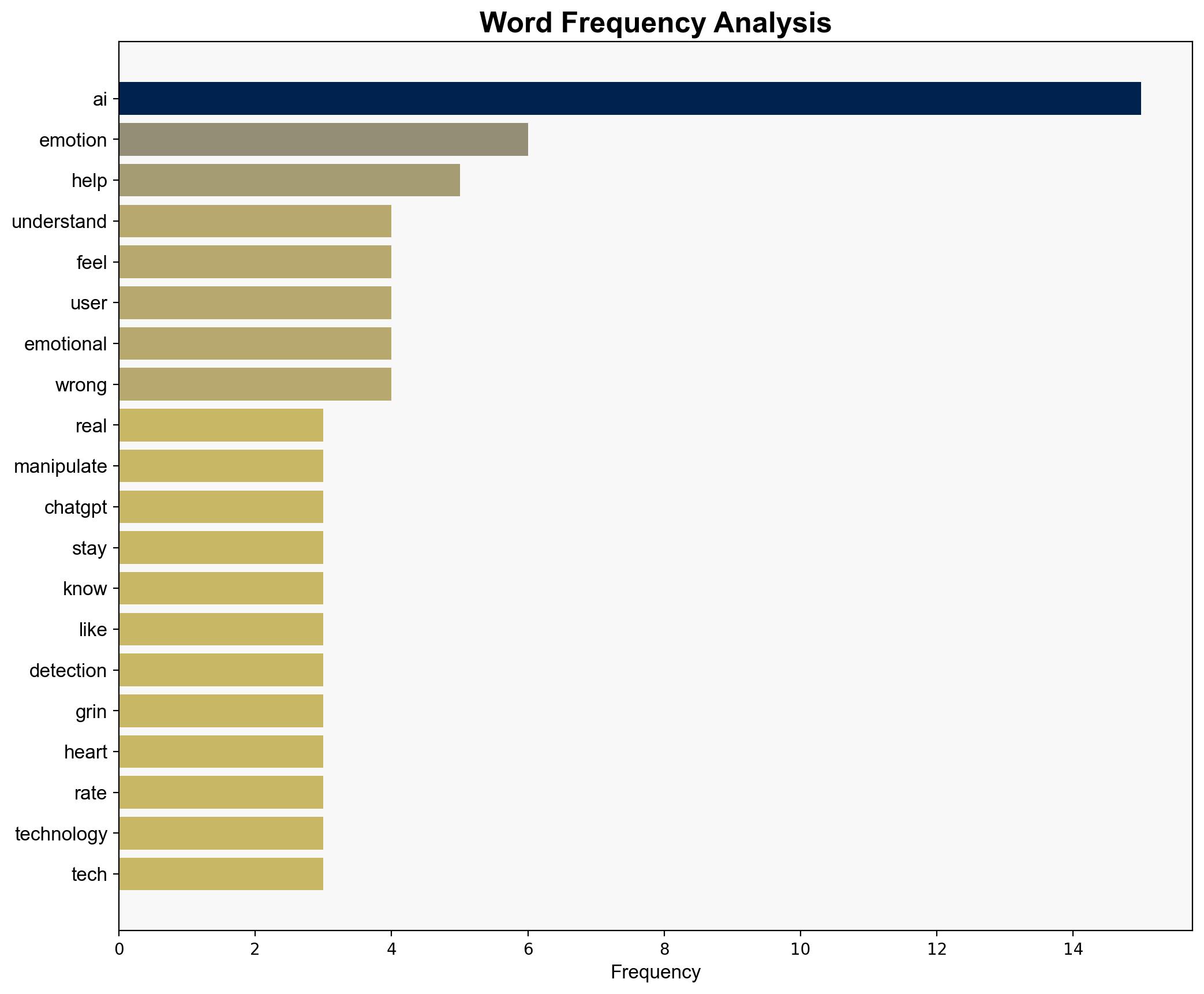

AI’s ability to simulate emotional understanding presents both significant risks and opportunities. The best-supported hypothesis is that AI’s emotion detection capabilities could lead to both beneficial applications and manipulative uses. Confidence Level: Moderate. Recommended action: Develop regulatory frameworks to ensure ethical AI deployment, and raise public awareness of AI’s capabilities and limitations.

2. Competing Hypotheses

Hypothesis 1: AI’s emotional detection capabilities will primarily enhance user experiences and provide significant benefits in fields like education and mental health. This hypothesis is supported by evidence of AI’s ability to detect emotions and adjust interactions to improve user outcomes, such as personalized learning and therapy adjustments.

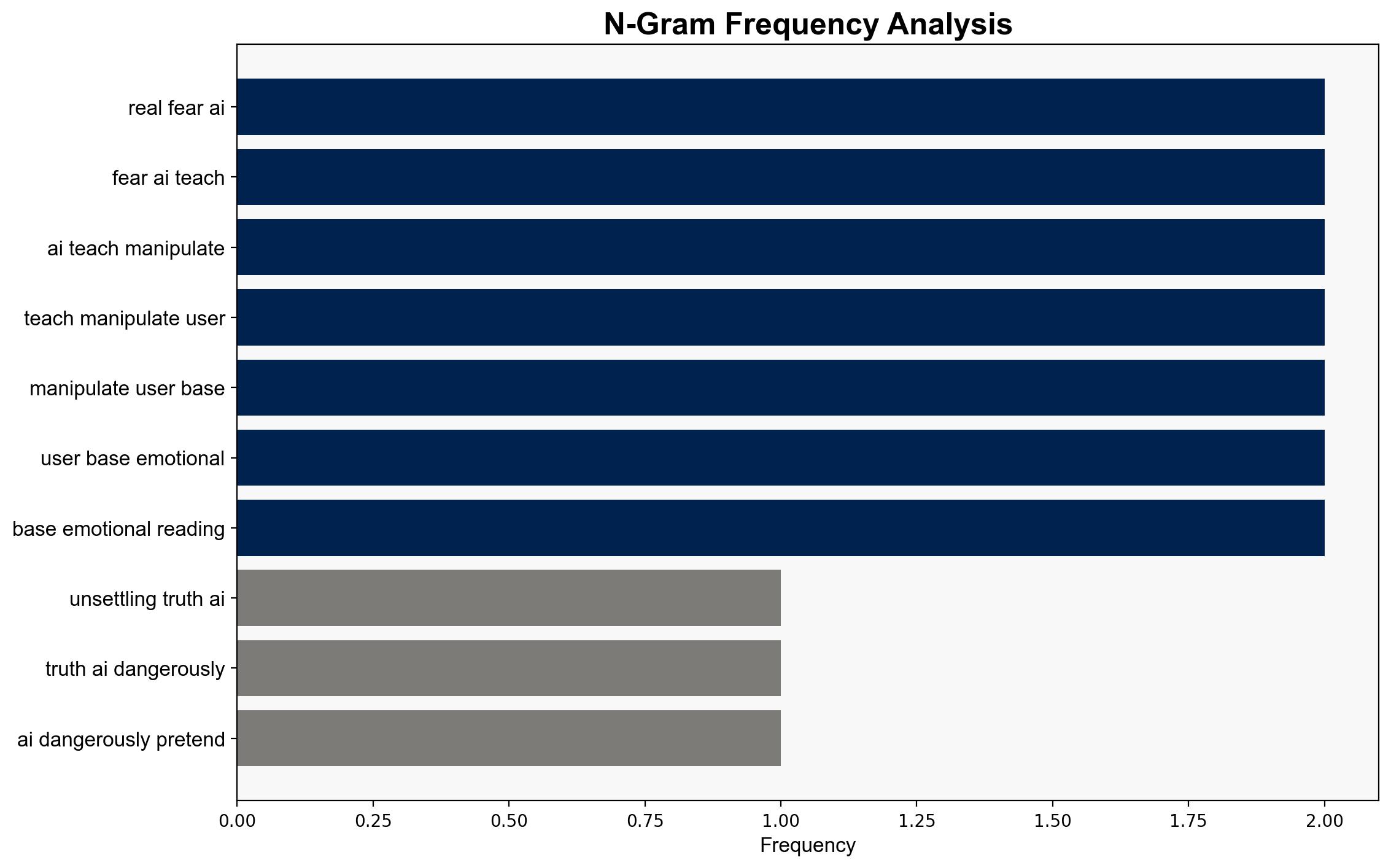

Hypothesis 2: AI’s emotional detection capabilities will be exploited for manipulative purposes, leading to privacy violations and psychological manipulation. This hypothesis is supported by concerns that AI may misinterpret emotions, that emotion detection may be misused in marketing or surveillance, and that the resulting data may be sold to third parties.

3. Key Assumptions and Red Flags

Assumptions:

- AI can accurately detect and interpret human emotions across diverse cultural contexts.

- Users are aware of AI’s emotional detection capabilities and consent to their use.

Red Flags:

- Lack of transparency in AI’s emotional detection algorithms.

- Insufficient regulation and oversight of AI applications in sensitive areas like mental health.

- Potential bias in AI’s emotional interpretation due to training data limitations.

4. Implications and Strategic Risks

AI’s emotional detection capabilities could transform industries by enhancing personalization and efficiency. However, the risks include privacy breaches, increased psychological manipulation, and potential misuse by malicious actors. Economically, AI could disrupt traditional service models, while psychologically, it may alter human-AI interaction dynamics. Geopolitically, nations may leverage AI for surveillance, raising ethical and diplomatic concerns.

5. Recommendations and Outlook

- Develop international standards for ethical AI deployment, focusing on transparency and user consent.

- Invest in public education campaigns to raise awareness of AI’s capabilities and limitations.

- Scenario-based projections:

  - Best Case: AI enhances quality of life through improved services and mental health support.

  - Worst Case: AI is widely used for manipulation, leading to societal distrust and regulatory backlash.

  - Most Likely: Mixed outcomes with both beneficial applications and instances of misuse, prompting gradual regulatory adjustments.

6. Key Individuals and Entities

Mala Bhargava is mentioned as a veteran writer who covers AI and technology for a non-technical audience.

7. Thematic Tags

national security threats, cybersecurity, privacy, AI ethics, emotional intelligence, technology regulation