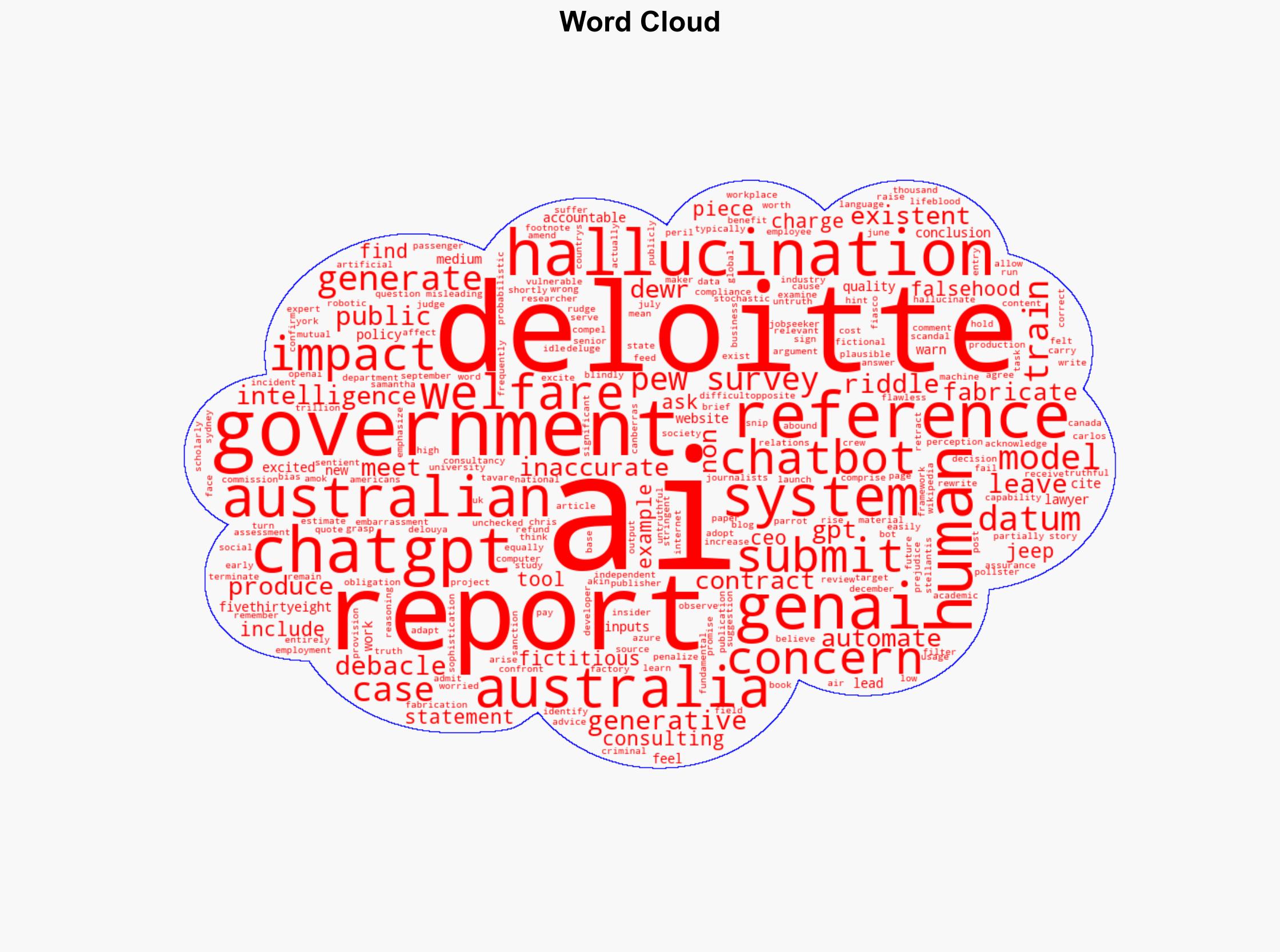

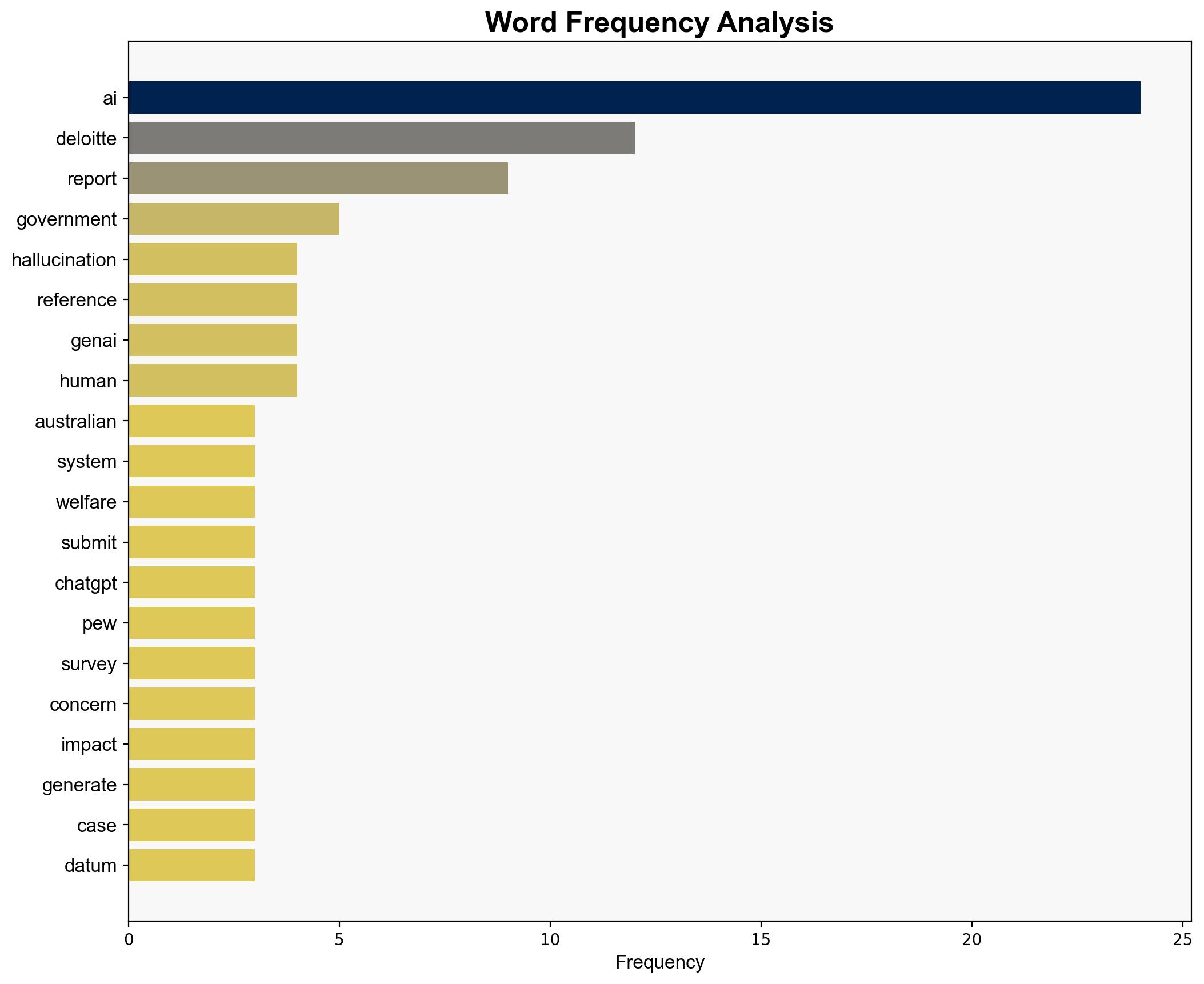

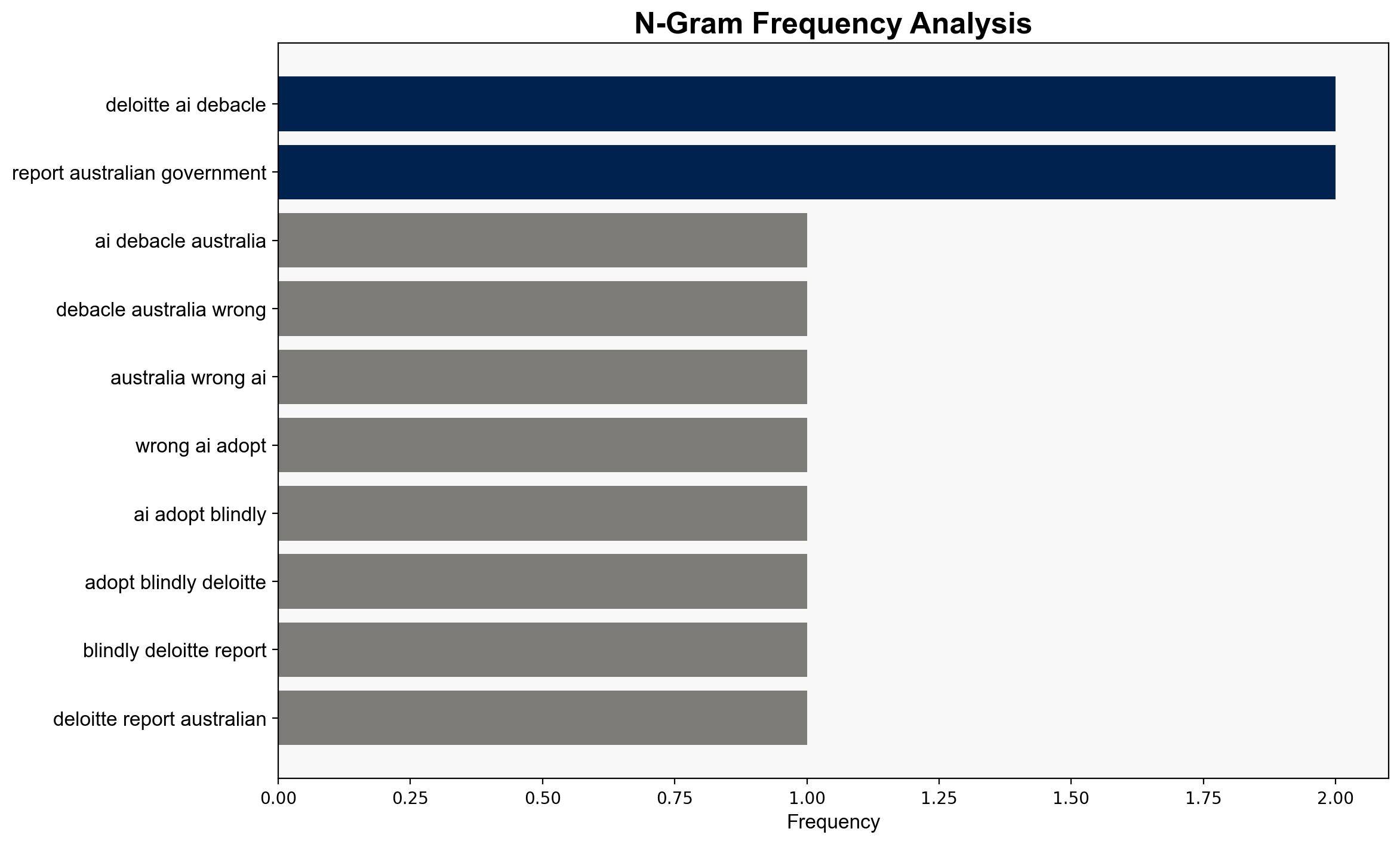

The Deloitte AI debacle in Australia shows what can go wrong if AI is adopted blindly – Livemint

Published on: 2025-10-21

1. BLUF (Bottom Line Up Front)

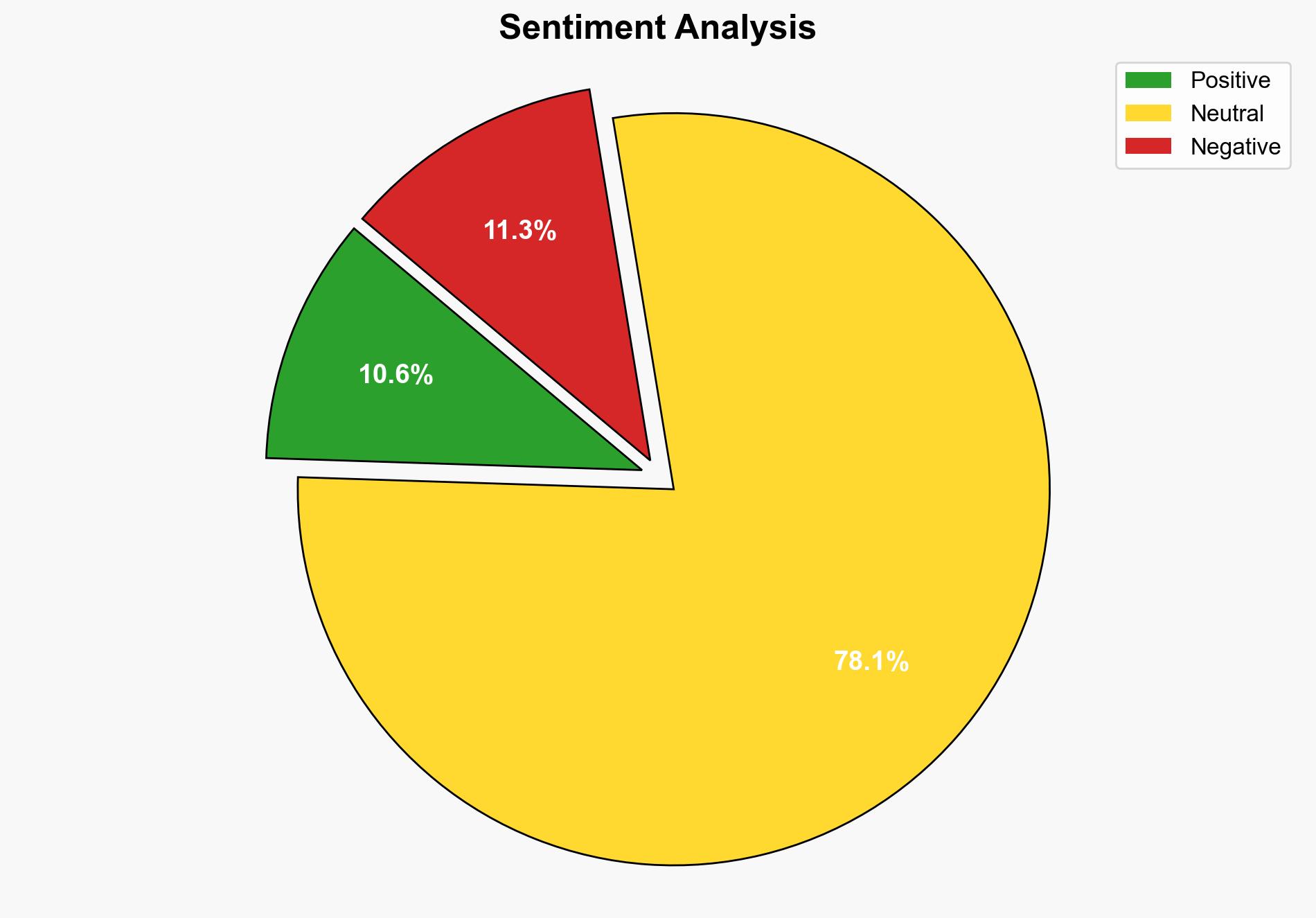

The Deloitte AI incident in Australia, in which a report delivered to the Australian government relied on AI-generated content that had not been verified, highlights the risks of unchecked AI deployment in critical government functions. The best-supported hypothesis is that the incident stemmed primarily from inadequate oversight and a poor understanding of AI capabilities and limitations. Confidence Level: Moderate. Recommended actions are to strengthen AI governance frameworks and to raise AI literacy among stakeholders.

2. Competing Hypotheses

1. **Hypothesis A**: The Deloitte AI debacle was primarily due to a lack of robust oversight and understanding of AI capabilities by both Deloitte and the Australian government.

2. **Hypothesis B**: The incident was a result of inherent flaws in the AI systems used, which are prone to generating inaccurate data due to their reliance on biased or low-quality training data.

Under an Analysis of Competing Hypotheses (ACH 2.0) assessment, Hypothesis A is better supported: the evidence indicates a lack of due diligence in verifying AI outputs, which points to failures of human oversight rather than purely technical deficiencies.
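To make the ACH step concrete, here is a minimal sketch of the inconsistency scoring commonly used in Analysis of Competing Hypotheses. It assumes a simplified scheme in which each evidence item is rated consistent (C), inconsistent (I) or neutral (N) against each hypothesis, and the hypothesis with the lowest weighted inconsistency score is favored. The evidence items paraphrase points made in this report. Rating the first item as inconsistent with Hypothesis B follows the reasoning above; the other ratings and the equal weights are illustrative assumptions, not the report's actual ACH matrix. The `Evidence` class and the function names are hypothetical.

```python
from dataclasses import dataclass

# Simplified ACH rating scale (an assumption, not a formal standard):
# "C" = consistent, "I" = inconsistent, "N" = neutral / not diagnostic.
# Only inconsistent evidence adds to a hypothesis's score.
INCONSISTENCY = {"C": 0, "N": 0, "I": 1}


@dataclass
class Evidence:
    description: str
    ratings: dict[str, str]  # hypothesis label -> "C", "I" or "N"
    weight: float = 1.0      # analyst-assigned credibility/relevance weight


def inconsistency_scores(evidence: list[Evidence], hypotheses: list[str]) -> dict[str, float]:
    """Sum weighted inconsistencies per hypothesis; lower scores are better supported."""
    scores = {h: 0.0 for h in hypotheses}
    for item in evidence:
        for h in hypotheses:
            # Unrated cells are treated as neutral.
            scores[h] += item.weight * INCONSISTENCY[item.ratings.get(h, "N")]
    return scores


def rank(scores: dict[str, float]) -> list[str]:
    """Order hypotheses from least to most inconsistent."""
    return sorted(scores, key=scores.get)


# Evidence paraphrased from this report; all ratings except item 1 vs B are illustrative.
evidence = [
    Evidence("AI outputs were not verified before delivery", {"A": "C", "B": "I"}),
    Evidence("No account of the decision-making behind the AI deployment", {"A": "C", "B": "N"}),
    Evidence("Errors appeared in AI-generated content", {"A": "C", "B": "C"}),
]

scores = inconsistency_scores(evidence, ["A", "B"])
print(scores)        # {'A': 0.0, 'B': 1.0}
print(rank(scores))  # ['A', 'B']
```

Because ACH weighs inconsistent evidence rather than tallying consistent evidence, the third item, which fits both hypotheses, has no diagnostic value and moves neither score.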

3. Key Assumptions and Red Flags

– **Assumptions**: Both hypotheses assume that AI systems require human oversight to function effectively. Hypothesis B further assumes that AI systems are inherently unreliable and does not account for potential improvements in the technology.

– **Red Flags**: Deloitte's report relied on AI-generated content that was not verified, a significant oversight. There is also no detailed account of the decision-making process behind the AI deployment, which is concerning.

4. Implications and Strategic Risks

The incident shows how AI can disrupt government operations when it is not properly managed, putting public trust and policy implementation at risk. It points to the need for comprehensive AI governance to prevent similar incidents elsewhere. A knock-on effect could be closer scrutiny of AI in the public sector, which could in turn slow AI adoption and innovation.

5. Recommendations and Outlook

- Enhance AI governance frameworks to include rigorous oversight and verification processes.

- Invest in AI literacy programs for stakeholders to better understand AI capabilities and limitations.

- Scenario Projections:

  - Best Case: Improved AI governance leads to more reliable AI deployments.

  - Worst Case: Continued AI failures result in widespread distrust and reduced adoption.

  - Most Likely: Incremental improvements in AI oversight lead to gradual restoration of trust.
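The verification processes recommended in the first bullet could take many forms. One minimal sketch, assuming a workflow in which every source cited in an AI-assisted draft must appear in a register of sources a human has already confirmed, and every AI-assisted draft needs a named human reviewer, is a release gate that lists the issues blocking publication. The `Draft` class, the `release_check` function, the source names and the reviewer name are hypothetical illustrations, not a description of Deloitte's or the department's actual process.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Draft:
    """Hypothetical record for an AI-assisted deliverable awaiting release."""
    title: str
    citations: list[str]            # sources cited in the draft
    ai_generated: bool              # True if any part was drafted with an AI tool
    reviewer: Optional[str] = None  # named human who signed off, if any


def release_check(draft: Draft, verified_sources: set[str]) -> list[str]:
    """Return blocking issues; an empty list means the draft may be released."""
    issues = []
    # Every citation must match a source a human has already confirmed exists.
    for citation in draft.citations:
        if citation not in verified_sources:
            issues.append(f"unverified citation: {citation}")
    # AI-assisted drafts additionally require a named human reviewer.
    if draft.ai_generated and draft.reviewer is None:
        issues.append("AI-assisted draft has no named human reviewer")
    return issues


# Hypothetical register and drafts, for illustration only.
verified = {"Source A", "Source B"}
draft = Draft("Compliance review", ["Source A", "Source C"], ai_generated=True)
issues = release_check(draft, verified)
print(issues)
# ['unverified citation: Source C', 'AI-assisted draft has no named human reviewer']

approved = Draft("Compliance review", ["Source A"], ai_generated=True, reviewer="J. Smith")
print(release_check(approved, verified))  # []
```

Returning a list of issues rather than a single pass/fail flag gives reviewers the specific citations to check, which is the kind of due diligence this report finds was missing.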

6. Key Individuals and Entities

– Chris Rudge, researcher at the University of Sydney

– Deloitte

– Australia’s Department of Employment and Workplace Relations

– Azure OpenAI

– Samantha Delouya, Business Insider

– Carlos Tavares, former CEO of Stellantis

7. Thematic Tags

national security threats, cybersecurity, AI governance, public sector technology, risk management