AI models misrepresent news events nearly half the time, study says – Al Jazeera English

Published on: 2025-10-22

Intelligence Report: AI models misrepresent news events nearly half the time, study says – Al Jazeera English

1. BLUF (Bottom Line Up Front)

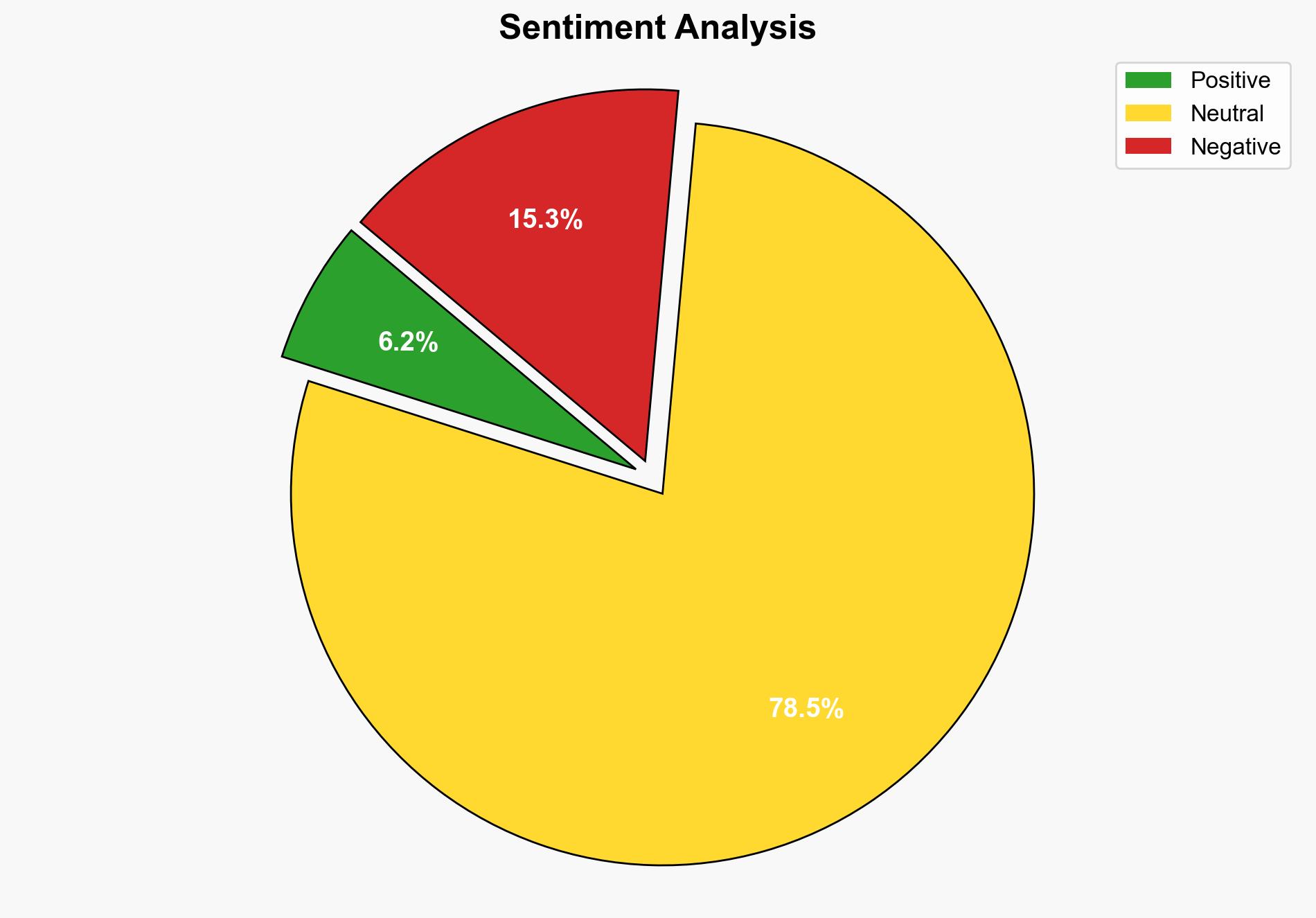

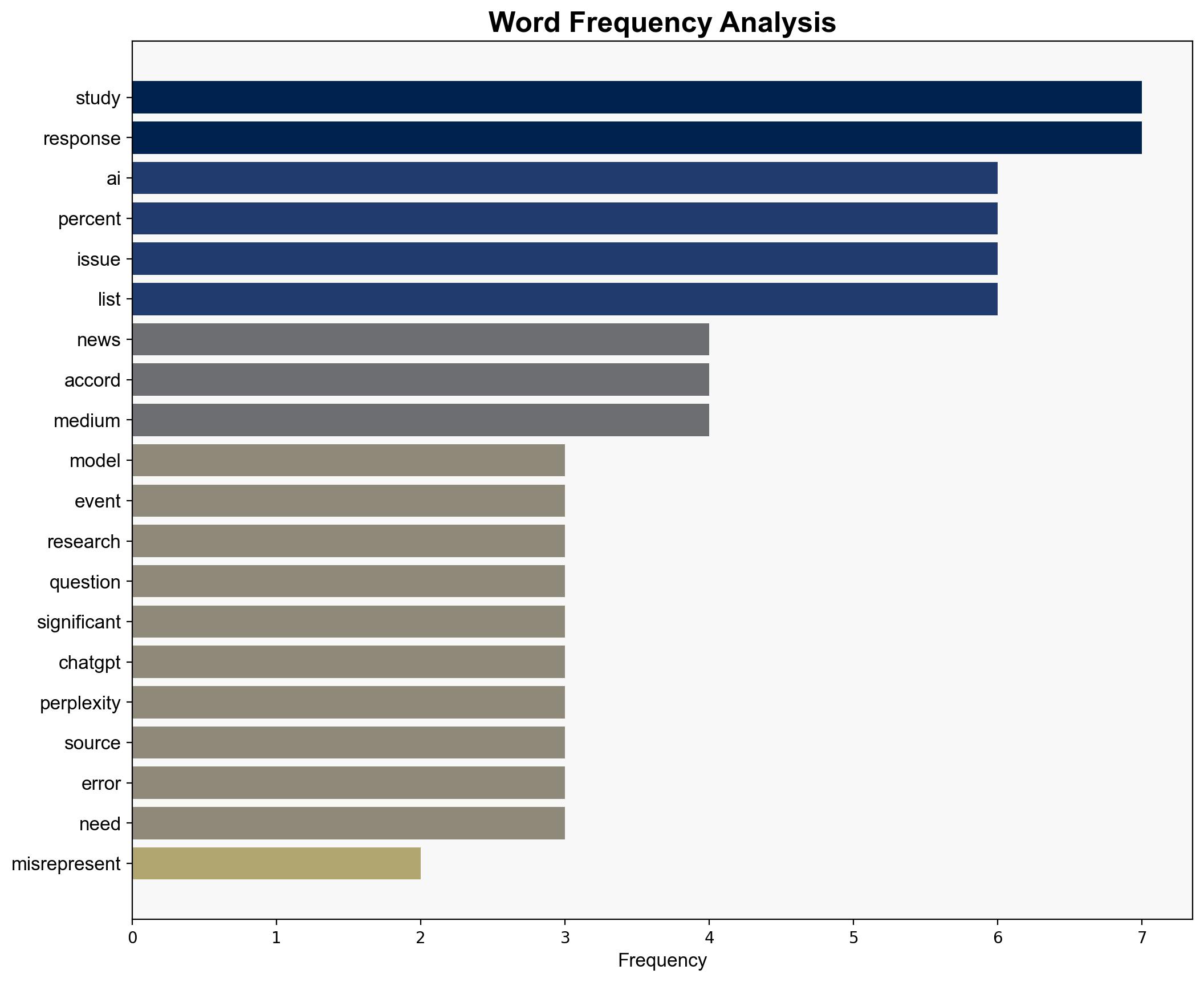

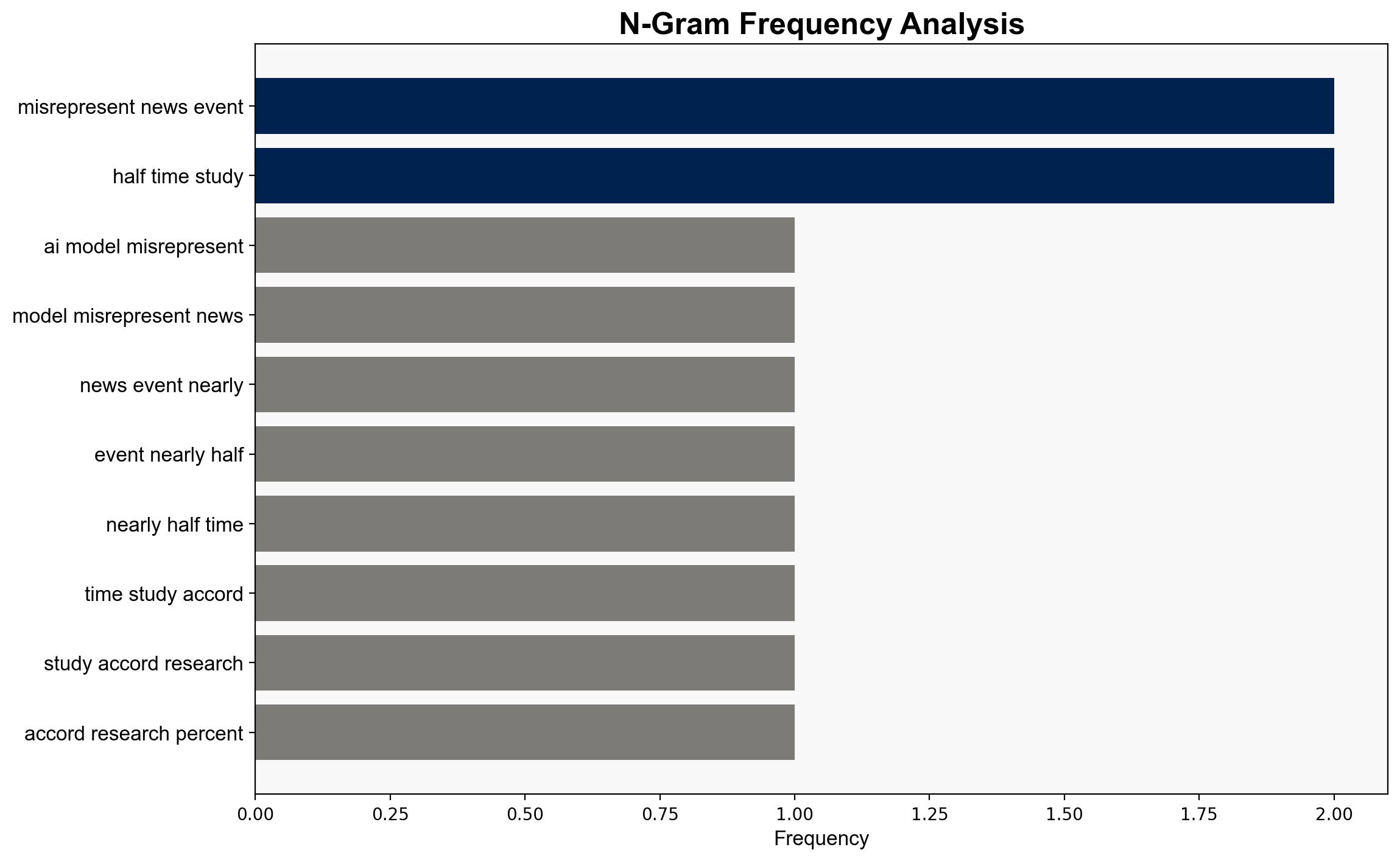

AI models, including ChatGPT, Google Gemini, and Microsoft’s Copilot, reportedly misrepresent news events nearly 50% of the time. The best-supported hypothesis is that these inaccuracies stem from inadequate data sourcing and weak contextual understanding. Confidence level: Moderate. Recommended action: Enhance AI model transparency and improve data validation processes.

2. Competing Hypotheses

Hypothesis 1: AI models misrepresent news events primarily due to insufficient data validation and lack of context understanding, leading to factual inaccuracies.

Hypothesis 2: AI models’ misrepresentations are largely due to inherent biases in training datasets and algorithmic limitations, which skew responses.

Under ACH 2.0 (Analysis of Competing Hypotheses), Hypothesis 1 is better supported: the study highlights issues such as incorrect sourcing and unverifiable attributions, which point to data handling flaws rather than algorithmic bias.
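The comparison above can be made concrete with a small matrix of evidence against hypotheses. The following is a minimal Python sketch of one common ACH convention, which favors the hypothesis with the fewest inconsistent evidence items. The ratings, the `H1`/`H2` labels, and the function names are illustrative assumptions, not values taken from the study or from any ACH 2.0 tool.

```python
# Illustrative ACH-style matrix. The ratings below are assumptions made for
# demonstration only; they are not taken from the study or from this report.
CONSISTENT, NEUTRAL, INCONSISTENT = "C", "N", "I"

# Evidence items named in the report, rated against each hypothesis.
# H1: insufficient data validation / context understanding
# H2: training-data bias / algorithmic limitations
evidence_matrix = {
    "incorrect sourcing": {"H1": CONSISTENT, "H2": NEUTRAL},
    "unverifiable attributions": {"H1": CONSISTENT, "H2": INCONSISTENT},
}


def inconsistency_scores(matrix):
    """Count, per hypothesis, how many evidence items are rated inconsistent."""
    scores = {}
    for ratings in matrix.values():
        for hypothesis, rating in ratings.items():
            scores.setdefault(hypothesis, 0)
            if rating == INCONSISTENT:
                scores[hypothesis] += 1
    return scores


def rank_hypotheses(matrix):
    """Return hypotheses ordered from least to most inconsistent evidence."""
    scores = inconsistency_scores(matrix)
    return sorted(scores, key=lambda h: scores[h])


if __name__ == "__main__":
    print(inconsistency_scores(evidence_matrix))
    print(rank_hypotheses(evidence_matrix))
```

Ranking by inconsistency rather than by the amount of supporting evidence reflects the idea that evidence compatible with every hypothesis does little to discriminate between them.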

3. Key Assumptions and Red Flags

The analysis assumes that AI models can be improved through better data validation and transparency. One red flag is the lack of detailed methodology in the study, which could affect the reliability of its findings. Another is potential bias in how the AI models were selected for evaluation.

4. Implications and Strategic Risks

The misrepresentation of news by AI models could exacerbate misinformation and disinformation, eroding public trust in media and technology. That erosion could in turn lead to increased regulatory scrutiny and, if AI-generated content influences public opinion or policy, to geopolitical tensions.

5. Recommendations and Outlook

- Encourage AI developers to implement rigorous data validation and transparency measures.

- Promote media literacy programs to help consumers critically evaluate AI-generated content.

- Scenario-based projections:

  - Best Case: AI models improve accuracy, enhancing public trust and reducing misinformation.

  - Worst Case: Continued inaccuracies lead to widespread misinformation, regulatory backlash, and decreased trust in AI technologies.

  - Most Likely: Incremental improvements in AI accuracy with ongoing challenges in data validation and bias mitigation.

6. Key Individuals and Entities

Jean Philip De Tender, Pete Archer, Jonathan Hendrickx.

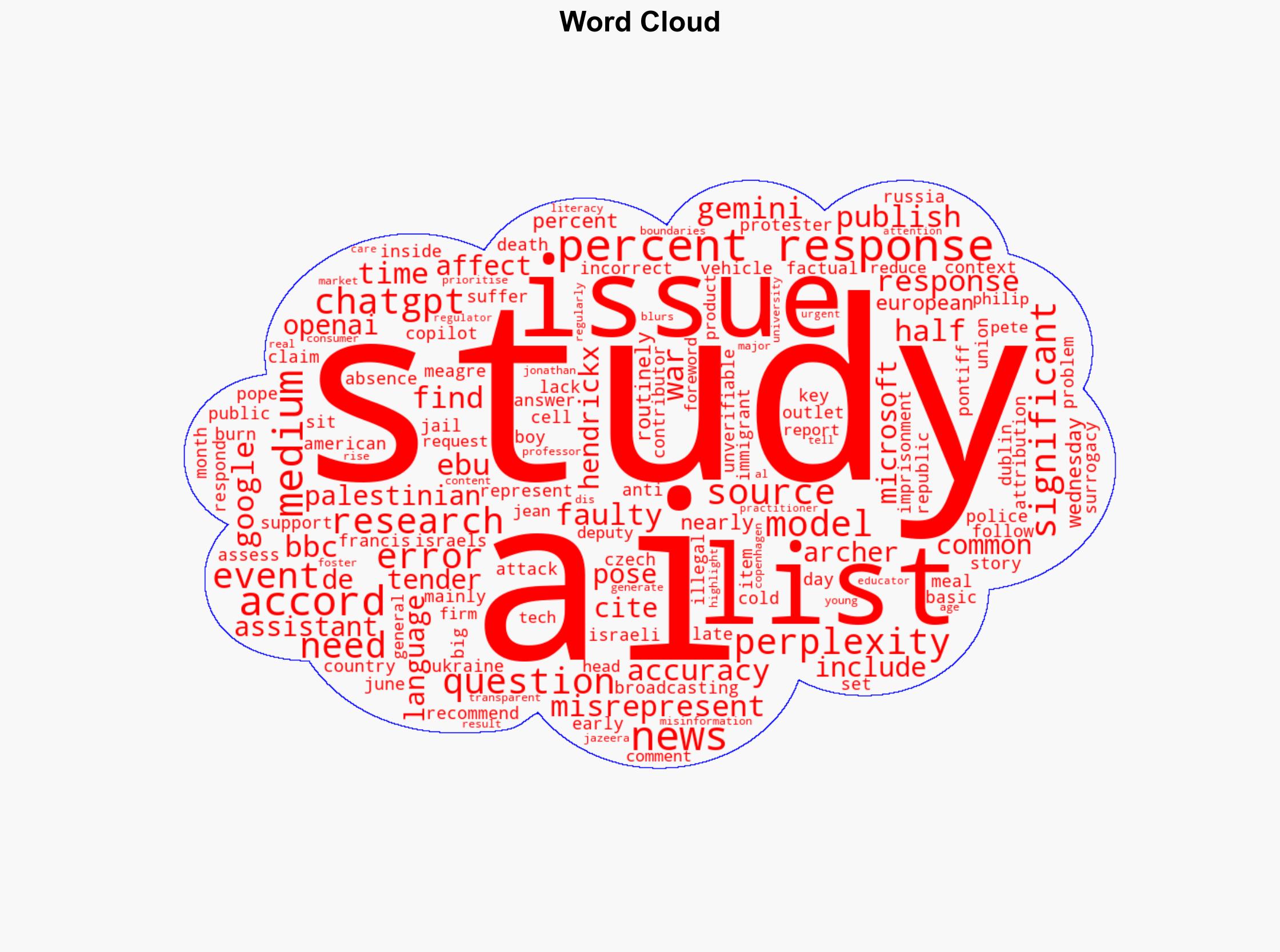

7. Thematic Tags

national security threats, cybersecurity, misinformation, AI ethics, media literacy