Why Are We Talking About Superintelligence – Calnewport.com

Published on: 2025-11-03

Intelligence Report: Why Are We Talking About Superintelligence – Calnewport.com

1. BLUF (Bottom Line Up Front)

The best-supported hypothesis is that the discourse on superintelligence is driven by a combination of speculative thinking and a cultural shift within tech circles that emphasizes existential risk. Confidence in this judgment is moderate because the topic is speculative and there is a lack of empirical evidence that superintelligence is imminent. Recommended actions include fostering balanced discussions of AI advancements, emphasizing evidence-based assessments, and preparing for a range of AI development scenarios.

2. Competing Hypotheses

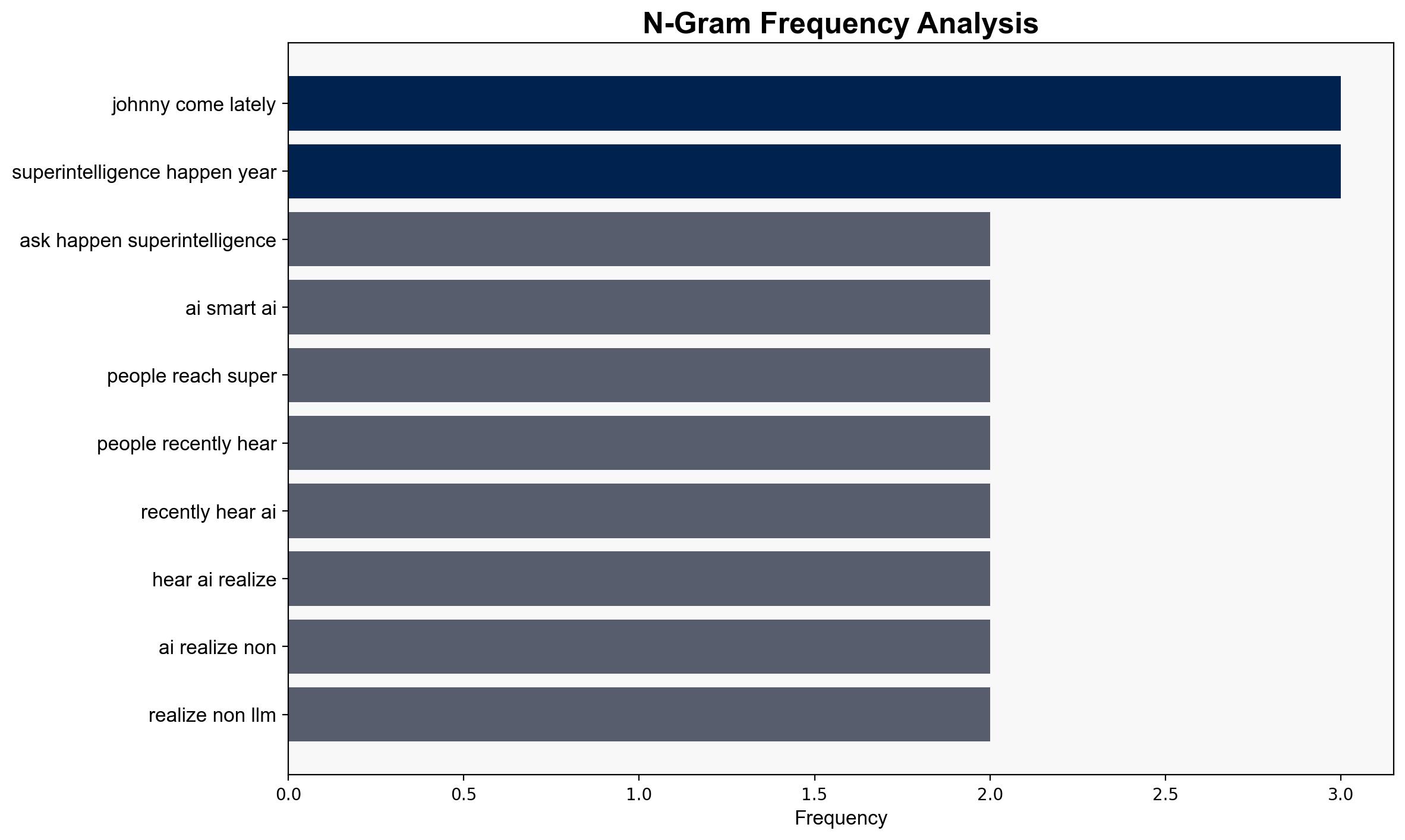

1. **Hypothesis A**: The focus on superintelligence is primarily a result of speculative thinking and cultural dynamics within tech circles, particularly those emphasizing existential risks.

2. **Hypothesis B**: The discourse is justified by genuine technological advancements that indicate the potential for superintelligence in the near future.

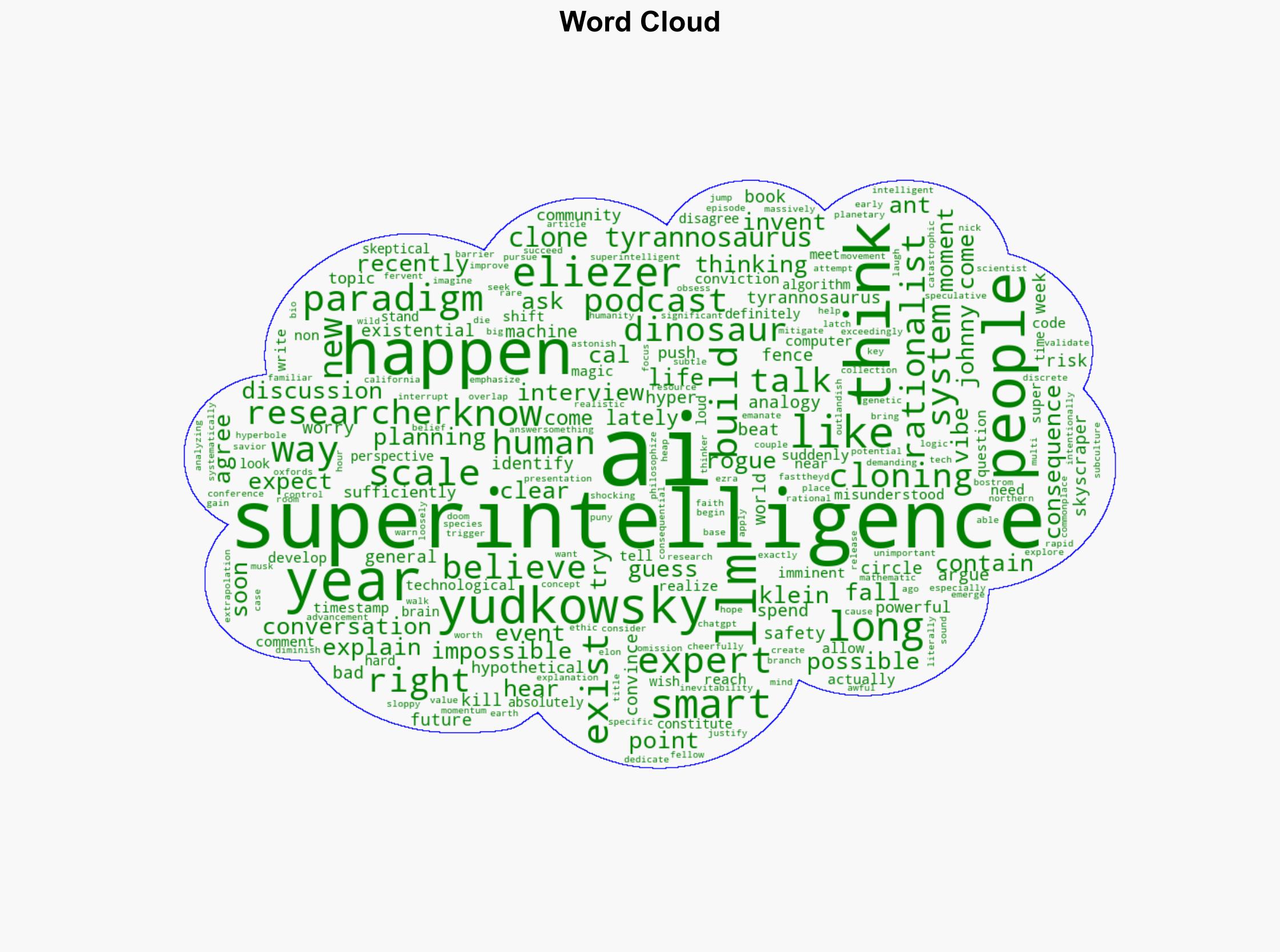

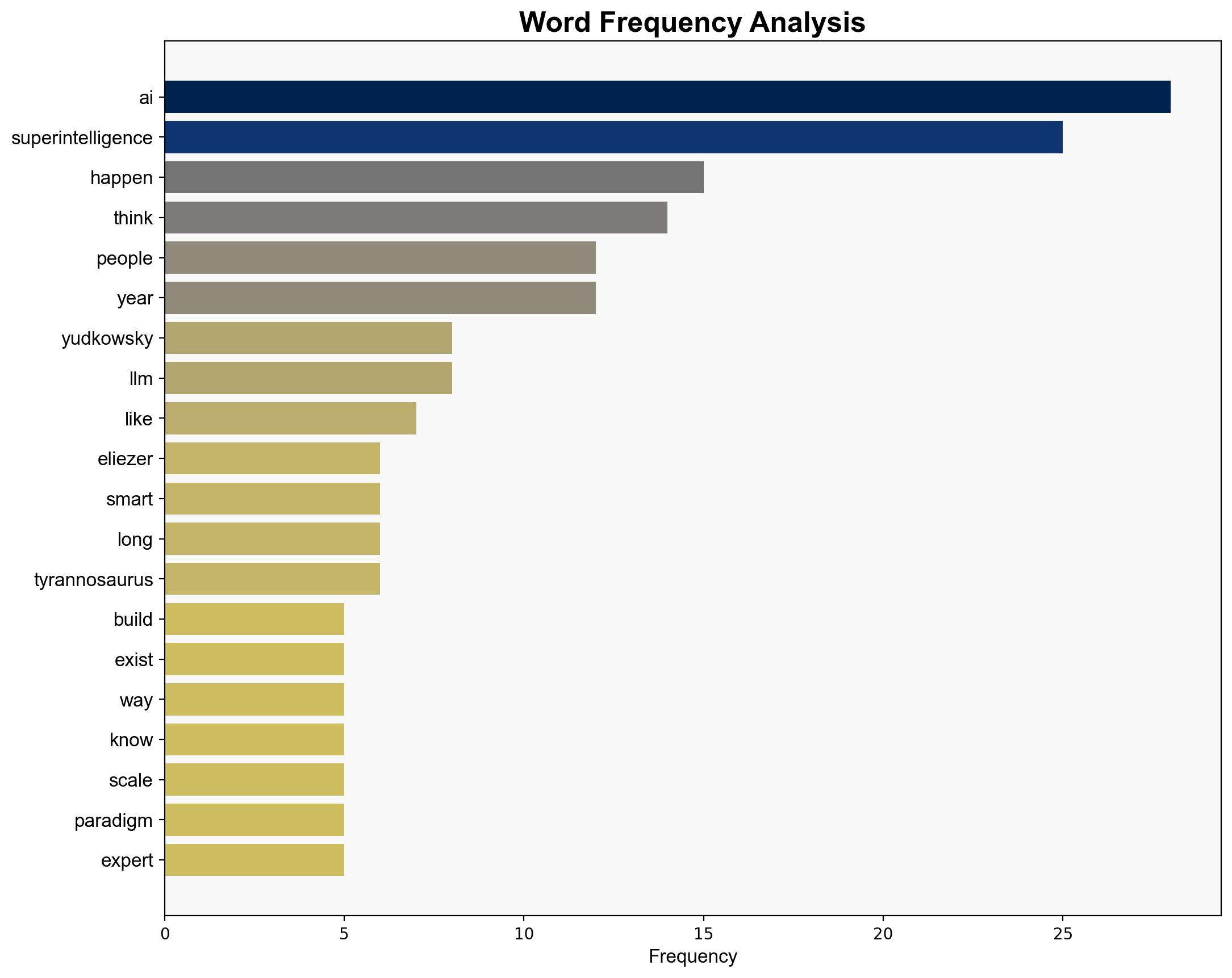

Using ACH 2.0, Hypothesis A is better supported by the evidence, which highlights the speculative nature of the discussions and the cultural factors driving the narrative, such as the influence of prominent figures like Elon Musk and the rationalist community.
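The comparison step can be sketched in a few lines, assuming "ACH" here refers to Analysis of Competing Hypotheses, where each piece of evidence is rated against each hypothesis and the hypothesis with the least inconsistent evidence is preferred. The evidence labels below are taken from this report; the C/N/I ratings and the weights are illustrative assumptions, not the report's actual matrix.

```python
# Minimal ACH-style consistency matrix (illustrative sketch only).
# Ratings: "C" = consistent, "N" = neutral, "I" = inconsistent.
# ASSUMPTION: these ratings and weights are placeholders chosen for
# demonstration; the report does not publish its underlying scores.
RATING_WEIGHTS = {"C": 0, "N": 0, "I": -1}

evidence = {
    "Lack of empirical evidence for imminent superintelligence": {"A": "C", "B": "I"},
    "Reliance on speculative analogies and hyperbolic language": {"A": "C", "B": "N"},
    "Influence of prominent figures and the rationalist community": {"A": "C", "B": "N"},
}


def inconsistency_scores(matrix):
    """Sum the rating weights per hypothesis; only inconsistencies count against it."""
    scores = {}
    for ratings in matrix.values():
        for hypothesis, rating in ratings.items():
            scores[hypothesis] = scores.get(hypothesis, 0) + RATING_WEIGHTS[rating]
    return scores


def rank_hypotheses(matrix):
    """Order hypotheses from least to most inconsistent with the evidence."""
    scores = inconsistency_scores(matrix)
    return sorted(scores, key=lambda h: scores[h], reverse=True)


scores = inconsistency_scores(evidence)
ranking = rank_hypotheses(evidence)
print(scores)
print(ranking)
```

Scoring only inconsistencies reflects the method's emphasis on disconfirming evidence: a hypothesis is weakened by evidence it cannot explain, rather than strengthened by evidence that fits several hypotheses equally well.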

3. Key Assumptions and Red Flags

- **Assumptions**: Hypothesis A assumes that cultural and speculative factors are the primary drivers of the discourse. Hypothesis B assumes that technological advancements are significant enough to warrant serious concern about superintelligence.

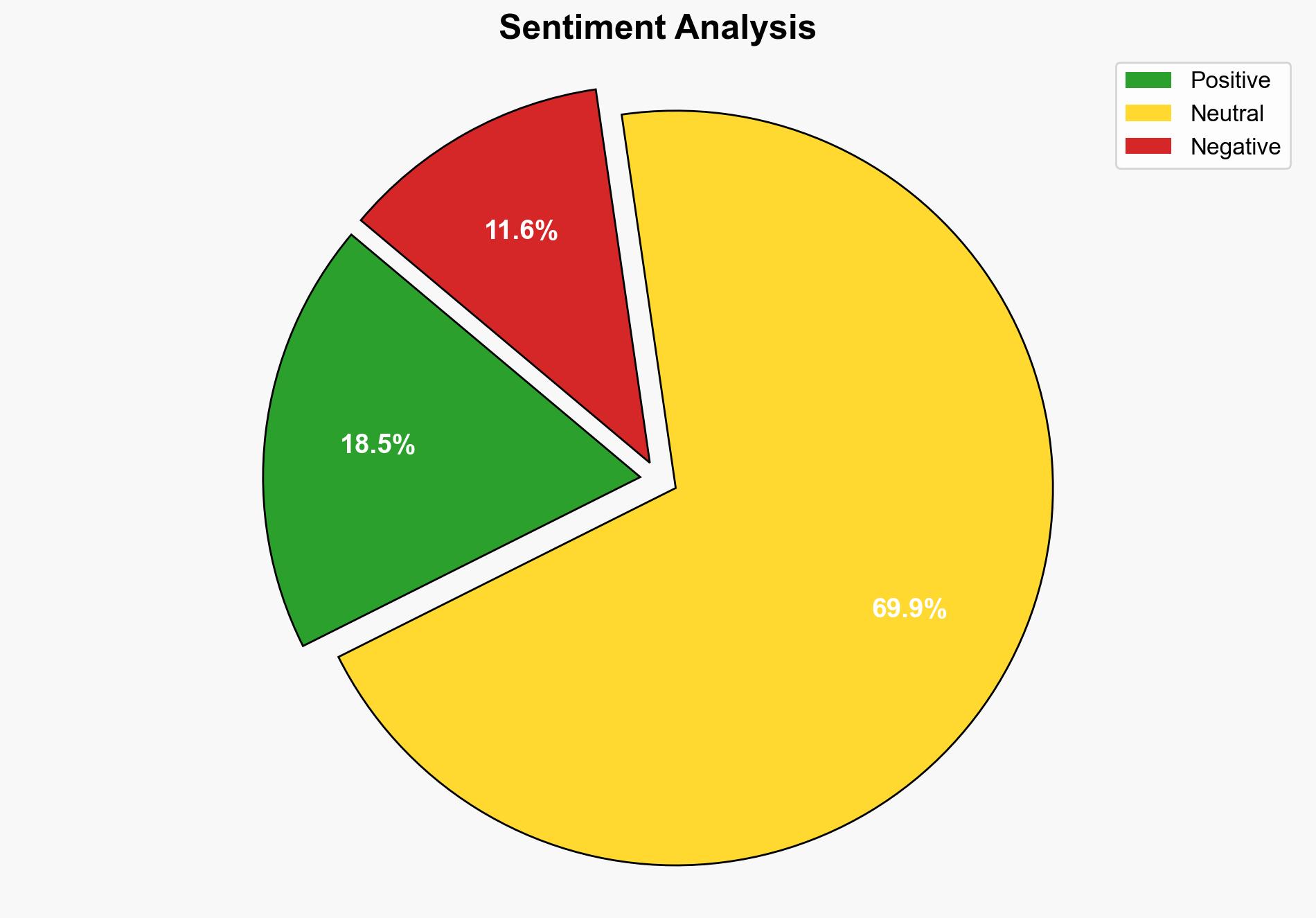

- **Red Flags**: The lack of empirical evidence supporting imminent superintelligence is a red flag for Hypothesis B. Additionally, the reliance on speculative analogies and hyperbolic language in the discourse may indicate cognitive bias.

4. Implications and Strategic Risks

- **Economic**: Overemphasis on speculative risks may divert resources from more immediate AI-related challenges, such as ethical AI deployment and job displacement.

- **Cyber**: Misguided focus on superintelligence could lead to neglect of current cybersecurity threats posed by existing AI technologies.

- **Geopolitical**: The narrative could influence policy decisions, potentially leading to international tensions over AI development and regulation.

- **Psychological**: Public perception of AI could be skewed, leading to fear or unrealistic expectations about AI capabilities.

5. Recommendations and Outlook

- Encourage balanced, evidence-based discussions on AI advancements to prevent undue panic or complacency.

- Scenario-based projections:

  - **Best Case**: Balanced discourse leads to informed policy and public understanding.

  - **Worst Case**: Speculative narratives dominate, leading to misguided policies and public fear.

  - **Most Likely**: Continued debate with incremental policy adjustments based on emerging evidence.

6. Key Individuals and Entities

- Eliezer Yudkowsky

- Ezra Klein

- Elon Musk

- Nick Bostrom

7. Thematic Tags

national security threats, cybersecurity, existential risk, AI ethics, technological advancement