What happens when employees take control of AI – Help Net Security

Published on: 2025-11-14

AI-powered OSINT brief from verified open sources. Automated NLP signal extraction with human verification. See our Methodology and Why WorldWideWatchers.

Intelligence Report: What happens when employees take control of AI – Help Net Security

1. BLUF (Bottom Line Up Front)

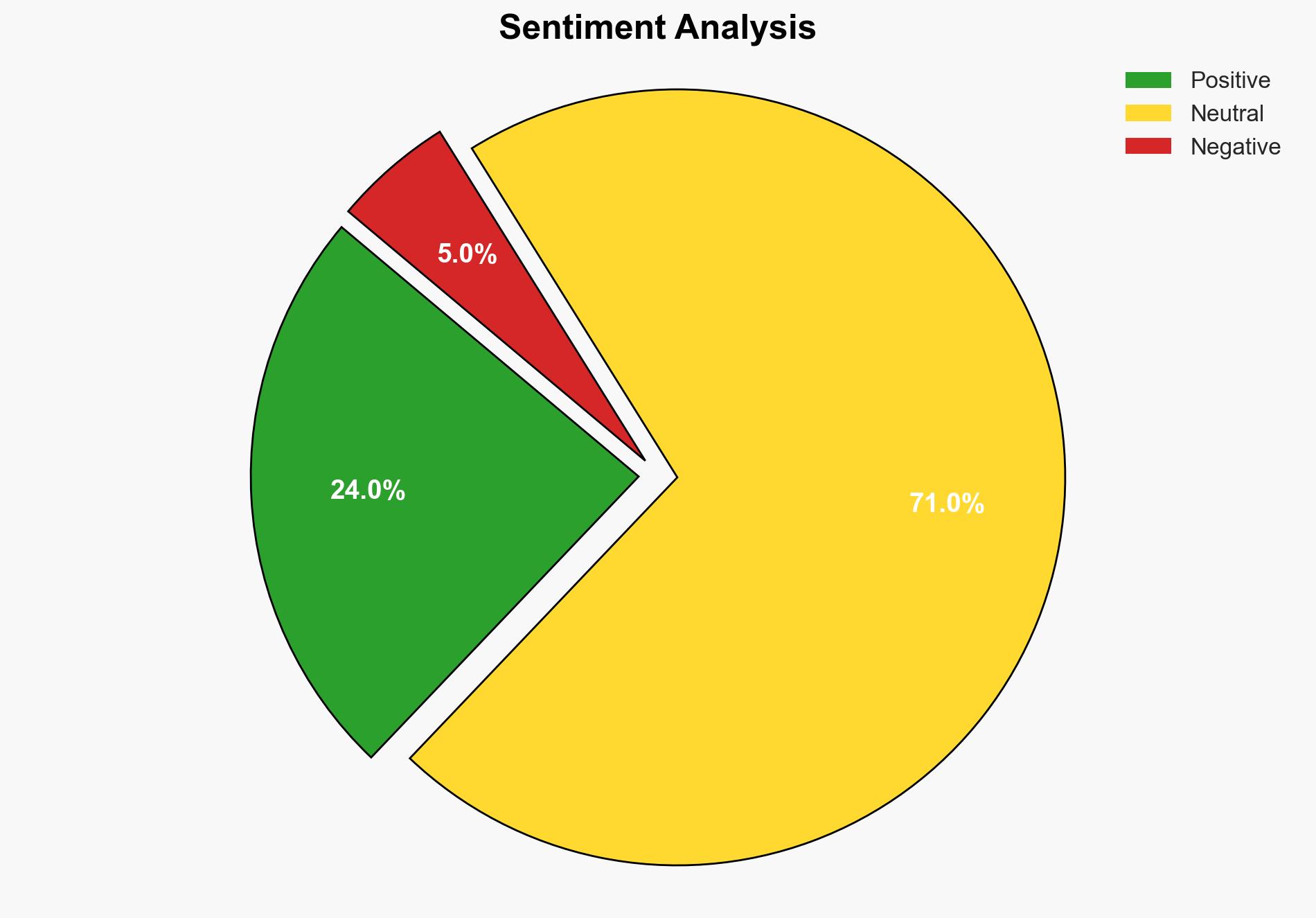

We assess with moderate confidence that empowering employees to lead AI initiatives will yield significant operational efficiencies and innovation within enterprises. However, without robust governance and risk management frameworks, this shift could expose organizations to compliance and security risks. Organizations should develop comprehensive AI governance structures that balance innovation with security and compliance.

2. Competing Hypotheses

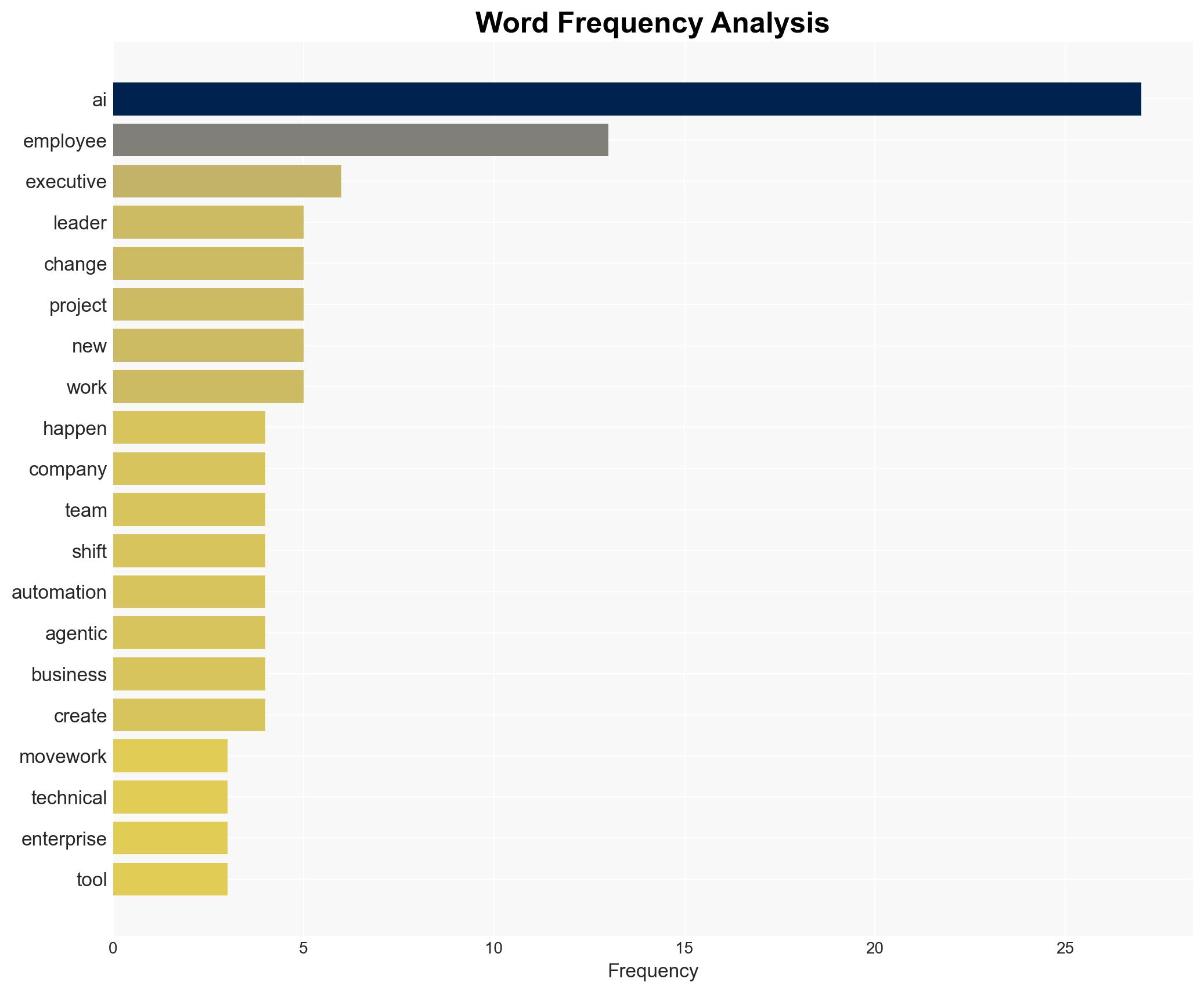

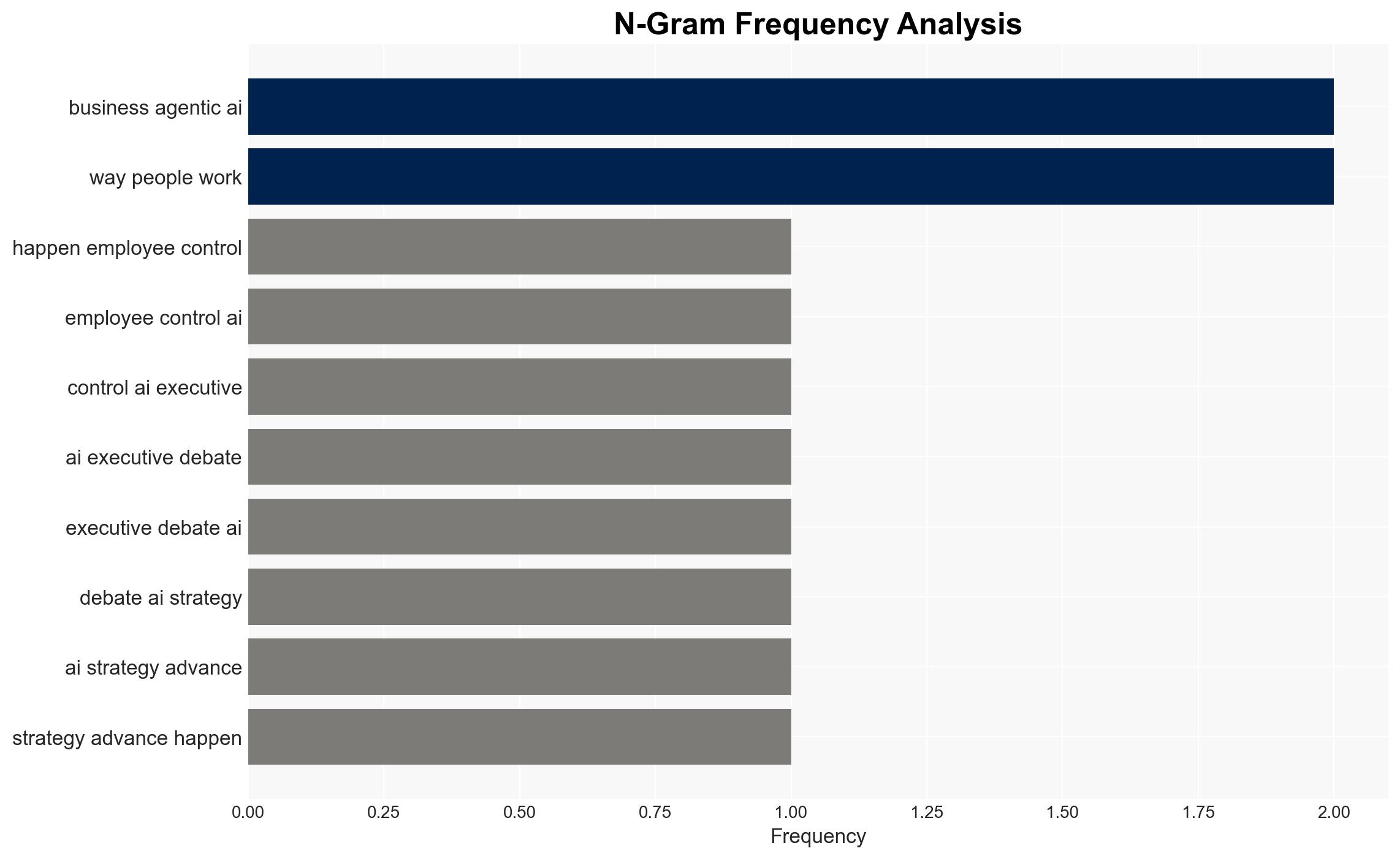

Hypothesis 1: Empowering employees to lead AI initiatives will drive innovation and operational efficiency, as employees closest to the work understand the challenges and opportunities for automation.

Hypothesis 2: Allowing employees to control AI projects without adequate oversight will lead to increased security and compliance risks, potentially outweighing the benefits of innovation.

Hypothesis 1 is the more likely of the two, given evidence that non-technical employees have successfully led AI projects, identified automation opportunities, and created new roles. However, the lack of governance frameworks remains a significant risk, which lends weight to the concerns raised in Hypothesis 2.

3. Key Assumptions and Red Flags

Assumptions: Employees have the necessary skills and understanding to effectively manage AI projects. Organizations will implement adequate governance frameworks.

Red Flags: Lack of comprehensive AI governance and risk management frameworks. Over-reliance on employee-led initiatives without executive oversight.

4. Implications and Strategic Risks

The strategic risks include potential non-compliance with data protection regulations, increased vulnerability to cyber threats, and operational disruptions due to poorly managed AI projects. Politically, there could be backlash if AI projects lead to significant job displacement. Economically, organizations may face financial penalties for compliance breaches.

5. Recommendations and Outlook

- Develop and implement robust AI governance and risk management frameworks to ensure compliance and security.

- Provide training and support to employees to enhance their AI project management skills.

- Best-case scenario: Organizations achieve significant operational efficiencies and innovation with minimal risk exposure.

- Worst-case scenario: Security breaches and compliance failures lead to financial and reputational damage.

- Most-likely scenario: Organizations experience a mix of innovation and risk, necessitating ongoing adjustments to governance frameworks.

6. Key Individuals and Entities

Bhavin Shah, CEO of Moveworks, is a key figure advocating for employee-led AI initiatives. His insights reflect the broader industry trend toward empowering employees in AI projects.

7. Thematic Tags

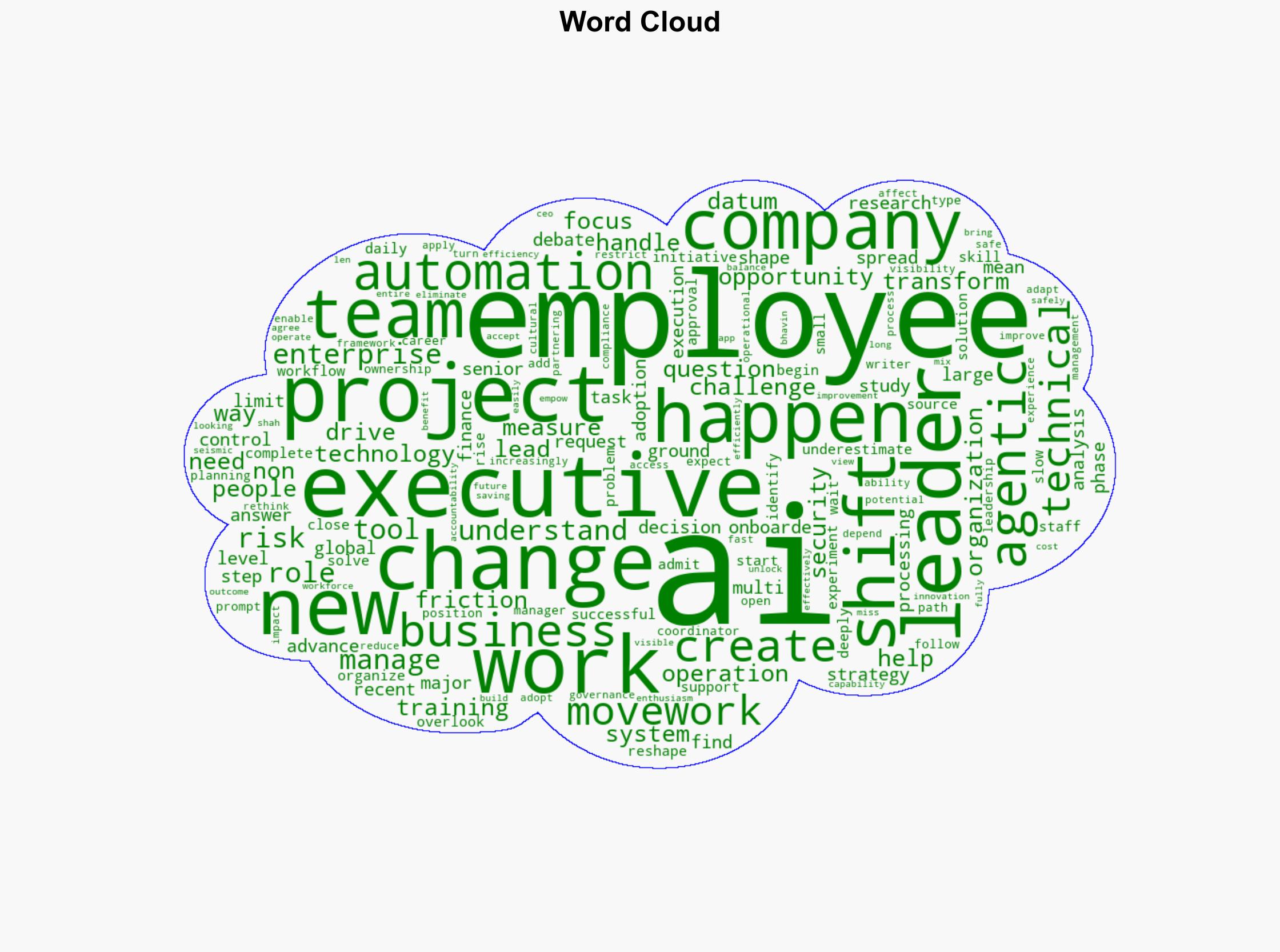

Cybersecurity, AI Governance, Employee Empowerment, Operational Efficiency, Risk Management

Structured Analytic Techniques Applied

- Adversarial Threat Simulation: Model and simulate actions of cyber adversaries to anticipate vulnerabilities and improve resilience.

- Indicators Development: Detect and monitor behavioral or technical anomalies across systems for early threat detection.

- Bayesian Scenario Modeling: Quantify uncertainty and predict cyberattack pathways using probabilistic inference.

- Cognitive Bias Stress Test: Apply structured challenge to expose and correct analytic biases.
