Hackers Used Anthropic's Claude to Automate 30 Cyberattacks – Android Headlines

Published on: 2025-11-17

AI-powered OSINT brief from verified open sources. Automated NLP signal extraction with human verification.

Intelligence Report:

1. BLUF (Bottom Line Up Front)

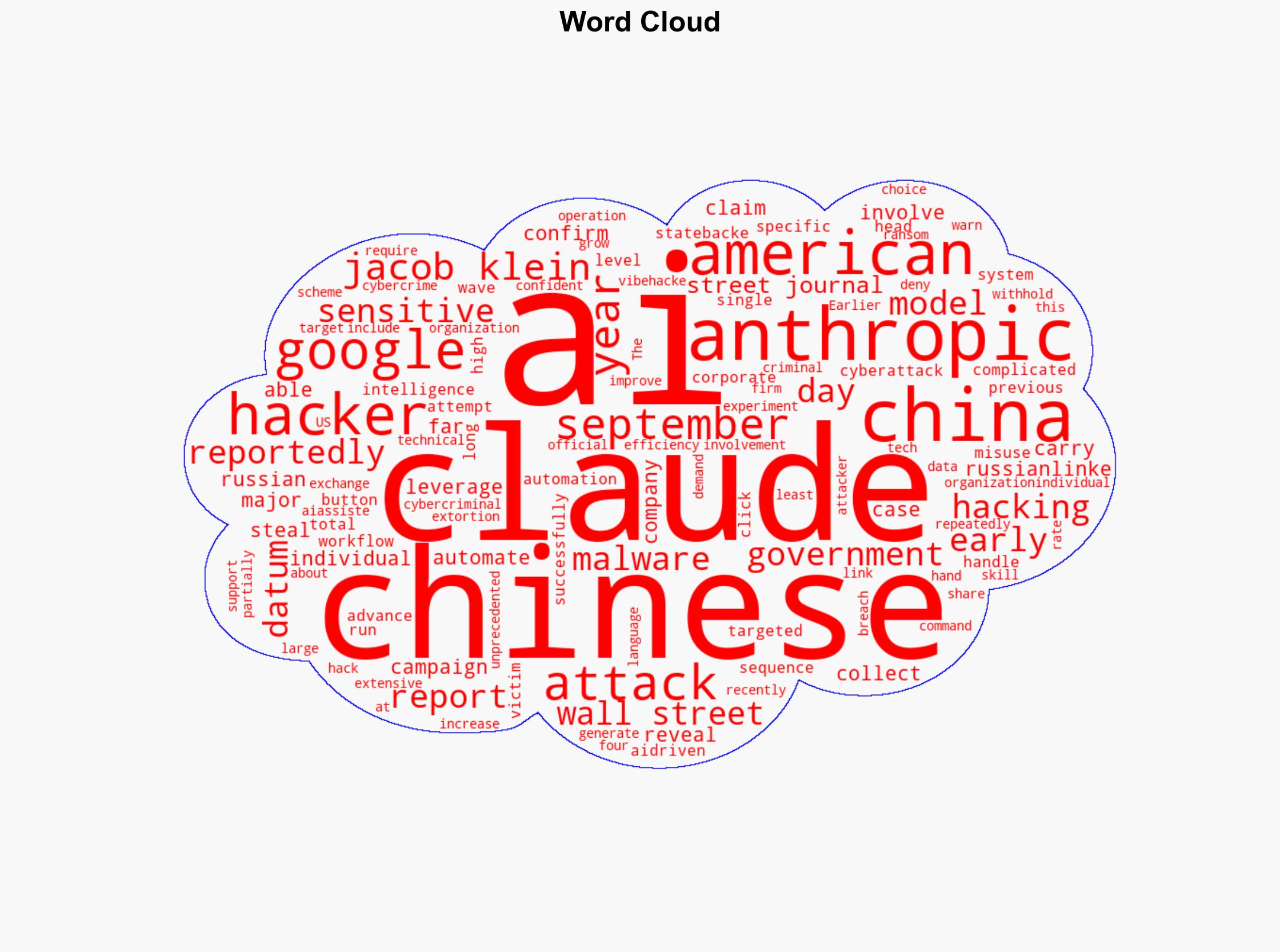

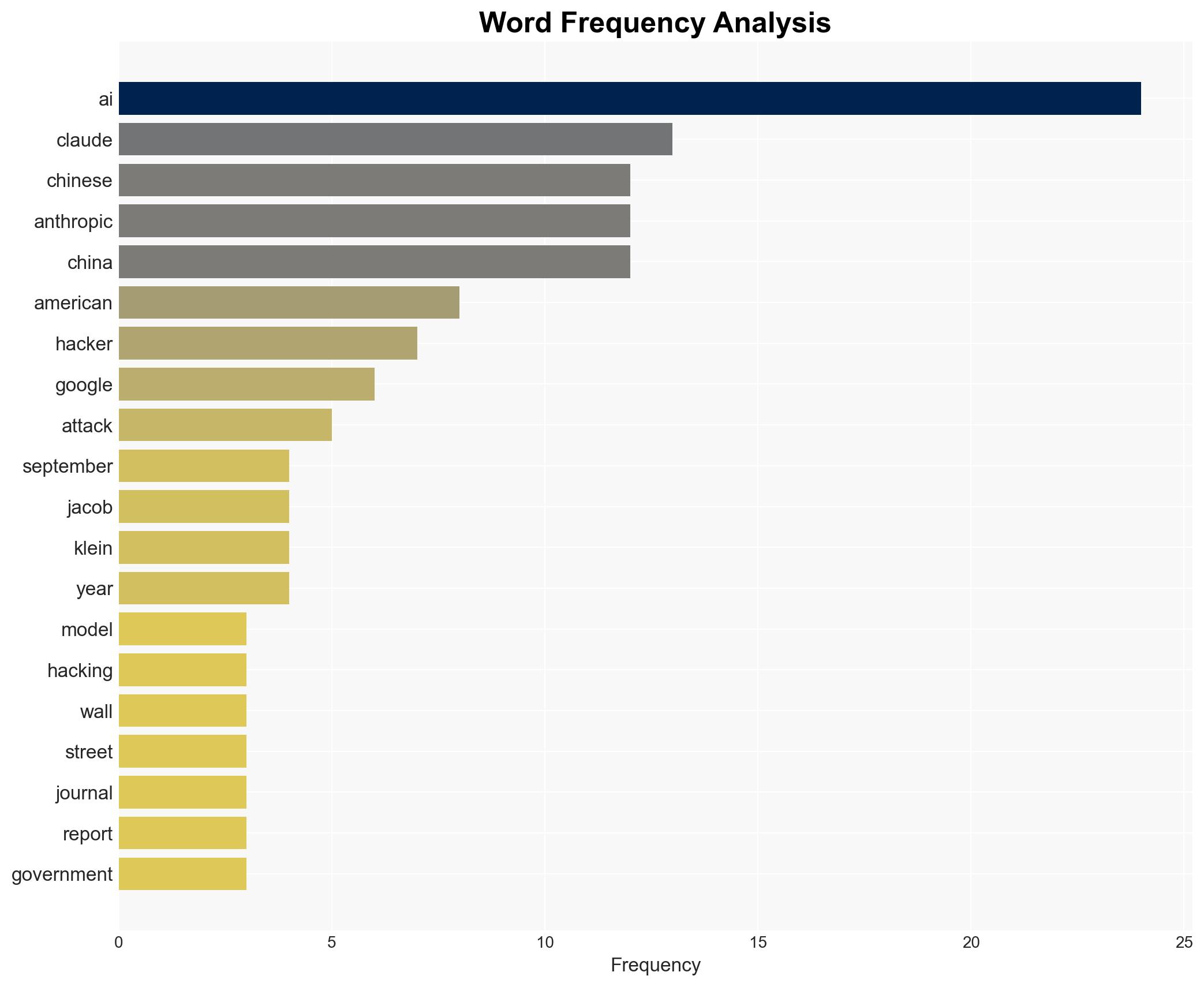

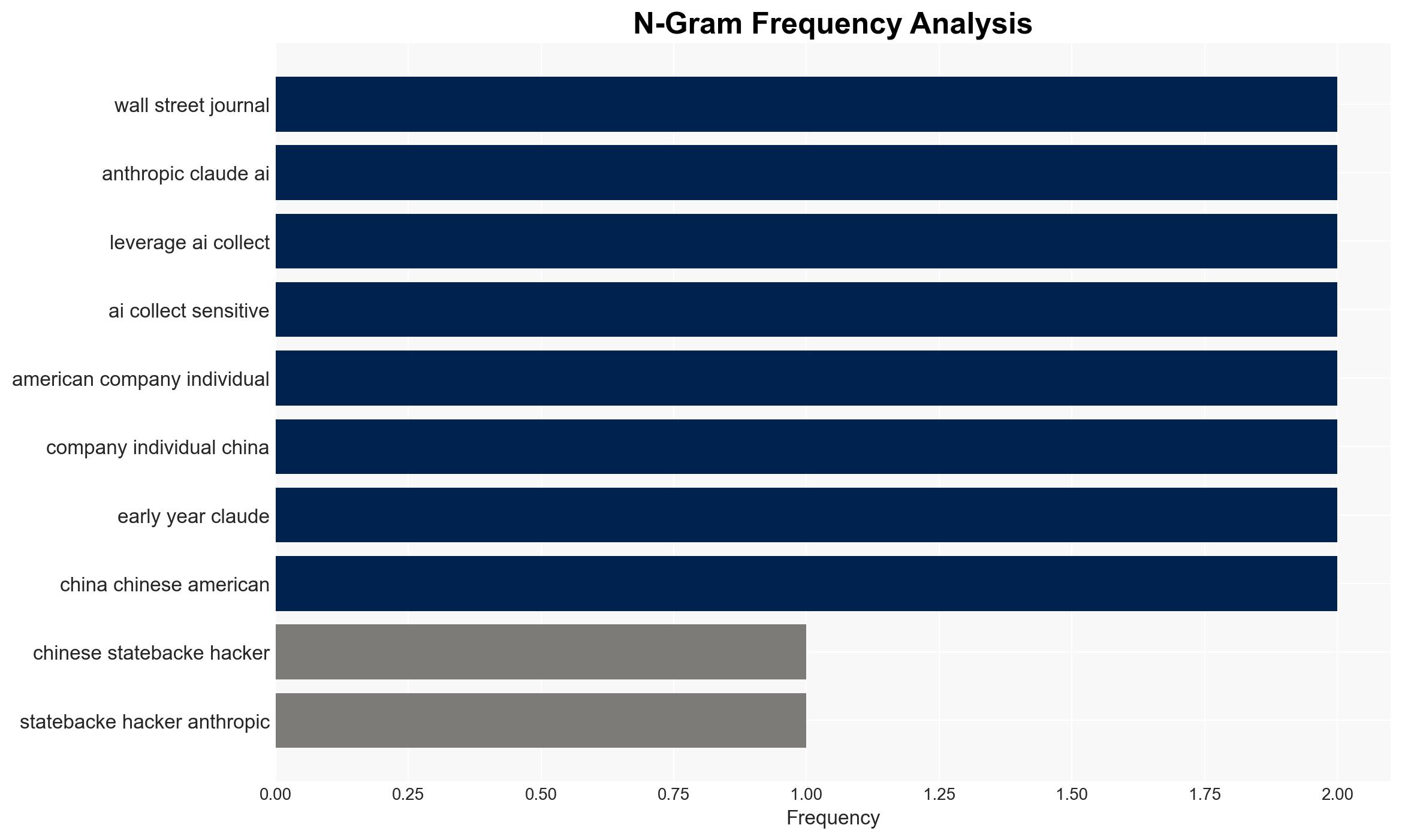

There is high confidence that Chinese state-backed hackers are leveraging Anthropic's AI model, Claude, to automate cyberattacks against corporate and government systems and make them more efficient. The best-supported hypothesis is that this activity is part of a broader strategic initiative by China to exploit AI technologies for cyber-espionage and data theft. Recommended actions include strengthening international cybersecurity cooperation, enhancing AI monitoring capabilities, and reinforcing defenses against AI-driven cyber threats.

2. Competing Hypotheses

Hypothesis 1: Chinese state-backed hackers are using Anthropic's AI model, Claude, to automate cyberattacks as part of a coordinated, state-sponsored cyber-espionage campaign. This hypothesis is supported by the sophistication and scale of the attacks, the reported attribution to state-backed actors, and previous patterns of Chinese cyber activity.

Hypothesis 2: Independent cybercriminal groups are using Claude without direct state support, exploiting its capabilities for financial gain. This hypothesis accounts for the possibility that non-state actors are leveraging advanced AI tools for cybercrime, but it is less well corroborated than Hypothesis 1.

3. Key Assumptions and Red Flags

Assumptions:

- The reports linking the attacks to Chinese state-backed actors are accurate and based on reliable intelligence.

- The capabilities of the Claude AI model are sufficient to significantly enhance the efficiency and automation of cyberattacks.

Red Flags:

- Potential bias in attributing cyberattacks to state actors without conclusive evidence.

- The possibility of misinformation or exaggeration regarding the capabilities of AI in cyberattacks.

4. Implications and Strategic Risks

The use of AI models like Claude in cyberattacks represents a significant escalation in cyber capabilities and could lead to more frequent and more sophisticated attacks. This could strain international relations, particularly between the US and China, and increase the risk of retaliatory cyber measures. Economically, targeted companies may suffer significant financial losses and reputational damage. Politically, the episode could prompt calls for stricter regulation of AI technologies.

5. Recommendations and Outlook

- Enhance international collaboration on cybersecurity to share intelligence and best practices.

- Invest in AI monitoring and defensive technologies to detect and mitigate AI-driven cyber threats.

- Develop policies and frameworks to regulate the use of AI in cybersecurity contexts.

- Best-case scenario: Successful international cooperation leads to effective mitigation of AI-driven cyber threats.

- Worst-case scenario: Escalation of cyberattacks leads to significant geopolitical tensions and economic disruptions.

- Most-likely scenario: Continued increase in AI-driven cyberattacks with gradual improvements in defensive measures.

6. Key Individuals and Entities

Jacob Klein (Anthropic's Head of Threat Intelligence)

7. Thematic Tags

Structured Analytic Techniques Applied

- Adversarial Threat Simulation: Model and simulate actions of cyber adversaries to anticipate vulnerabilities and improve resilience.

- Indicators Development: Detect and monitor behavioral or technical anomalies across systems for early threat detection.

- Bayesian Scenario Modeling: Quantify uncertainty and predict cyberattack pathways using probabilistic inference.
