You Can Now Ask Google Gemini Whether an Image is AI-Generated or Not

Published on: 2025-11-21

AI-powered OSINT brief from verified open sources. Automated NLP signal extraction with human verification.

Intelligence Report:

1. BLUF (Bottom Line Up Front)

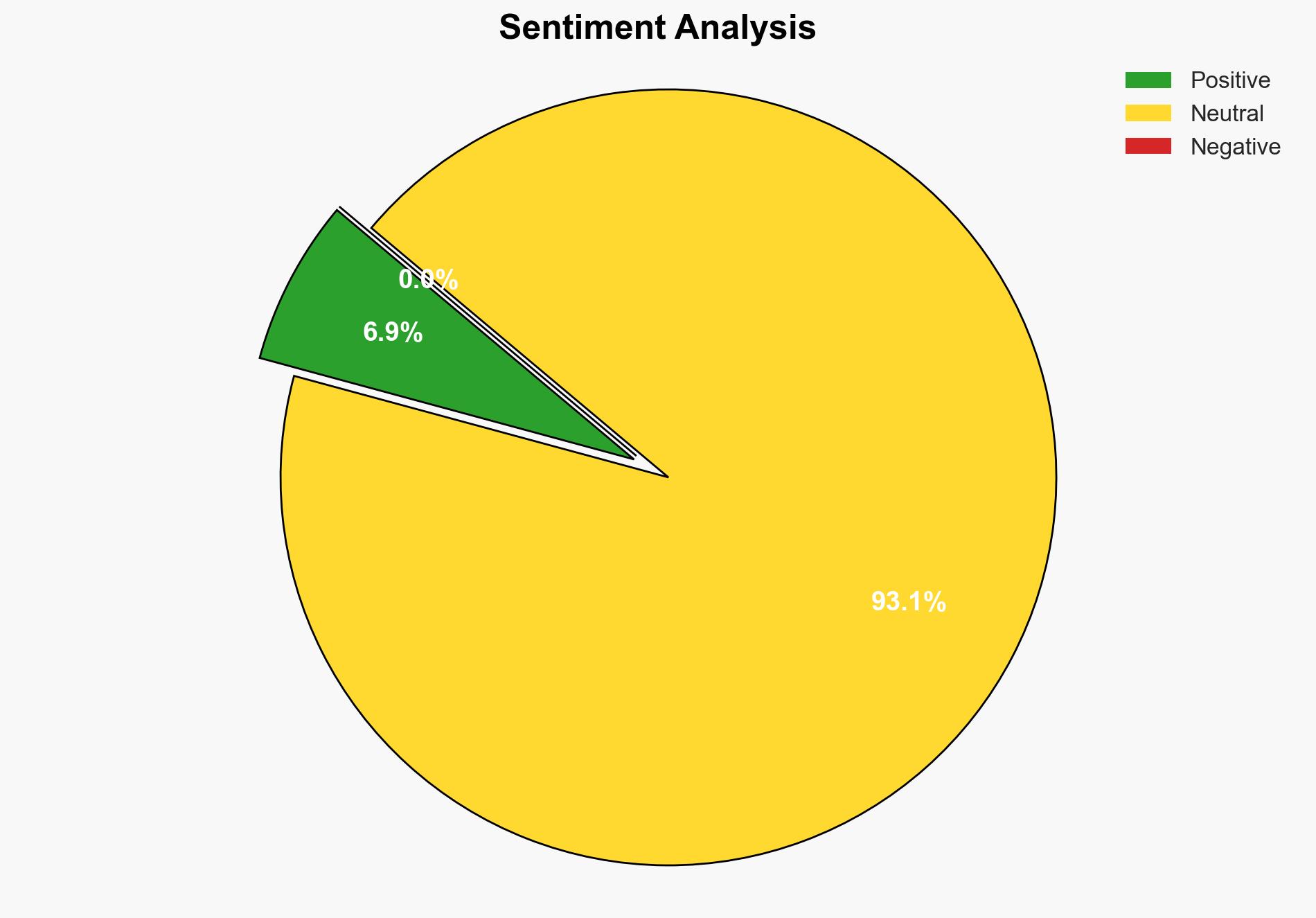

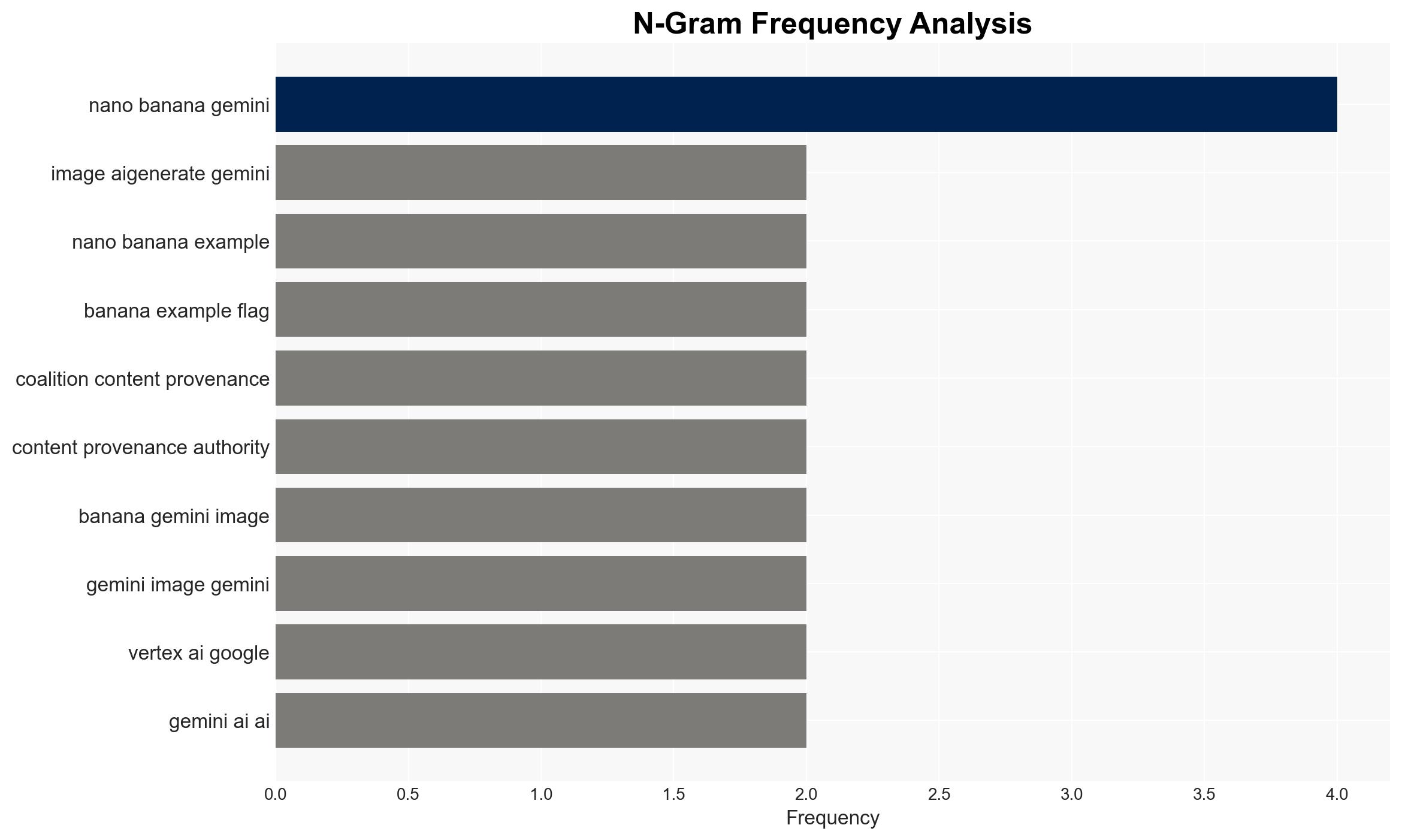

With moderate confidence, it is assessed that Google's new Gemini feature for identifying AI-generated images is a strategic move to establish leadership in AI transparency and content verification. The most supported hypothesis (Hypothesis 1 below) is that Google is embedding its SynthID watermarking technology across its ecosystem chiefly to build consumer trust in AI-generated content, which would also position it ahead of anticipated regulatory requirements. Recommended actions include monitoring the adoption and effectiveness of SynthID and assessing likely competitive responses from other tech companies.

2. Competing Hypotheses

Hypothesis 1: Google is introducing the Gemini feature primarily to enhance transparency and trust in AI-generated content, positioning itself as a leader in ethical AI use.

Hypothesis 2: Google’s move is primarily a strategic response to anticipated regulatory pressures and competition, aiming to set industry standards and secure market dominance in AI content verification.

Hypothesis 1 is assessed as more likely because Google's proactive integration of SynthID and its collaboration with the Coalition for Content Provenance and Authenticity (C2PA) indicate a focus on transparency and trust-building.

3. Key Assumptions and Red Flags

Assumptions: It is assumed that users will adopt the Gemini feature and that SynthID technology will be effective in identifying AI-generated content. It is also assumed that regulatory bodies will view these efforts favorably.

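Red Flags: Over-reliance on Google's proprietary technology could introduce biases or gaps in detection; because the check looks for a SynthID watermark, images that do not carry one, such as those from many non-Google generators, may go unflagged. There is also a risk of misleading competitive claims if other companies release similar detection tools without equivalent transparency about how they work and where they fail.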

4. Implications and Strategic Risks

The introduction of SynthID could set a precedent for AI content verification, influencing regulatory frameworks and industry standards. However, there are risks of increased competition in AI verification technologies, potential consumer skepticism if the technology fails, and geopolitical tensions if international standards diverge.

5. Recommendations and Outlook

- Monitor the adoption rate and user feedback of the Gemini feature to assess its impact on consumer trust and regulatory compliance.

- Engage with industry stakeholders to support the development of open standards for AI content verification.

- Best-case scenario: Google successfully establishes SynthID as the industry standard, enhancing its reputation and market share.

- Worst-case scenario: SynthID faces significant technical challenges or consumer backlash, leading to reputational damage and regulatory scrutiny.

- Most-likely scenario: Google gains a competitive edge in AI transparency, prompting similar initiatives from other tech companies.

6. Key Individuals and Entities

No specific individuals are mentioned in the source text. Key entities include Google, the Coalition for Content Provenance and Authenticity (C2PA), and potential competitors in the AI verification space.

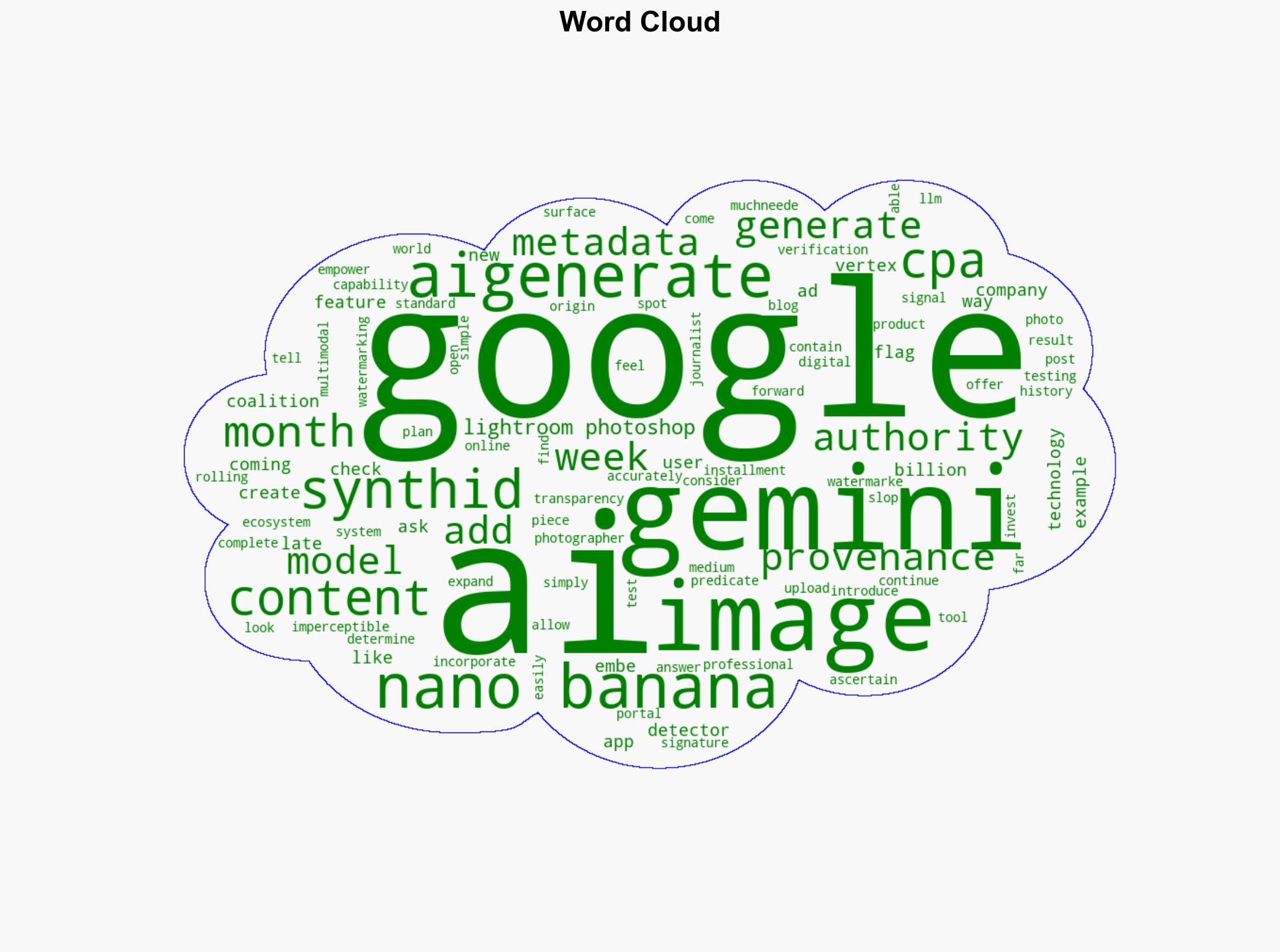

7. Thematic Tags

Cybersecurity, AI Transparency, Content Verification, Regulatory Compliance

Structured Analytic Techniques Applied

- Adversarial Threat Simulation: Model and simulate actions of cyber adversaries to anticipate vulnerabilities and improve resilience.

- Indicators Development: Detect and monitor behavioral or technical anomalies across systems for early threat detection.

- Bayesian Scenario Modeling: Quantify uncertainty and predict cyberattack pathways using probabilistic inference.
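To make the Bayesian Scenario Modeling step more concrete, the sketch below shows one way the two competing hypotheses in Section 2 could be reweighted with Bayes' rule, P(H | E) = P(E | H) P(H) / P(E). The function name `bayes_update`, the priors, and the likelihoods are hypothetical assumptions chosen for illustration; they are not estimates produced by this assessment.

```python
# Minimal sketch of a Bayesian update over two competing hypotheses.
# All priors and likelihoods below are HYPOTHETICAL placeholders for
# illustration only; they are not figures from this brief.


def bayes_update(priors: dict[str, float], likelihoods: dict[str, float]) -> dict[str, float]:
    """Return the posterior P(H | E) for each hypothesis, given P(H) and P(E | H)."""
    # Unnormalized posterior for each hypothesis: P(E | H) * P(H)
    joint = {h: priors[h] * likelihoods[h] for h in priors}
    # P(E): the normalizing constant, summed over all hypotheses
    evidence = sum(joint.values())
    if evidence == 0:
        raise ValueError("evidence has zero probability under every hypothesis")
    return {h: p / evidence for h, p in joint.items()}


# Hypothetical starting point: no preference between the two hypotheses.
priors = {"H1_transparency": 0.5, "H2_regulatory_competitive": 0.5}

# Hypothetical P(E | H), where E = "Google integrates SynthID detection into
# Gemini and collaborates with C2PA".
likelihoods = {"H1_transparency": 0.7, "H2_regulatory_competitive": 0.5}

posterior = bayes_update(priors, likelihoods)
for hypothesis, probability in posterior.items():
    print(f"{hypothesis}: {probability:.3f}")
```

With equal priors, whichever hypothesis makes the observed evidence more probable gains weight: under these assumed inputs H1 rises to about 0.58 and H2 falls to about 0.42. Different inputs would change the result, so any real use of this method needs sourced, documented estimates.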
