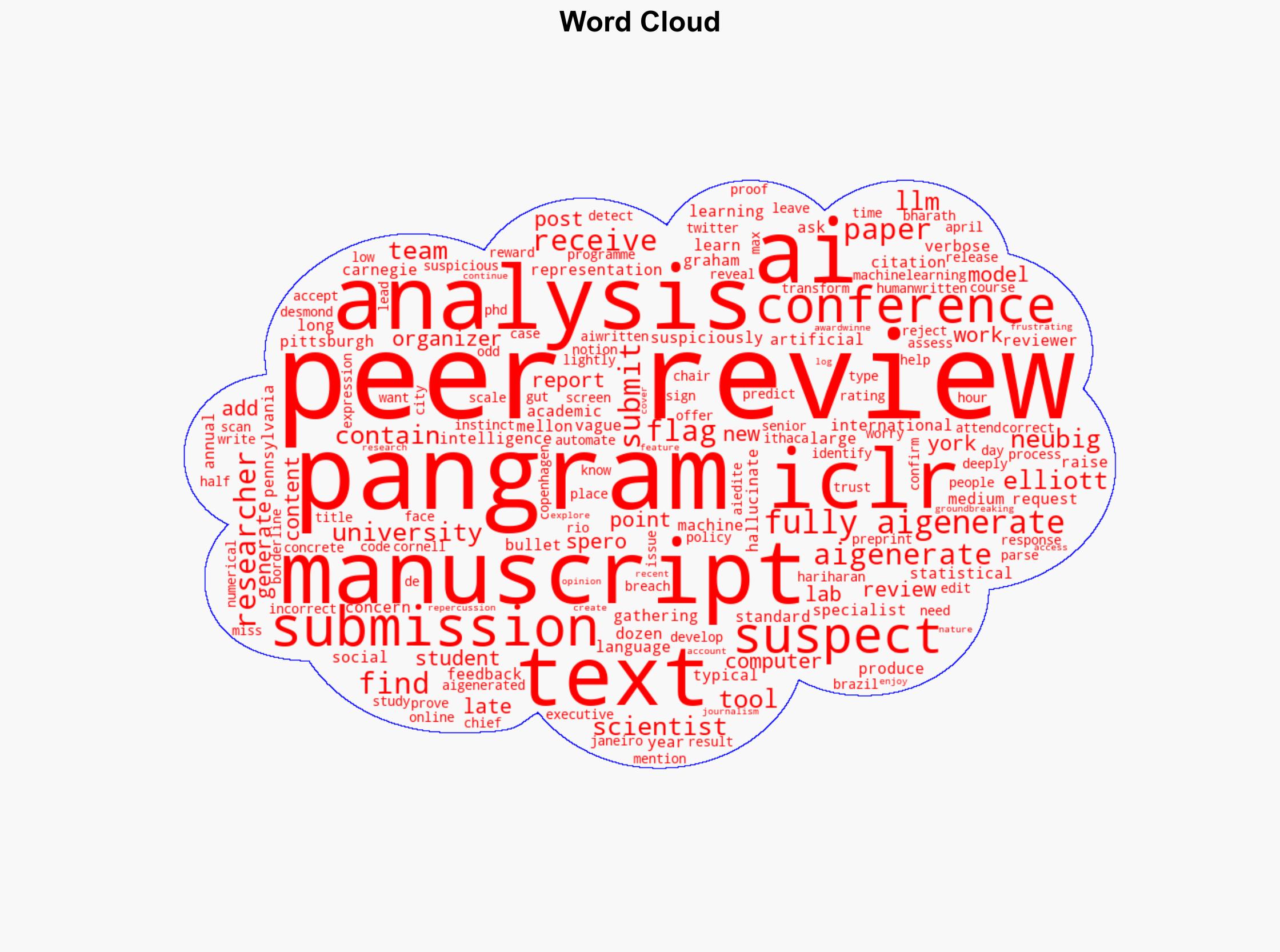

AI-Generated Reviews Dominate Major Conference, Raising Concerns Among Researchers

Published on: 2025-11-27

AI-powered OSINT brief from verified open sources. Automated NLP signal extraction with human verification.

Intelligence Report: Major AI conference flooded with peer reviews written entirely by AI

1. BLUF (Bottom Line Up Front)

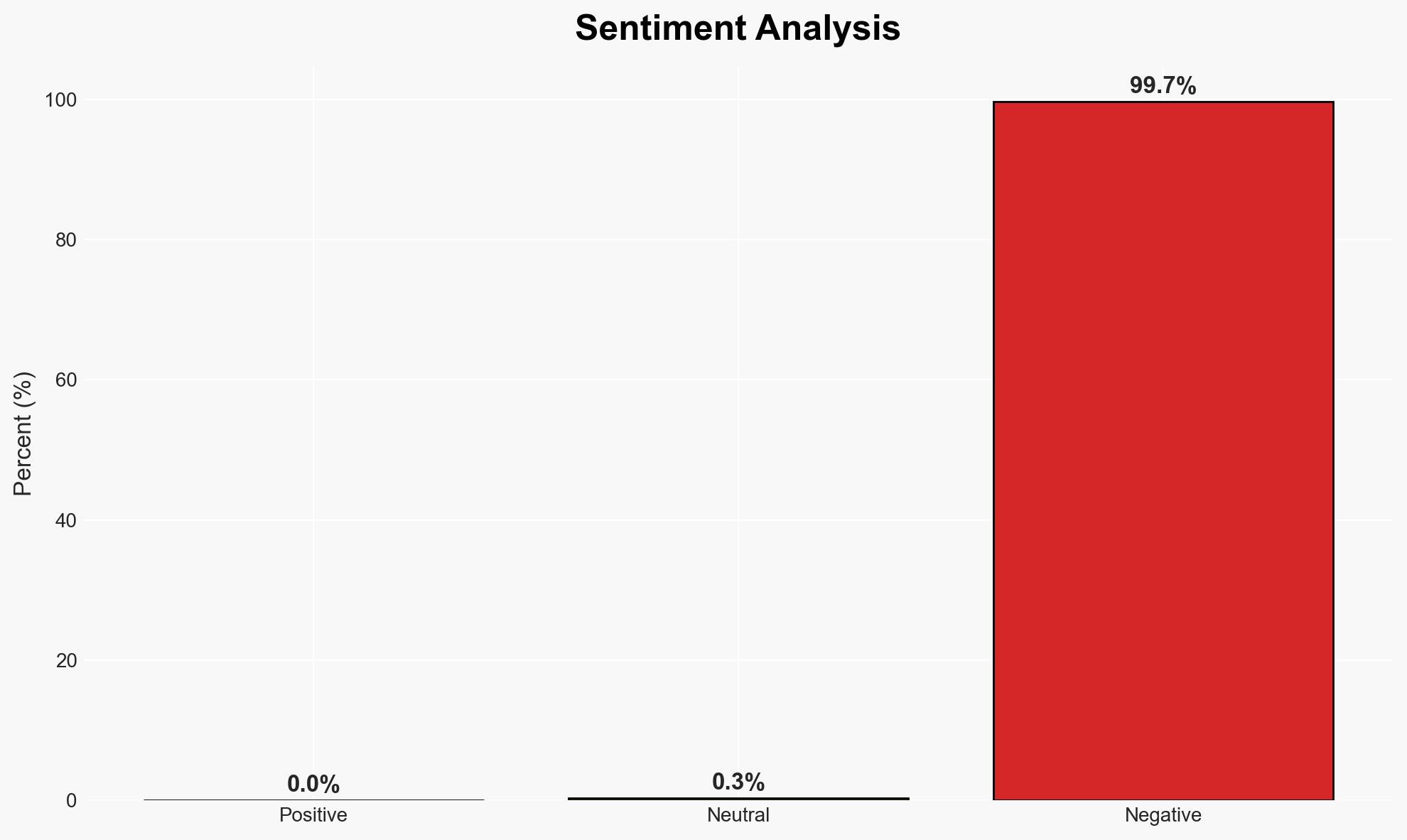

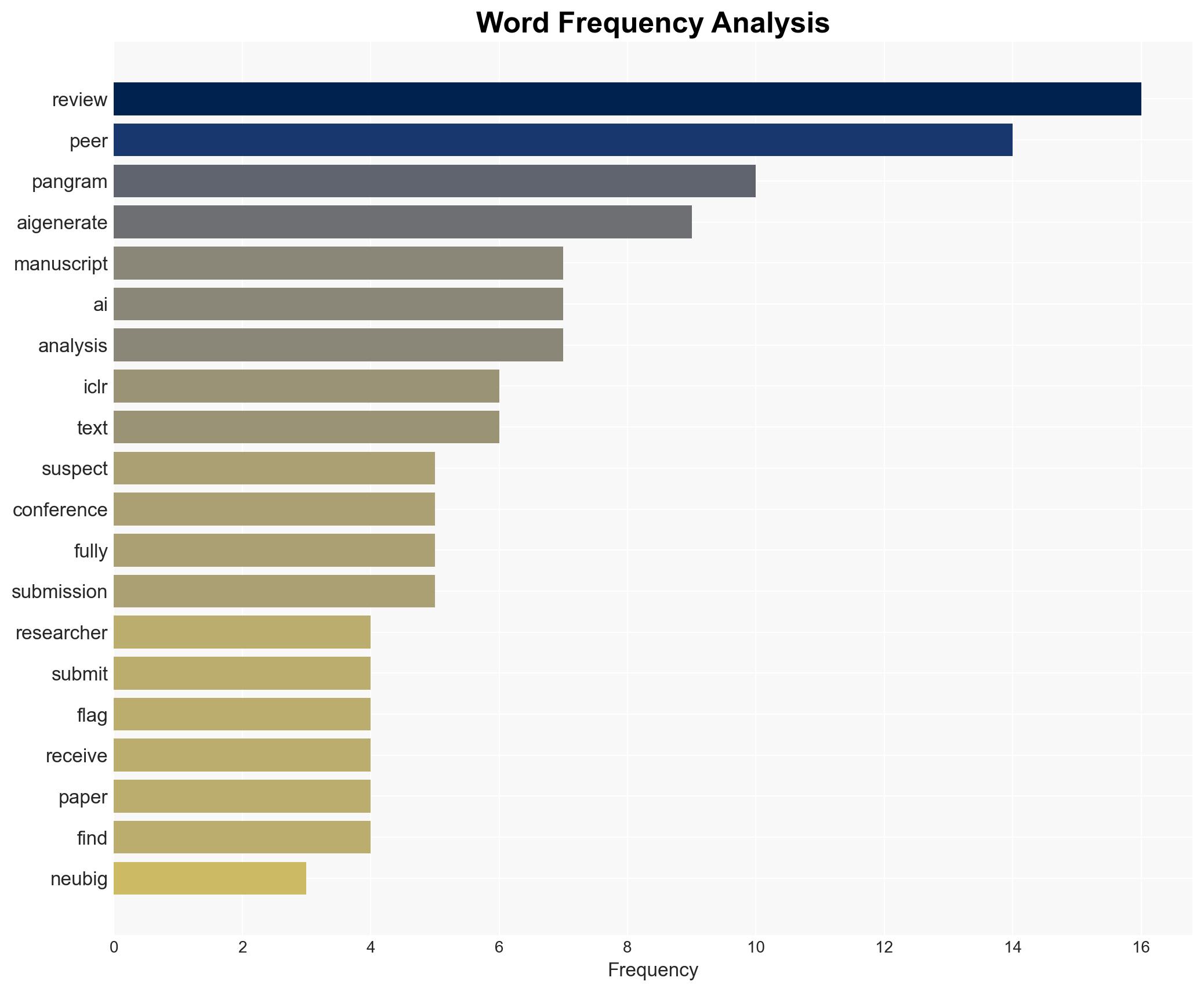

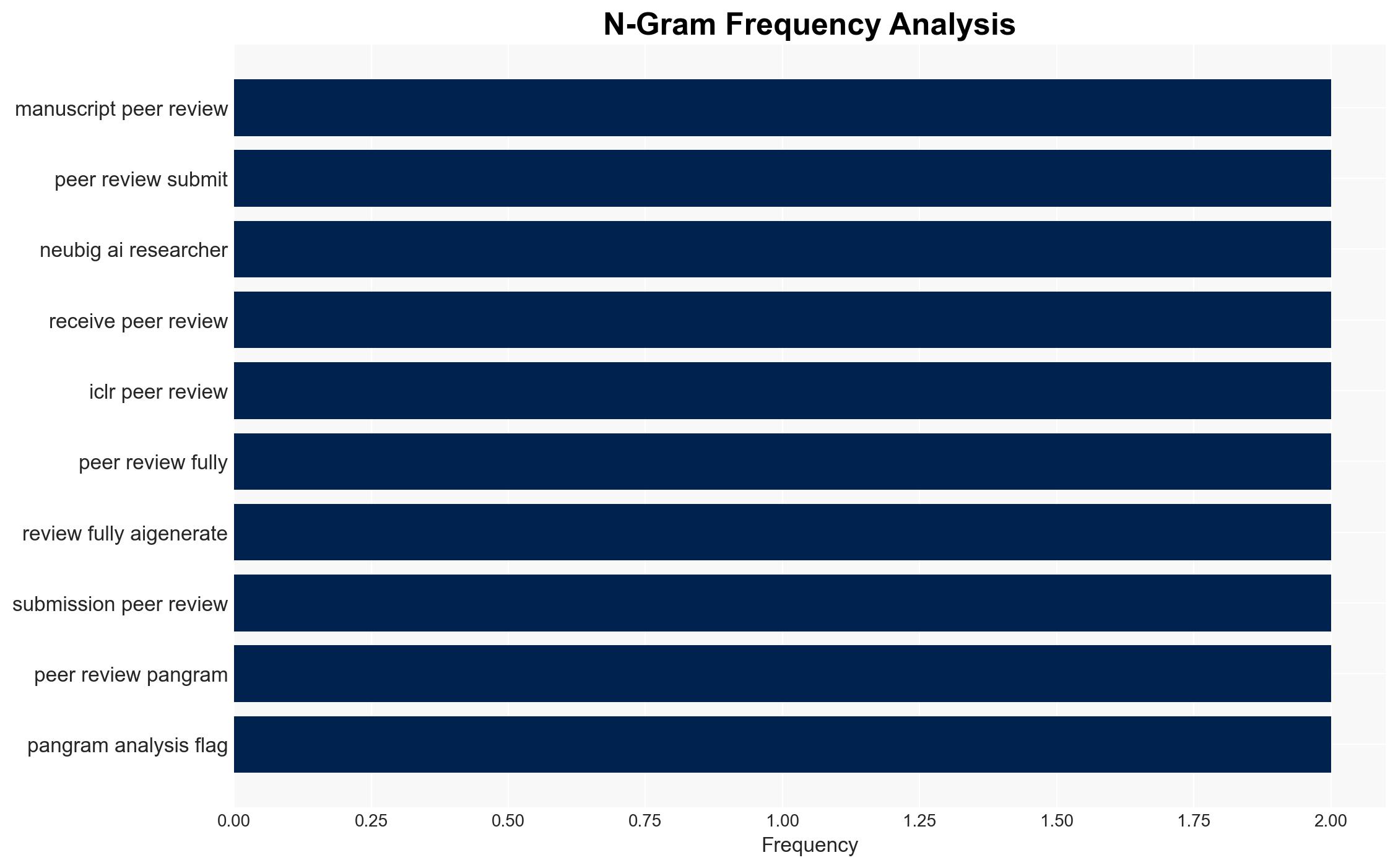

The International Conference on Learning Representations (ICLR) has been significantly affected by the submission of AI-generated peer reviews, raising concerns about the integrity of its review process. The most likely hypothesis is that AI was used to generate peer reviews both intentionally and on a wide scale, damaging the conference's credibility and potentially influencing which research papers are accepted. This assessment is made with moderate confidence because the available data are limited in scope and reporting may be biased.

2. Competing Hypotheses

- Hypothesis A: AI-generated peer reviews were submitted intentionally by individuals seeking to use AI to streamline the review process. This is supported by reports that AI-generated text was flagged and by the development of tools to detect such submissions. However, the extent of intentional misuse remains uncertain.

- Hypothesis B: The AI-generated reviews resulted from unintentional misuse or misunderstanding of AI tools by researchers or reviewers. This hypothesis is less well supported, given the systematic nature of the findings and the availability of tools specifically designed to detect AI-generated content.

- Assessment: Hypothesis A is currently better supported, given the systematic pattern revealed by AI-content detection and the subsequent actions taken by conference organizers. Evidence of widespread misunderstanding of AI tools within the academic community could shift this judgment.

3. Key Assumptions and Red Flags

- Assumptions: AI tools are readily accessible and capable of generating convincing peer reviews; conference organizers have adequate detection mechanisms; the academic community is generally aware of AI capabilities.

- Information Gaps: The exact number of AI-generated reviews and the identities of those responsible remain unclear; the effectiveness of detection tools in diverse contexts is not fully known.

- Bias & Deception Risks: Potential bias in reporting from entities with vested interests in AI technology; risk of manipulation by parties seeking to undermine academic integrity.

4. Implications and Strategic Risks

This development could lead to increased scrutiny of AI applications in academic settings and prompt broader discussions on ethical AI use. Over time, it may influence policy decisions regarding AI regulation and academic integrity standards.

- Political / Geopolitical: May prompt international discourse on AI ethics and regulation, influencing policy frameworks.

- Security / Counter-Terrorism: Limited direct impact, but highlights vulnerabilities in digital systems that could be exploited in other domains.

- Cyber / Information Space: Raises concerns about the reliability of AI-generated content and the need for robust verification mechanisms.

- Economic / Social: Potentially undermines trust in academic publications, affecting research funding and collaboration.

5. Recommendations and Outlook

- Immediate Actions (0–30 days): Implement and enhance AI detection tools at academic conferences; conduct a thorough investigation to identify responsible parties.

- Medium-Term Posture (1–12 months): Develop guidelines for AI use in academic settings; foster partnerships with AI ethics organizations to promote responsible AI practices.

- Scenario Outlook:

  - Best Case: Effective detection and policy measures restore trust in academic processes.

  - Worst Case: Continued misuse of AI erodes the credibility of academic publications.

  - Most Likely: Incremental improvements in detection and policy lead to a gradual restoration of trust.

6. Key Individuals and Entities

- Graham Neubig, AI researcher, Carnegie Mellon University

- Max Spero, Chief Executive, Pangram Labs

- Bharath Hariharan, Senior Programme Chair, ICLR

- Desmond Elliott, Computer Scientist, University of Copenhagen


7. Thematic Tags

Structured Analytic Techniques Applied

- Adversarial Threat Simulation: Model and simulate actions of cyber adversaries to anticipate vulnerabilities and improve resilience.

- Indicators Development: Detect and monitor behavioral or technical anomalies across systems for early threat detection.

- Bayesian Scenario Modeling: Quantify uncertainty and predict cyberattack pathways using probabilistic inference.
