New Reprompt Attack Enables One-Click Data Theft from Microsoft Copilot, Bypassing Security Measures

Published on: 2026-01-15

AI-powered OSINT brief from verified open sources. Automated NLP signal extraction with human verification. See our Methodology and Why WorldWideWatchers.

Intelligence Report: Researchers Reveal Reprompt Attack Allowing Single-Click Data Exfiltration From Microsoft Copilot

1. BLUF (Bottom Line Up Front)

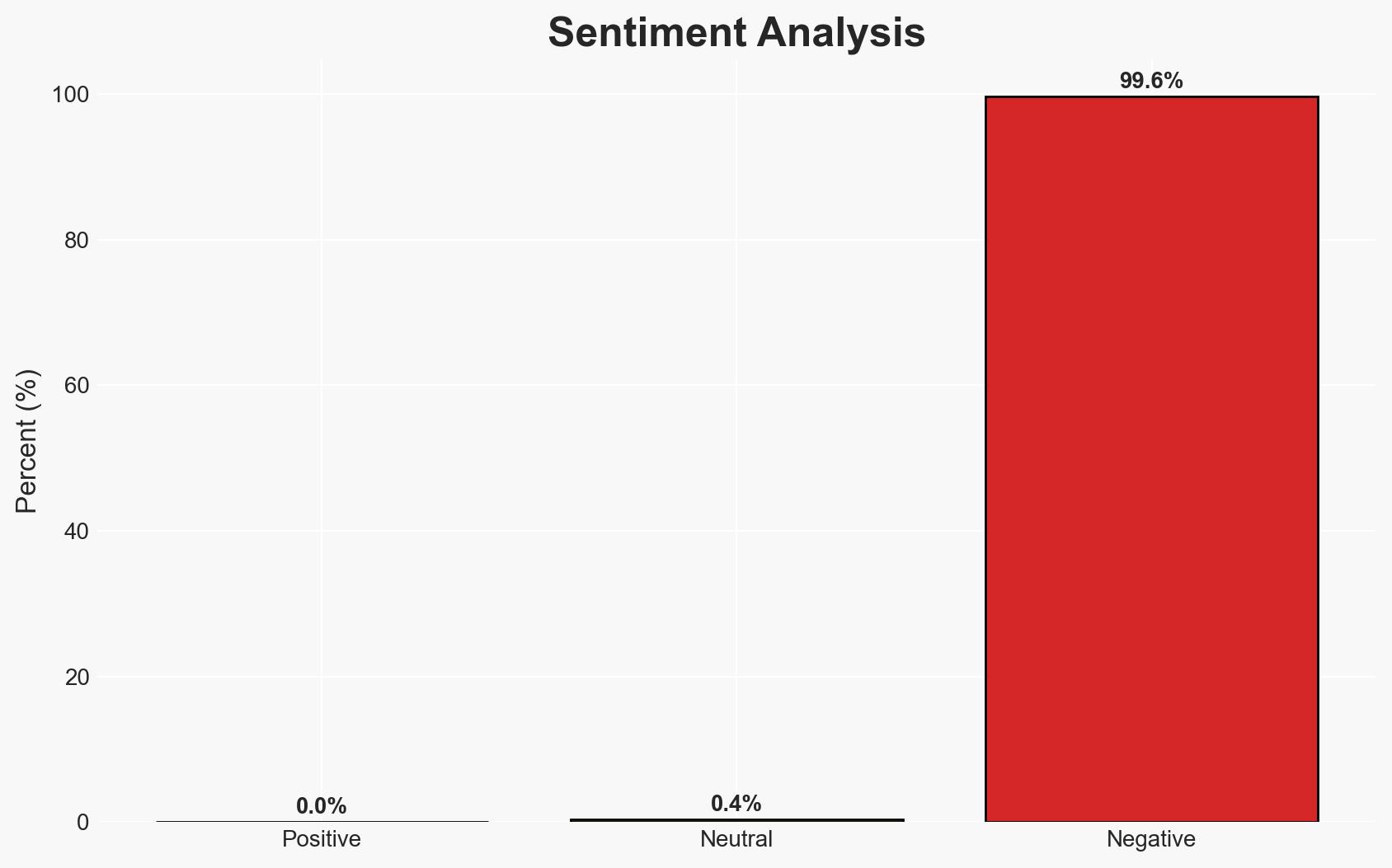

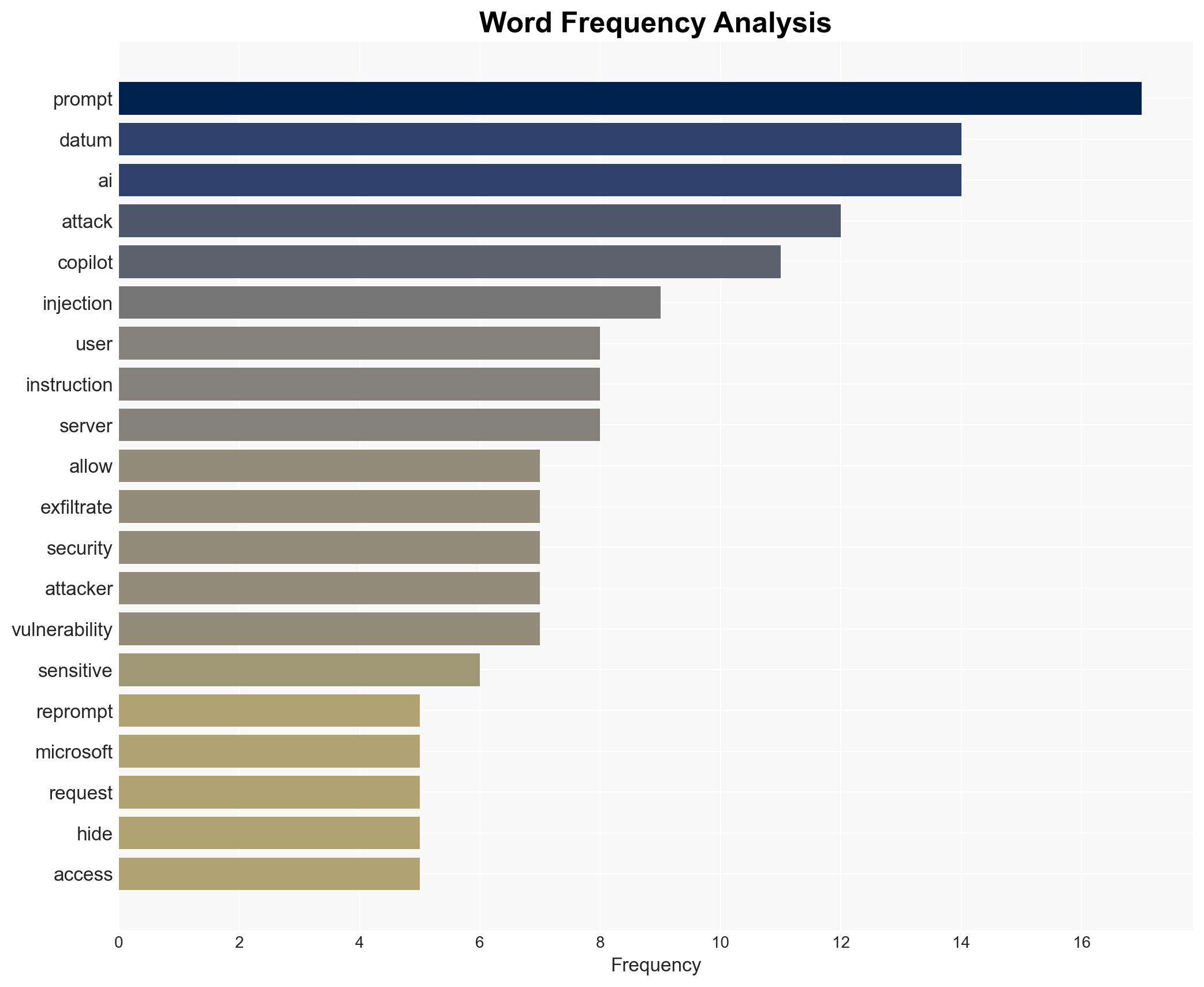

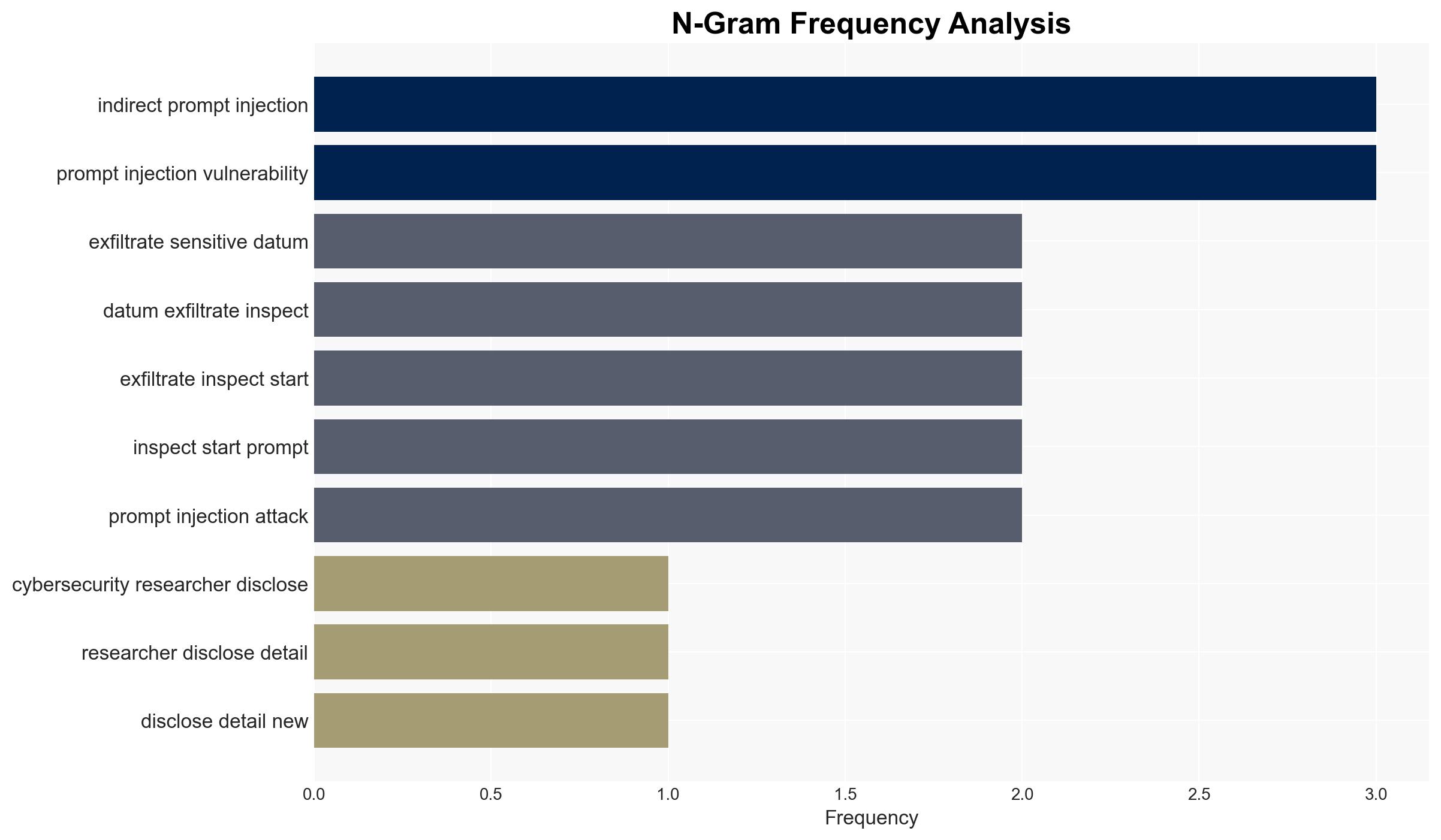

The Reprompt attack method poses a significant cybersecurity threat by enabling data exfiltration from AI chatbots like Microsoft Copilot with minimal user interaction. The attack exploits vulnerabilities in AI systems’ instruction parsing, bypassing enterprise security controls. Although Microsoft has addressed the issue, the potential for similar exploits remains. Overall confidence in this assessment is moderate.

2. Competing Hypotheses

- Hypothesis A: The Reprompt attack represents a novel and isolated vulnerability specific to Microsoft Copilot, effectively mitigated by recent security updates. Supporting evidence includes Microsoft’s response and the specificity of the attack vector. However, uncertainties remain about the robustness of the fix and potential similar vulnerabilities in other AI systems.

- Hypothesis B: The Reprompt attack is indicative of a broader class of vulnerabilities inherent in AI chatbots, suggesting systemic issues with AI instruction parsing. This hypothesis is supported by the general nature of the attack method, which exploits common AI weaknesses. Contradicting evidence includes the lack of reported similar attacks on other platforms.

- Assessment: Hypothesis B is currently better supported due to the fundamental nature of the vulnerabilities exploited, which are likely present in other AI systems. Future indicators that could shift this judgment include reports of similar attacks on other platforms or further security measures by AI developers.

3. Key Assumptions and Red Flags

- Assumptions: AI systems have similar vulnerabilities; Microsoft’s fix is effective; attackers will continue to exploit AI weaknesses.

- Information Gaps: Details on the effectiveness of Microsoft’s security update; evidence of similar vulnerabilities in other AI platforms.

- Bias & Deception Risks: Potential bias in reporting due to reliance on a single security research source; risk of underestimating the adaptability of threat actors.

4. Implications and Strategic Risks

The Reprompt attack could lead to increased scrutiny and regulation of AI systems, impacting their development and deployment. If unaddressed, similar vulnerabilities could be exploited by state or non-state actors, posing significant security risks.

- Political / Geopolitical: Potential for international regulatory discussions on AI security standards.

- Security / Counter-Terrorism: Increased risk of data breaches and espionage activities targeting AI systems.

- Cyber / Information Space: Heightened focus on AI vulnerabilities and the need for robust cybersecurity measures.

- Economic / Social: Potential economic impact on companies reliant on AI technologies; public trust in AI systems may be eroded.

5. Recommendations and Outlook

- Immediate Actions (0–30 days): Conduct a thorough review of AI systems for similar vulnerabilities; enhance monitoring of AI-related cyber threats.

- Medium-Term Posture (1–12 months): Develop partnerships with AI developers to improve security standards; invest in AI-specific cybersecurity research.

- Scenario Outlook: Best: Enhanced AI security measures prevent future exploits. Worst: Widespread exploitation of AI vulnerabilities leads to significant data breaches. Most-Likely: Incremental improvements in AI security with occasional breaches.

6. Key Individuals and Entities

- Microsoft

- Varonis

- Dolev Taler

- Not clearly identifiable from open sources in this snippet.

7. Thematic Tags

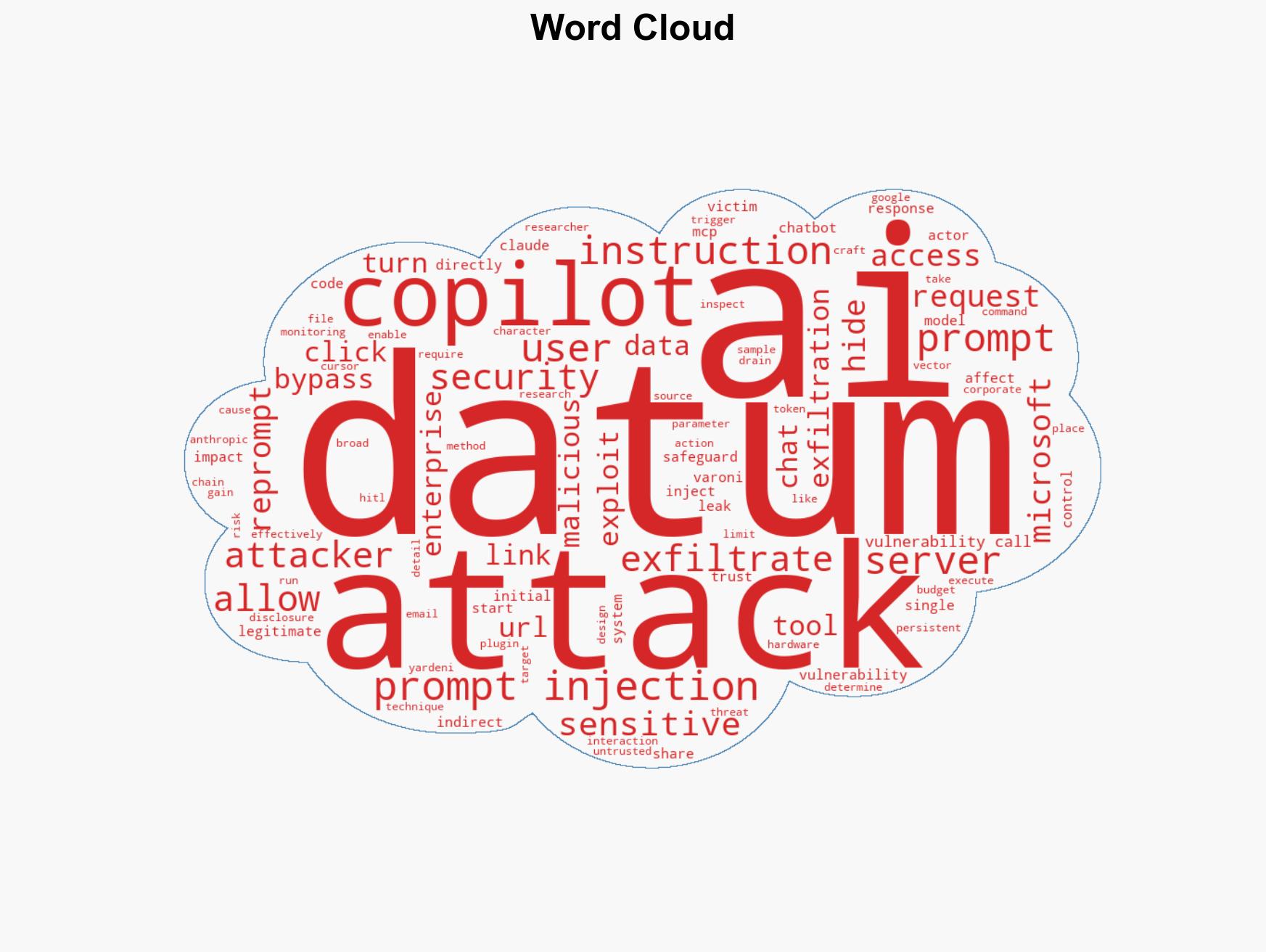

cybersecurity, AI vulnerabilities, data exfiltration, Microsoft Copilot, AI security, cyber threats, information security

Structured Analytic Techniques Applied

- Adversarial Threat Simulation: Model hostile behavior to identify vulnerabilities.

- Indicators Development: Detect and monitor behavioral or technical anomalies across systems for early threat detection.

- Bayesian Scenario Modeling: Forecast futures under uncertainty via probabilistic logic.

Explore more:

Cybersecurity Briefs ·

Daily Summary ·

Support us