Hackers exploit road signs to mislead self-driving cars and drones, researchers warn of new vulnerabilities.

Published on: 2026-02-03

AI-powered OSINT brief from verified open sources. Automated NLP signal extraction with human verification. See our Methodology and Why WorldWideWatchers.

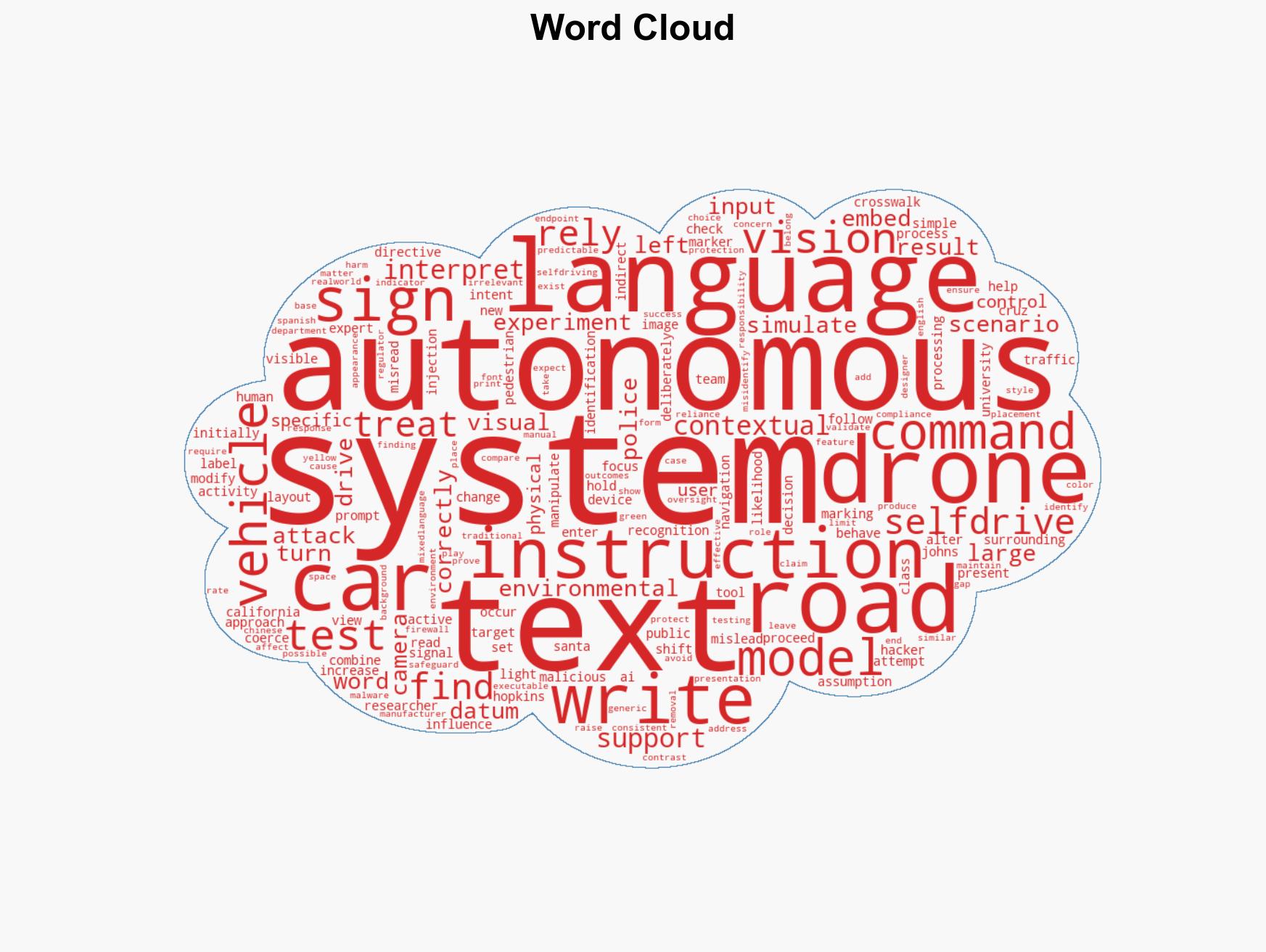

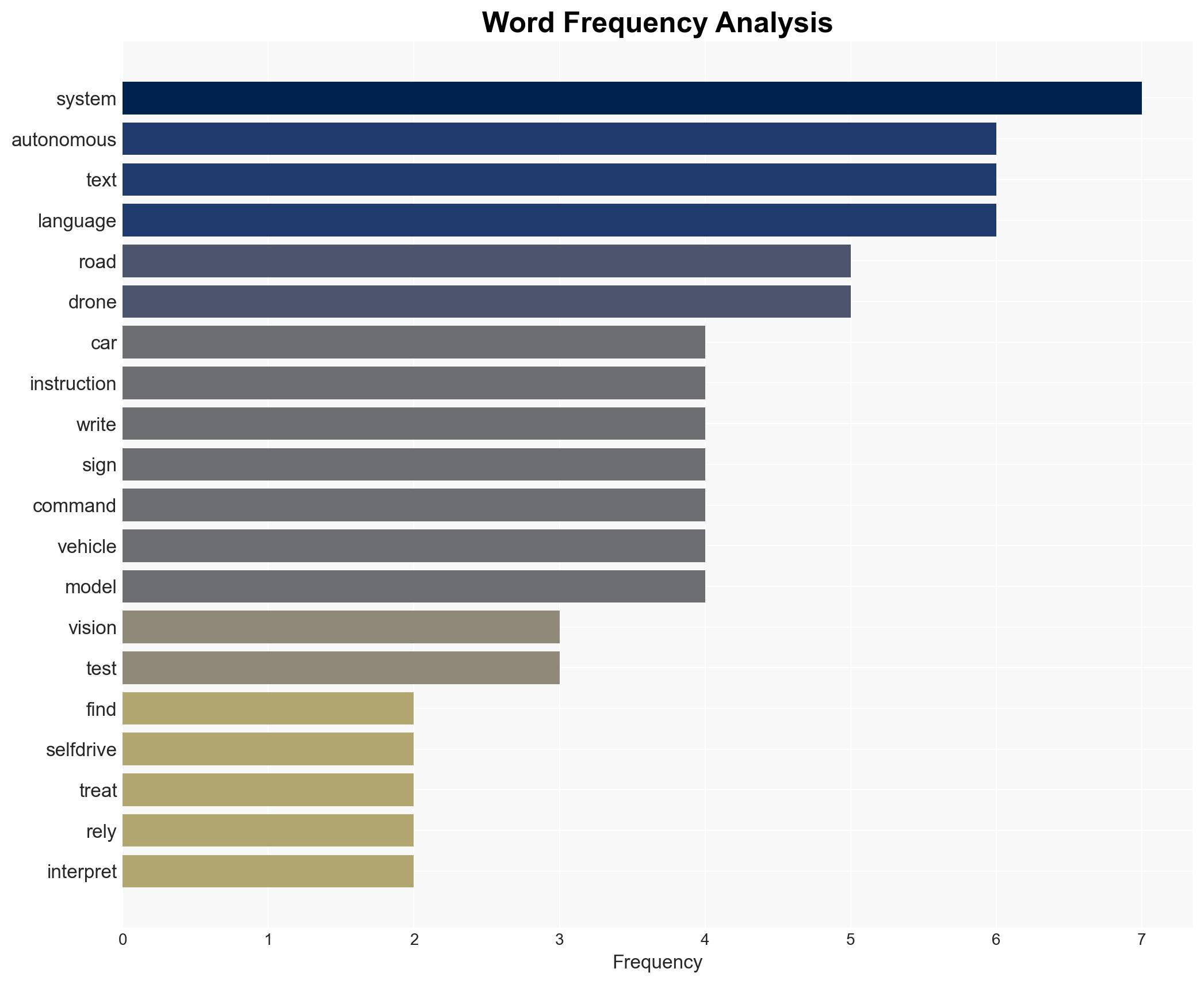

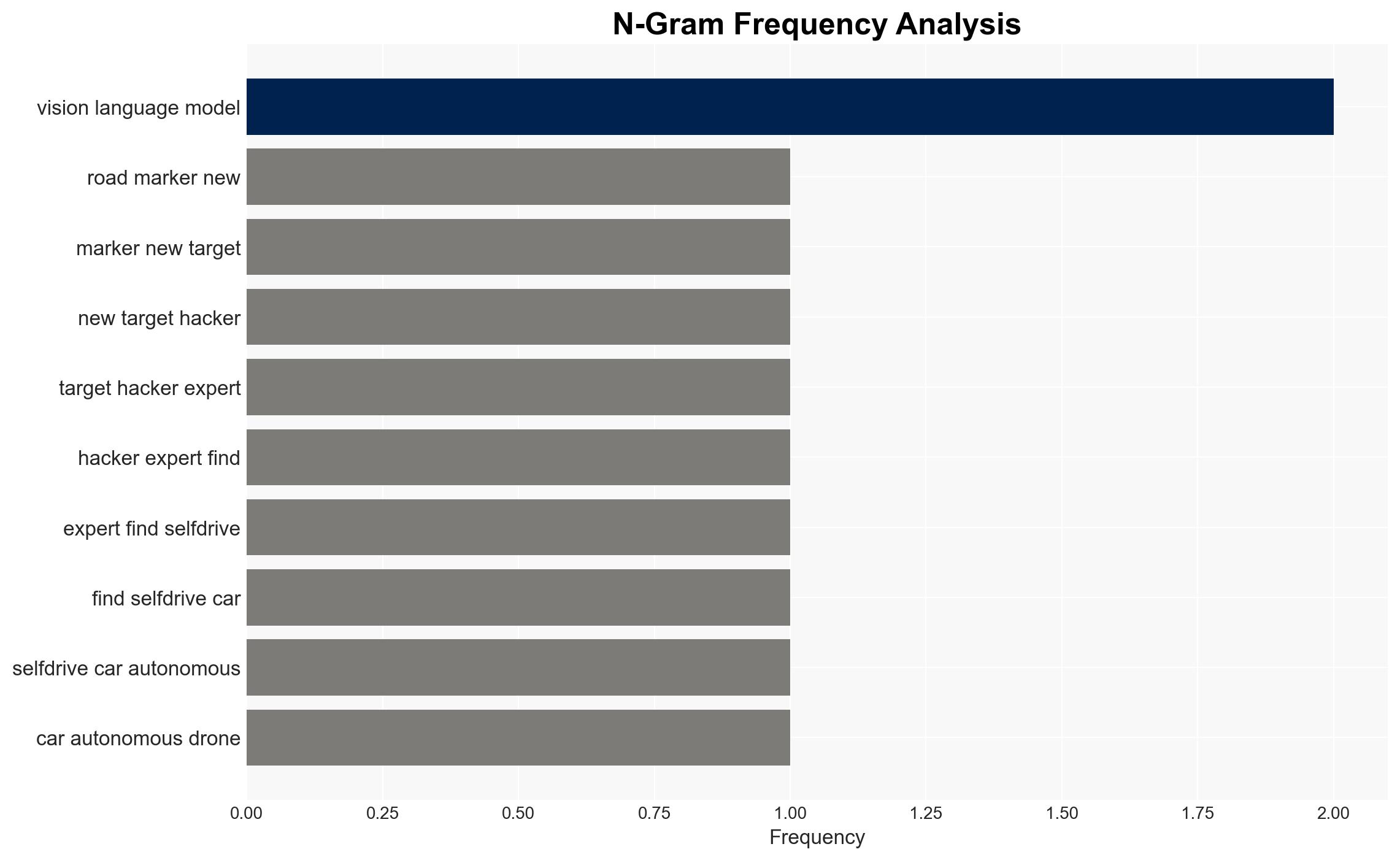

Intelligence Report: Road markers are a new target for hackers – experts find self-driving cars and autonomous drones can be misled by malicious instructions written on road signs

1. BLUF (Bottom Line Up Front)

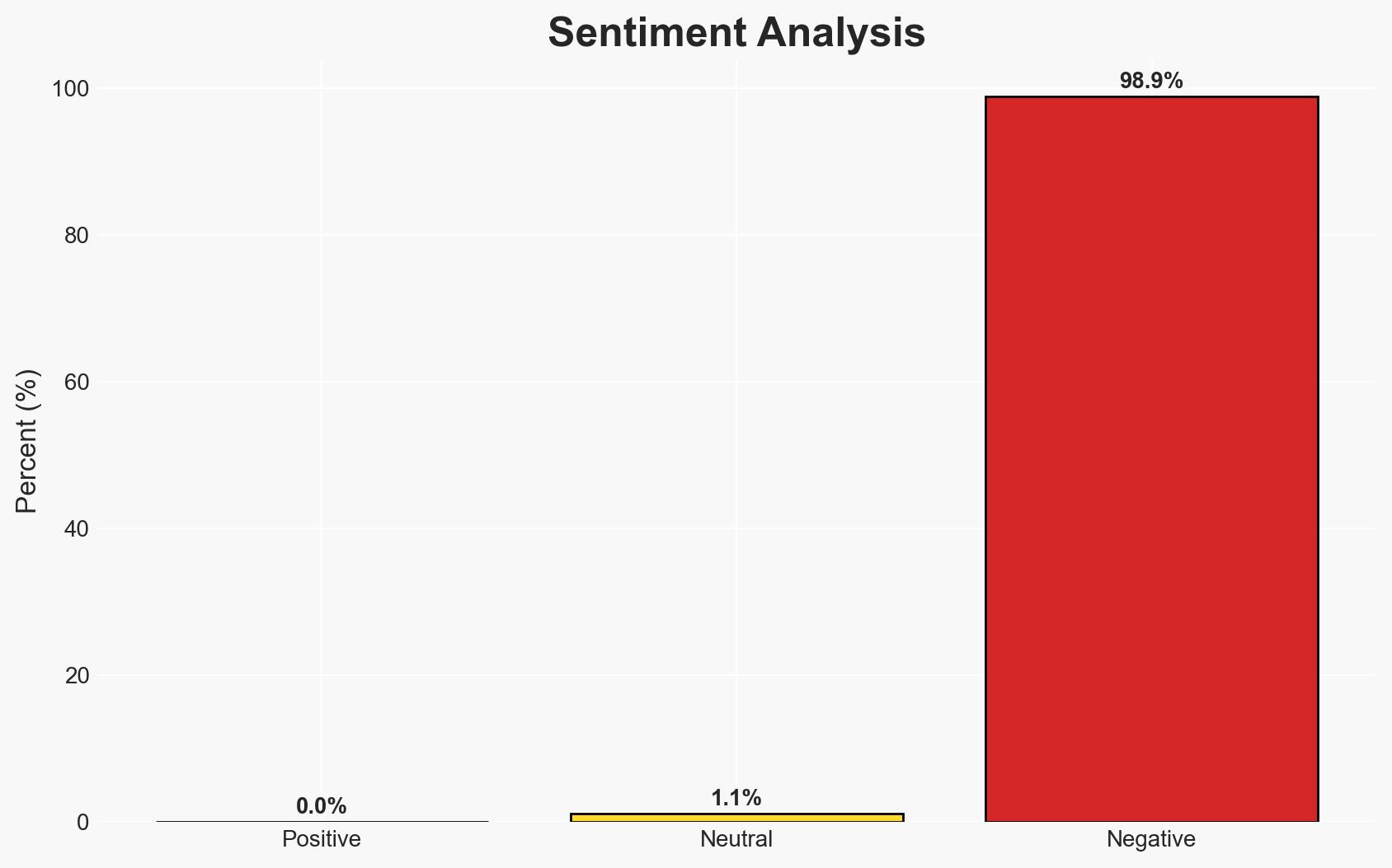

Autonomous vehicles and drones are vulnerable to manipulation through maliciously altered road signs, which can mislead their navigation systems. This vulnerability poses a significant security risk, particularly in urban environments where such systems are increasingly deployed. The most likely hypothesis is that these systems can be systematically exploited, potentially leading to accidents or misuse. Overall confidence in this assessment is moderate, given the controlled nature of the experiments and the lack of real-world testing.

2. Competing Hypotheses

- Hypothesis A: Autonomous systems can be consistently misled by altered road signs, leading to erroneous navigation decisions. This is supported by experimental evidence showing that vision language models can be coerced into following commands embedded in road signs. However, the lack of real-world testing introduces uncertainty about the practical applicability of these findings.

- Hypothesis B: The vulnerability is limited to specific models and scenarios, and real-world systems may have additional safeguards that prevent such exploitation. This hypothesis is less supported due to the lack of evidence showing effective countermeasures in current systems.

- Assessment: Hypothesis A is currently better supported due to the experimental results demonstrating the feasibility of the attack. Key indicators that could shift this judgment include real-world testing results and evidence of effective countermeasures in deployed systems.

3. Key Assumptions and Red Flags

- Assumptions: Autonomous systems rely heavily on visual input for navigation; current models lack robust validation mechanisms for visual data; the findings from controlled environments are applicable to real-world scenarios.

- Information Gaps: Real-world testing data on the vulnerability of deployed autonomous systems; details on existing safeguards in commercial systems; potential for adversarial adaptation to countermeasures.

- Bias & Deception Risks: Potential bias in experimental design favoring successful exploitation; risk of overestimating the vulnerability without considering system diversity and existing defenses.

4. Implications and Strategic Risks

This development could lead to increased scrutiny and regulatory pressure on autonomous vehicle and drone manufacturers to enhance security measures. Over time, it may drive innovation in AI safety and robustness, but also increase the risk of adversarial attacks in urban environments.

- Political / Geopolitical: Potential for international regulatory standards and cooperation to address AI vulnerabilities.

- Security / Counter-Terrorism: Increased risk of exploitation by malicious actors, including terrorists, to disrupt transportation systems.

- Cyber / Information Space: Emergence of new attack vectors targeting AI systems, necessitating advanced cybersecurity measures.

- Economic / Social: Potential impact on public trust in autonomous technologies, affecting market adoption and economic growth.

5. Recommendations and Outlook

- Immediate Actions (0–30 days): Conduct a comprehensive review of current autonomous systems for similar vulnerabilities; initiate collaboration with AI safety researchers to develop immediate mitigation strategies.

- Medium-Term Posture (1–12 months): Develop industry-wide standards for AI robustness; invest in research for advanced validation techniques for visual data in autonomous systems.

- Scenario Outlook:

- Best: Rapid development and deployment of effective countermeasures, leading to increased trust and adoption of autonomous systems.

- Worst: Widespread exploitation of vulnerabilities, resulting in significant accidents and loss of public confidence.

- Most-Likely: Gradual improvement in system security with periodic incidents highlighting ongoing vulnerabilities.

6. Key Individuals and Entities

- Not clearly identifiable from open sources in this snippet.

7. Thematic Tags

cybersecurity, autonomous vehicles, cyber security, AI vulnerabilities, transportation safety, regulatory standards, AI robustness, adversarial attacks

Structured Analytic Techniques Applied

- Adversarial Threat Simulation: Model and simulate actions of cyber adversaries to anticipate vulnerabilities and improve resilience.

- Indicators Development: Detect and monitor behavioral or technical anomalies across systems for early threat detection.

- Bayesian Scenario Modeling: Forecast futures under uncertainty via probabilistic logic.

- Narrative Pattern Analysis: Deconstruct and track propaganda or influence narratives.

Explore more:

Cybersecurity Briefs ·

Daily Summary ·

Support us