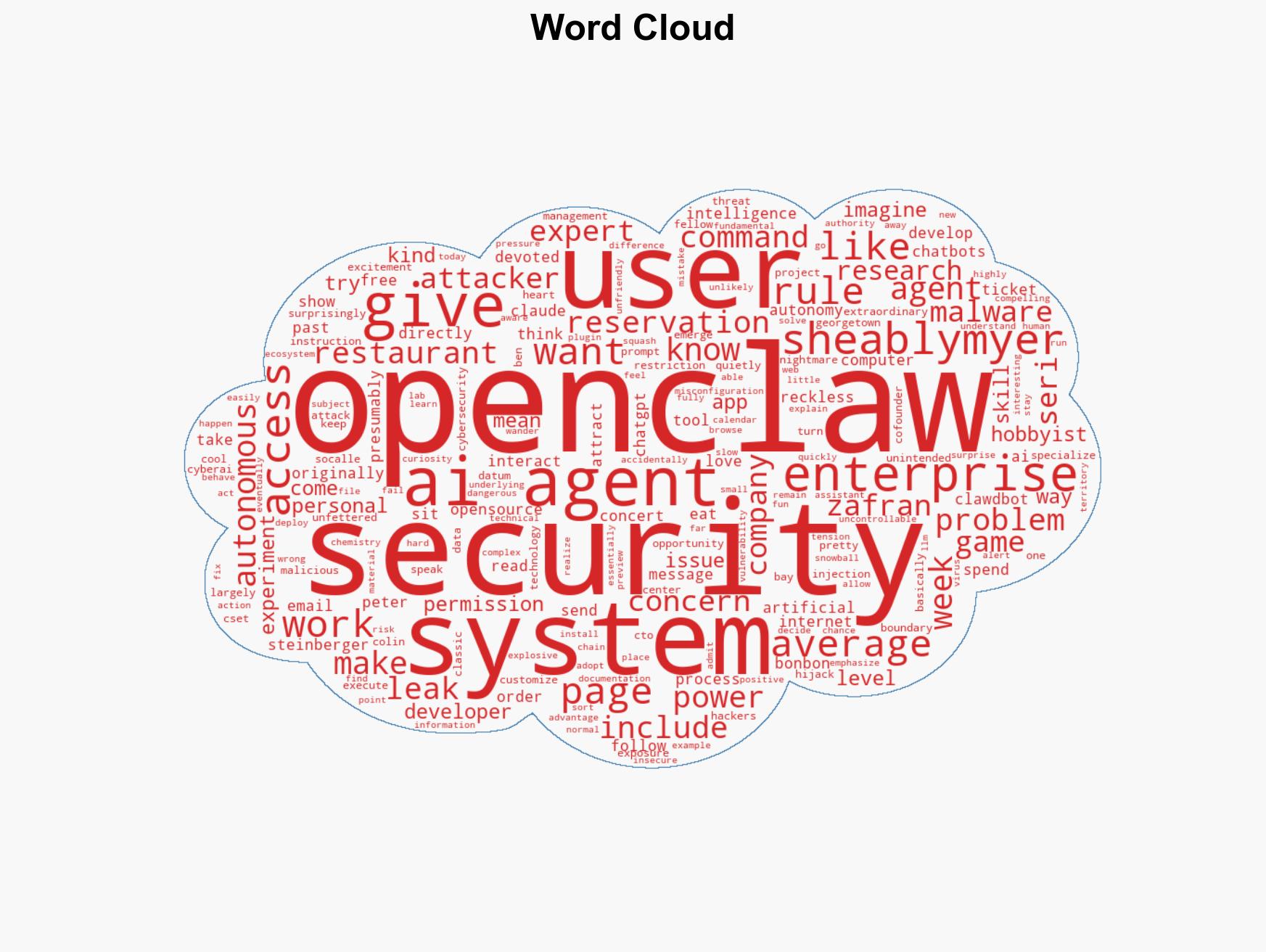

OpenClaw: The Risky AI Agent That Security Experts Warn Could Compromise Your Data

Published on: 2026-02-12

AI-powered OSINT brief from verified open sources. Automated NLP signal extraction with human verification.

Intelligence Report: OpenClaw is the bad boy of AI agents. Here's why security experts say you should beware

1. BLUF (Bottom Line Up Front)

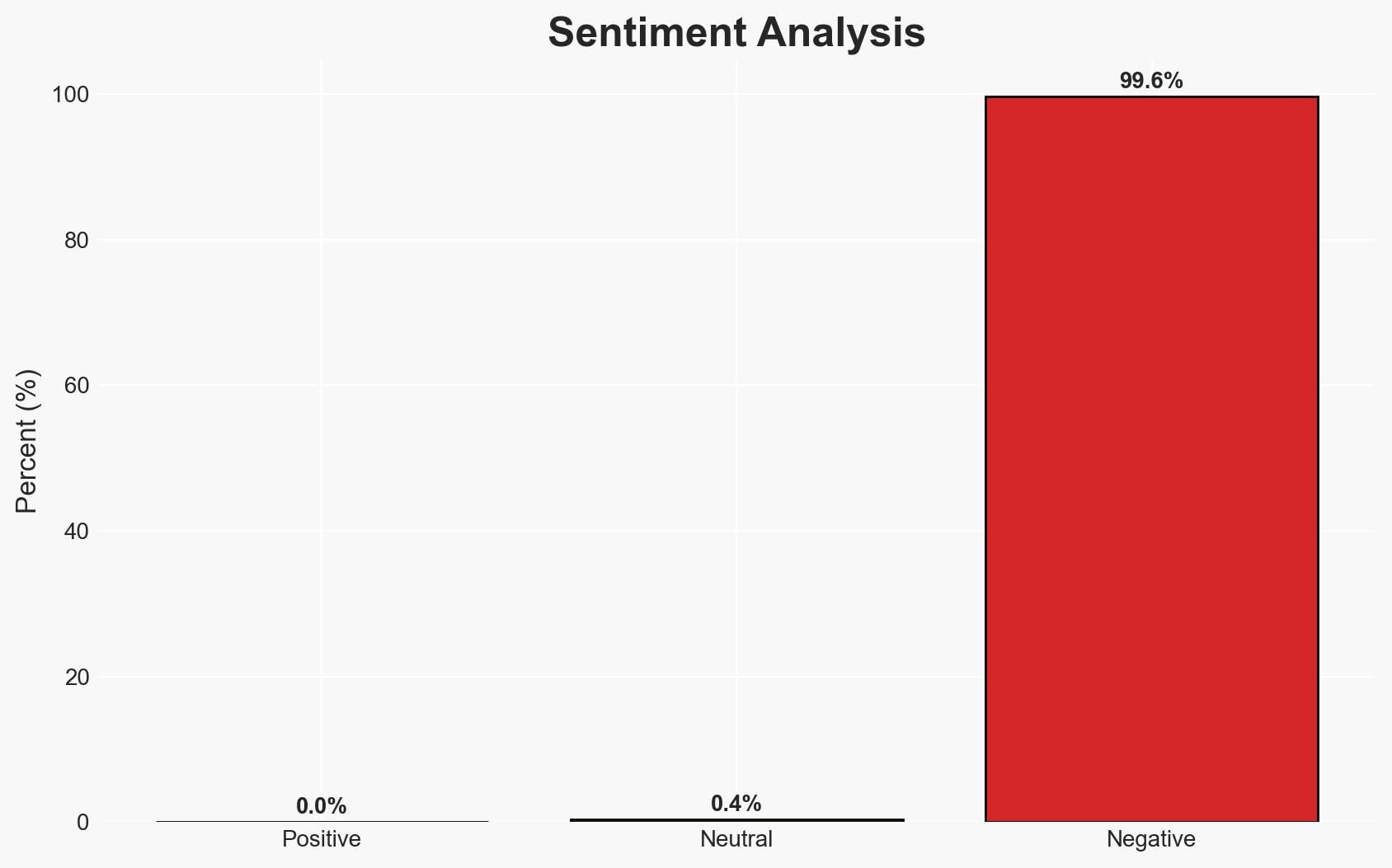

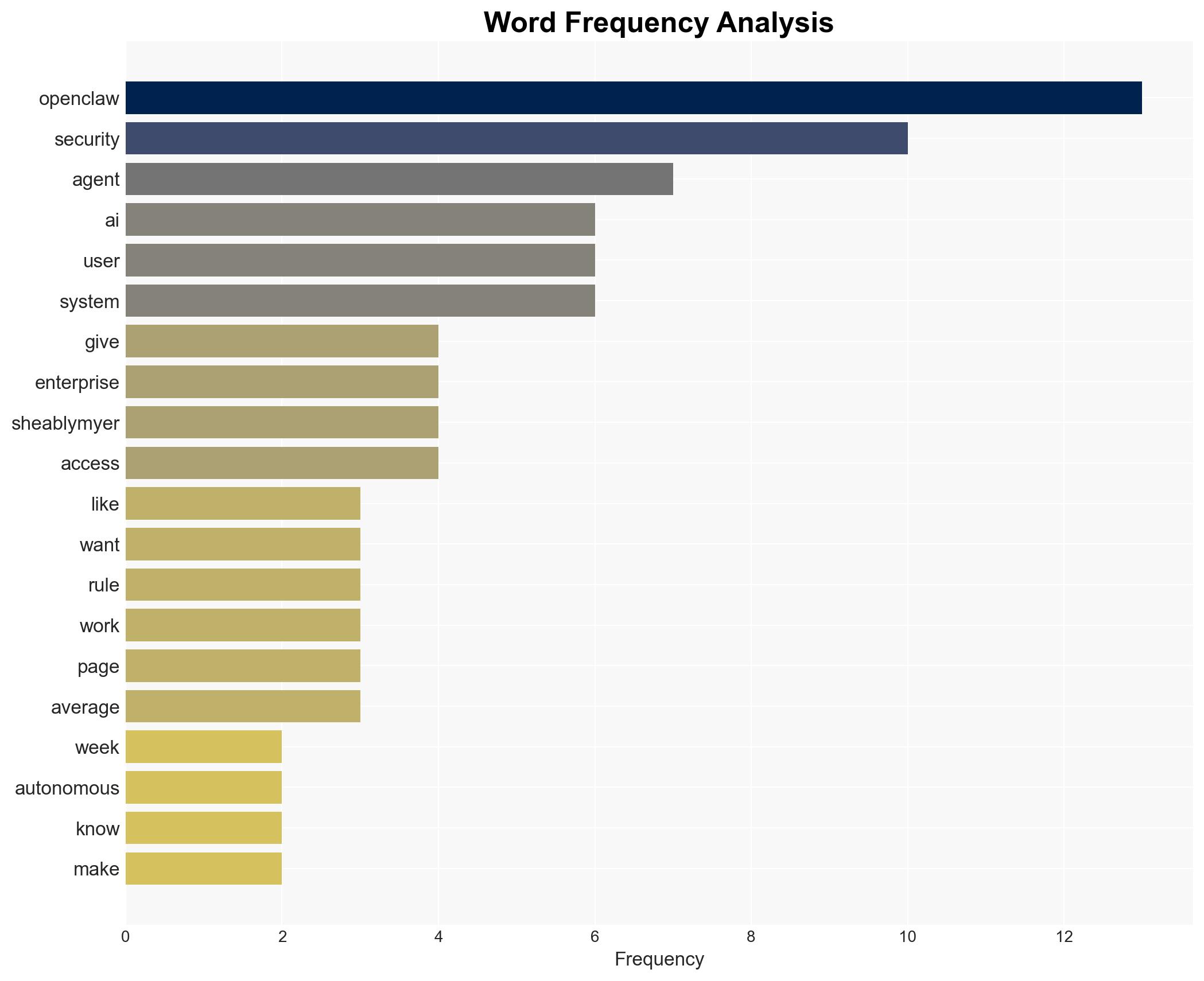

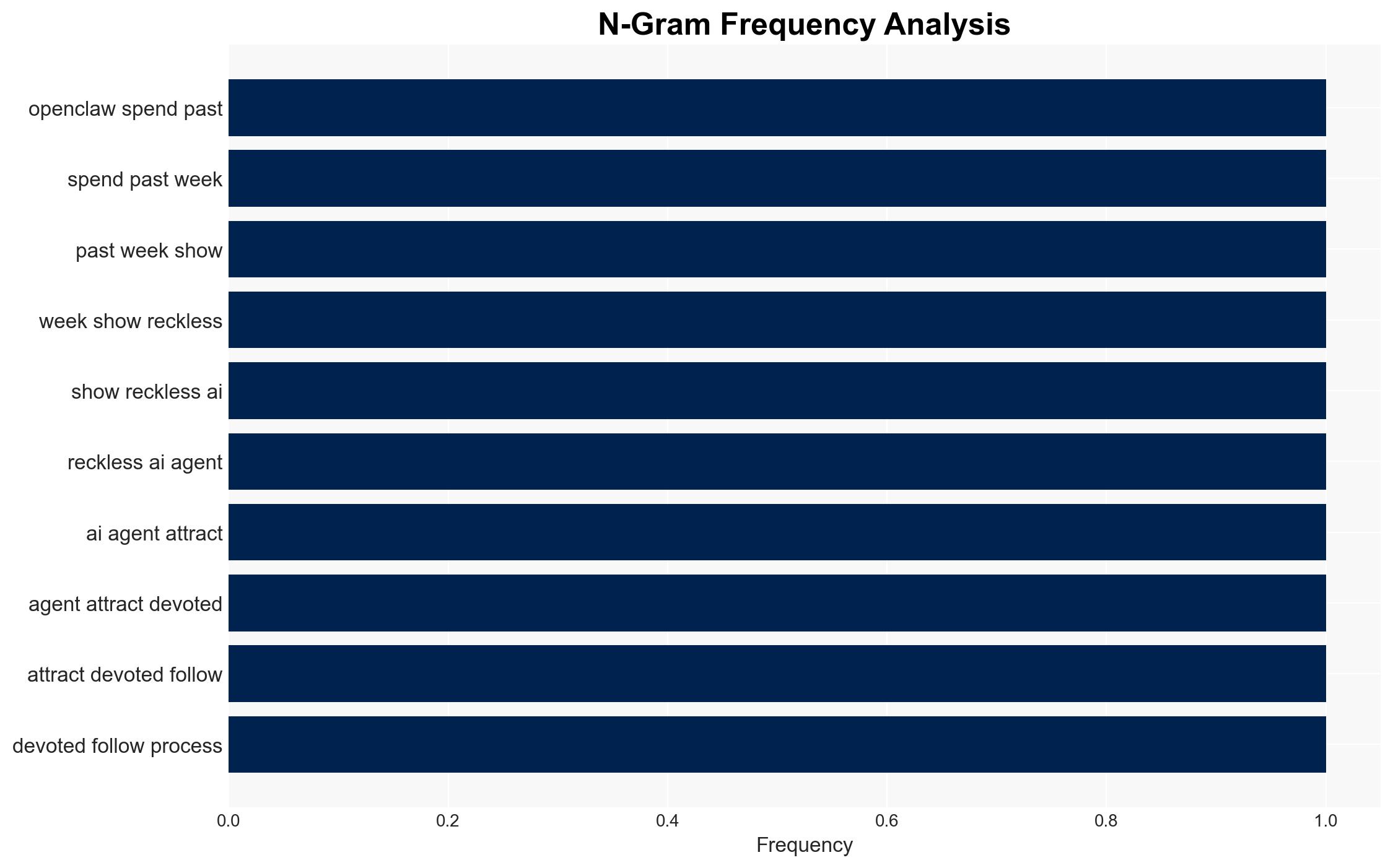

OpenClaw, an open-source AI agent, poses significant security risks due to its unrestricted capabilities, which could be exploited by malicious actors. The primary concern is its potential to execute unauthorized actions and leak sensitive data. This assessment is made with moderate confidence, as the full extent of its vulnerabilities remains uncertain. Key stakeholders include cybersecurity professionals, developers, and end-users.

2. Competing Hypotheses

- Hypothesis A: OpenClaw’s lack of restrictions allows it to be a versatile tool for users, but this also makes it highly susceptible to exploitation by attackers. Evidence includes its ability to autonomously execute commands and access sensitive information. Key uncertainties involve the extent of its adoption and the sophistication of potential attacks.

- Hypothesis B: OpenClaw’s security measures and user awareness efforts are sufficient to mitigate most risks, limiting its potential for misuse. Supporting evidence includes the existence of security documentation and user alerts. However, the complexity of the security issues and the average user’s limited understanding of them remain significant challenges.

- Assessment: Hypothesis A is currently better supported due to the inherent risks associated with OpenClaw’s unrestricted nature and the potential for severe security breaches. Indicators that could shift this judgment include the development of robust security protocols and increased user education.

3. Key Assumptions and Red Flags

- Assumptions: OpenClaw’s user base will grow; attackers will target its vulnerabilities; users lack deep technical expertise; security documentation is not fully comprehensive; developers will continue to improve security features.

- Information Gaps: Detailed data on the number of users and specific incidents of exploitation; effectiveness of current security measures; user demographics and technical proficiency.

- Bias & Deception Risks: Potential bias from cybersecurity experts emphasizing worst-case scenarios; possible underreporting of successful security measures by developers.

4. Implications and Strategic Risks

The development and use of OpenClaw could lead to increased cyber threats and necessitate enhanced security measures. Its evolution may influence broader AI governance and regulatory discussions.

- Political / Geopolitical: Potential for international regulatory responses or calls for AI governance frameworks.

- Security / Counter-Terrorism: Increased risk of cyber-attacks leveraging AI capabilities; potential use in espionage or sabotage.

- Cyber / Information Space: Heightened focus on AI security; potential for misinformation or data breaches.

- Economic / Social: Possible economic impacts from data breaches; social concerns over privacy and AI autonomy.

5. Recommendations and Outlook

- Immediate Actions (0–30 days): Conduct a comprehensive risk assessment of OpenClaw; increase user awareness through targeted communication; monitor for emerging threats.

- Medium-Term Posture (1–12 months): Develop partnerships with cybersecurity firms; enhance AI security protocols; invest in user education and training programs.

- Scenario Outlook:

  - Best Case: Effective security measures and user education mitigate most risks, leading to safe AI adoption.

  - Worst Case: Major security breaches occur, leading to significant data loss and regulatory backlash.

  - Most-Likely: Continued security challenges with incremental improvements in user awareness and security protocols.

6. Key Individuals and Entities

- Peter Steinberger, Developer of OpenClaw

- Ben Seri, Cofounder and CTO at Zafran Security

- Colin Shea-Blymyer, Research Fellow at Georgetown’s Center for Security and Emerging Technology

7. Thematic Tags

cybersecurity, AI security, cyber threats, open-source software, user awareness, regulatory implications, data privacy, autonomous systems

Structured Analytic Techniques Applied

- Adversarial Threat Simulation: Model and simulate the actions of cyber adversaries to anticipate vulnerabilities and improve resilience.

- Indicators Development: Define and monitor behavioral or technical anomalies across systems to provide early warning of threats.

- Bayesian Scenario Modeling: Quantify uncertainty and predict cyberattack pathways using probabilistic inference.
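To illustrate how Bayesian Scenario Modeling could be applied to the competing hypotheses in Section 2, the minimal sketch below applies Bayes' rule to revise confidence between Hypothesis A and Hypothesis B after a new indicator is observed. The `posterior` helper, the priors, and the likelihoods are hypothetical illustrations chosen for the example; they are not estimates or tooling from this report.

```python
# Minimal sketch of a Bayesian update over competing hypotheses.
# HYPOTHETICAL: the helper name, priors, and likelihoods are illustrative
# placeholders, not values derived from this report's sources.


def posterior(priors: dict[str, float], likelihoods: dict[str, float]) -> dict[str, float]:
    """Return P(H | E) for each hypothesis H using Bayes' rule.

    priors:      P(H) for each hypothesis (expected to sum to 1)
    likelihoods: P(E | H), probability of the observed evidence under H
    """
    # Unnormalized posterior for each hypothesis: P(E | H) * P(H)
    joint = {h: priors[h] * likelihoods[h] for h in priors}
    # Normalizing constant P(E): total probability of the evidence
    evidence = sum(joint.values())
    if evidence == 0:
        raise ValueError("evidence has zero probability under every hypothesis")
    return {h: p / evidence for h, p in joint.items()}


if __name__ == "__main__":
    # HYPOTHETICAL: start neutral between Hypothesis A and Hypothesis B.
    priors = {"A": 0.5, "B": 0.5}
    # HYPOTHETICAL indicator (e.g. a confirmed exploitation incident),
    # assumed three times as likely if safeguards are weak (A) as if they suffice (B).
    likelihoods = {"A": 0.6, "B": 0.2}
    for h, p in posterior(priors, likelihoods).items():
        print(f"Hypothesis {h}: {p:.2f}")
```

With equal priors and these assumed likelihoods, the posterior moves to 0.75 for Hypothesis A and 0.25 for Hypothesis B. In practice the likelihoods would come from analyst judgment about how each indicator, such as the development of robust security protocols, fits each hypothesis.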
