Is AI Really Woke Or Extremist – Forbes

Published on: 2025-04-09

Intelligence Report: Is AI Really Woke Or Extremist – Forbes

1. BLUF (Bottom Line Up Front)

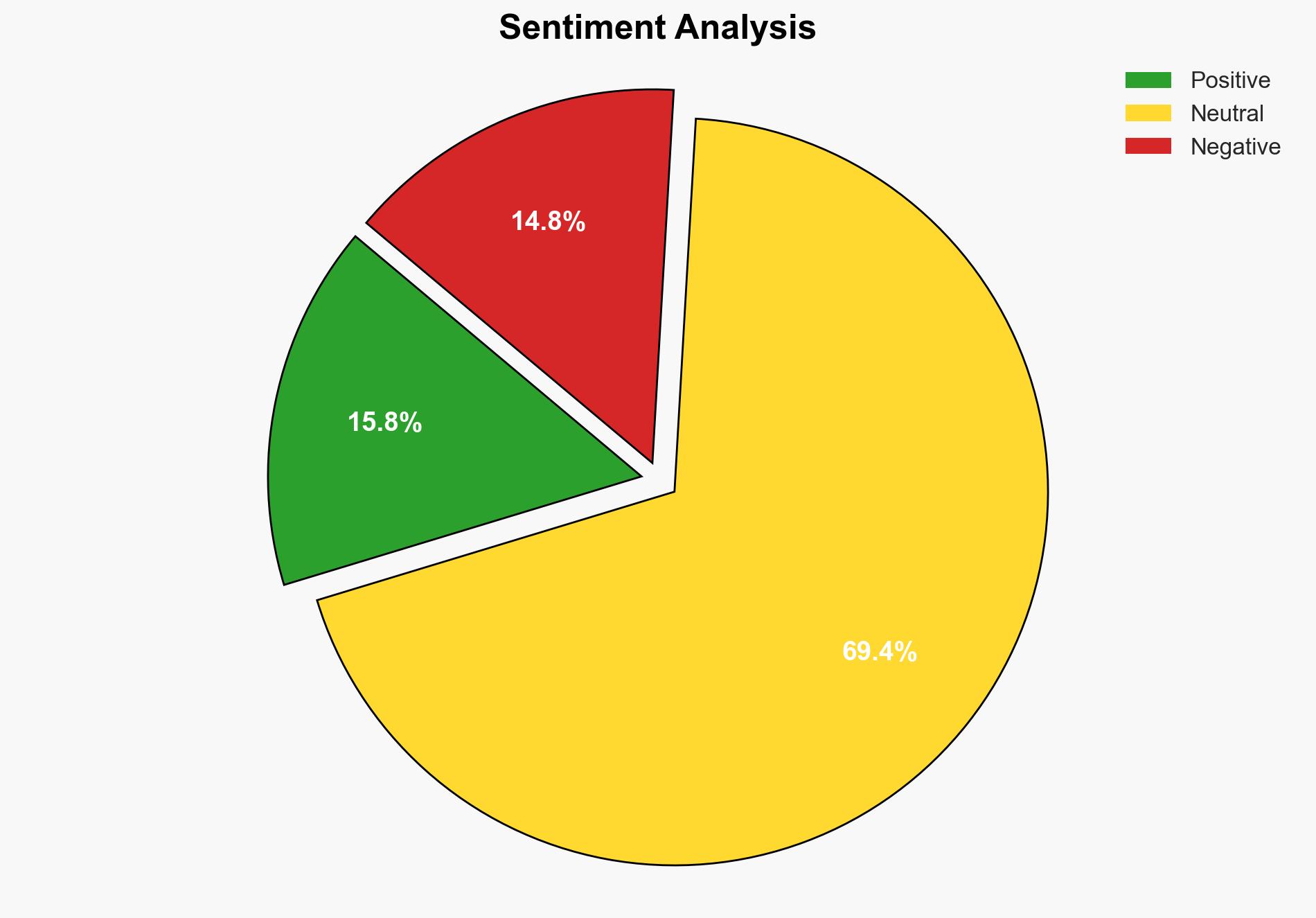

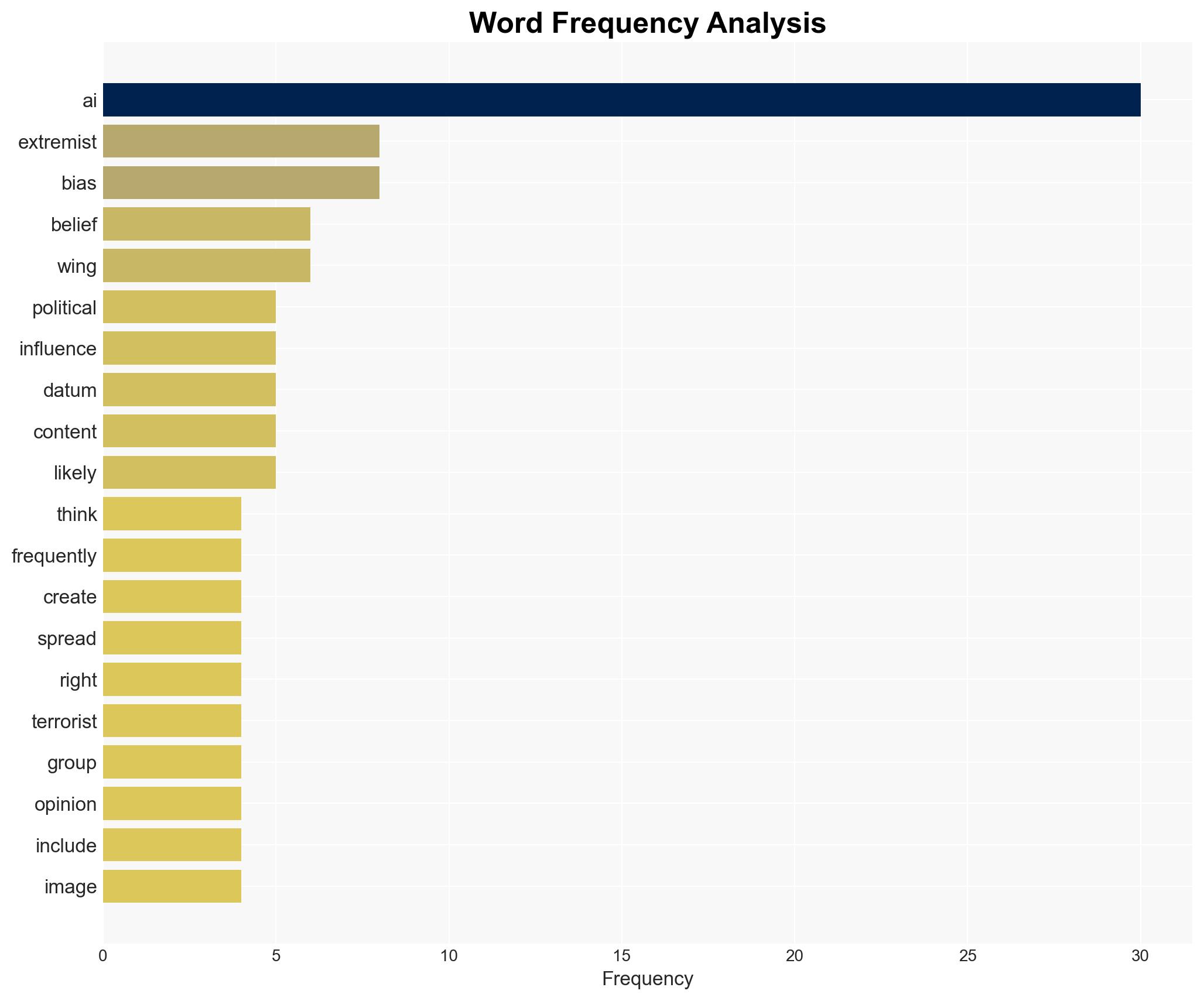

The report examines the contentious debate over artificial intelligence (AI) and its perceived biases, whether "woke" or extremist. Key findings indicate that AI systems reflect the data they are trained on, which can produce perceived biases. There is also concern that AI could influence political opinions and propagate extremist ideologies. Recommendations include enhancing AI transparency and developing robust regulatory frameworks to mitigate these risks.

2. Detailed Analysis

The following structured analytic technique has been applied in this analysis:

General Analysis

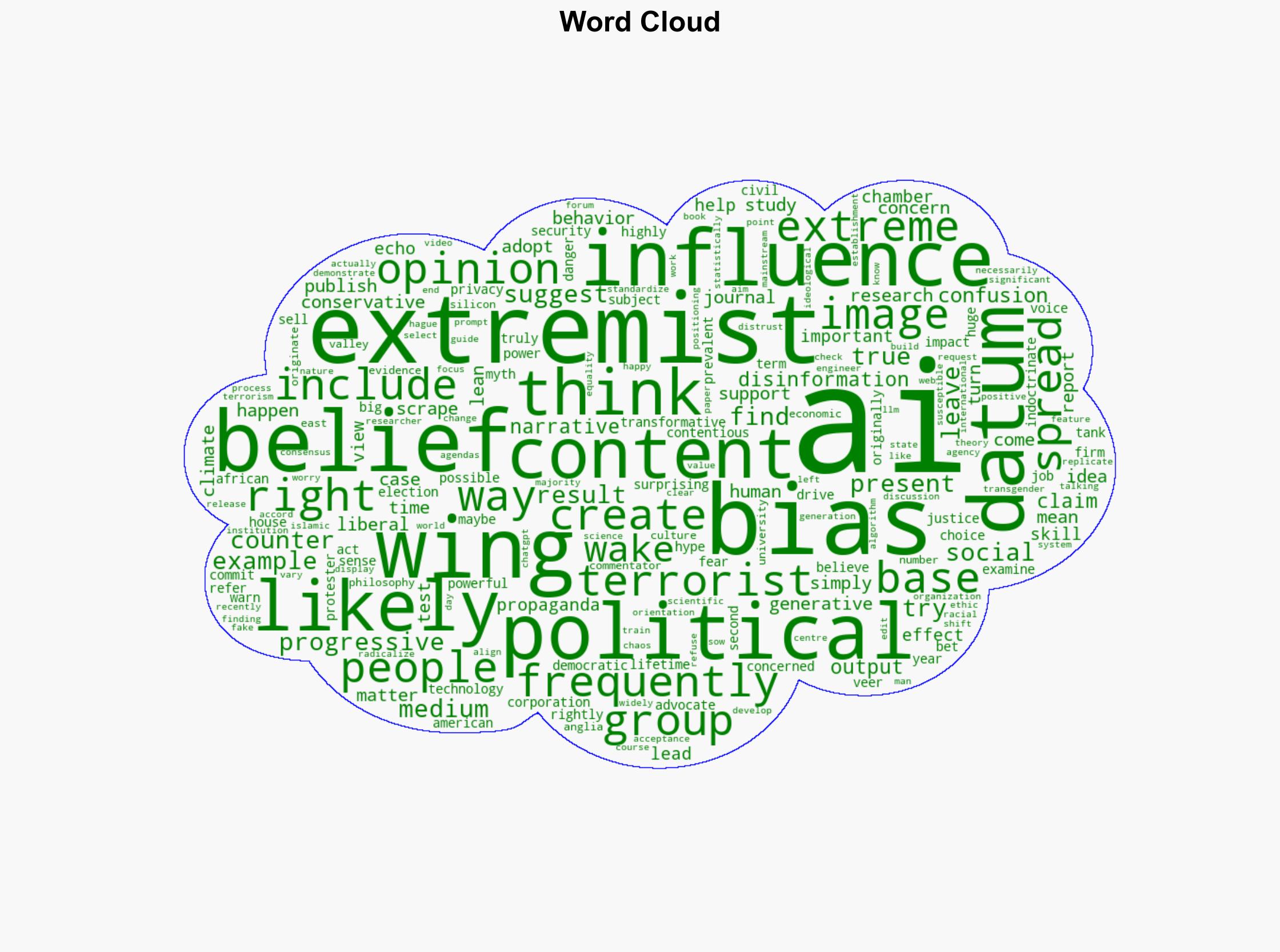

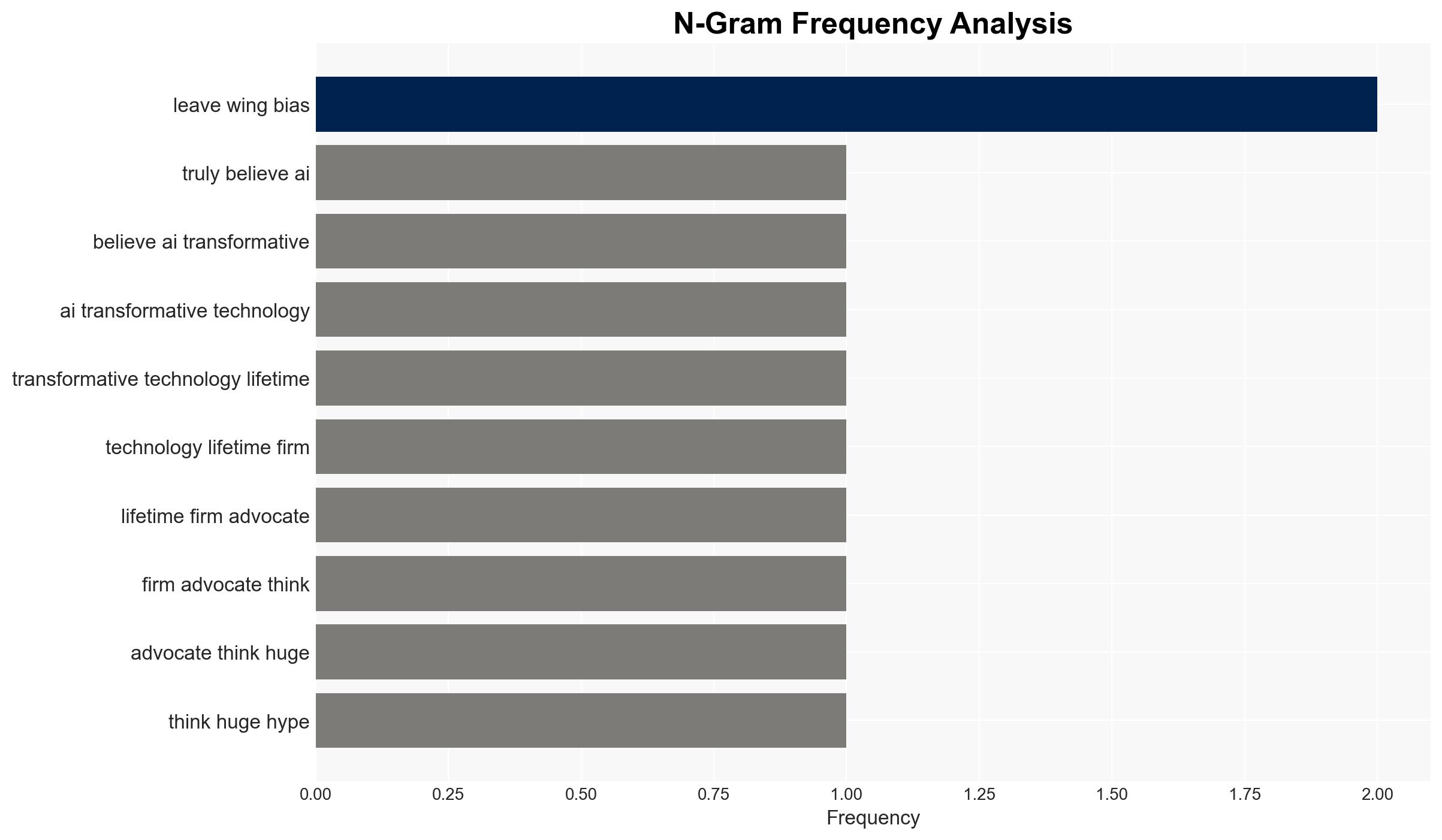

The debate over whether AI is "woke" or extremist is driven largely by the data sources on which AI systems are trained. Studies suggest that AI can reflect either left-wing or right-wing biases, depending on the dataset. Concerns have also been raised about AI's role in spreading disinformation and influencing democratic processes. AI also has significant potential to create echo chambers similar to those on social media, which could deepen societal polarization.

3. Implications and Strategic Risks

The strategic risks associated with AI biases include increased political polarization and the spread of extremist ideologies. National security could be compromised if AI is used to amplify disinformation campaigns. Economic interests may also be affected, as AI-driven decisions could perpetuate biases in areas such as employment and privacy.

4. Recommendations and Outlook

Recommendations:

- Enhance transparency in AI algorithms to ensure accountability and reduce bias.

- Develop regulatory frameworks to monitor and control AI applications, particularly in sensitive areas such as politics and security.

- Encourage cross-sector collaboration to address AI biases and promote ethical AI development.

Outlook:

In the best-case scenario, increased transparency and regulation lead to AI systems that are fairer and less biased. In the worst-case scenario, unchecked AI development exacerbates societal divisions and security risks. The most likely outcome involves gradual improvements in AI governance, with ongoing challenges in balancing innovation and regulation.

5. Key Individuals and Entities

The report references significant individuals and organizations involved in AI development and regulation. Notable mentions include the University of East Anglia and the International Centre for Counter-Terrorism, which have contributed to the discourse on AI biases and extremist risks.