A Scanning Error Created a Fake Science Term. Now AI Won’t Let It Die – Gizmodo.com

Published on: 2025-04-17

Intelligence Report: A Scanning Error Created a Fake Science Term. Now AI Won’t Let It Die – Gizmodo.com

1. BLUF (Bottom Line Up Front)

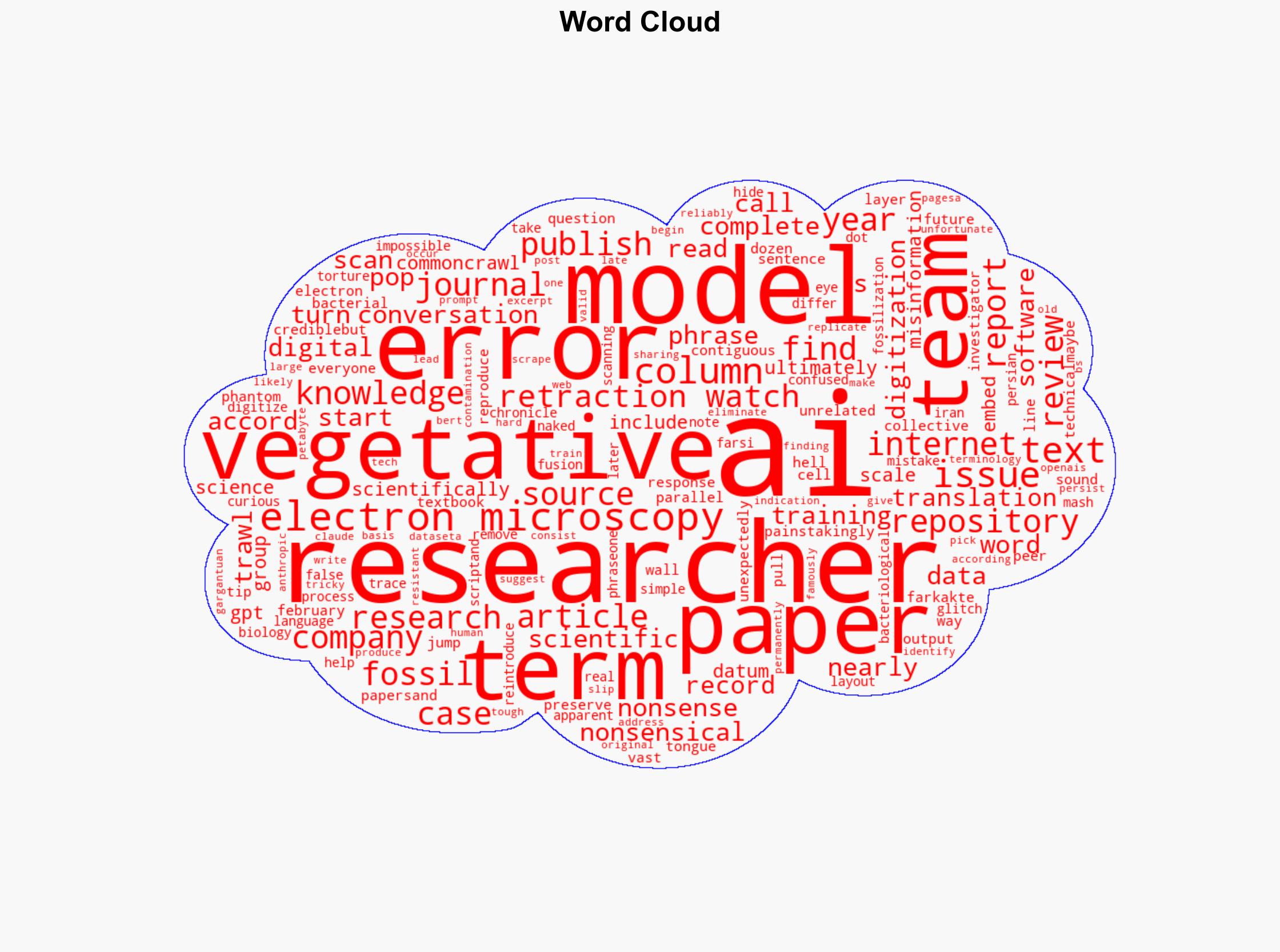

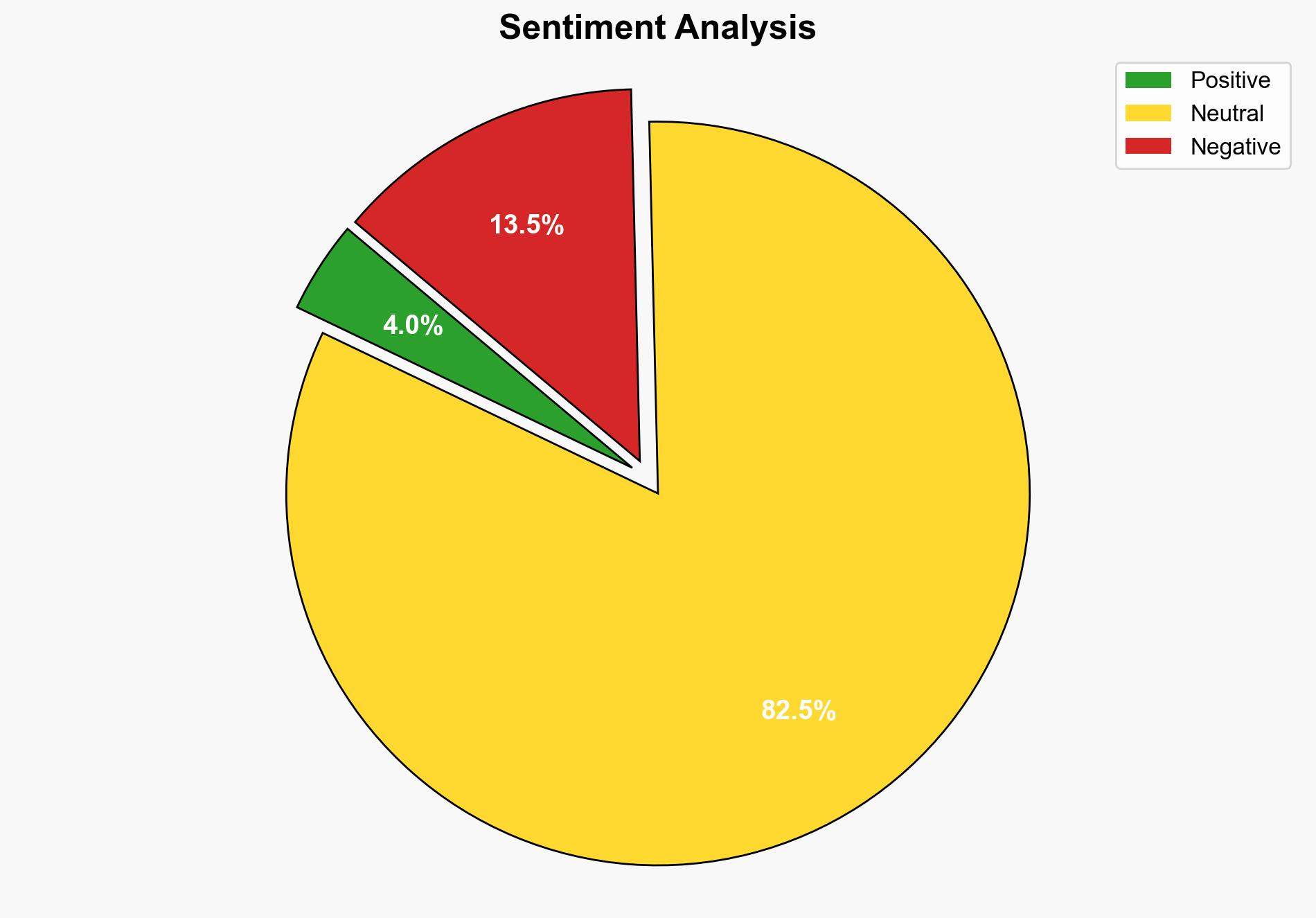

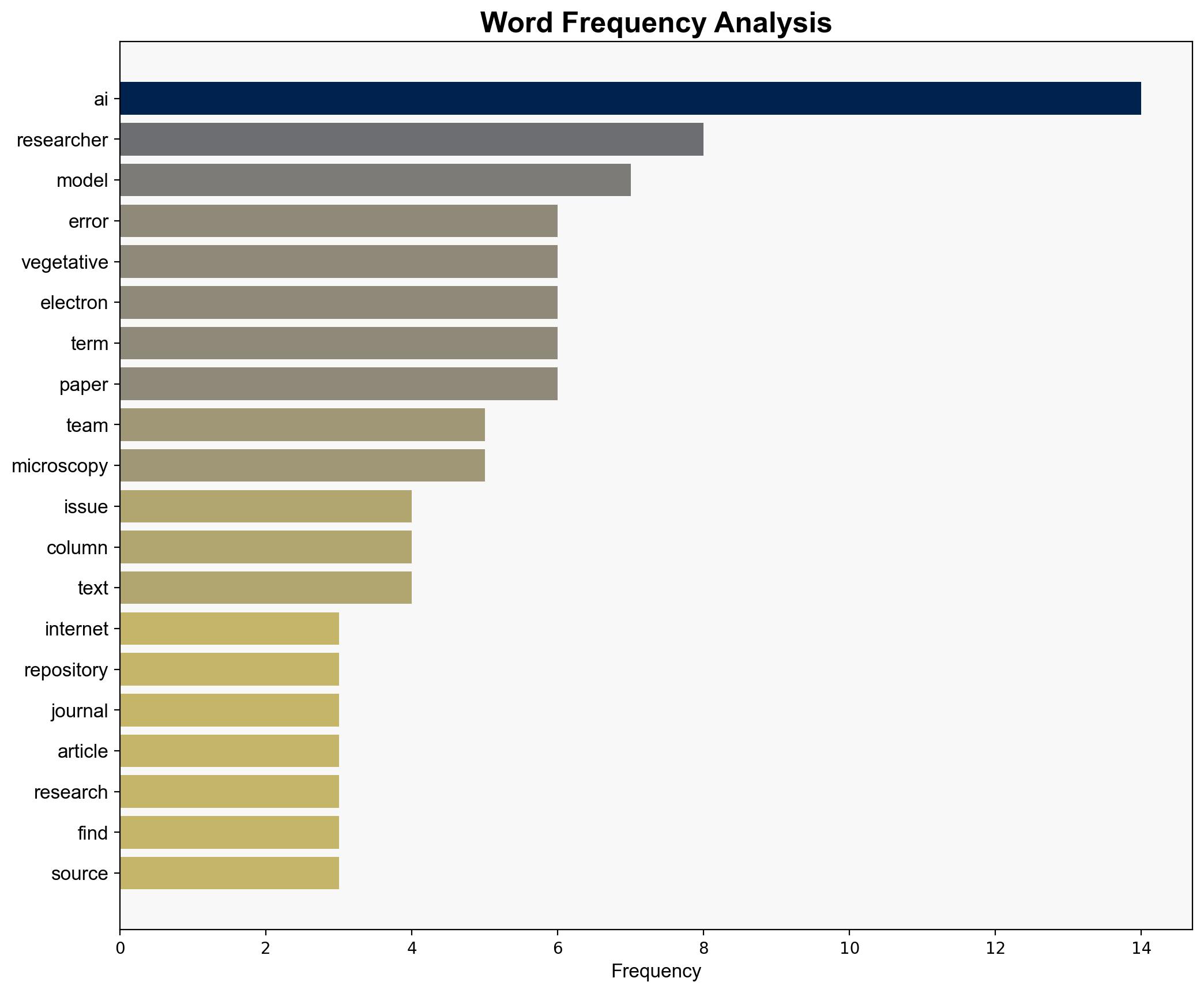

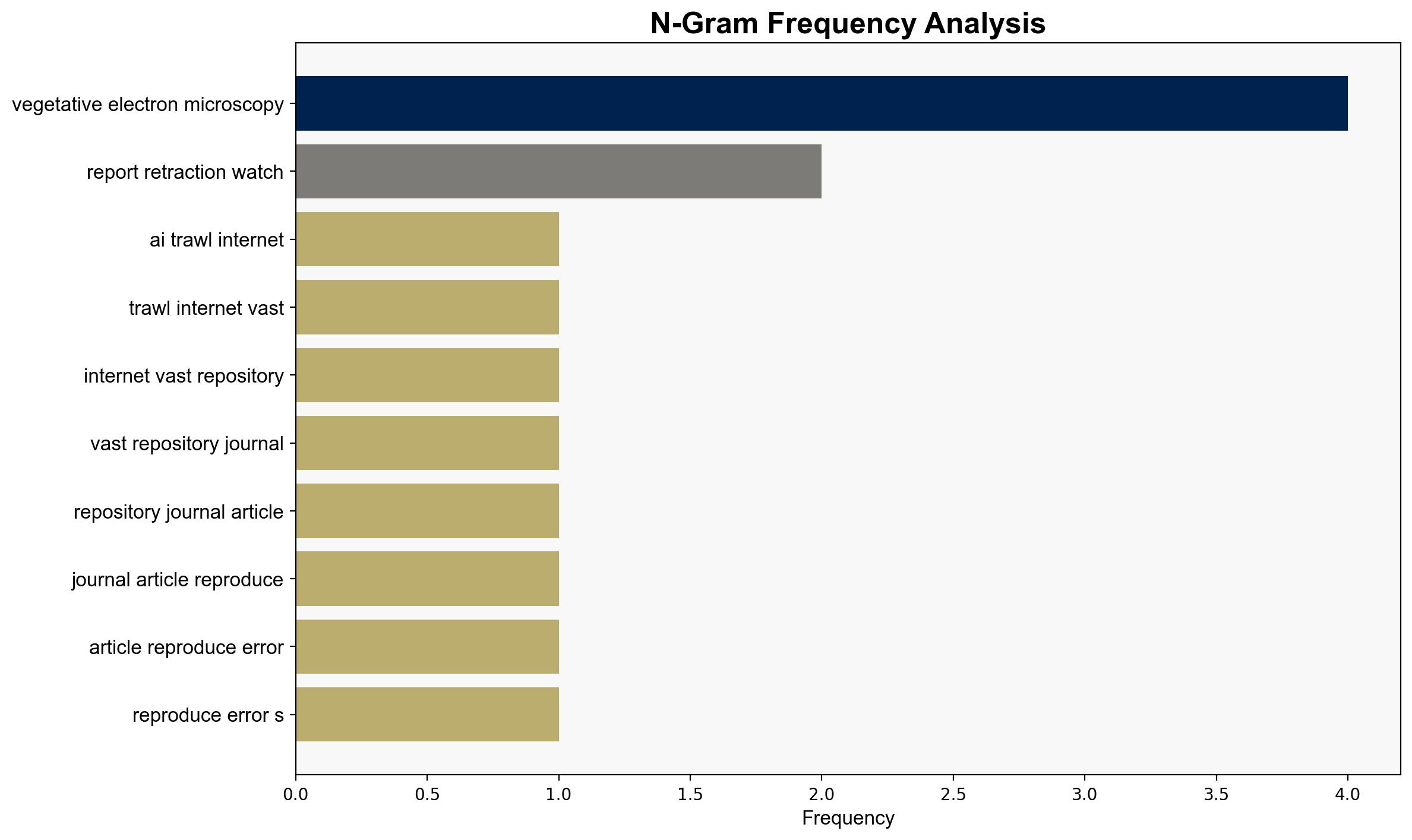

The nonsense term “vegetative electron microscopy” highlights a significant problem with the integrity of AI training data. The term originated from a digitization mistake in a 1959 scientific paper and has since been reproduced by AI models, which has helped it appear in modern research. The persistence of such errors threatens the credibility of scientific literature and underscores the need for better data validation in AI systems.

2. Detailed Analysis

The following structured analytic techniques have been applied:

SWOT Analysis

Strengths: AI’s ability to process vast amounts of data quickly can enhance research efficiency.

Weaknesses: Errors in training data can lead to widespread misinformation and undermine scientific credibility.

Opportunities: Developing robust validation mechanisms for AI training data can improve data accuracy and reliability.

Threats: Continued propagation of erroneous information could erode trust in AI-generated content and scientific publications.

Cross-Impact Matrix

The error’s propagation from a localized digitization mistake to a global issue illustrates how interconnected digital systems can amplify errors. This phenomenon may influence academic and research communities worldwide, potentially affecting funding, policy decisions, and public trust in scientific findings.

Scenario Generation

Scenario 1: Implementation of stricter data validation protocols reduces the occurrence of such errors, restoring confidence in AI-generated content.

Scenario 2: Failure to address data integrity issues leads to increased skepticism towards AI, prompting regulatory interventions and reduced AI adoption in research.

3. Implications and Strategic Risks

The persistence of “digital fossils” (errors such as “vegetative electron microscopy” that become embedded in training data and are then reproduced by AI models) poses a strategic risk to the integrity of scientific research. It could lead to flawed policy-making and resource allocation based on inaccurate data. It may also compromise the credibility of AI technologies in academic and professional settings, affecting future technological advancements and collaborations.

4. Recommendations and Outlook

- Develop and implement comprehensive data validation frameworks to ensure the accuracy of AI training datasets.

- Encourage collaboration between AI developers and domain experts to identify and correct erroneous data entries.

- Promote transparency in AI model training processes to facilitate error detection and correction.

- Scenario-based projections suggest that addressing these issues promptly could enhance AI’s role in research, while neglecting them may lead to increased regulatory scrutiny and reduced trust in AI applications.
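
As a rough illustration of the first recommendation, the sketch below shows one very simple form a validation step could take: screening documents for known artifact phrases before they enter a training set. It is a minimal sketch, not a complete validation framework. The names `KNOWN_ARTIFACTS`, `find_artifacts`, and `filter_corpus` are hypothetical, and the blocklist holds only the one term discussed in this report; in practice such a list would need to be curated by domain experts.

```python
import re

# Hypothetical blocklist of phrases known to be artifacts rather than real terms.
# Only the term discussed in this report is included here.
KNOWN_ARTIFACTS = ["vegetative electron microscopy"]


def find_artifacts(text, phrases=KNOWN_ARTIFACTS):
    """Return (phrase, start_offset) pairs for each blocklisted phrase found in text."""
    hits = []
    for phrase in phrases:
        # Match the words of the phrase separated by any run of whitespace,
        # so a phrase split across a line break is still detected.
        pattern = r"\b" + r"\s+".join(re.escape(w) for w in phrase.split()) + r"\b"
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            hits.append((phrase, match.start()))
    return hits


def filter_corpus(docs, phrases=KNOWN_ARTIFACTS):
    """Split documents into (clean, flagged) lists; flagged ones need human review."""
    clean, flagged = [], []
    for doc in docs:
        if find_artifacts(doc, phrases):
            flagged.append(doc)
        else:
            clean.append(doc)
    return clean, flagged
```

A blocklist like this only catches errors that have already been identified, so it complements expert review rather than replacing it. Flagged documents are set aside instead of deleted, because some of them may be legitimate discussions of the error itself.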

5. Key Individuals and Entities

The report references contributions from researchers and analysts who have identified and documented the issue, as well as AI models like GPT-2, GPT-4o, and Claude 3.5, which have been implicated in the propagation of the error.