New ChatGPT Models Seem to Leave Watermarks on Text – Rumidocs.com

Published on: 2025-04-21

Intelligence Report: New ChatGPT Models Seem to Leave Watermarks on Text – Rumidocs.com

1. BLUF (Bottom Line Up Front)

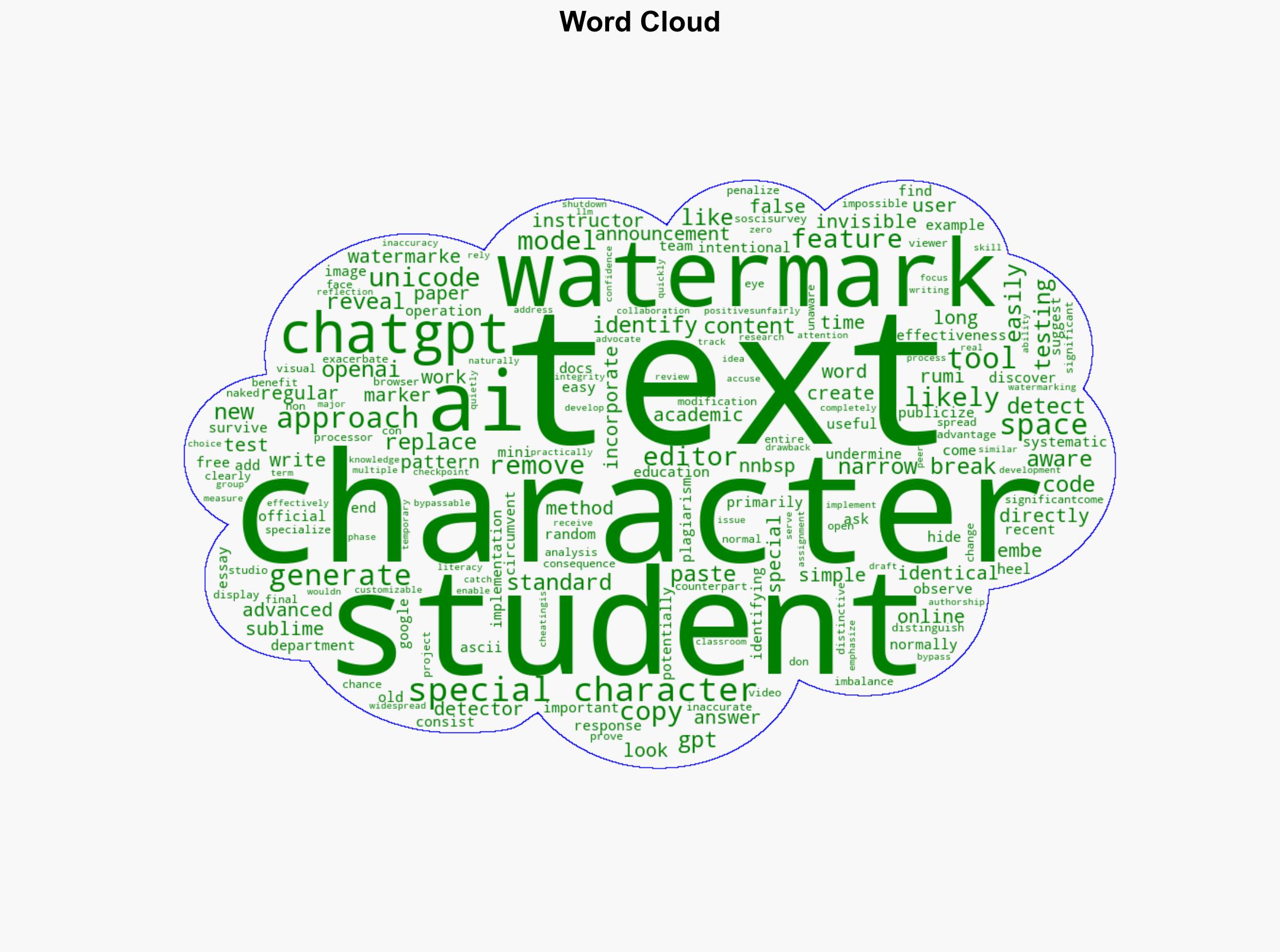

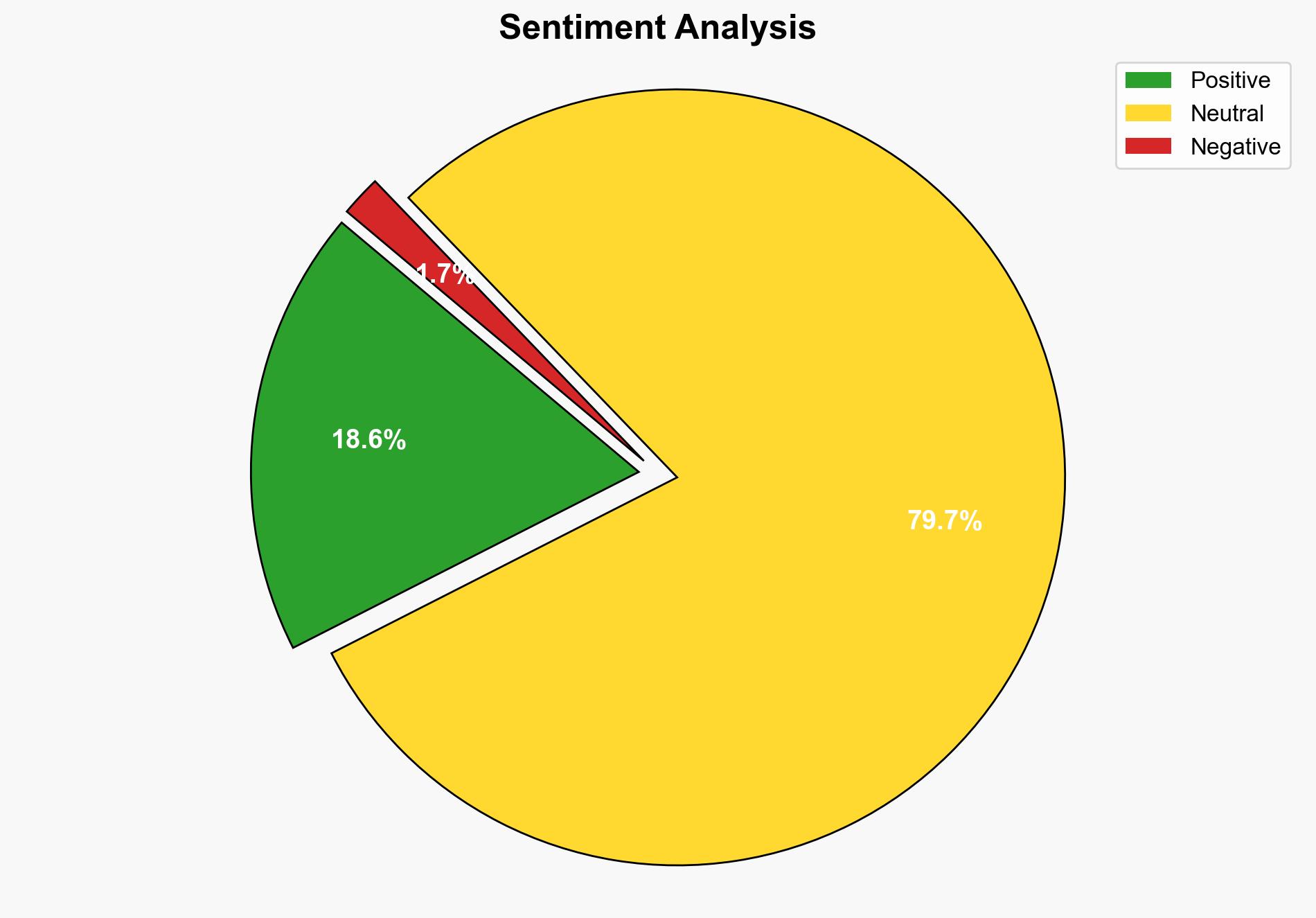

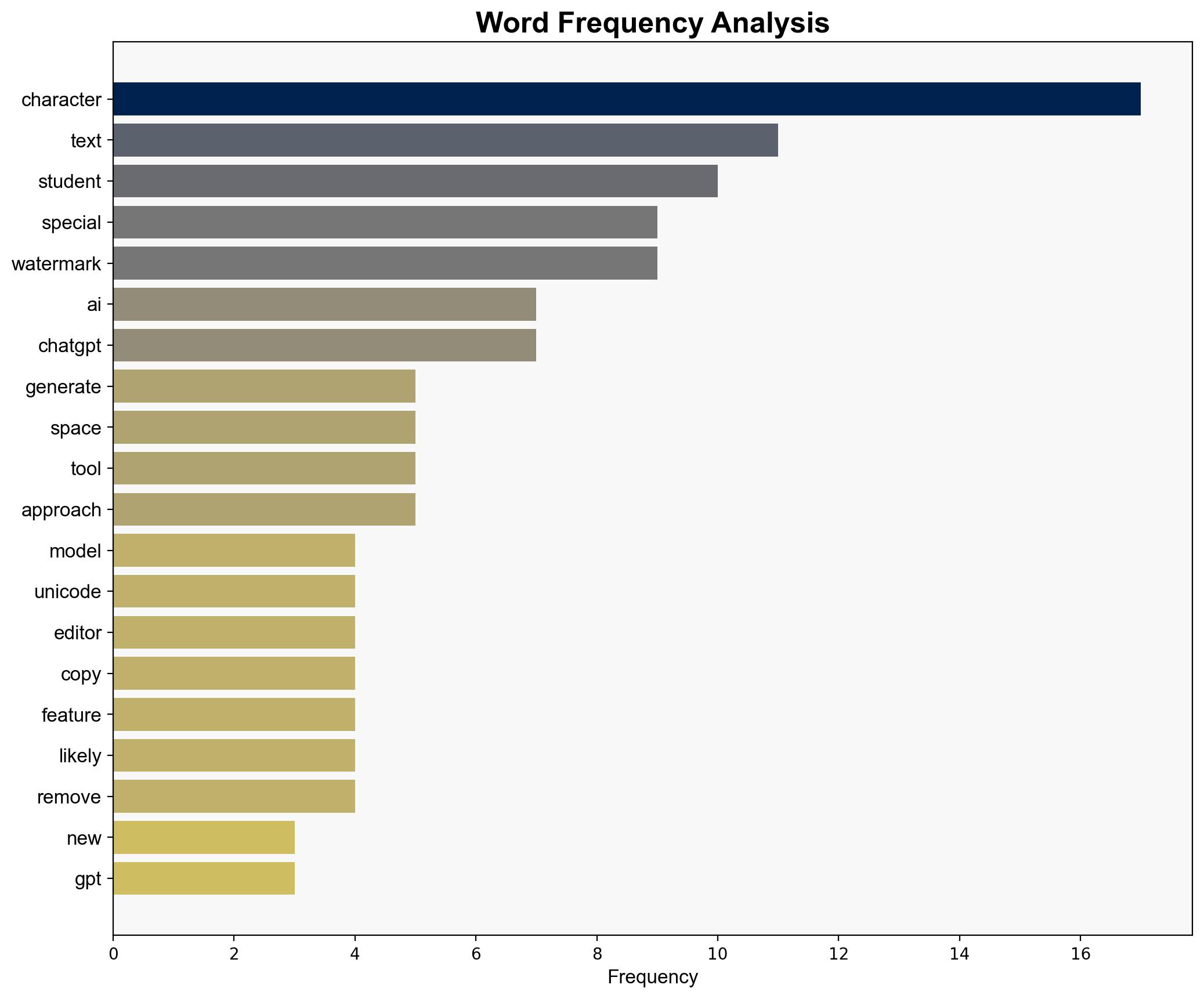

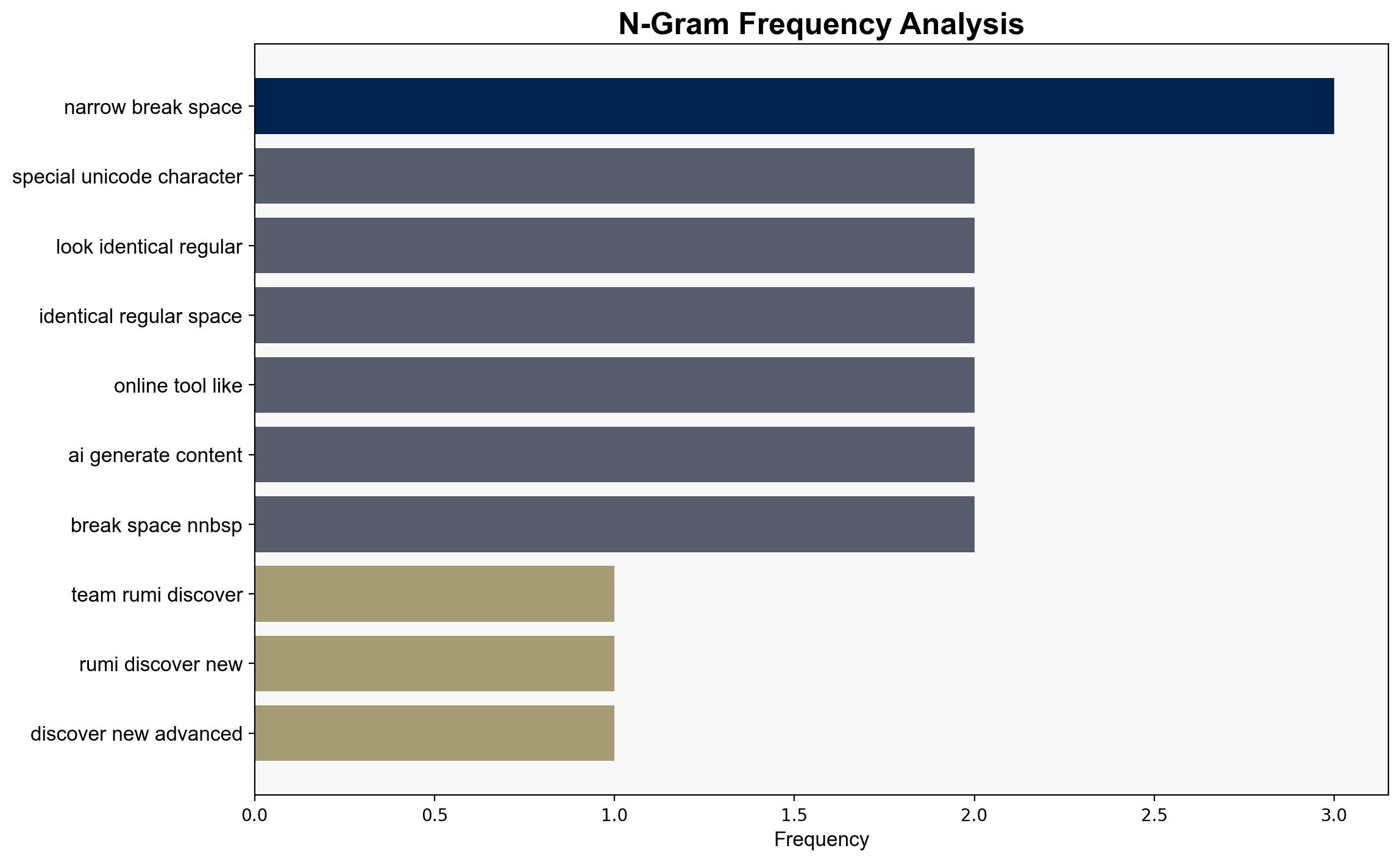

Recent reports indicate that new ChatGPT models appear to embed invisible watermarks in generated text in the form of special Unicode characters. The apparent aim is to mark text as AI-generated, but the characters are easy to strip out. The main implications concern academic integrity and the reliability of AI content detection tools. Recommendations focus on improving detection methods and on complementary measures for upholding academic standards.
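
A minimal Python sketch of how such characters could be detected is shown here. The report does not say which code points the models insert, so the set in `SUSPECT_CODE_POINTS` is an assumption chosen for illustration (zero-width and no-break space characters), and `find_suspect_characters` is a hypothetical helper, not an OpenAI or RumiDocs tool.

```python
import unicodedata

# Assumption: the report does not name the characters involved; this set of
# invisible or space-like code points is illustrative only.
SUSPECT_CODE_POINTS = {
    "\u00a0",  # NO-BREAK SPACE
    "\u200b",  # ZERO WIDTH SPACE
    "\u200c",  # ZERO WIDTH NON-JOINER
    "\u200d",  # ZERO WIDTH JOINER
    "\u2060",  # WORD JOINER
    "\u202f",  # NARROW NO-BREAK SPACE
    "\ufeff",  # ZERO WIDTH NO-BREAK SPACE (byte order mark)
}


def find_suspect_characters(text: str) -> list[tuple[int, str, str]]:
    """Return (index, code point, Unicode name) for each suspect character."""
    hits = []
    for i, ch in enumerate(text):
        if ch in SUSPECT_CODE_POINTS:
            hits.append((i, f"U+{ord(ch):04X}", unicodedata.name(ch, "UNKNOWN")))
    return hits


# Hypothetical sample text with two hidden characters.
sample = "The results\u202fwere significant.\u200b"
for hit in find_suspect_characters(sample):
    print(hit)
```

Because these characters also occur in legitimate text (for example, the narrow no-break space in French typography or the zero-width joiner in emoji sequences), a match is at most a weak signal, not proof that a passage was AI-generated.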

2. Detailed Analysis

The following structured analytic techniques have been applied to ensure methodological consistency:

Analysis of Competing Hypotheses (ACH)

Competing explanations for the watermarks include improving content traceability, deterring plagiarism, and testing model capabilities. The evidence suggests intentional watermarking to identify AI-generated content, but its effectiveness is limited because the marks are easy to remove.

SWOT Analysis

- Strengths: Provides a method to trace AI-generated content.
- Weaknesses: Easily bypassed by users.
- Opportunities: Develop more robust detection tools.
- Threats: Undermines trust in AI content verification.
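
To illustrate why a character-based watermark is easily bypassed, here is a minimal Python sketch. It assumes the watermark consists of invisible or space-like Unicode characters; the specific code points and the `strip_suspect_characters` helper are illustrative assumptions, not details from the source.

```python
import re

# Assumption: an illustrative set of code points a character-based watermark
# might use; the report does not specify which characters are involved.
ZERO_WIDTH = re.compile("[\u200b\u200c\u200d\u2060\ufeff]")
SPACE_LIKE = re.compile("[\u00a0\u202f]")


def strip_suspect_characters(text: str) -> str:
    """Delete zero-width characters and replace space-like ones with a plain space."""
    text = ZERO_WIDTH.sub("", text)
    return SPACE_LIKE.sub(" ", text)


# Hypothetical marked text: a narrow no-break space and a zero-width space.
marked = "The results\u202fwere significant.\u200b"
print(strip_suspect_characters(marked))
```

A blanket strip is not always harmless: the zero-width joiner and non-joiner carry meaning in some scripts (such as Persian) and in emoji sequences, so this can alter legitimate text. The point is only that removal takes a few lines of code, consistent with the assessment that the watermark is easily bypassed.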

Indicators Development

Monitor for increased use of AI-generated content in academic settings and the development of tools to detect or bypass watermarks.

3. Implications and Strategic Risks

Watermarking offers only limited protection for academic integrity. Students may use these models to complete assignments, and because the watermarks are easy to remove, misuse could become widespread and strain educational institutions' ability to maintain standards. In addition, treating watermarking as the sole detection method may breed complacency and a false sense of security.

4. Recommendations and Outlook

- Develop advanced detection tools that go beyond simple watermark identification.

- Educate students and educators about the limitations and ethical considerations of using AI-generated content.

- Scenario Projections:

  - Best Case: Improved detection tools effectively identify AI-generated content, maintaining academic integrity.

  - Worst Case: Widespread misuse of AI models leads to a decline in educational standards.

  - Most Likely: Incremental improvements in detection tools with ongoing challenges in enforcement.

5. Key Individuals and Entities

OpenAI, RumiDocs.com

6. Thematic Tags

cybersecurity, academic integrity, AI-generated content, technology ethics

7. Methodological References

Structured techniques used in this report are based on best practices outlined in the ‘Structured Analytic Techniques Manual’.