Wikipedia Won’t Add AI-Generated Slop After Editors Yelled At Them – Kotaku

Published on: 2025-06-11

Intelligence Report: Wikipedia Won’t Add AI-Generated Summaries After Editorial Pushback

1. BLUF (Bottom Line Up Front)

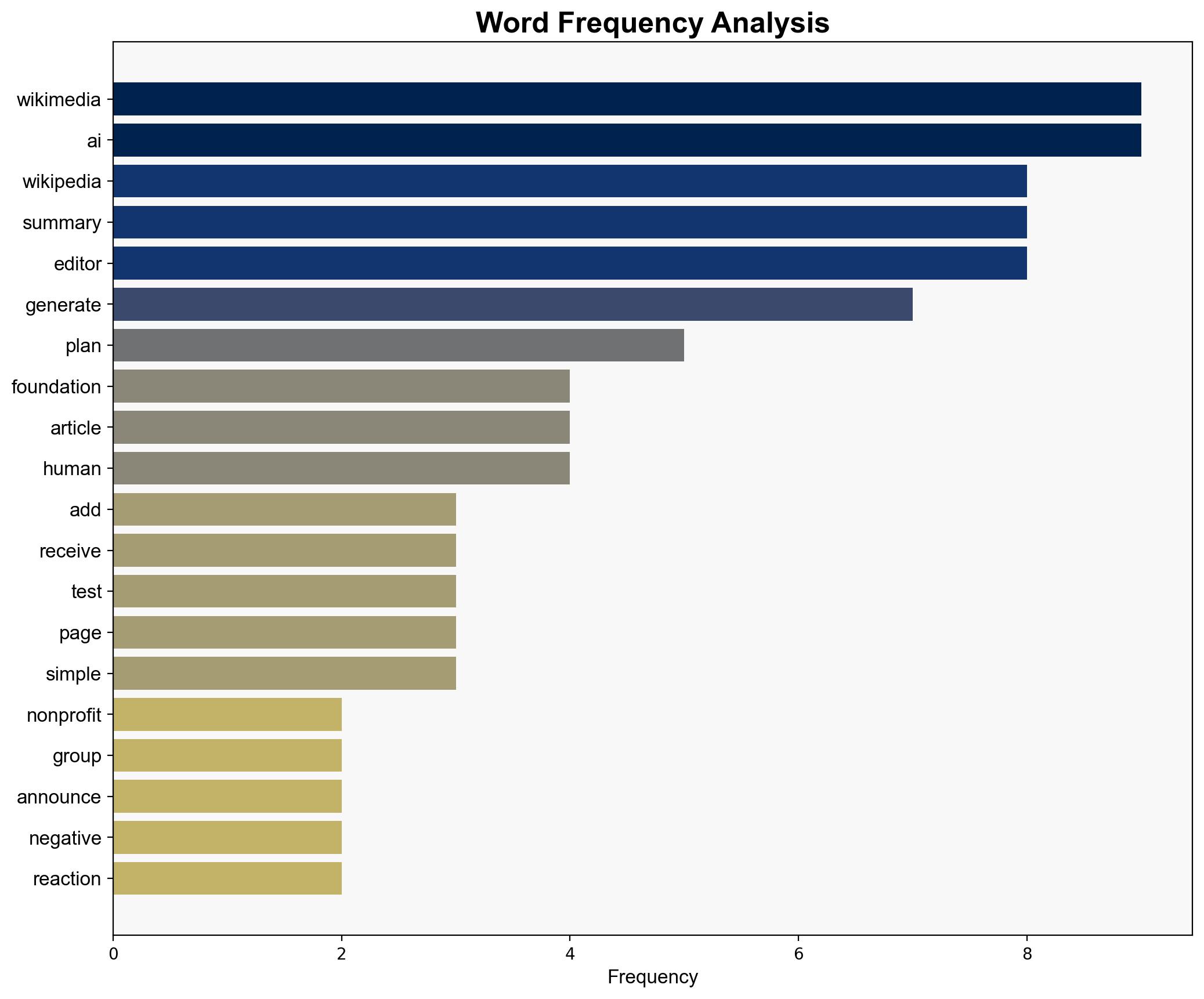

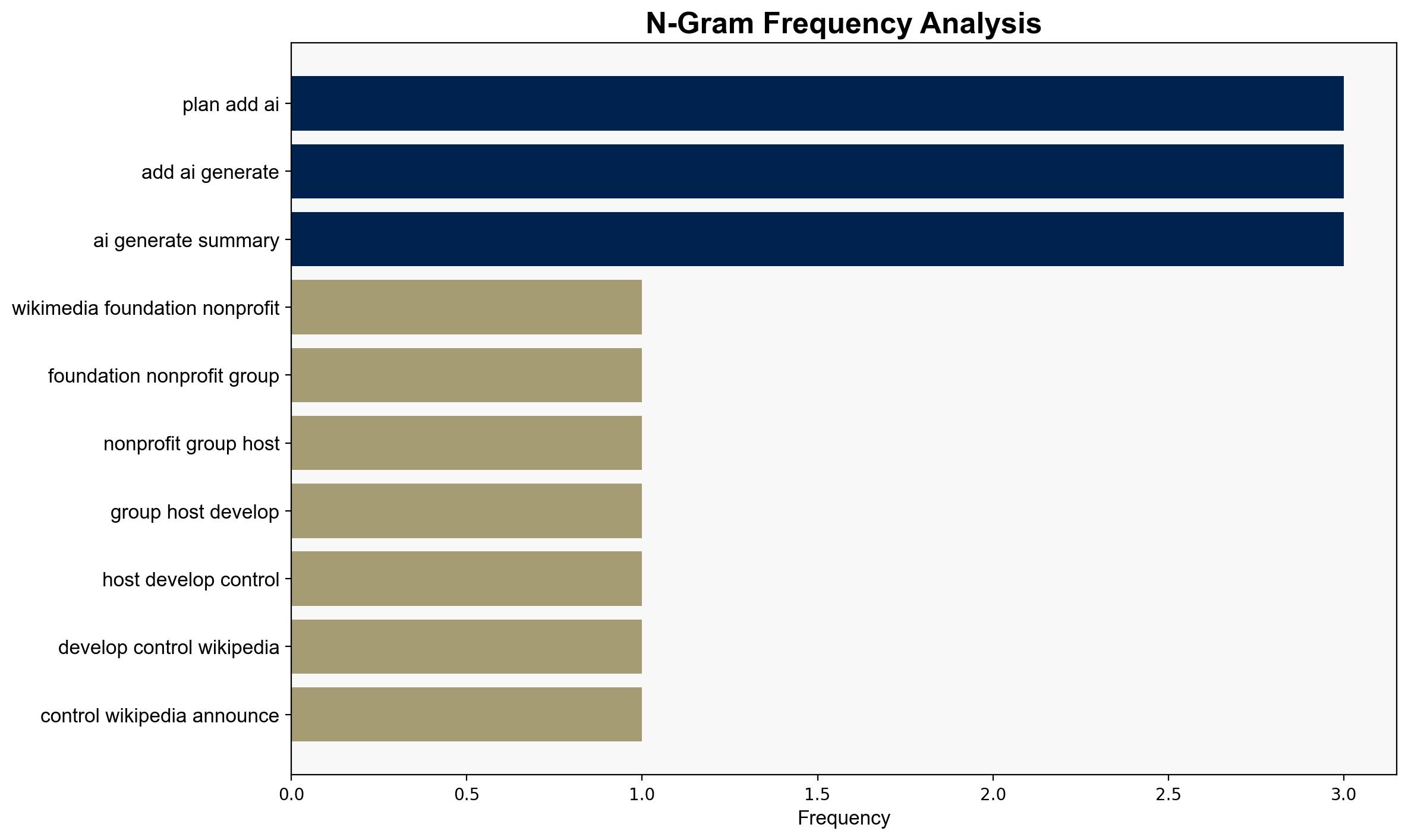

The Wikimedia Foundation has decided not to proceed with its plan to implement AI-generated summaries on Wikipedia following significant backlash from its editorial community. This decision underscores the importance of human oversight in maintaining the credibility and trustworthiness of information platforms. It highlights the potential risks associated with AI integration in content management without adequate community engagement and consensus.

2. Detailed Analysis

The following structured analytic techniques have been applied to ensure methodological consistency:

Adversarial Threat Simulation

No cyber adversary was involved. Instead, the editorial community effectively played the adversary's role. Editors anticipated the negative outcomes of AI implementation and exposed vulnerabilities in content integrity.

Indicators Development

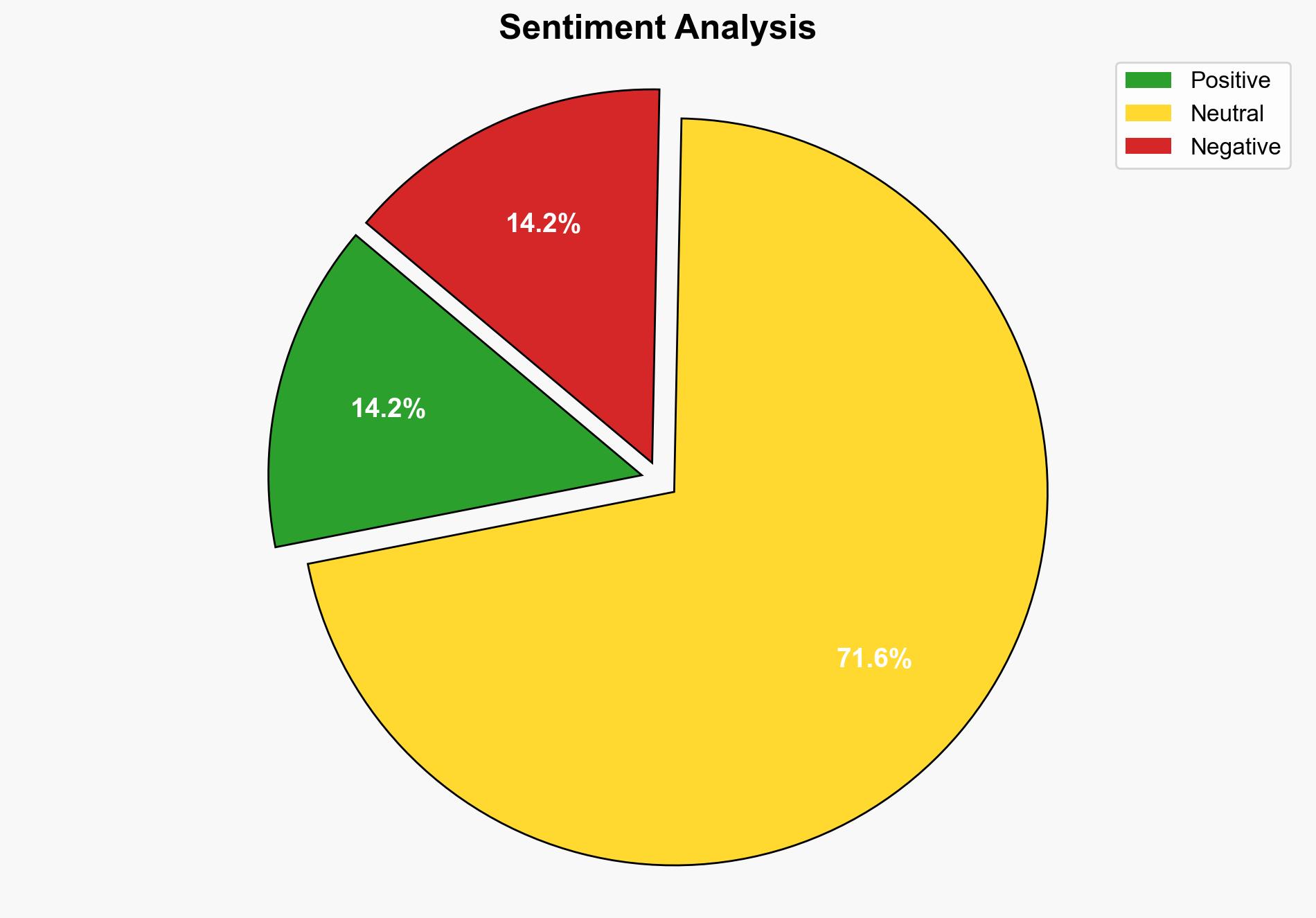

Key indicators of community dissatisfaction included negative feedback and public commentary, which served as early warnings of potential reputational damage.

Bayesian Scenario Modeling

A probabilistic model would likely assign higher odds to declining trust and user engagement if AI integration continued without community support.

3. Implications and Strategic Risks

The decision to halt AI-generated content reflects broader concerns about integrating AI into information dissemination. Potential risks include the erosion of trust in digital platforms and the spread of misinformation. The case highlights the need for robust community involvement and transparent decision-making in technology adoption.

4. Recommendations and Outlook

- Engage in comprehensive stakeholder consultations before implementing AI technologies to ensure alignment with community values and expectations.

- Develop a framework for AI content moderation that prioritizes human oversight and accountability.

- Scenario-based projections suggest that maintaining human-centric content management will preserve platform integrity (best case), while ignoring community feedback could lead to reputational damage (worst case).

5. Key Individuals and Entities

The report does not specify individuals by name but focuses on the collective response of Wikipedia’s editorial community and the Wikimedia Foundation.

6. Thematic Tags

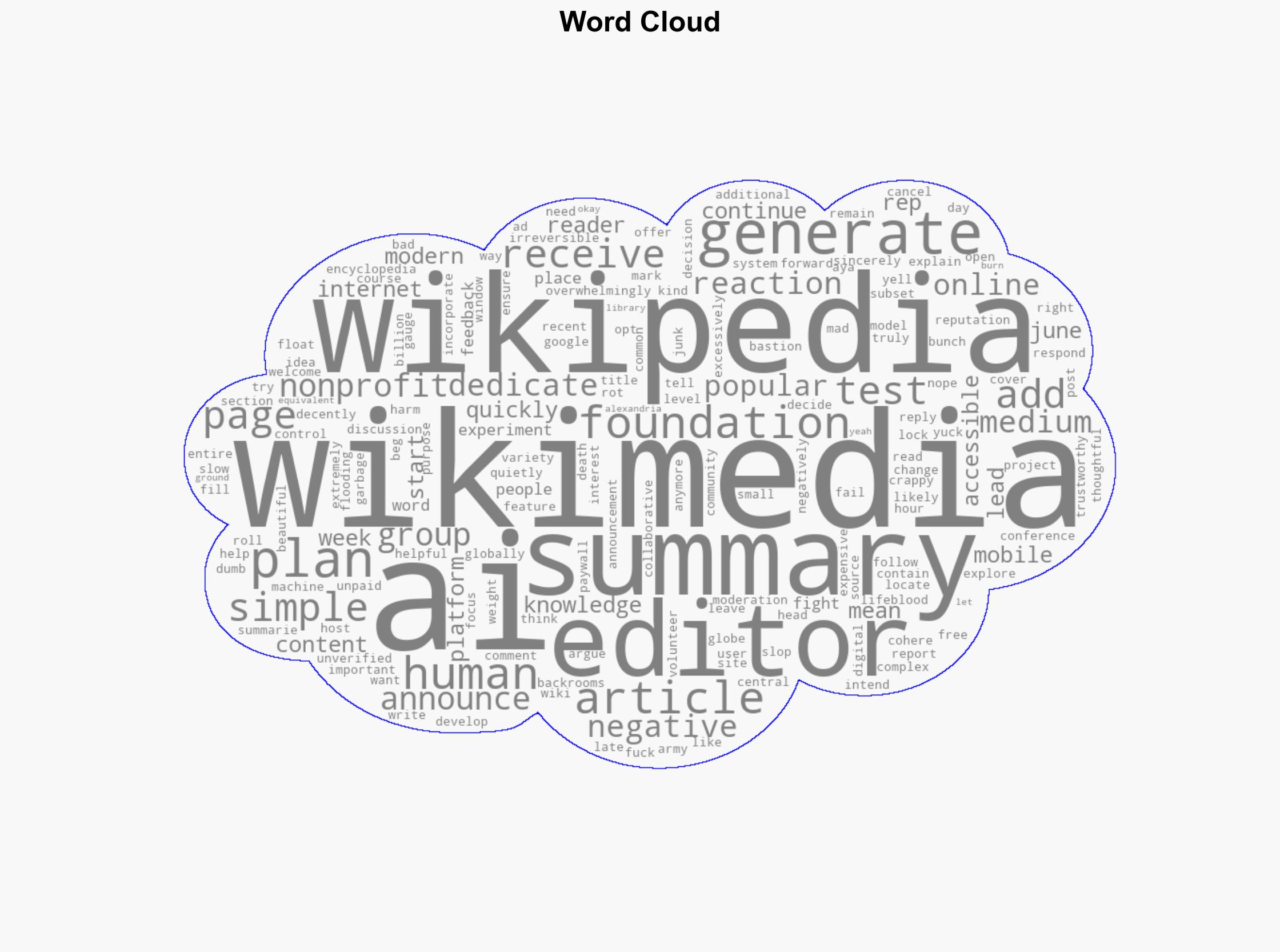

digital governance, AI ethics, community engagement, information integrity