OpenAI just signed a huge deal with the US government – and it could change the future of AI as we know it – TechRadar

Published on: 2025-06-17

Intelligence Report: OpenAI just signed a huge deal with the US government – and it could change the future of AI as we know it – TechRadar

1. BLUF (Bottom Line Up Front)

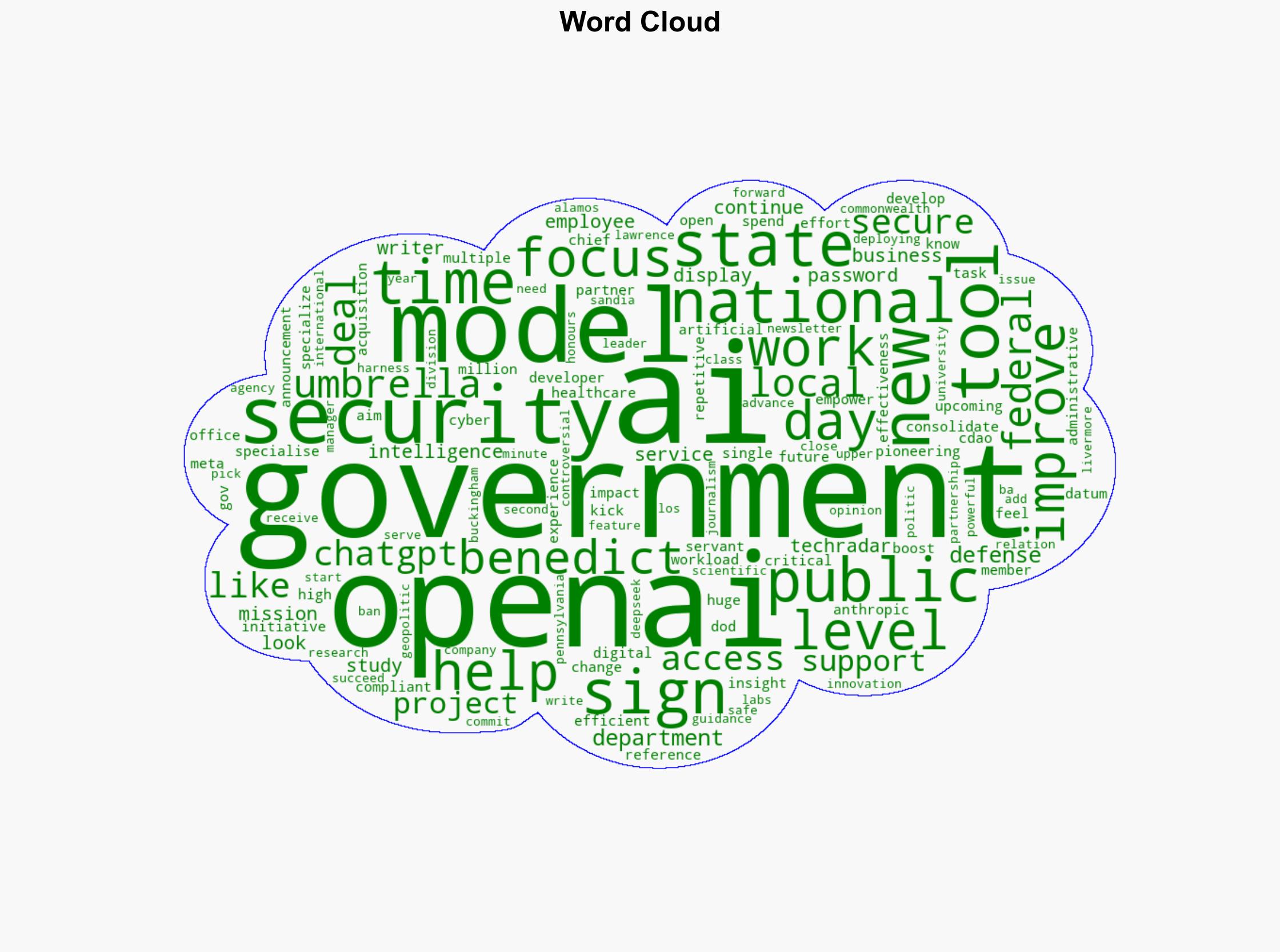

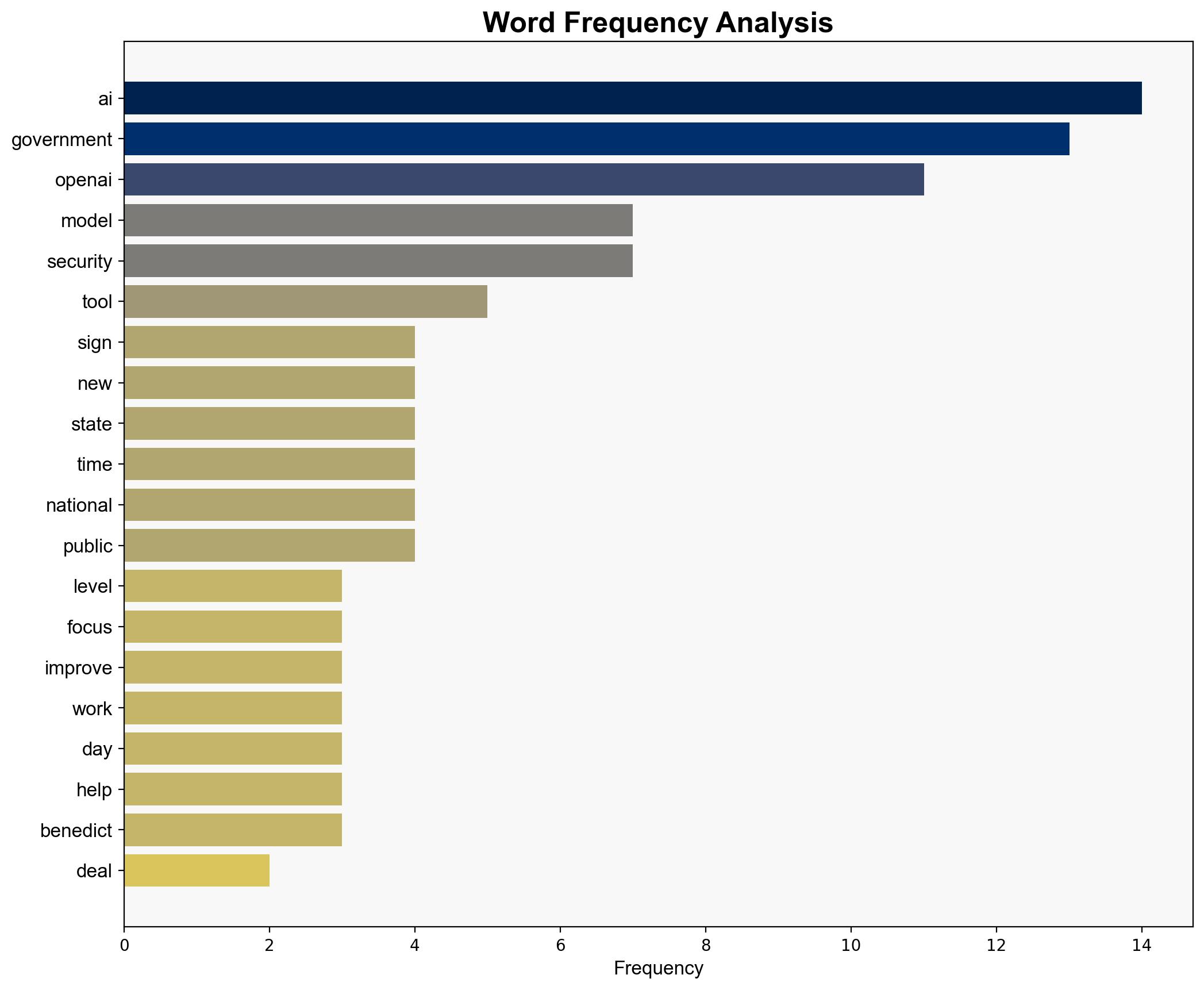

OpenAI has entered into a significant partnership with the US government, aimed at enhancing AI capabilities across federal, state, and local levels. This collaboration focuses on improving administrative efficiency, healthcare access, and cybersecurity, with potential implications for national security and public service operations. Key recommendations include monitoring the integration process and assessing the impact on government operations and security protocols.

2. Detailed Analysis

The following structured analytic techniques have been applied to ensure methodological consistency:

Cognitive Bias Stress Test

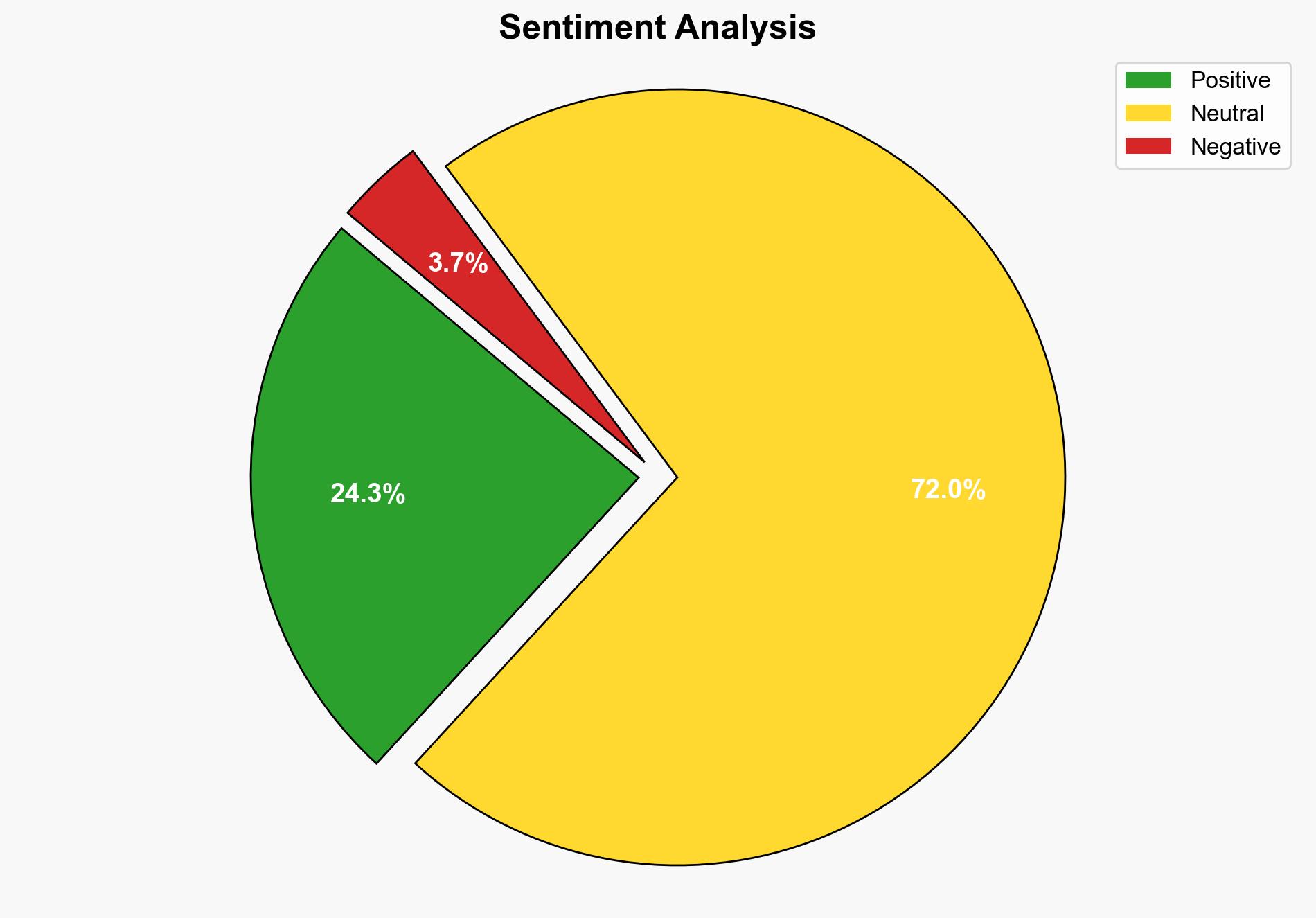

The assessment was checked for bias in how AI’s role in government is perceived, so that benefits and risks are weighed evenly. Red teaming exercises were conducted to challenge assumptions about AI’s impact on national security.

Bayesian Scenario Modeling

Probabilistic forecasting suggests a high likelihood of increased AI integration in government functions, with a moderate risk of cybersecurity challenges. The potential for conflict escalation due to AI-driven decision-making remains low but should be monitored.
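As a rough illustration of how scenario probabilities can be revised when new evidence arrives, the sketch below applies Bayes' rule to three scenarios (best case, most likely, worst case). All numbers are hypothetical placeholders chosen for illustration; the report does not publish its priors or likelihoods, and the function name `bayes_update` is an assumption, not part of any cited tool.

```python
def bayes_update(priors, likelihoods):
    """Return posterior scenario probabilities via Bayes' rule.

    priors:      dict of scenario -> prior probability (should sum to 1)
    likelihoods: dict of scenario -> P(evidence | scenario)
    """
    unnormalized = {s: priors[s] * likelihoods[s] for s in priors}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("evidence has zero probability under every scenario")
    return {s: p / total for s, p in unnormalized.items()}


# Hypothetical priors over the three scenarios (NOT taken from the report).
priors = {"best_case": 0.25, "most_likely": 0.60, "worst_case": 0.15}

# Hypothetical likelihood of observing a reported security incident
# under each scenario (illustrative values only).
likelihoods = {"best_case": 0.2, "most_likely": 0.5, "worst_case": 0.8}

posterior = bayes_update(priors, likelihoods)
for scenario, prob in posterior.items():
    print(f"{scenario}: {prob:.3f}")
```

With these placeholder inputs, evidence that is more probable under the worst case shifts weight toward it, while the most likely scenario keeps the largest share.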

Network Influence Mapping

Influence relationships between OpenAI, government entities, and other AI developers were mapped, highlighting OpenAI’s growing role in shaping AI policy and technology deployment within government frameworks.
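One simple way to quantify such a map is degree centrality: the share of other entities each node is directly linked to. The sketch below uses entity names that appear in this report, but the edges are hypothetical and stand in for whatever relationships an analyst would actually document. The report does not disclose its graph, and `degree_centrality` is an illustrative helper, not a reference to a specific library.

```python
from collections import defaultdict

# Hypothetical influence links (illustrative only; not taken from the report).
edges = [
    ("OpenAI", "Department of Defense"),
    ("OpenAI", "CDAO"),
    ("Department of Defense", "CDAO"),
    ("OpenAI", "Los Alamos National Laboratory"),
    ("OpenAI", "Lawrence Livermore National Laboratory"),
    ("OpenAI", "Sandia National Laboratories"),
]


def degree_centrality(edge_list):
    """Return node -> fraction of the other nodes it is directly linked to."""
    neighbors = defaultdict(set)
    for a, b in edge_list:
        neighbors[a].add(b)
        neighbors[b].add(a)
    n = len(neighbors)
    if n < 2:
        return {node: 0.0 for node in neighbors}
    return {node: len(adj) / (n - 1) for node, adj in neighbors.items()}


centrality = degree_centrality(edges)
ranked = sorted(centrality.items(), key=lambda kv: kv[1], reverse=True)
for node, score in ranked:
    print(f"{node}: {score:.2f}")
```

Under these assumed links, the node connected to every other entity scores highest, which is the kind of signal an analyst would read as a growing coordinating role.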

3. Implications and Strategic Risks

The partnership may lead to increased efficiency in government operations but poses risks related to cybersecurity and data privacy. The consolidation of AI tools under a single umbrella could create vulnerabilities if not properly managed. Additionally, the reliance on AI for critical functions may introduce systemic risks if AI models fail or are compromised.

4. Recommendations and Outlook

- Implement robust cybersecurity measures to protect AI systems from potential threats.

- Conduct regular audits and assessments of AI integration to ensure compliance with security and privacy standards.

- Develop contingency plans for AI system failures to mitigate potential disruptions in government operations.

- Outlook: scenario-based projections suggest enhanced government efficiency in the best case, significant cybersecurity breaches in the worst case, and gradual improvement with manageable risks as the most likely outcome.

5. Key Individuals and Entities

OpenAI, Department of Defense, Chief Digital and Artificial Intelligence Office (CDAO), Los Alamos National Laboratory, Lawrence Livermore National Laboratory, Sandia National Laboratories.

6. Thematic Tags

national security threats, cybersecurity, AI integration, government efficiency, public service innovation