AI can’t stop making up software dependencies and sabotaging everything – Theregister.com

Published on: 2025-04-12

Intelligence Report: AI can’t stop making up software dependencies and sabotaging everything – Theregister.com

1. BLUF (Bottom Line Up Front)

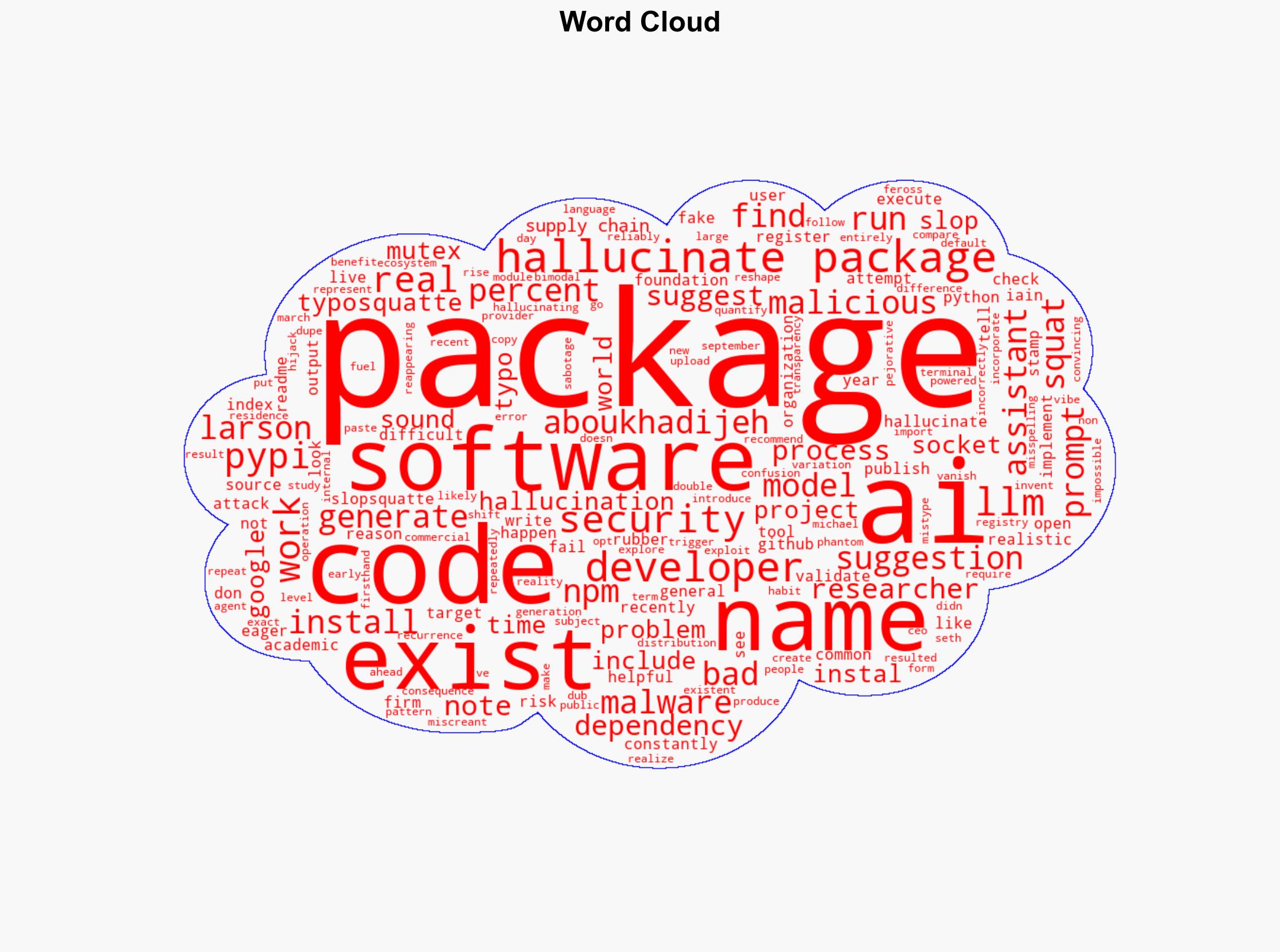

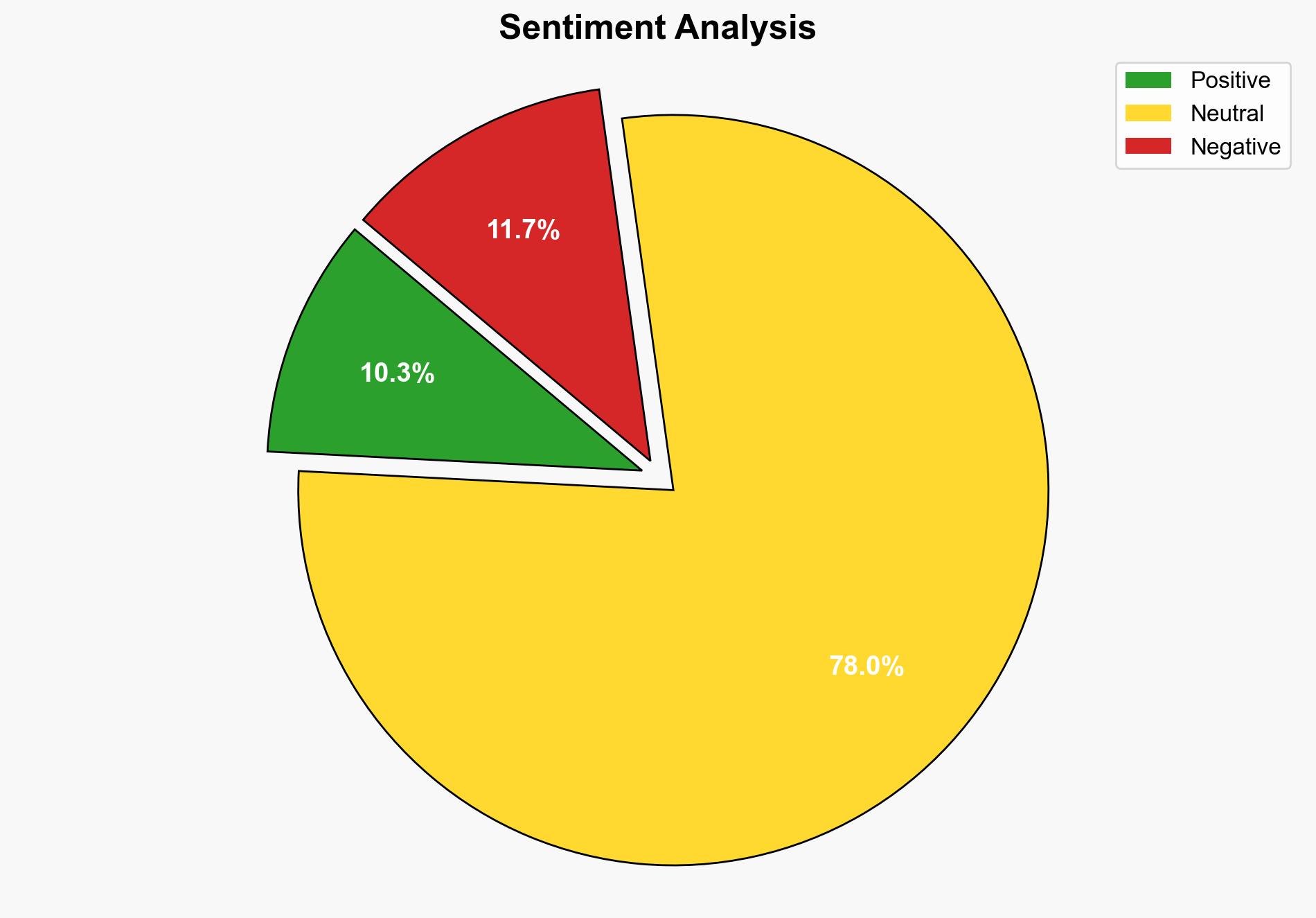

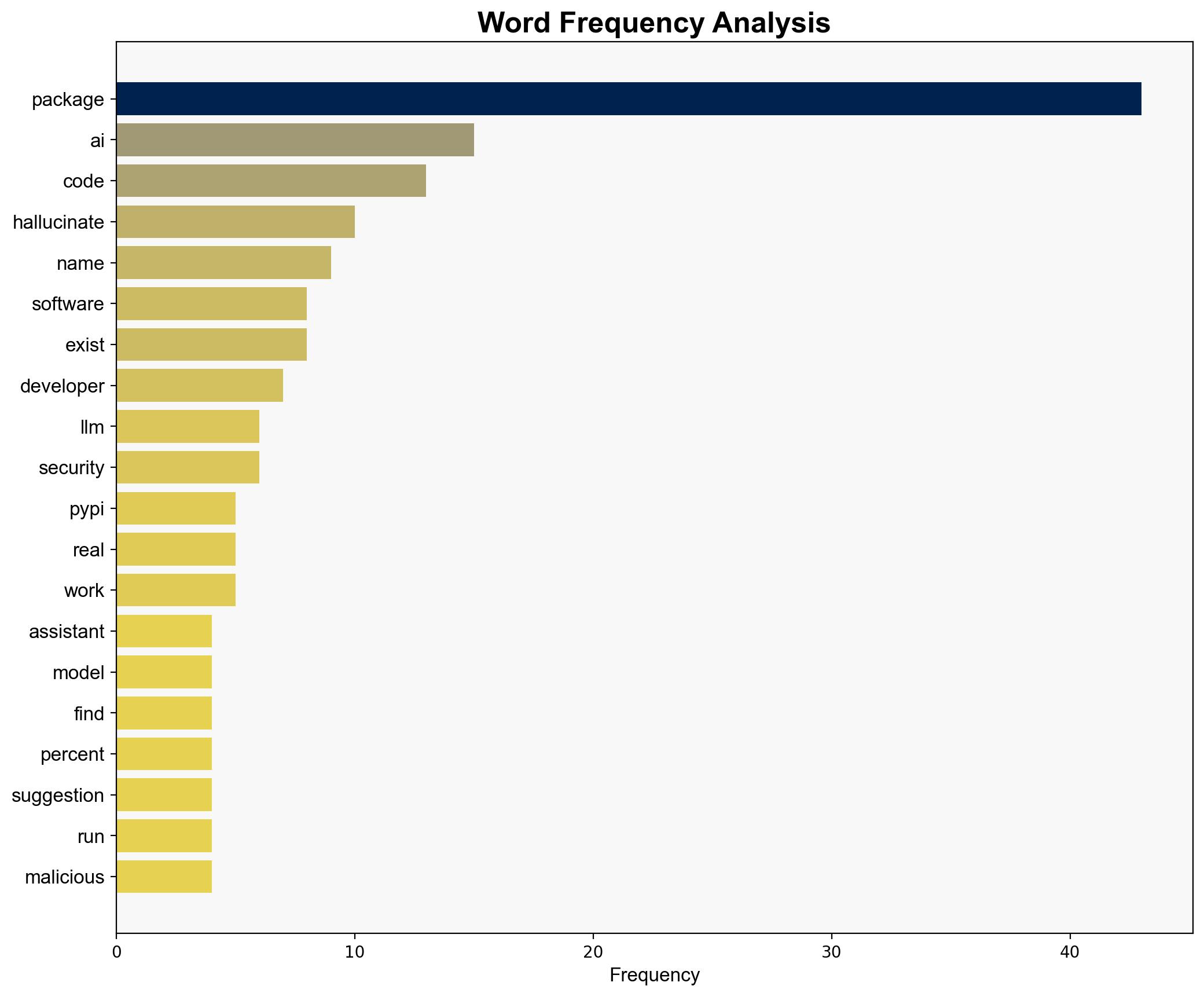

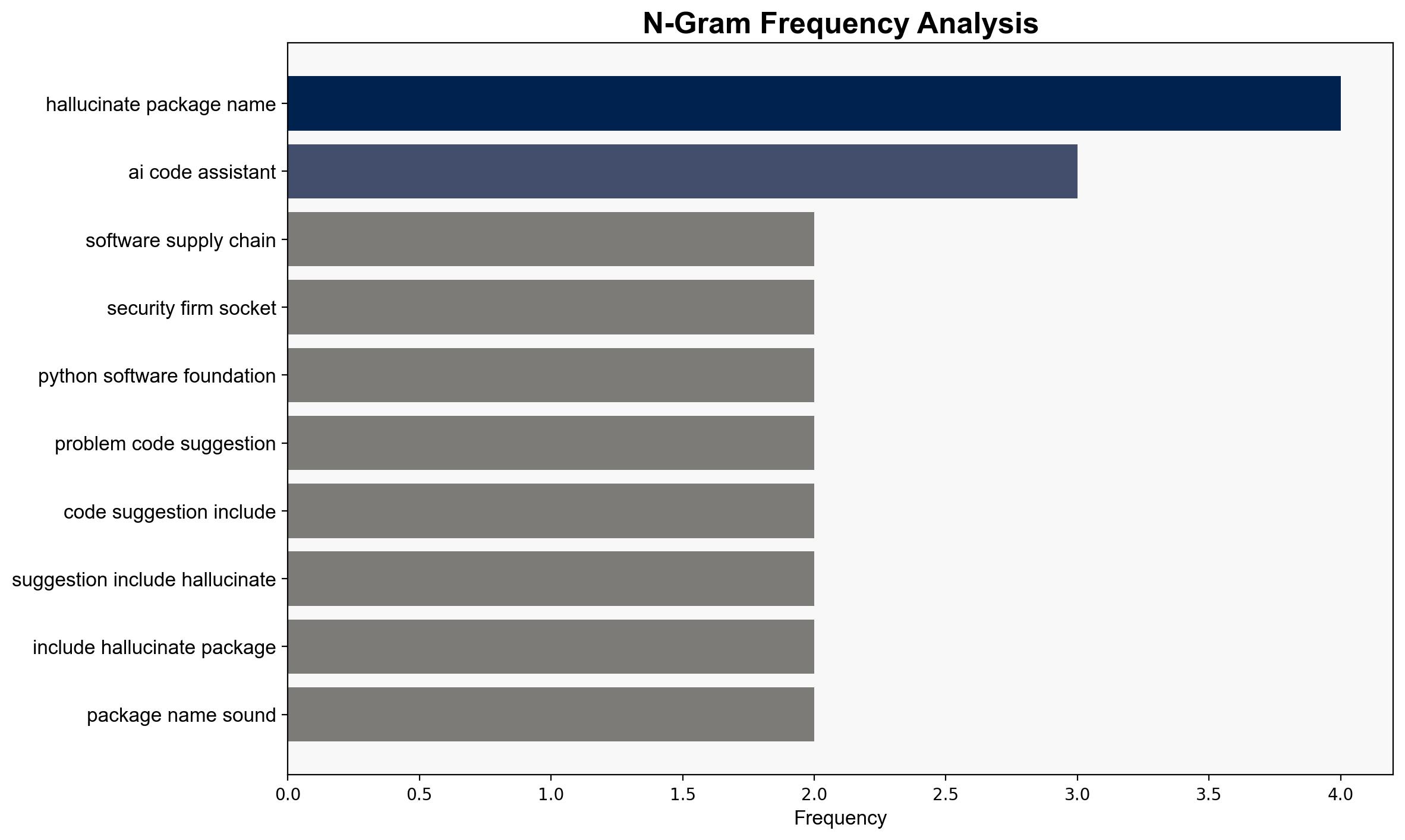

The rise of AI-powered code generation tools, specifically large language models (LLMs), is introducing significant risks to the software supply chain. These tools often suggest non-existent software packages, leading to potential exploitation by malicious actors. The phenomenon, termed “slopsquatting,” involves creating malicious packages under hallucinated names, which can then be distributed through package registries. This issue poses a threat to software integrity and security, requiring immediate attention and strategic intervention.

2. Detailed Analysis

The following structured analytic techniques have been applied for this analysis:

General Analysis

The integration of LLMs in software development is reshaping the industry by automating code generation. However, a critical flaw has emerged: the tendency of these models to hallucinate non-existent package names. A study highlighted that 5.2% of package suggestions from commercial models and 21.7% from open-source models did not exist. This vulnerability is exploited through “slopsquatting,” where malicious actors create and distribute malware under these phantom package names. The recurrence of certain hallucinated names suggests a pattern, with some names appearing consistently in repeated prompts. This issue is compounded by the lack of transparency from LLM providers, making it challenging to quantify the extent of the problem.
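The two measurements described above, the share of suggested packages that do not exist and the names that recur across repeated prompts, can be sketched as follows. This is a minimal illustration and not the study's methodology: the function name `hallucination_stats`, the input shape (one list of suggested names per repeated prompt), and the sample package names are all assumptions made for the example.

```python
from collections import Counter


def hallucination_stats(runs, known_packages):
    """Return (hallucination_rate, recurring_names) for repeated prompt runs.

    runs: list of lists, each holding the package names suggested in one run
          of the same prompt (hypothetical input shape).
    known_packages: names known to exist in the registry.
    """
    known = {name.lower() for name in known_packages}
    total = 0
    missing_counts = Counter()
    for suggestions in runs:
        # Count each distinct name once per run.
        for name in {s.lower() for s in suggestions}:
            total += 1
            if name not in known:
                missing_counts[name] += 1
    rate = sum(missing_counts.values()) / total if total else 0.0
    # A name "recurs" here if it was hallucinated in every single run.
    recurring = sorted(n for n, c in missing_counts.items() if c == len(runs))
    return rate, recurring


# Illustrative data only: "fastjsonx" and "pyfoo" are made-up names.
runs = [
    ["requests", "fastjsonx"],
    ["requests", "fastjsonx", "numpy"],
    ["fastjsonx", "pyfoo"],
]
rate, recurring = hallucination_stats(runs, known_packages={"requests", "numpy"})
```

Names that recur in every run are the ones an attacker would most plausibly register in advance, which is why recurrence matters as much as the overall rate.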

3. Implications and Strategic Risks

The exploitation of hallucinated package names poses significant risks to software security and integrity. The potential for widespread malware distribution through trusted package registries threatens national security, economic interests, and regional stability. The lack of transparency and accountability from LLM providers exacerbates these risks, hindering efforts to mitigate the threat. Additionally, the growing reliance on AI in software development increases vulnerability to such exploits, necessitating a reevaluation of current practices and policies.

4. Recommendations and Outlook

Recommendations:

- Enhance transparency and accountability from LLM providers to track and mitigate hallucinated package names.

- Implement stricter verification processes for package submissions to registries like PyPI and npm.

- Encourage developers to check every package name in AI-generated code against the relevant package registry before installing it, so that hallucinated or slopsquatted dependencies are caught early.

- Promote awareness and training for developers on the risks associated with AI-generated code.
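The registry check recommended above could be sketched roughly as follows for PyPI. It assumes PyPI's public JSON endpoint (`https://pypi.org/pypi/<name>/json`), which returns HTTP 404 for a project that does not exist; the helper names `exists_on_pypi` and `find_unregistered` are hypothetical and chosen only for this example.

```python
import urllib.error
import urllib.parse
import urllib.request

# Assumption: PyPI's JSON API answers 404 for projects that do not exist.
PYPI_JSON_URL = "https://pypi.org/pypi/{name}/json"


def exists_on_pypi(name, timeout=10.0):
    """Return True if a project with this name is registered on PyPI."""
    url = PYPI_JSON_URL.format(name=urllib.parse.quote(name, safe=""))
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return False
        raise  # Other HTTP errors are not evidence either way.


def find_unregistered(names, exists=exists_on_pypi):
    """Return the names for which the existence check fails.

    The check is injectable so the function can be exercised offline.
    """
    return [name for name in names if not exists(name)]


# Offline usage with a stand-in checker; "fastjsonx" is a made-up name.
suspect = find_unregistered(
    ["requests", "fastjsonx"],
    exists=lambda name: name == "requests",
)
```

A name that fails this check was probably hallucinated. A name that passes is not thereby safe: slopsquatting works precisely because an attacker may already have registered the hallucinated name, so existing packages still need their maintainer, history, and contents reviewed.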

Outlook:

In the best-case scenario, increased awareness and improved verification processes reduce the incidence of slopsquatting, enhancing software security. In the worst-case scenario, continued exploitation of hallucinated package names leads to significant security breaches and economic losses. The most likely outcome involves gradual improvements in AI transparency and developer practices, mitigating but not eliminating the risk.

5. Key Individuals and Entities

The report cites Seth Michael Larson on the challenges posed by LLM hallucinations. It also draws on work by academic researchers and security firms such as Socket, which points to a collaborative effort to address the issue.