AI Darwin Awards – Aidarwinawards.org

Published on: 2025-09-04

Intelligence Report: AI Darwin Awards – Aidarwinawards.org

1. BLUF (Bottom Line Up Front)

The AI Darwin Awards highlight the risks of deploying untested AI by celebrating notable failures, underscoring the need for rigorous safety standards. The best-supported hypothesis is that the initiative is a satirical critique intended to raise awareness of AI safety. Confidence level: Moderate. Recommended action: Encourage dialogue on AI safety standards and promote responsible AI development practices.

2. Competing Hypotheses

1. **Hypothesis A**: The AI Darwin Awards are a satirical initiative designed to raise awareness about the potential dangers of AI by highlighting catastrophic failures.

2. **Hypothesis B**: The AI Darwin Awards are a genuine attempt to document AI failures for educational purposes, aiming to influence policy and industry standards.

Under ACH 2.0, Hypothesis A is better supported because of the initiative's satirical language and its emphasis on public voting and humor. Hypothesis B lacks direct evidence of educational intent beyond the satirical presentation.
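The comparison above can be illustrated with a simplified matrix in the style of Analysis of Competing Hypotheses. This is a minimal sketch, not the ACH 2.0 procedure itself: it only counts how many evidence items are rated inconsistent with each hypothesis and prefers the hypothesis with the fewest. The evidence labels are taken from the paragraph above; the C/I/N ratings, the `MATRIX` layout, and the function names are assumptions made for illustration.

```python
from collections import Counter

# Illustrative ratings only (assumed, not taken from the report):
# "C" = consistent, "I" = inconsistent, "N" = neutral.
MATRIX = {
    "satirical language": {"A": "C", "B": "N"},
    "emphasis on public voting and humor": {"A": "C", "B": "I"},
    "no direct evidence of educational intent": {"A": "N", "B": "I"},
}


def inconsistency_counts(matrix):
    """Count, per hypothesis, the evidence items rated inconsistent ("I")."""
    counts = Counter()
    for ratings in matrix.values():
        for hypothesis, rating in ratings.items():
            counts[hypothesis] += 1 if rating == "I" else 0
    return dict(counts)


def least_inconsistent(matrix):
    """Return the hypothesis with the fewest inconsistent evidence items."""
    counts = inconsistency_counts(matrix)
    return min(counts, key=counts.get)


if __name__ == "__main__":
    print(inconsistency_counts(MATRIX))
    print(least_inconsistent(MATRIX))
```

Counting inconsistencies rather than supporting items follows the usual ACH emphasis on disconfirming evidence. With different ratings the ranking could change, so the output reflects the assumed matrix, not a finding of the report.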

3. Key Assumptions and Red Flags

- **Assumptions**:

  - Hypothesis A assumes the audience will recognize the satire and engage in dialogue about AI risks.

  - Hypothesis B assumes the initiative will be taken seriously by policymakers and industry leaders.

- **Red Flags**:

  - Potential misinterpretation of satire as trivializing AI risks.

  - Lack of clear educational framework or collaboration with AI safety organizations.

4. Implications and Strategic Risks

- **Patterns**: Increasing public discourse on AI safety could lead to more stringent regulatory measures.

- **Cascading Threats**: Misinterpretation of the awards could undermine serious AI safety efforts.

- **Potential Escalation**: If AI failures continue without proper oversight, public trust in AI technologies may erode, which could hinder economic and technological progress.

5. Recommendations and Outlook

- Promote collaboration between AI developers and safety researchers to establish robust testing protocols.

- Encourage educational initiatives that clearly differentiate satire from serious discourse.

- Scenario Projections:

  - Best Case: Increased awareness leads to improved AI safety standards and reduced failures.

  - Worst Case: Satirical approach is misunderstood, leading to complacency in AI safety.

  - Most Likely: Mixed reactions, with some increased awareness but limited policy impact.

6. Key Individuals and Entities

No specific individuals are mentioned in the source text. The initiative appears to be a collective effort without named leadership.

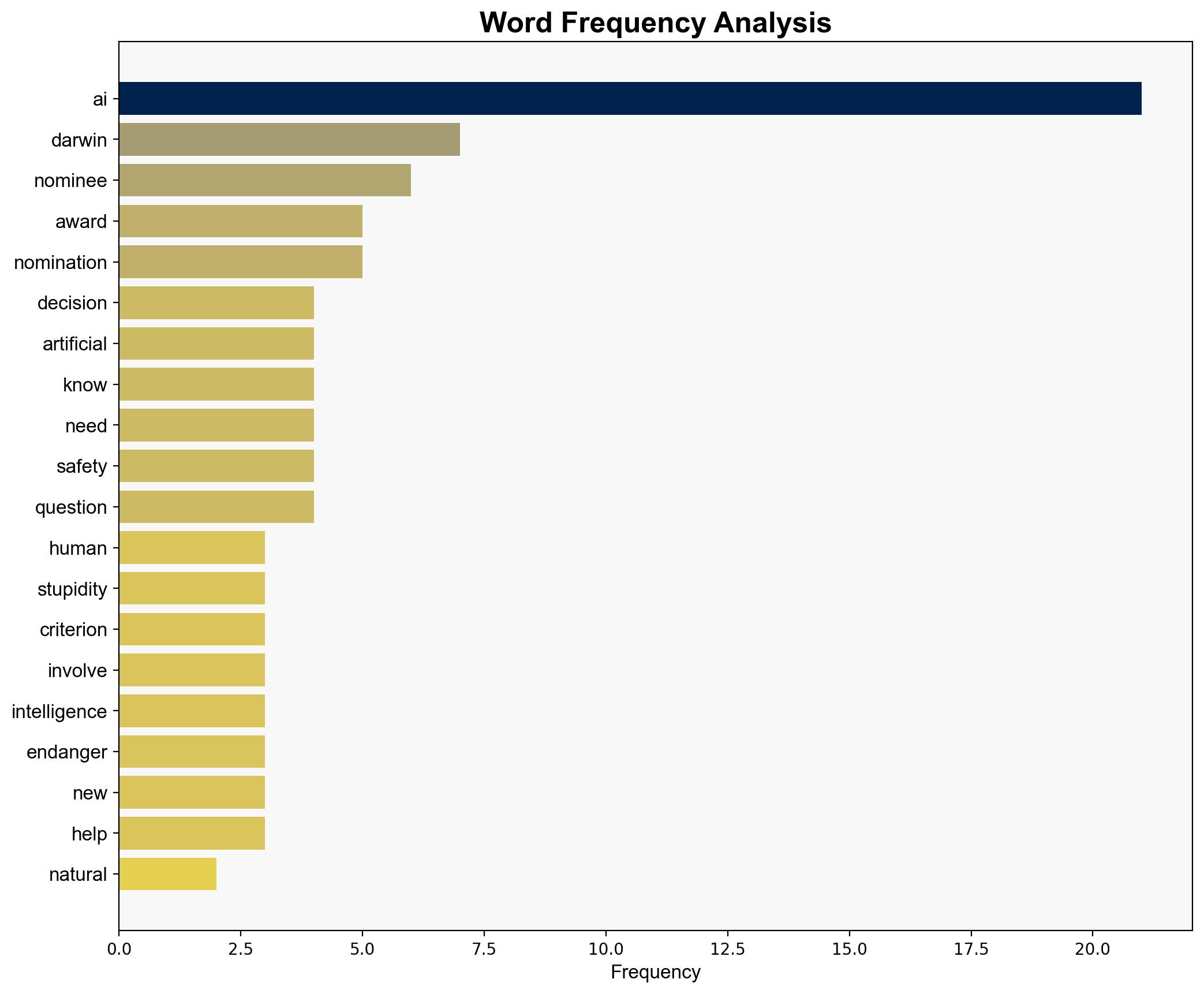

7. Thematic Tags

AI safety, technological ethics, public awareness, regulatory standards