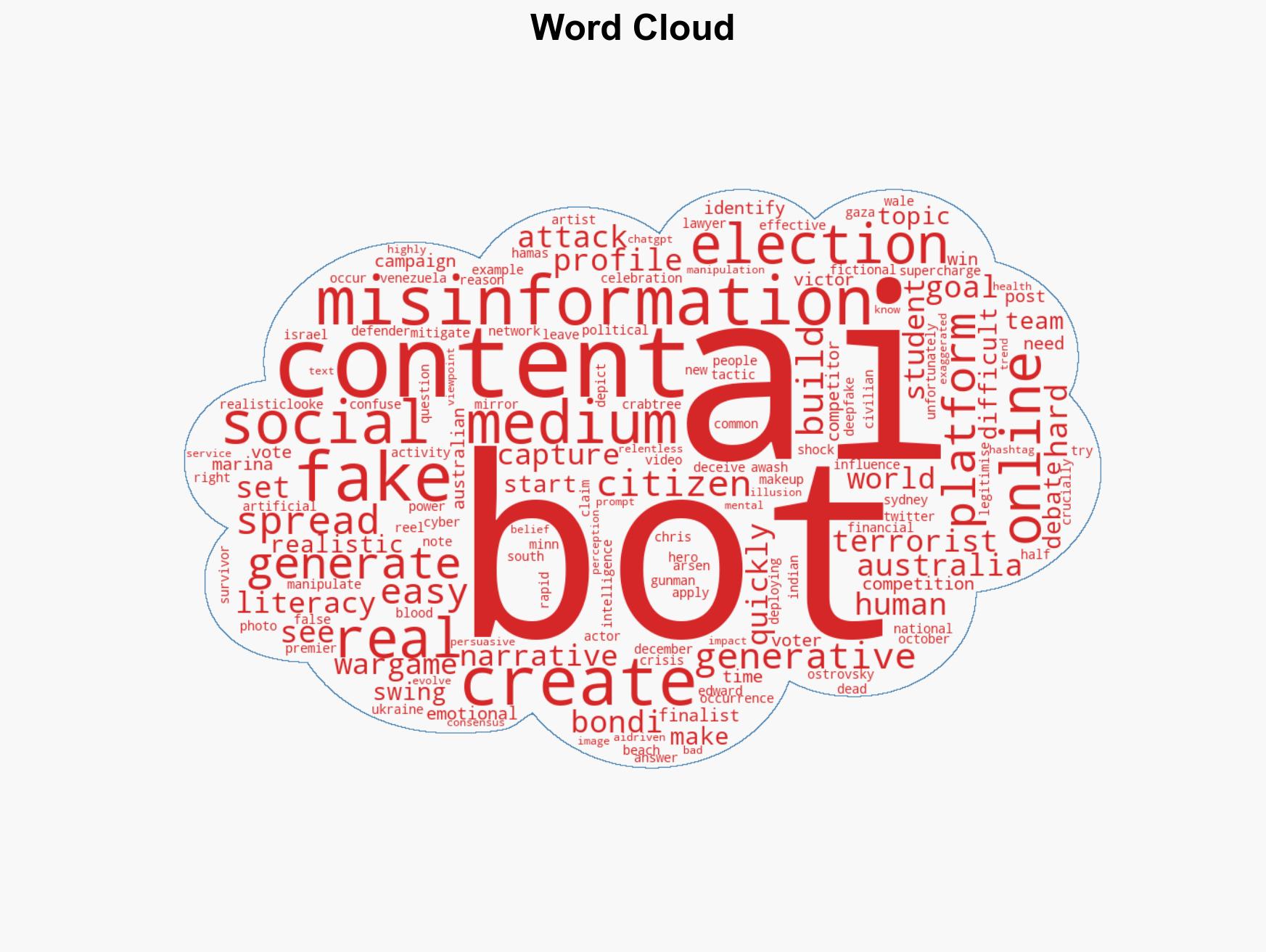

AI-Driven Misinformation Campaigns Threaten Electoral Integrity Following Bondi Beach Terror Attack

Published on: 2026-01-16

AI-powered OSINT brief from verified open sources. Automated NLP signal extraction with human verification.

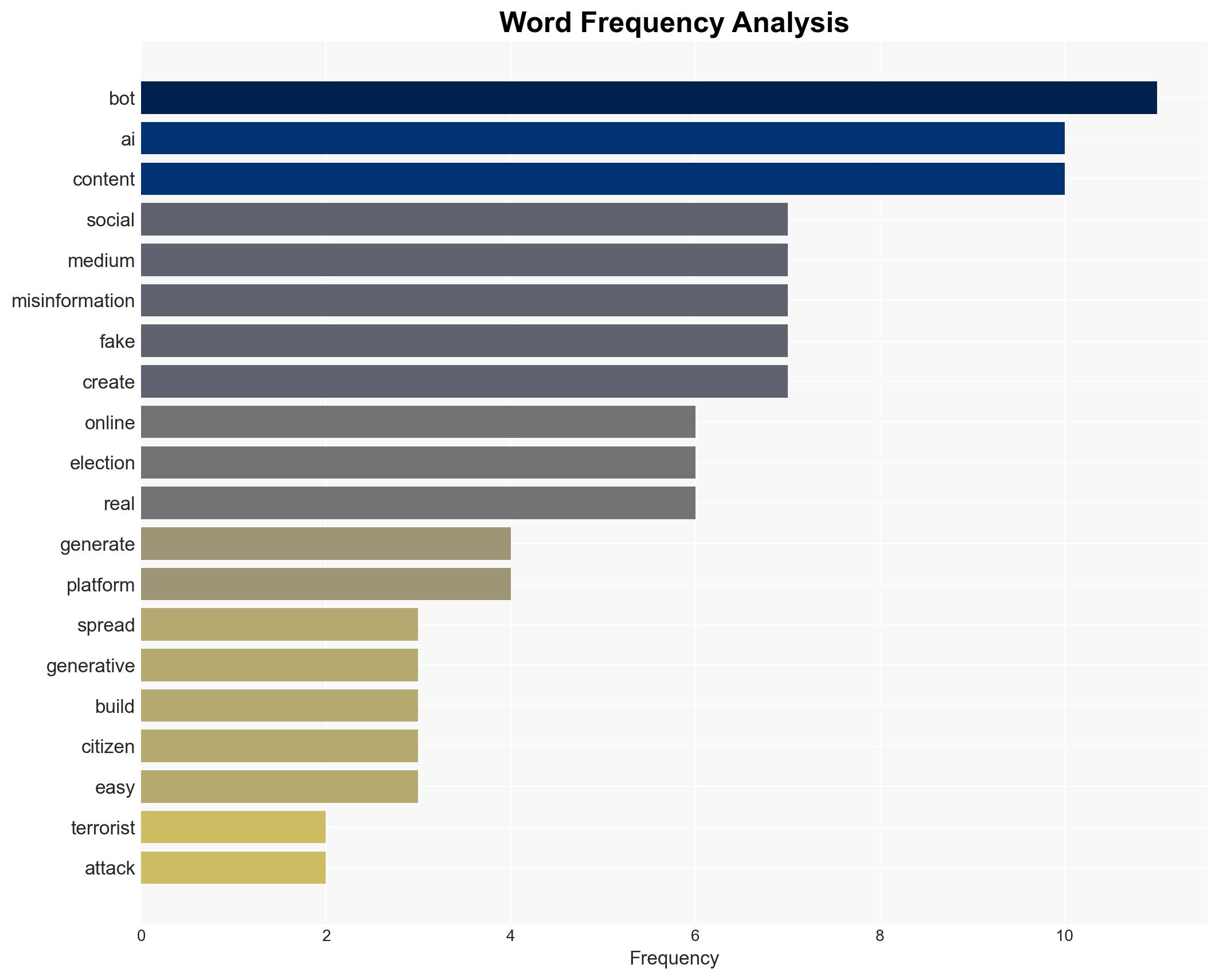

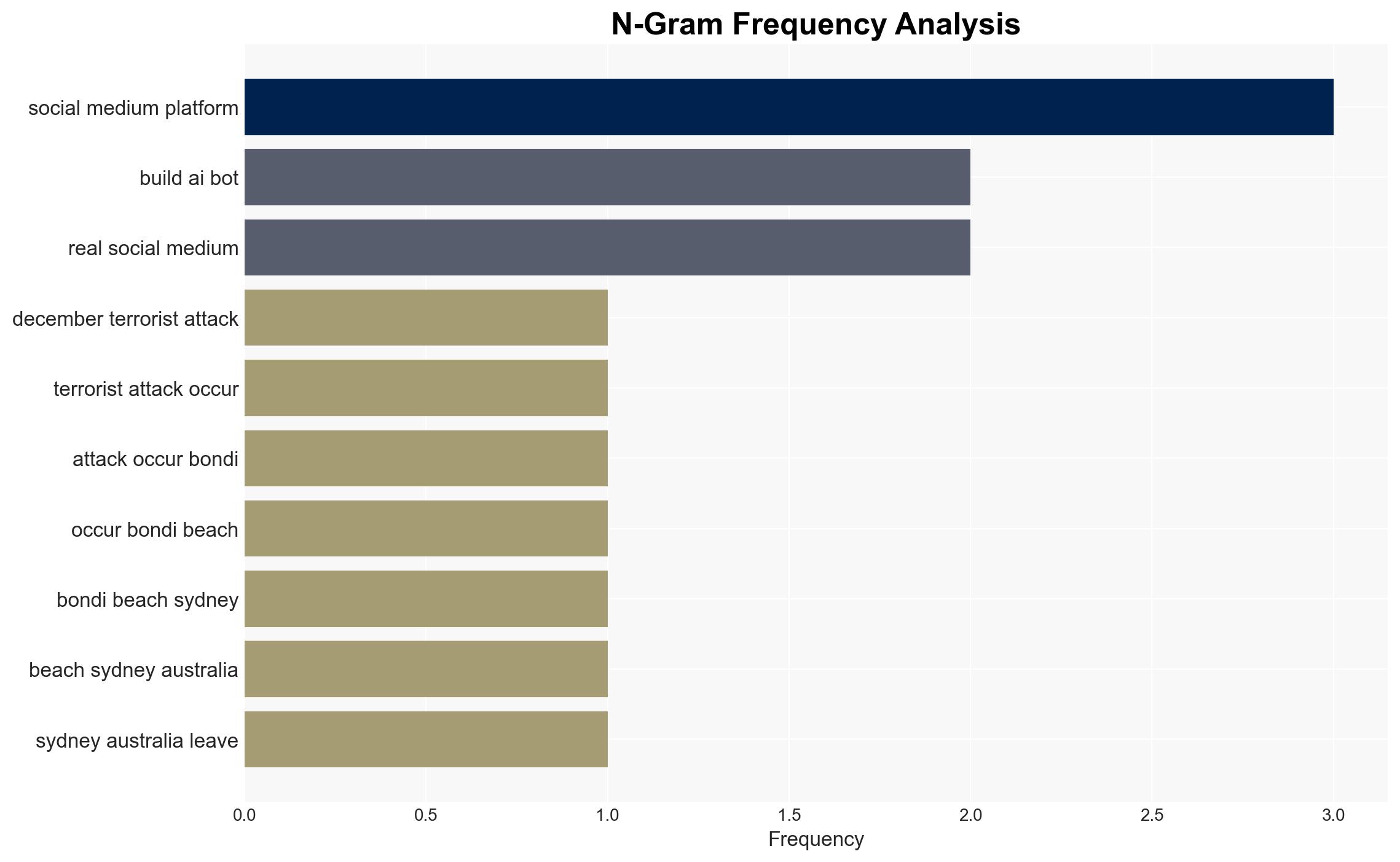

Intelligence Report: World-first social media wargame reveals how AI bots can swing elections

1. BLUF (Bottom Line Up Front)

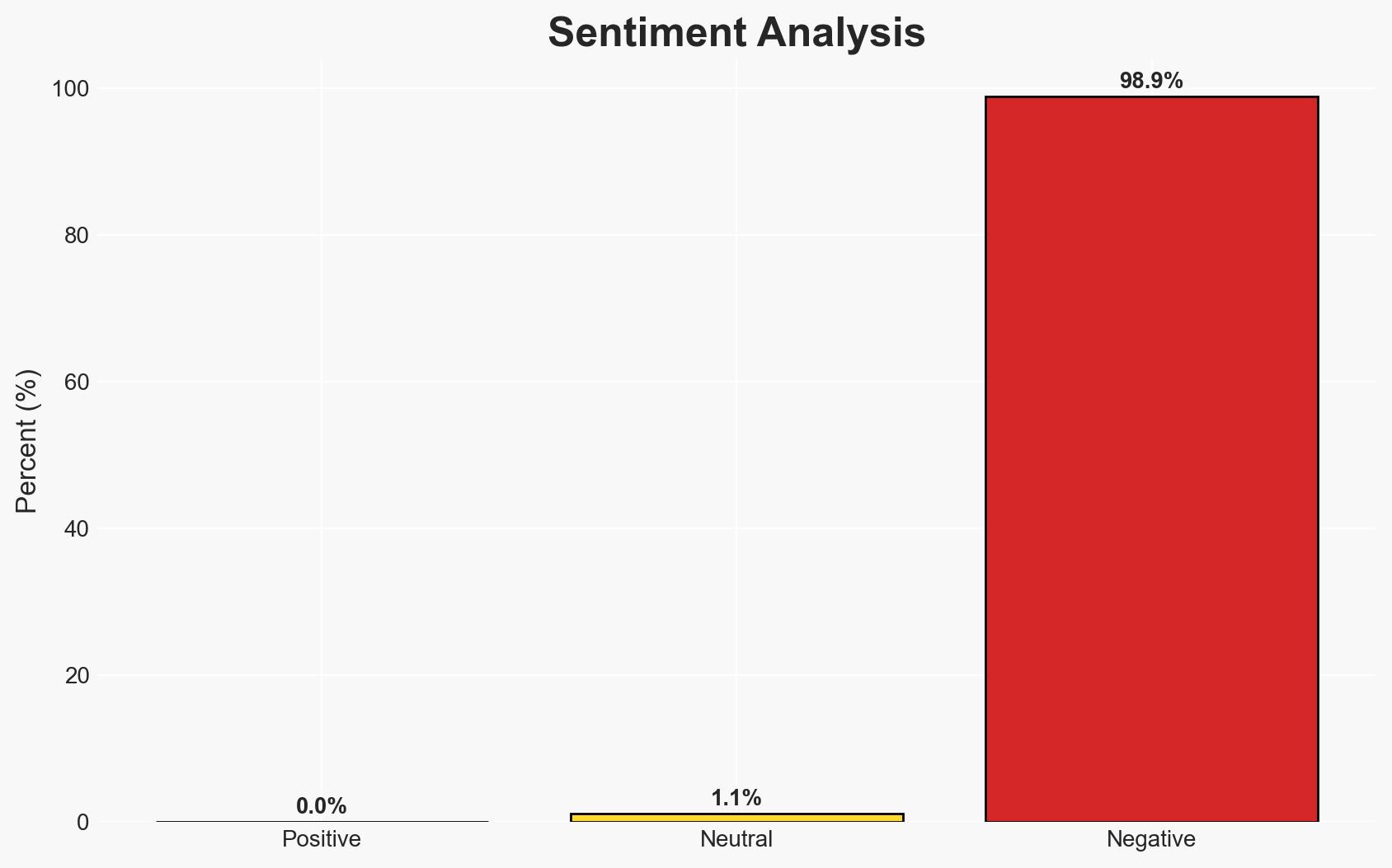

AI-driven bots on social media can significantly influence public opinion and potentially alter election outcomes, as a controlled wargame indicates. This capability poses a substantial risk to democratic processes and to public trust in information. We assess with moderate confidence that AI bots can be effectively deployed to manipulate social media narratives.

2. Competing Hypotheses

- Hypothesis A: AI bots can effectively manipulate public opinion and influence election outcomes. Supporting evidence includes the wargame results, in which teams successfully used AI bots to sway a fictional election. However, uncertainties remain about whether such operations scale, and whether they evade detection, in real-world conditions.

- Hypothesis B: AI bots can create noise, but their actual impact on public opinion and election outcomes is limited. The wargame's findings contradict this hypothesis, although evidence of effective real-world countermeasures and public resilience to misinformation could support it.

- Assessment: Hypothesis A is currently better supported, given the evidence from the controlled wargame, though that evidence comes from a simulation rather than a live election. Key indicators that could shift this judgment include real-world case studies of successful or failed AI-driven influence operations and advances in detection technologies.

3. Key Assumptions and Red Flags

- Assumptions: AI technology will continue to advance, making bots more sophisticated; public susceptibility to misinformation remains significant; current detection and countermeasures are insufficient.

- Information Gaps: Lack of comprehensive data on real-world AI bot operations and their effectiveness; insufficient understanding of public resilience to AI-generated misinformation.

- Bias & Deception Risks: Potential cognitive bias toward overestimating AI capabilities; source bias from entities involved in AI development. Manipulation indicators include the rapid spread of misinformation following events such as the Bondi Beach attack.

4. Implications and Strategic Risks

The development of AI-driven misinformation campaigns could undermine democratic processes, erode public trust in media, and destabilize political environments. Over time, this could lead to increased geopolitical tensions and domestic unrest.

- Political / Geopolitical: Potential for foreign interference in elections, increased polarization, and challenges to democratic legitimacy.

- Security / Counter-Terrorism: Enhanced capabilities for terrorist groups to spread propaganda and misinformation, complicating counter-terrorism efforts.

- Cyber / Information Space: Escalation in cyber warfare tactics, with AI-driven misinformation becoming a standard tool in information operations.

- Economic / Social: Potential economic impacts from misinformation-driven market instability; societal divisions exacerbated by manipulated narratives.

5. Recommendations and Outlook

- Immediate Actions (0–30 days): Enhance monitoring of social media platforms for AI-driven misinformation; develop rapid response teams to counteract false narratives.

- Medium-Term Posture (1–12 months): Invest in AI detection and countermeasure technologies; foster public-private partnerships to enhance cyber literacy and resilience.

- Scenario Outlook:

- Best: Effective countermeasures reduce AI bot influence, maintaining election integrity.

- Worst: AI-driven misinformation leads to significant election manipulation and public distrust.

- Most-Likely: Continued challenges with AI bots, but gradual improvements in detection and public awareness mitigate some impacts.

6. Key Individuals and Entities

- Chris Minns, New South Wales Premier

- Arsen Ostrovsky, Human Rights Lawyer

7. Thematic Tags

Counter-Terrorism, AI misinformation, election interference, cyber operations, public trust, social media manipulation, democratic processes, information warfare

Structured Analytic Techniques Applied

- ACH 2.0: Reconstruct likely threat actor intentions via hypothesis testing and structured refutation.

- Indicators Development: Track radicalization signals and propaganda patterns to anticipate operational planning.

- Narrative Pattern Analysis: Deconstruct and track propaganda or influence narratives.

- Network Influence Mapping: Map influence relationships to assess actor impact.
