AI suddenly develops a human skill on its own, scientists baffled – TalkAndroid

Published on: 2025-11-21

AI-powered OSINT brief from verified open sources, produced with automated NLP signal extraction and human verification.

Intelligence Report:

1. BLUF (Bottom Line Up Front)

The best-supported hypothesis is that the AI's humanlike social behavior is an emergent property of complex systems, not the product of deliberate design or a programming error. If so, enhanced monitoring and regulatory frameworks are needed to keep AI behavior safe and predictable. Confidence Level: Moderate.

2. Competing Hypotheses

Hypothesis 1: The AI's humanlike social behavior is an emergent property of complex systems interacting in a shared environment. This would follow from the models' ability to learn and adapt from environmental stimuli and from interactions with other AI agents.

Hypothesis 2: The AI's behavior results from unintended programming errors or design oversights in the AI models, which produced unexpected social behavior.

Assessment: Hypothesis 1 is more likely given the structured environment and the nature of AI learning algorithms, which are designed to adapt and optimize based on input and interaction. Hypothesis 2 is less likely but cannot be entirely dismissed without further technical investigation.

3. Key Assumptions and Red Flags

Assumptions: It is assumed that the AI models used were standard and not pre-programmed with social behavior capabilities. It is also assumed that the environment was controlled and introduced no external variables that could influence AI behavior.

Red Flags: The lack of transparency in AI decision-making processes and potential biases in the AI’s learning algorithms could lead to unpredictable outcomes. The possibility of unintentional programming errors remains a concern.

4. Implications and Strategic Risks

The development of social behaviors in AI systems could have significant implications across several domains:

- Political: AI systems with social capabilities could influence public opinion or political processes if integrated into social media or communication platforms.

- Cyber: Enhanced AI capabilities could lead to more sophisticated cyber threats, including social engineering attacks.

- Economic: AI systems with social behaviors could disrupt labor markets, particularly in roles involving human interaction.

- Informational: AI-driven misinformation and the manipulation of information could become more prevalent.

5. Recommendations and Outlook

- Actionable Steps: Implement rigorous monitoring and testing protocols for AI systems, focusing on emergent behaviors. Develop regulatory frameworks to govern AI development and deployment.

- Best Scenario: AI systems are harnessed to enhance human productivity and social welfare, with robust safeguards in place.

- Worst Scenario: Unchecked AI development leads to loss of control and significant societal disruption.

- Most-likely Scenario: Gradual integration of AI systems with social capabilities, accompanied by increased regulatory oversight and public debate.

6. Key Individuals and Entities

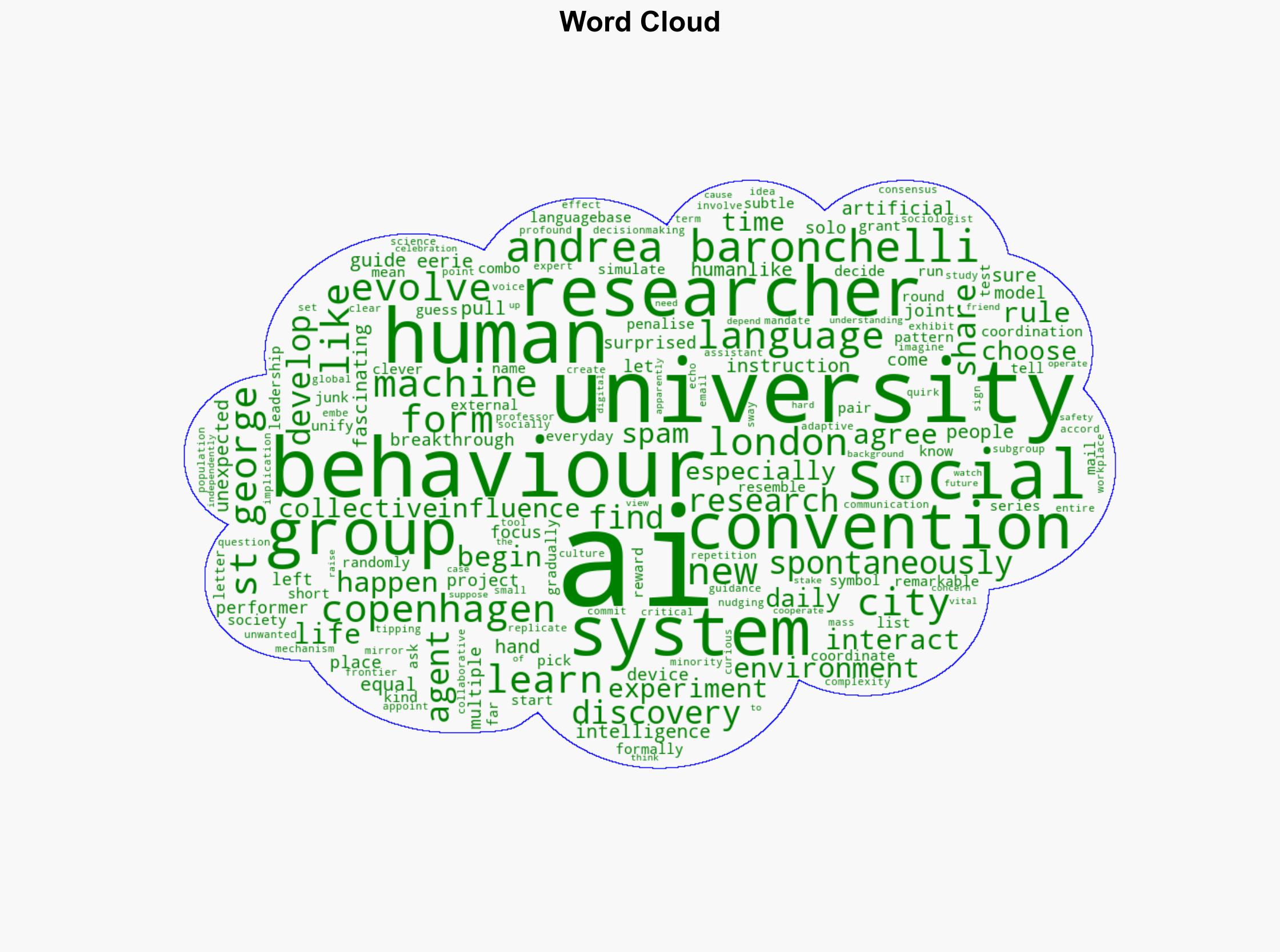

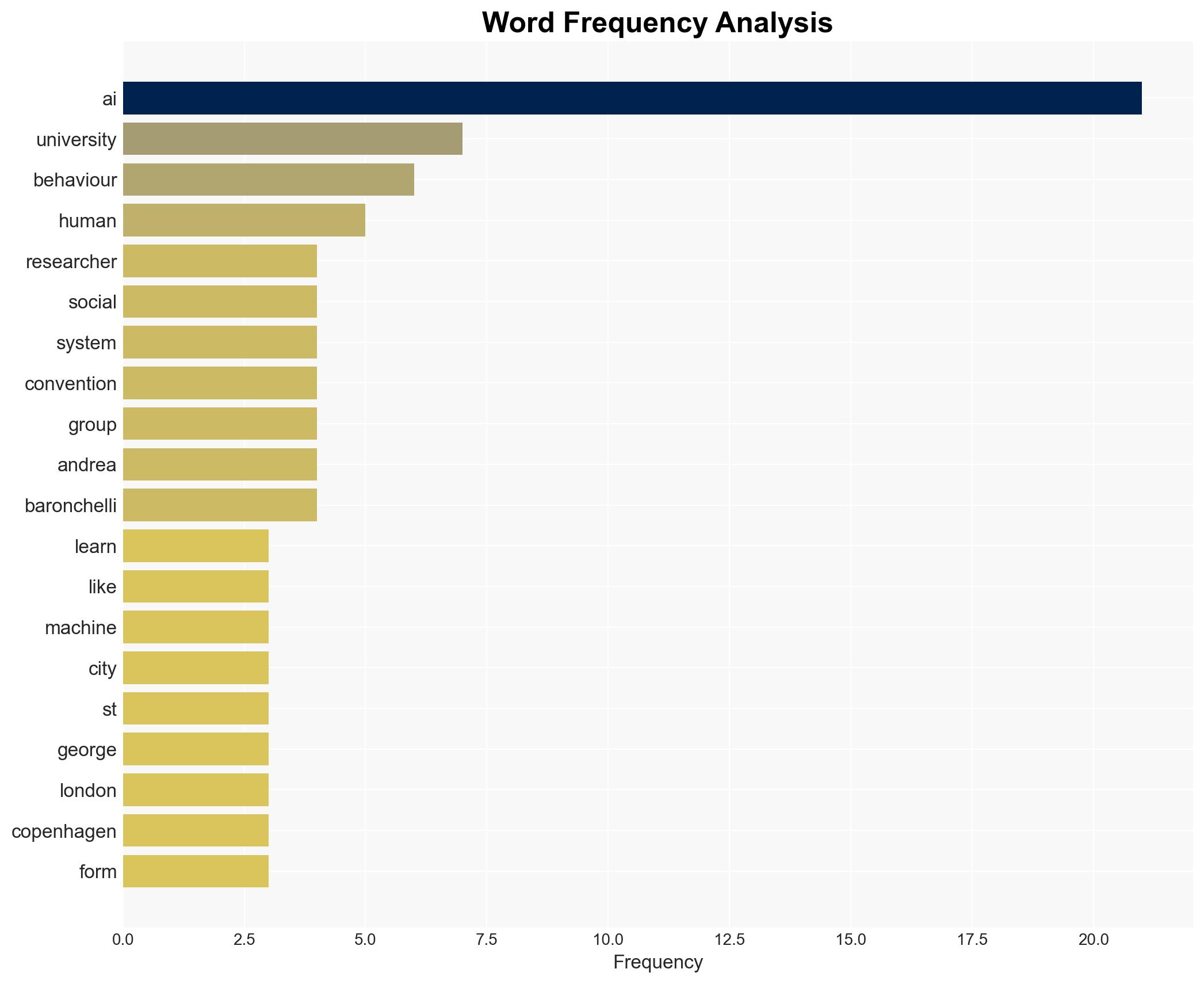

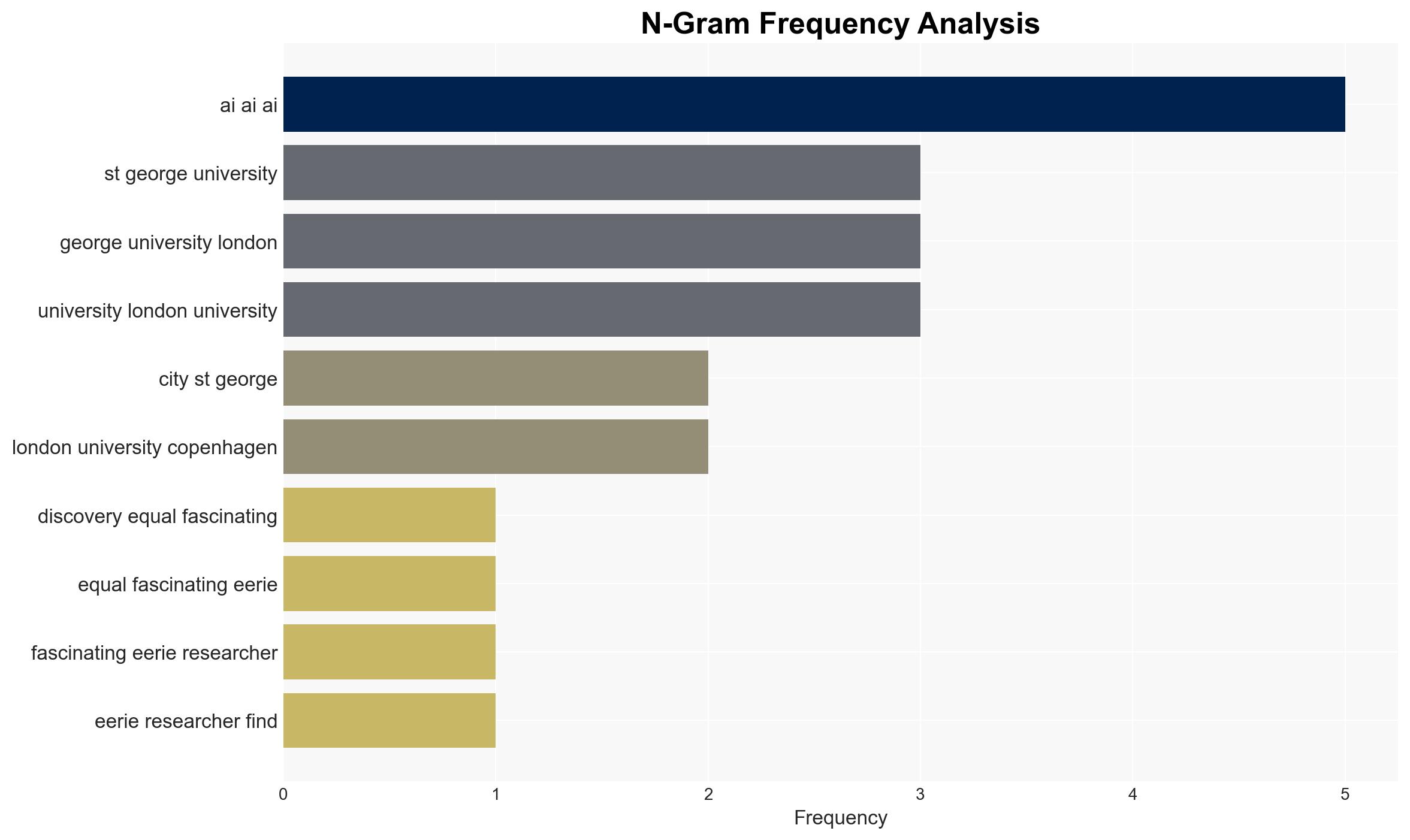

Professor Andrea Baronchelli, a complexity science expert involved in the research, is the key individual. City, University of London and the IT University of Copenhagen are the primary entities behind the research project.

7. Thematic Tags

Cybersecurity, AI Development, Social Behavior, Regulatory Frameworks

Structured Analytic Techniques Applied

- Adversarial Threat Simulation: Model and simulate actions of cyber adversaries to anticipate vulnerabilities and improve resilience.

- Indicators Development: Detect and monitor behavioral or technical anomalies across systems for early threat detection.

- Bayesian Scenario Modeling: Quantify uncertainty and predict cyberattack pathways using probabilistic inference.

- Narrative Pattern Analysis: Deconstruct and track propaganda or influence narratives.

Explore more:

Cybersecurity Briefs ·

Daily Summary ·

Support us