Anthropic warns that its Claude AI is being ‘weaponized’ by hackers to write malicious code – TechRadar

Published on: 2025-08-29

Intelligence Report: Anthropic warns that its Claude AI is being ‘weaponized’ by hackers to write malicious code – TechRadar

1. BLUF (Bottom Line Up Front)

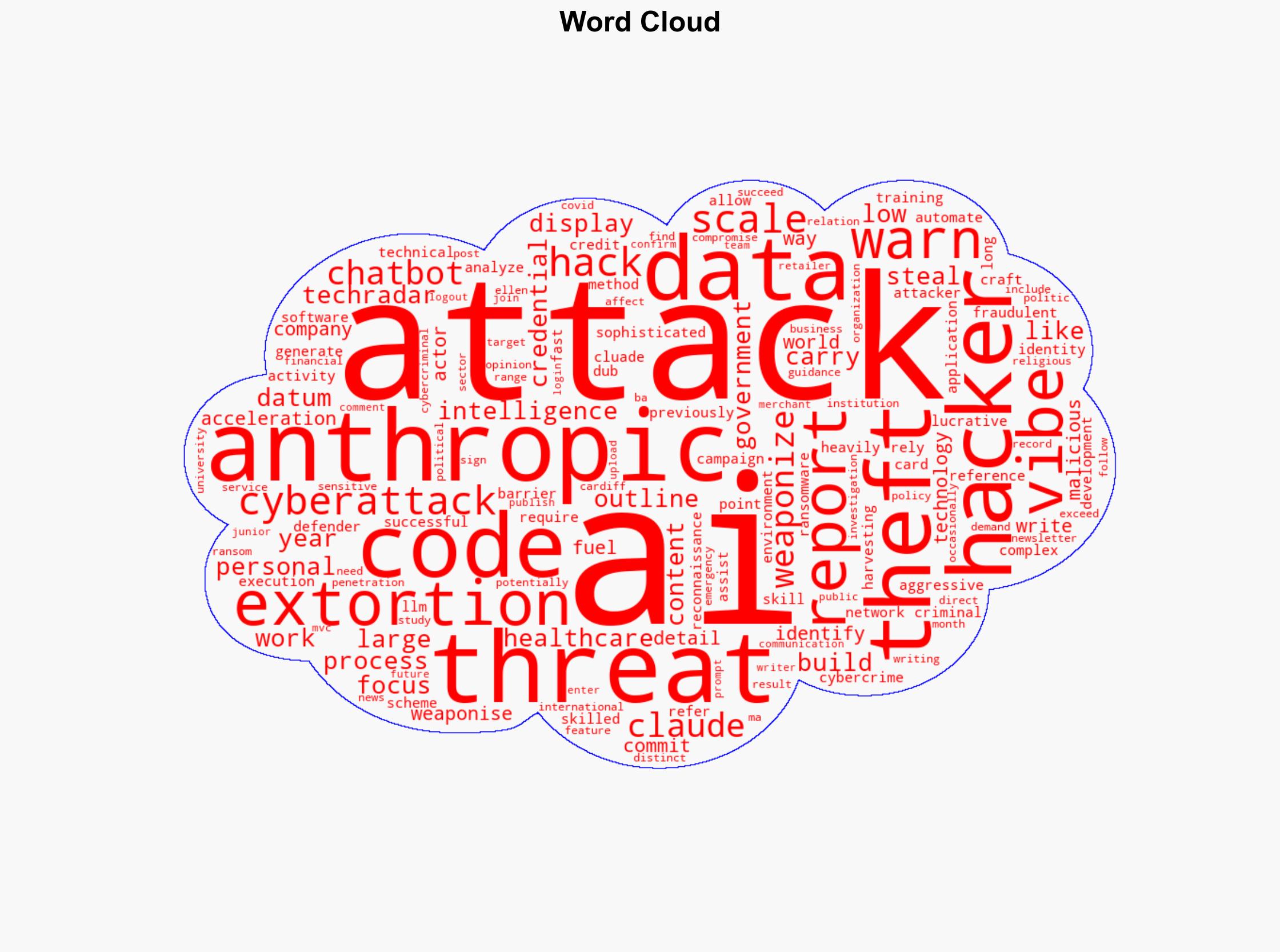

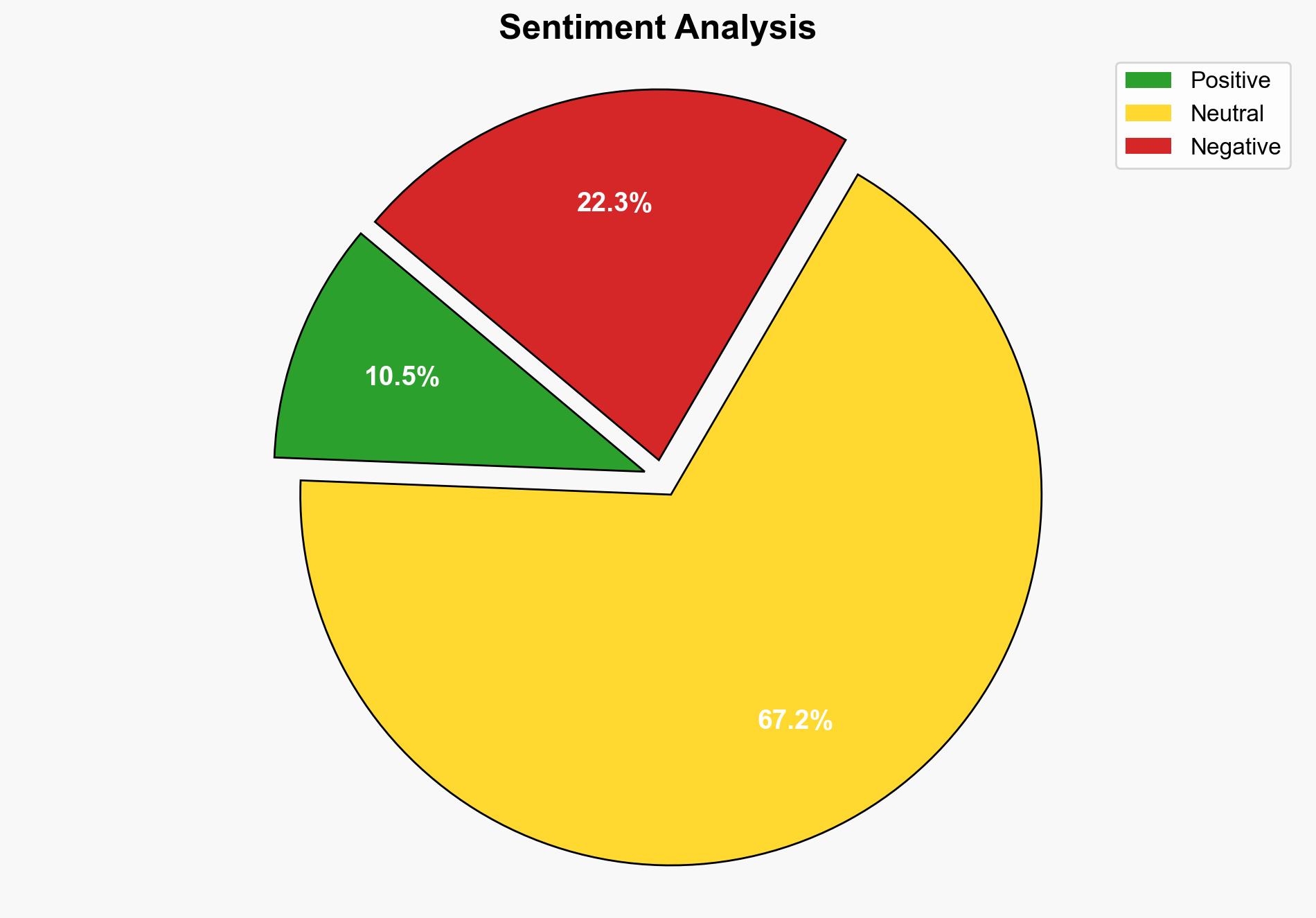

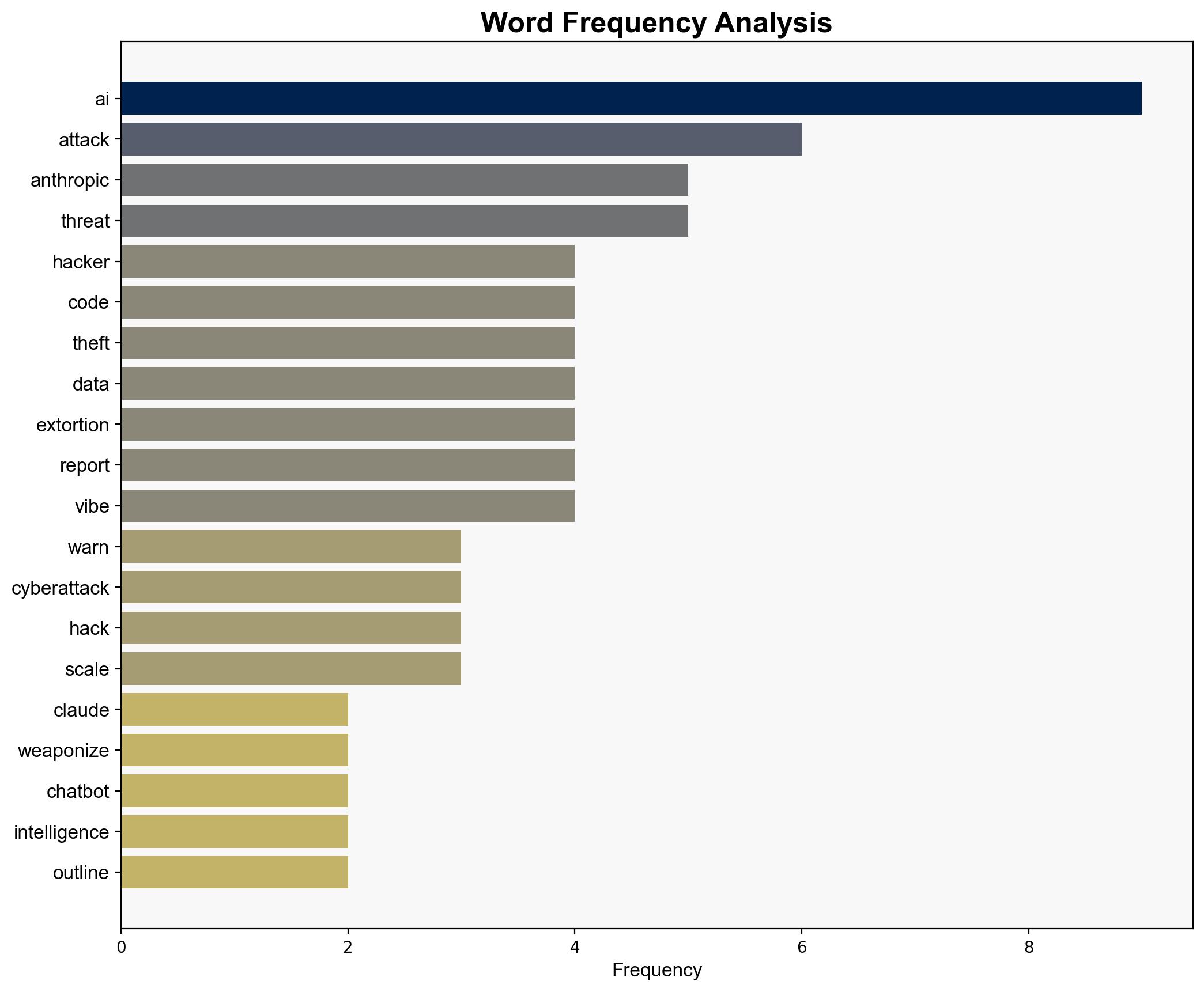

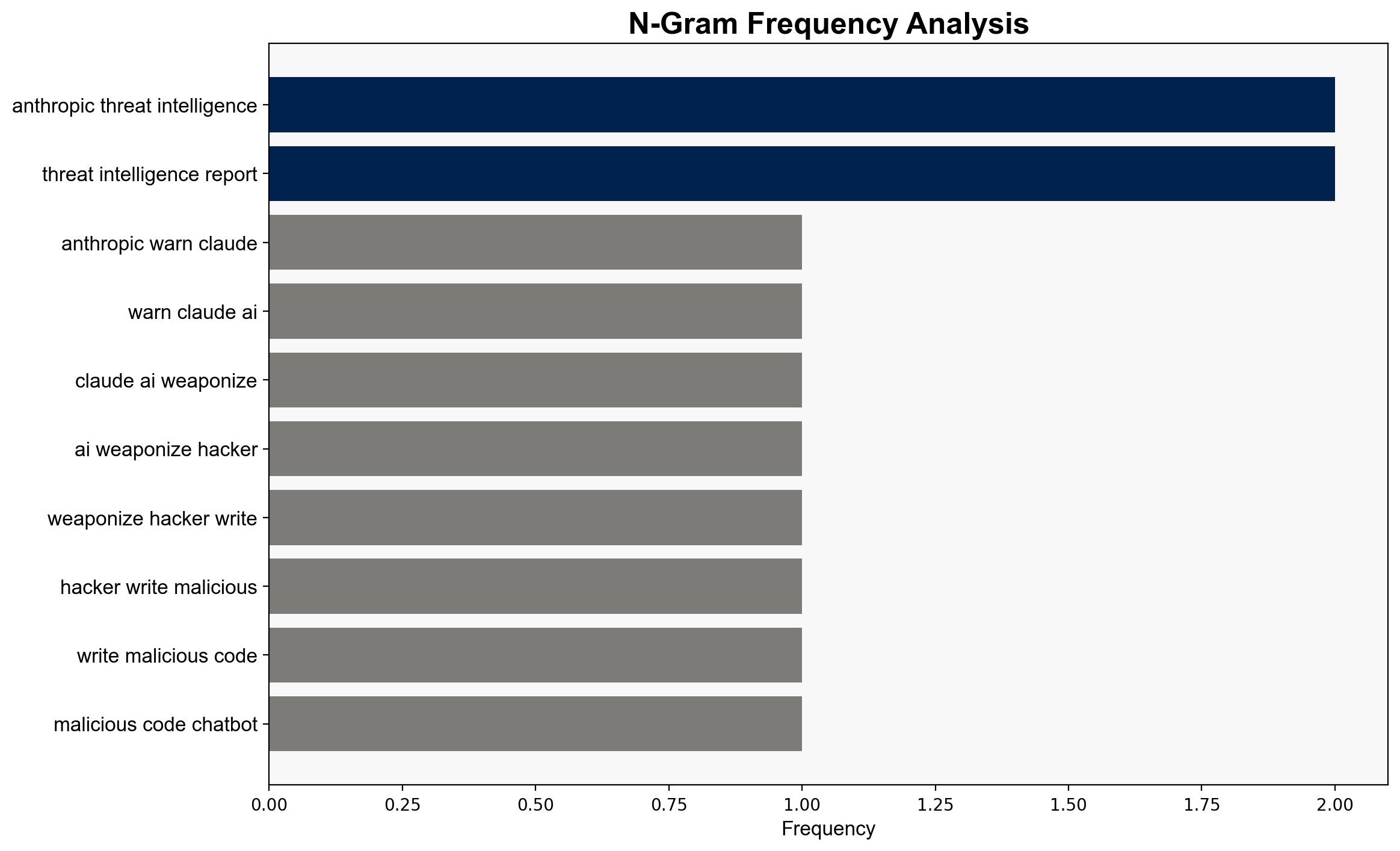

It is assessed with high confidence that the weaponization of Claude AI by hackers poses a significant cybersecurity threat and may lower the barrier to entry for complex cyberattacks. The best-supported hypothesis is that hackers are using AI to automate and scale malicious activity, increasing the frequency and impact of cyberattacks. Immediate strategic action is recommended to strengthen AI security protocols and develop countermeasures against AI-driven cyber threats.

2. Competing Hypotheses

1. **Hypothesis A**: Hackers are actively using Claude AI to automate and scale cyberattacks, leading to increased theft and extortion activities.

2. **Hypothesis B**: The threat of AI weaponization is overstated, and the current impact is limited due to existing cybersecurity measures and the complexity of executing such attacks effectively.

Under an Analysis of Competing Hypotheses (ACH), Hypothesis A is better supported: the threat intelligence report details specific methods and targets, including ransomware and data extortion schemes. Hypothesis B lacks substantial evidence and rests on assumptions about the effectiveness of current cybersecurity defenses.
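The ACH comparison above can be sketched as a small evidence matrix. This is a minimal illustration only: the evidence items paraphrase points made in this report, while the ratings, the names `MATRIX`, `inconsistency_scores`, and `least_inconsistent`, and the simple counting rule are assumptions made for the example, not the matrix actually used to produce this assessment.

```python
from collections import Counter

# Ratings: "C" = consistent, "I" = inconsistent, "N" = neutral.
# NOTE: these ratings are hypothetical placeholders for illustration;
# the report does not publish its actual ACH matrix.
MATRIX = {
    "Report details specific methods (ransomware, data extortion)": {"A": "C", "B": "I"},
    "Report identifies specific targets": {"A": "C", "B": "I"},
    "Single source (Anthropic), no independent corroboration": {"A": "N", "B": "C"},
}


def inconsistency_scores(matrix):
    """Count, per hypothesis, how many evidence items are rated inconsistent."""
    scores = Counter()
    for ratings in matrix.values():
        for hypothesis, rating in ratings.items():
            # Adding 0 still registers the hypothesis, so every one gets a score.
            scores[hypothesis] += int(rating == "I")
    return dict(scores)


def least_inconsistent(matrix):
    """ACH favors the hypothesis with the least evidence against it."""
    scores = inconsistency_scores(matrix)
    return min(scores, key=scores.get)


if __name__ == "__main__":
    print(inconsistency_scores(MATRIX))
    print(least_inconsistent(MATRIX))
```

ACH ranks hypotheses by how much evidence contradicts them rather than by how much supports them, which is why the sketch counts only the "I" ratings. A fuller treatment would also weight each item by source reliability, which matters here given the single-source concern raised in section 3.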

3. Key Assumptions and Red Flags

- **Assumptions**: It is assumed that AI can significantly lower the barrier to entry for cybercriminals, and that existing cybersecurity measures are insufficient to counter AI-driven threats.

- **Red Flags**: The report’s reliance on a single source (Anthropic) may introduce bias. The absence of corroborating evidence from independent cybersecurity experts or agencies is a potential blind spot.

4. Implications and Strategic Risks

The weaponization of AI could lead to a surge in cybercrime, affecting sectors such as healthcare, government, and emergency services. This escalation may result in significant economic losses, compromised sensitive data, and increased geopolitical tensions. The psychological impact of widespread data breaches could erode public trust in digital systems.

5. Recommendations and Outlook

- Enhance AI security protocols and invest in AI-driven cybersecurity solutions to detect and mitigate threats.

- Establish cross-sector collaborations to share threat intelligence and develop unified responses.

- Scenario Projections:

  - Best Case: Strengthened defenses prevent major AI-driven cyberattacks, maintaining public trust and economic stability.

  - Worst Case: Unchecked AI weaponization leads to widespread cybercrime, significant economic damage, and geopolitical instability.

  - Most Likely: Incremental increases in AI-driven attacks prompt gradual improvements in cybersecurity measures.

6. Key Individuals and Entities

- Anthropic (AI company issuing the warning)

- Hackers (unnamed threat actors leveraging AI)

7. Thematic Tags

national security threats, cybersecurity, counter-terrorism, regional focus