Can wise heads fix the hard problem of AI policy – Crikey

Published on: 2025-10-08

Intelligence Report: Can wise heads fix the hard problem of AI policy – Crikey

1. BLUF (Bottom Line Up Front)

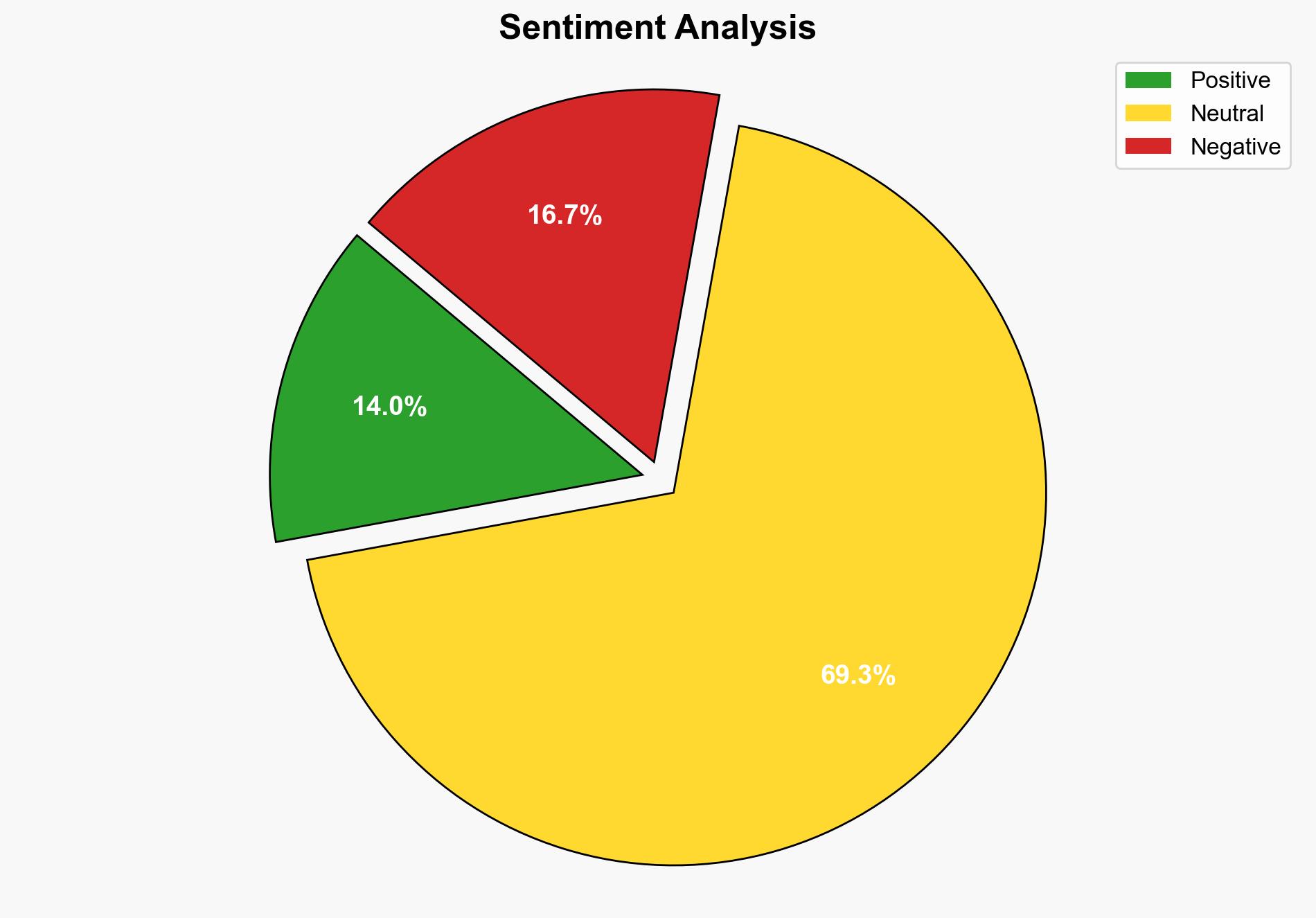

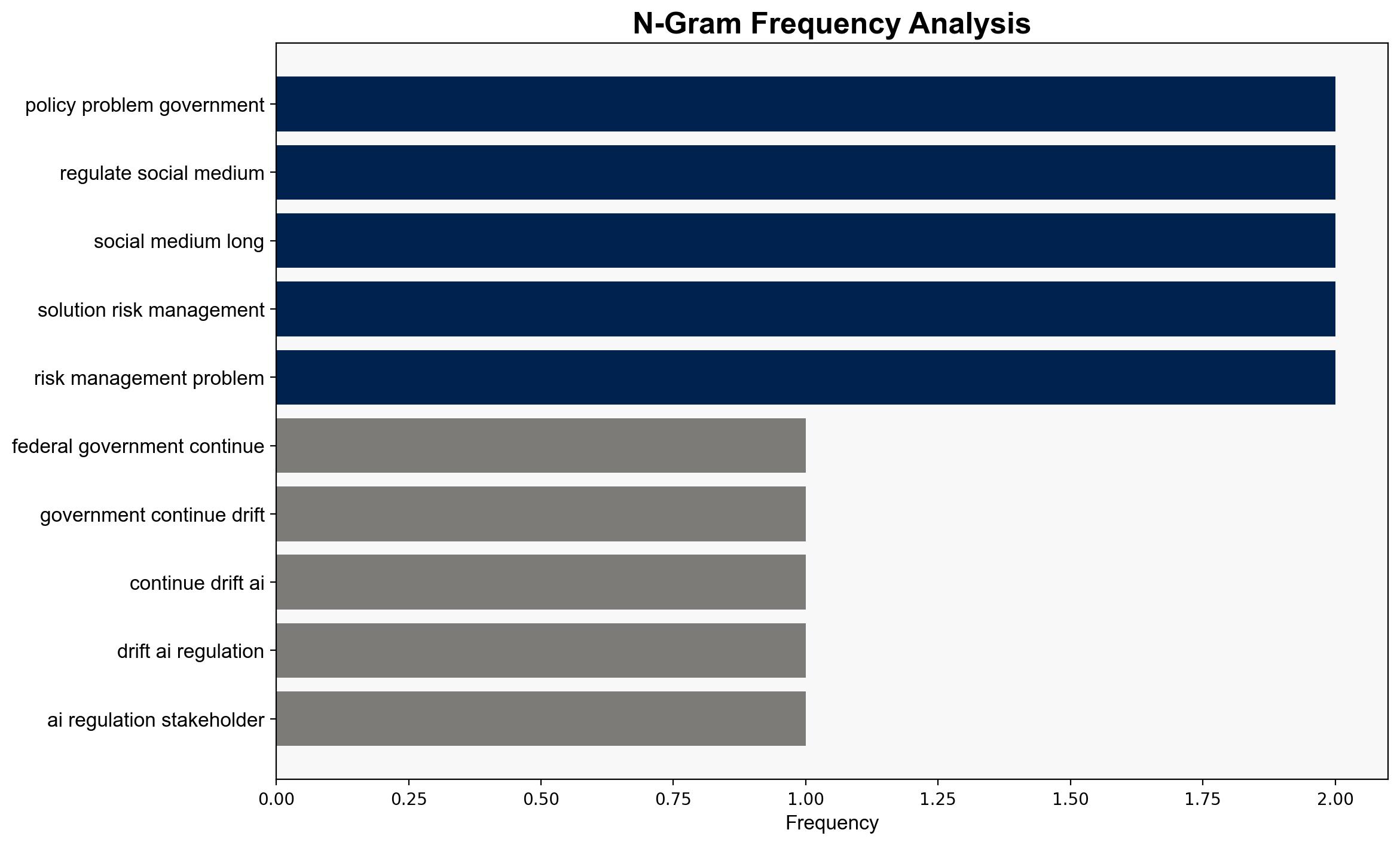

Strategic judgment: the federal government faces a significant challenge in formulating effective AI policy, because stakeholder interests conflict and AI technology is evolving rapidly. The best-supported hypothesis is that the government will struggle to implement a coherent AI policy, which could lead to economic and social disruption. Confidence level: Moderate. Recommended action: establish a dedicated AI regulatory body with cross-sector expertise to address emerging AI challenges proactively.

2. Competing Hypotheses

1. **Hypothesis A**: The government will successfully navigate AI policy challenges by balancing stakeholder interests and implementing effective regulations that mitigate risks while fostering innovation.

2. **Hypothesis B**: The government will fail to establish a coherent AI policy due to conflicting demands from stakeholders, resulting in economic and social disruptions as AI technologies advance unchecked.

Using ACH 2.0 (Analysis of Competing Hypotheses), Hypothesis B is better supported: there is currently no clear policy direction, and stakeholders such as AI companies, content creators, and unions are making diverse, often contradictory demands.

3. Key Assumptions and Red Flags

– **Assumptions**: AI technology will continue to advance rapidly, and stakeholder interests will remain divergent.

– **Red Flags**: The absence of a clear governmental policy framework and the potential for AI companies to influence policy disproportionately.

– **Blind Spots**: The analysis does not address how international regulatory developments could affect domestic policy.

4. Implications and Strategic Risks

– **Economic Risks**: Unregulated AI could lead to market instability, job displacement, and increased inequality.

– **Social Risks**: AI-driven disinformation and erosion of personal relationships could undermine social cohesion.

– **Geopolitical Risks**: Lack of regulation may weaken national security by allowing foreign entities to exploit AI technologies.

– **Cyber Risks**: Increased AI integration without proper oversight could lead to vulnerabilities in critical infrastructure.

5. Recommendations and Outlook

- Establish a dedicated AI regulatory body with cross-sector expertise.

- Engage in international cooperation to harmonize AI regulations.

- Scenario Projections:

  - **Best Case**: Effective regulation leads to balanced innovation and risk mitigation.

  - **Worst Case**: Regulatory failure results in economic and social chaos.

  - **Most Likely**: Partial regulation with ongoing stakeholder conflicts and moderate disruptions.

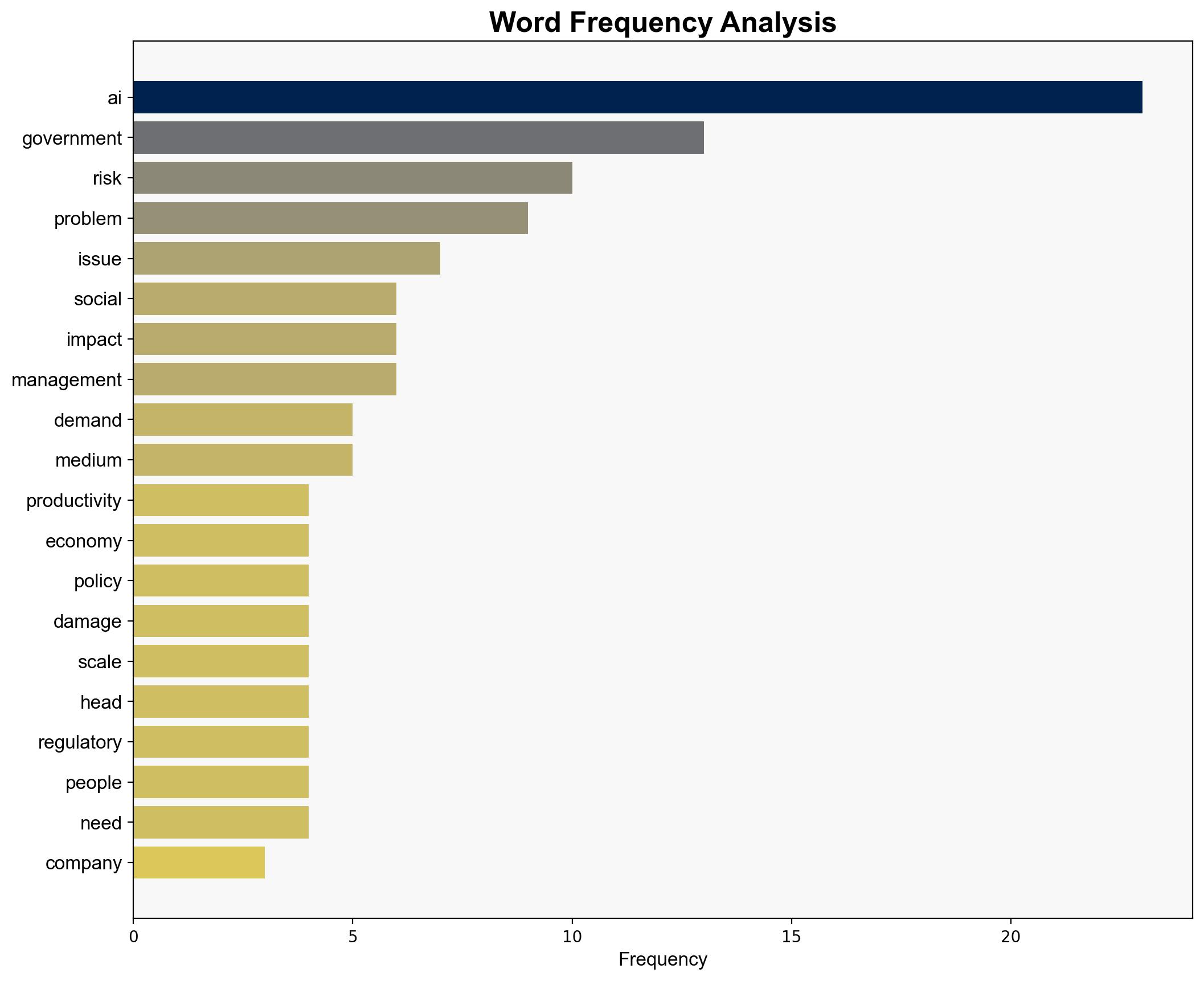

6. Key Individuals and Entities

– Richard Holden: Highlighted the risk of an AI bubble.

– Mordy Bromberg: Involved in scoping regulatory challenges.

– Andrew Leigh: Discussed AI’s role in productivity and potential job impacts.

7. Thematic Tags

national security threats, cybersecurity, economic policy, AI regulation, stakeholder management