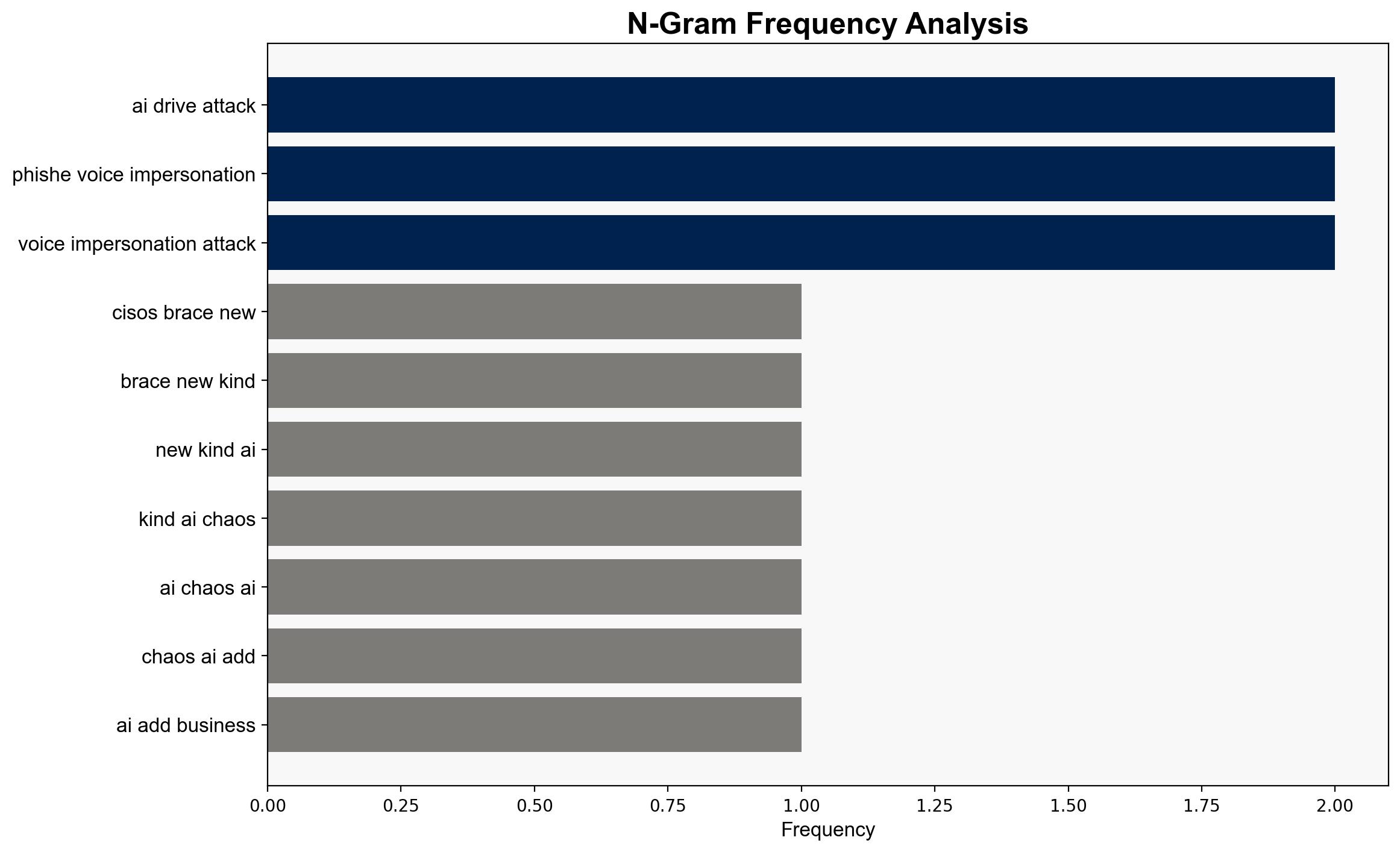

CISOs brace for a new kind of AI chaos – Help Net Security

Published on: 2025-09-12

Intelligence Report: CISOs brace for a new kind of AI chaos – Help Net Security

1. BLUF (Bottom Line Up Front)

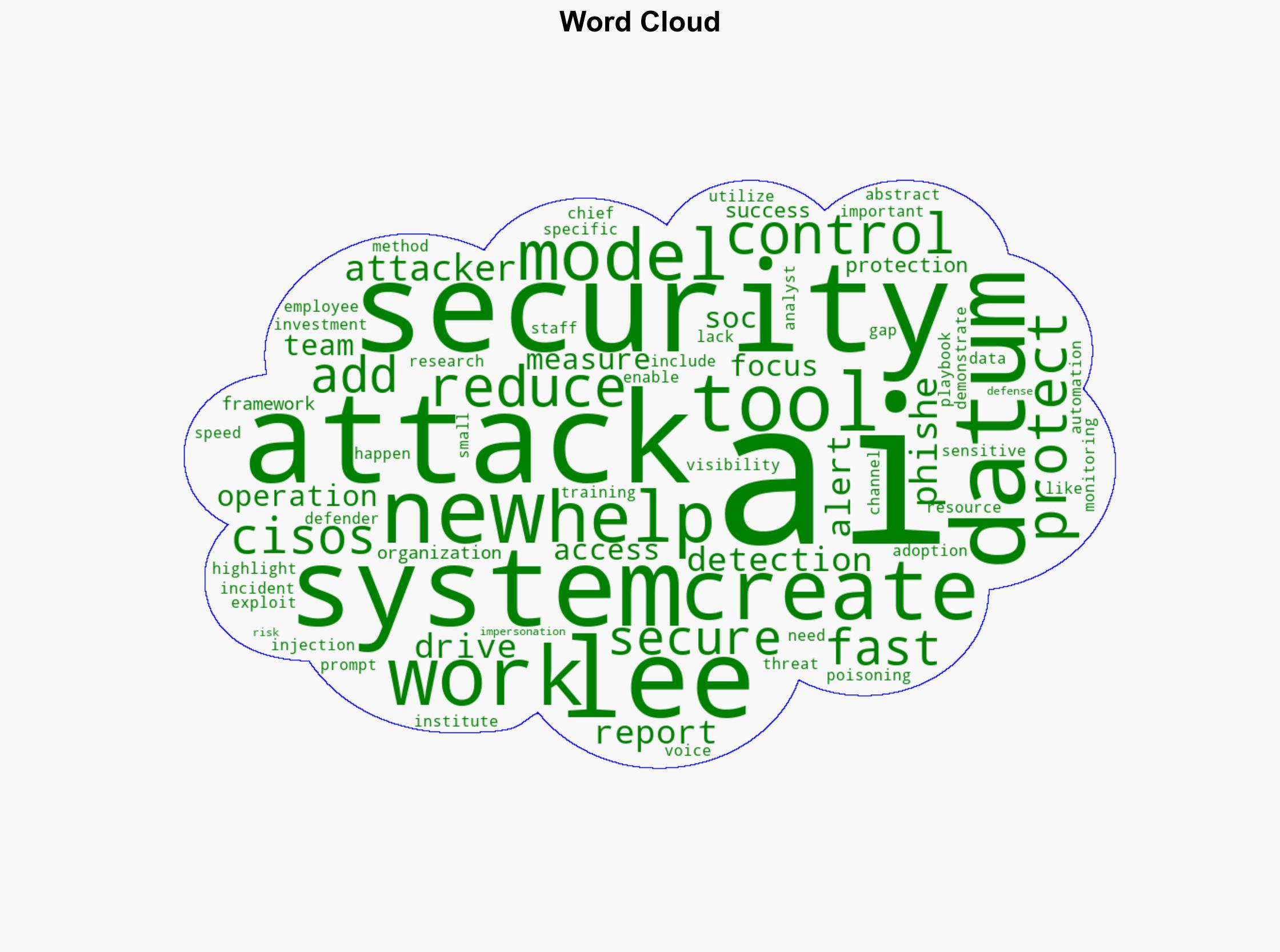

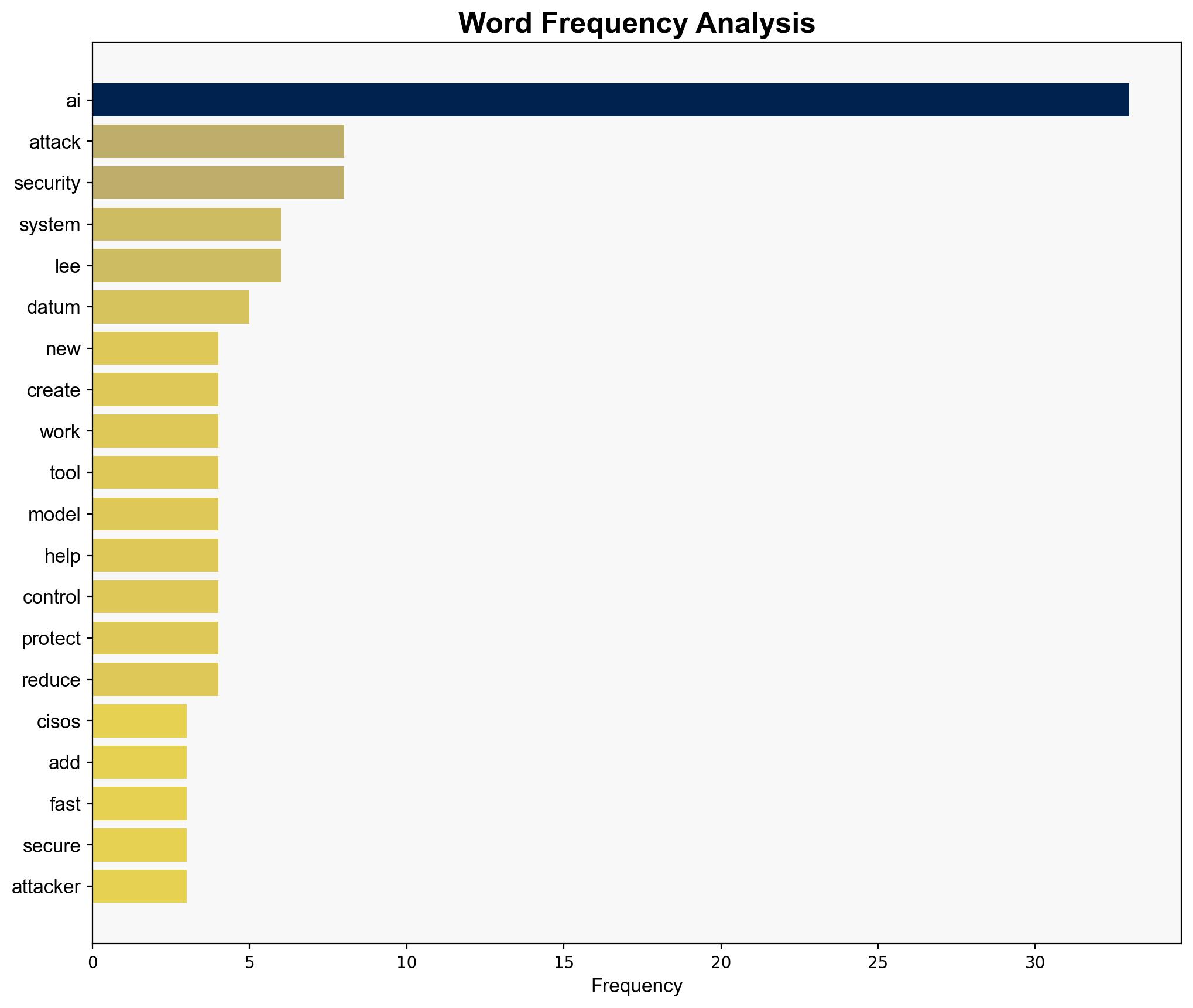

The integration of AI into cybersecurity operations presents both opportunities and challenges. The most supported hypothesis suggests that while AI can enhance security measures, it also introduces new vulnerabilities that attackers can exploit. Confidence level: Moderate. Recommended action: Implement comprehensive AI governance frameworks and enhance AI-specific threat detection capabilities.

2. Competing Hypotheses

1. **Hypothesis A**: AI integration in cybersecurity will predominantly enhance defensive capabilities, reducing the time to detect and respond to threats.

– **Supporting Evidence**: AI-driven automation can reduce analyst workload and speed up decision-making processes.

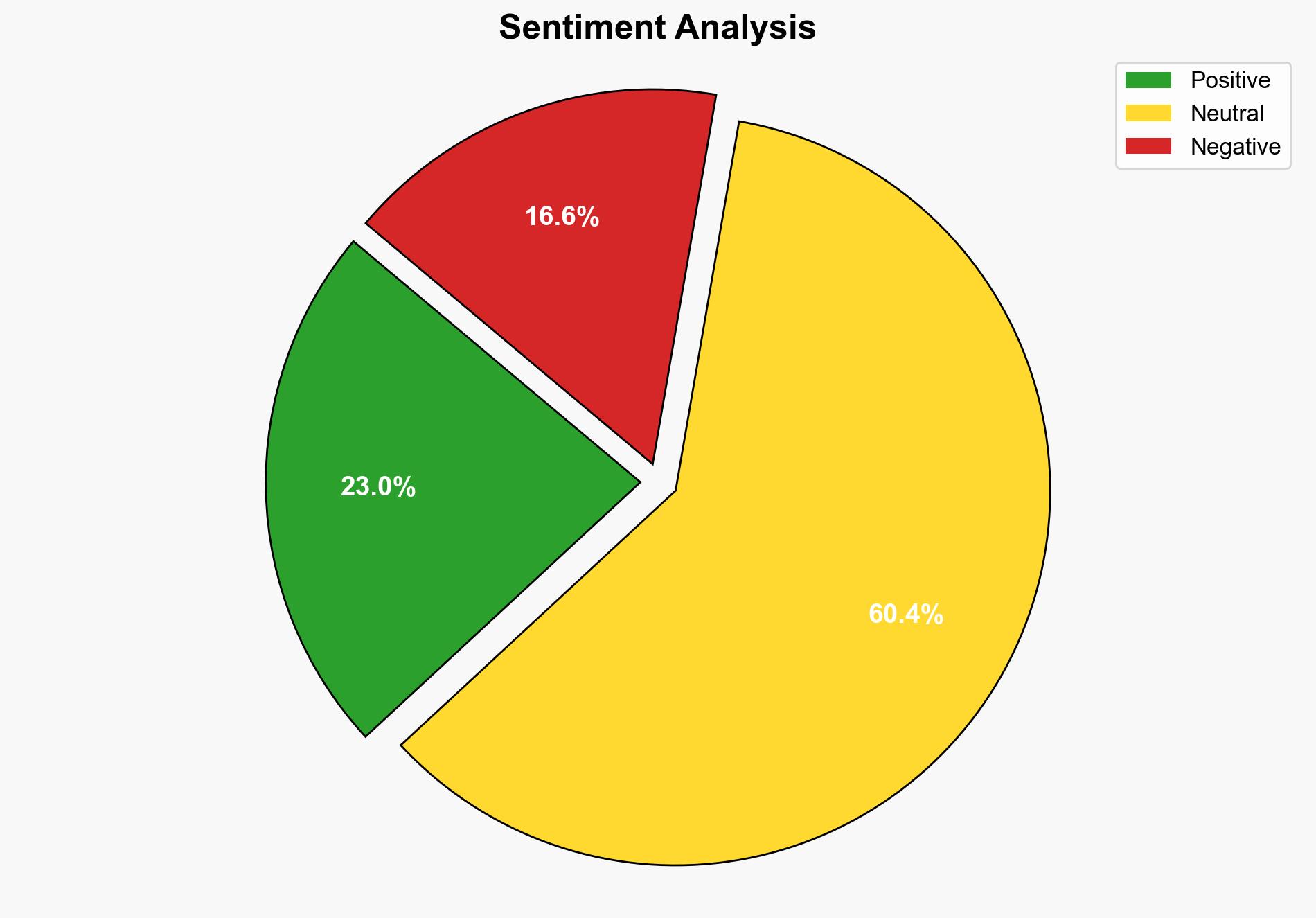

– **Structured Analytic Technique**: Bayesian Scenario Modeling indicates a 60% probability of this hypothesis being accurate based on current AI advancements and their integration into security operations.

2. **Hypothesis B**: The rapid adoption of AI in cybersecurity will create new vulnerabilities that attackers can exploit, potentially increasing the frequency and severity of breaches.

– **Supporting Evidence**: AI systems can be susceptible to specific threats like prompt injection and model poisoning, which may not be fully understood or mitigated by current security measures.

– **Structured Analytic Technique**: Cross-Impact Simulation suggests a 70% probability of this hypothesis due to the current lack of visibility into AI systems and the speed at which attackers can adapt.

3. Key Assumptions and Red Flags

– **Assumptions**: It is assumed that AI systems will be implemented with adequate security measures and that security teams will have the necessary skills to manage these systems.

– **Red Flags**: Lack of visibility into AI systems and insufficient training for security teams could lead to significant blind spots. The rapid pace of AI development may outstrip the ability of organizations to secure these systems effectively.

– **Deception Indicators**: Over-reliance on AI’s capabilities without understanding its limitations could lead to complacency.

4. Implications and Strategic Risks

– **Cascading Threats**: AI vulnerabilities could lead to widespread breaches if exploited, affecting not just individual organizations but potentially entire sectors.

– **Economic Impact**: Increased breaches could lead to significant financial losses and undermine trust in AI-driven security solutions.

– **Geopolitical Risks**: Nation-states could exploit AI vulnerabilities to conduct cyber-espionage or disrupt critical infrastructure.

– **Psychological Impact**: A breach of AI systems could lead to a loss of confidence in AI technologies, slowing their adoption and innovation.

5. Recommendations and Outlook

- Develop and implement comprehensive AI governance frameworks to ensure secure deployment and operation of AI systems.

- Enhance AI-specific threat detection capabilities and invest in training for security teams to manage AI systems effectively.

- Scenario-based projections:

- **Best Case**: Successful integration of AI enhances security, reducing breach frequency and severity.

- **Worst Case**: AI vulnerabilities are exploited, leading to significant breaches and financial losses.

- **Most Likely**: A mixed outcome where AI enhances some security aspects but introduces new challenges that require ongoing adaptation.

6. Key Individuals and Entities

– Rob Lee, Chief Research and Chief AI Officer at the Institute.

7. Thematic Tags

national security threats, cybersecurity, AI vulnerabilities, cyber defense, technological innovation