Cloudflare updates robots.txt for the AI era but publishers still want more bite against bots – Digiday

Published on: 2025-09-29

Intelligence Report: Cloudflare updates robots.txt for the AI era but publishers still want more bite against bots – Digiday

1. BLUF (Bottom Line Up Front)

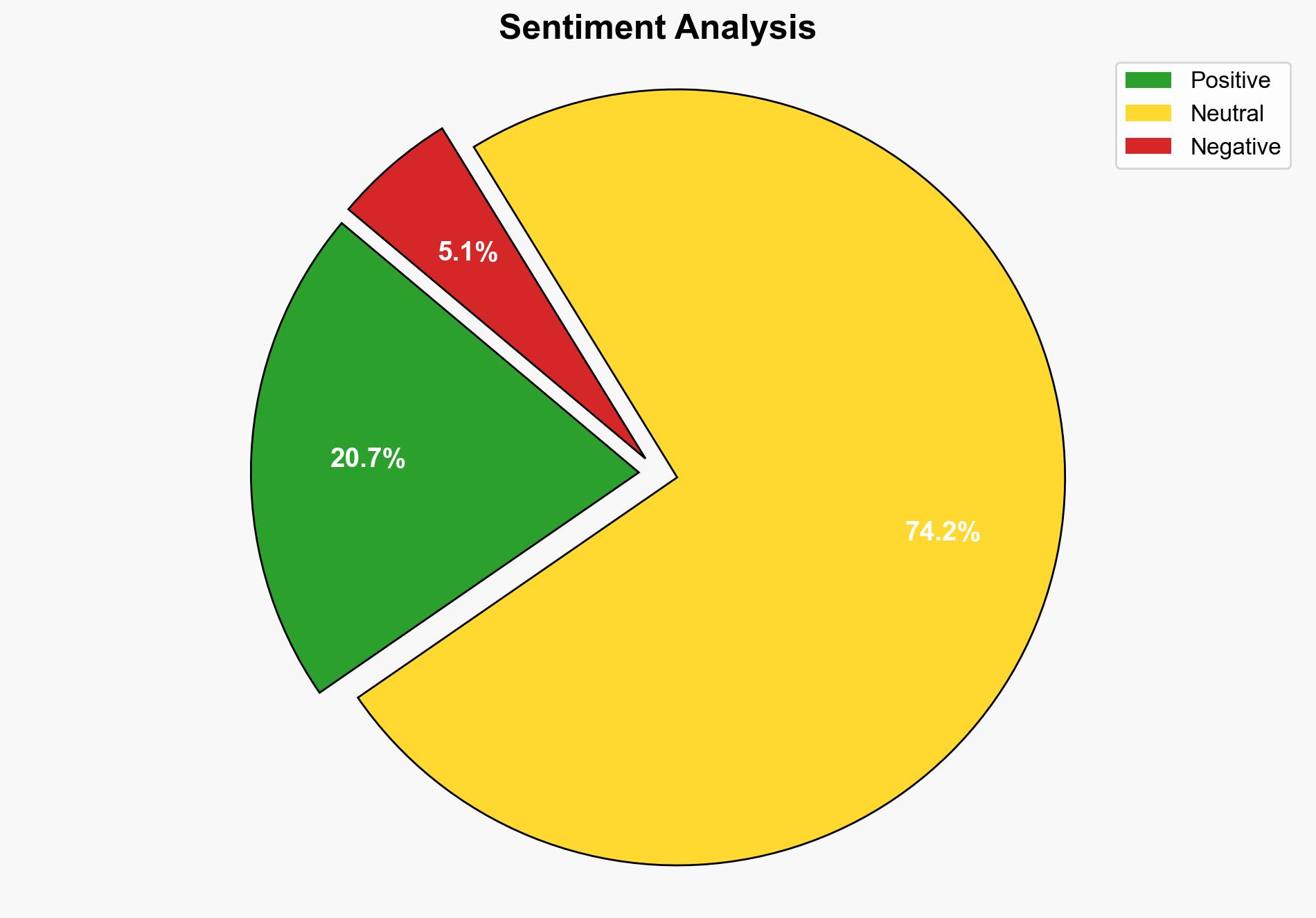

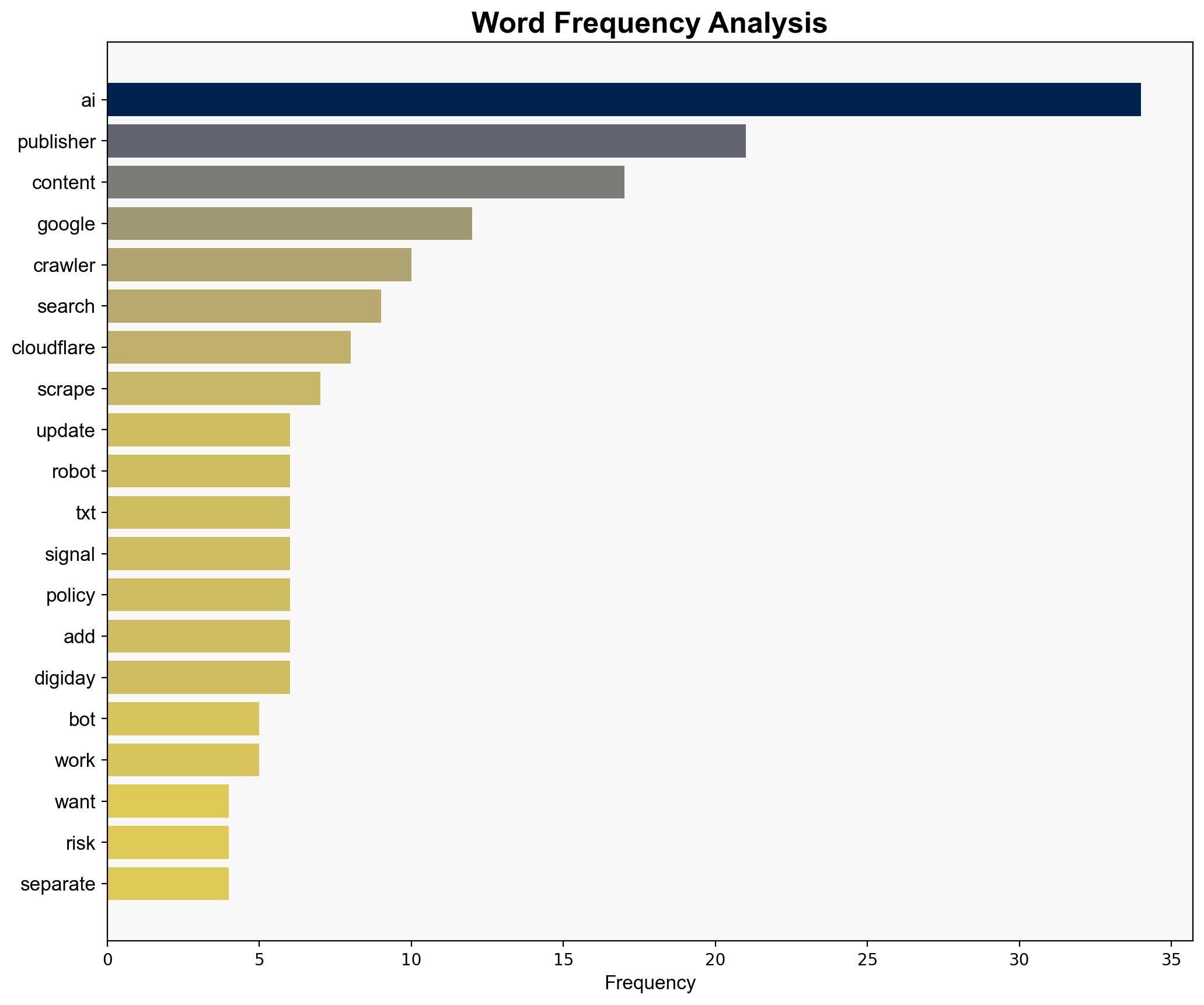

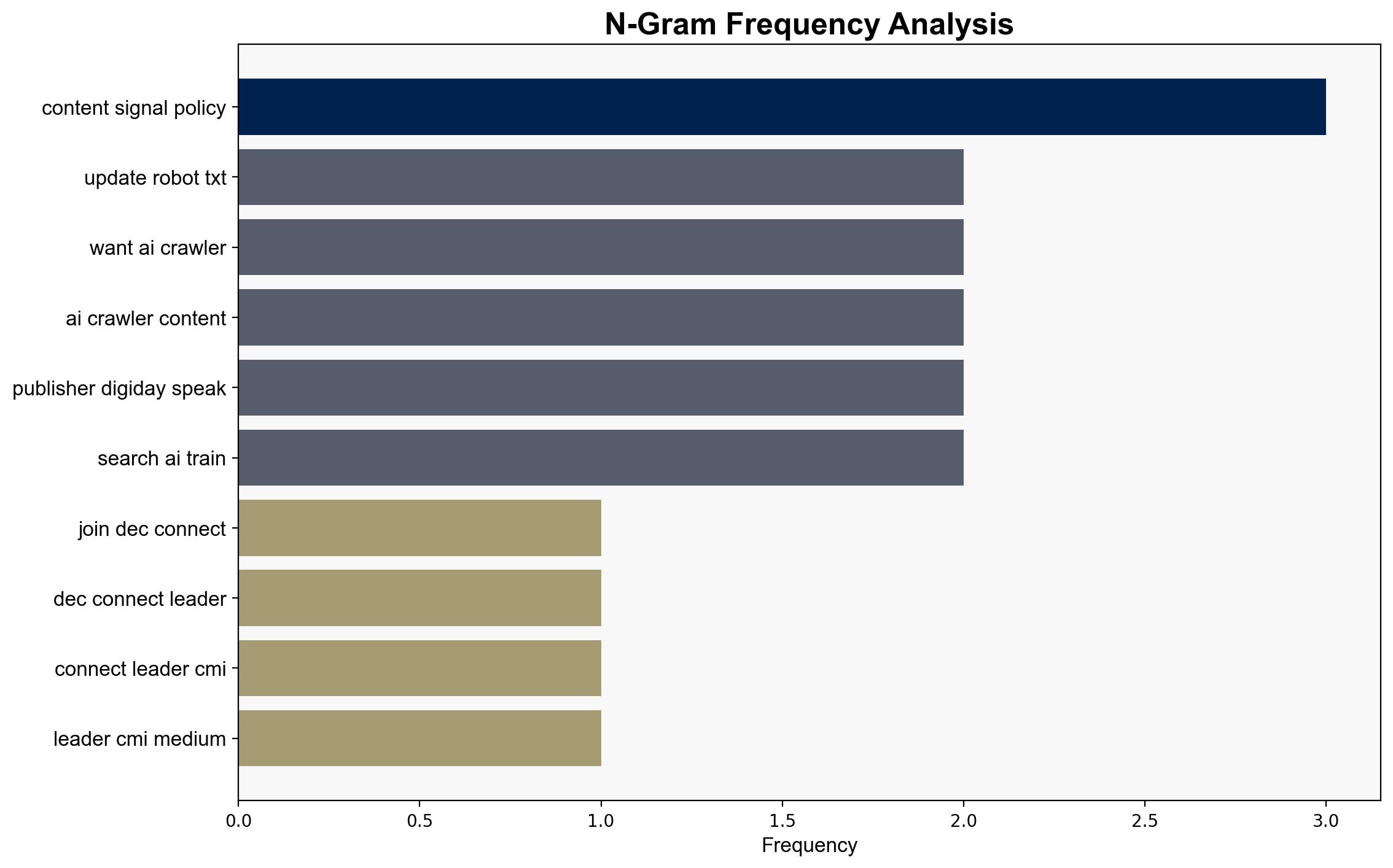

Cloudflare’s update to the robots.txt protocol aims to give publishers more control over AI crawlers, but significant challenges remain in enforceability and compliance. The most supported hypothesis is that while the update is a step towards transparency, it lacks the mechanisms to ensure AI platforms adhere to publishers’ preferences. Confidence Level: Moderate. Recommended action: Encourage development of industry-wide standards and enforceable mechanisms to protect publisher content from unauthorized AI scraping.

2. Competing Hypotheses

Hypothesis 1: Cloudflare’s update to robots.txt will effectively empower publishers to control AI crawler access, leading to better content protection and potential monetization opportunities.

Hypothesis 2: Despite Cloudflare’s update, AI platforms will continue to bypass publisher preferences due to the lack of enforceable compliance mechanisms, resulting in minimal impact on unauthorized content scraping.

Under ACH 2.0 (Analysis of Competing Hypotheses), Hypothesis 2 is better supported: the update lacks enforceability, and historical patterns show that AI platforms often disregard non-binding protocols.
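Why robots.txt counts as non-binding is visible in how crawlers consume it: the check runs inside the crawler's own code, and nothing on the publisher's side forces that check to happen. The minimal sketch below uses Python's standard-library `urllib.robotparser`. The crawler name `ExampleAIBot`, the URL, and the `honors_robots` flag are hypothetical stand-ins for a platform's policy choice, not any real crawler's setting.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical publisher robots.txt: block one named AI crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""


def robots_allows(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return what robots.txt says about this user agent fetching this URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


def crawler_will_fetch(robots_txt: str, user_agent: str, url: str,
                       honors_robots: bool) -> bool:
    """Model a crawler's decision: compliance is a flag the crawler itself controls."""
    if not honors_robots:
        # Nothing in the protocol prevents this branch; the file is never consulted.
        return True
    return robots_allows(robots_txt, user_agent, url)


if __name__ == "__main__":
    url = "https://publisher.example/articles/story"
    print(crawler_will_fetch(ROBOTS_TXT, "ExampleAIBot", url, honors_robots=True))   # False
    print(crawler_will_fetch(ROBOTS_TXT, "ExampleAIBot", url, honors_robots=False))  # True
```

The same file yields opposite outcomes depending only on a choice made by the crawler operator. This is the enforceability gap that Hypothesis 2 rests on.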

3. Key Assumptions and Red Flags

Assumptions:

– Publishers will universally adopt and correctly implement the updated robots.txt.

– AI platforms will voluntarily comply with the new signals.

Red Flags:

– Lack of enforceable compliance mechanisms.

– Potential for AI platforms to interpret signals differently, leading to inconsistent adherence.

– Historical disregard for non-binding protocols by AI platforms.
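Cloudflare's machine-readable signals make the interpretation risk easy to see. The sketch below assumes the `Content-Signal: search=yes, ai-train=no` line format and the `search`, `ai-input`, and `ai-train` signal names as described in Cloudflare's Content Signals Policy. Treat that exact syntax as an assumption to verify against Cloudflare's current documentation. The sketch parses those signals and shows that when a publisher leaves a signal unset, each crawler must supply its own default. The function names are hypothetical, and the parser deliberately ignores user-agent grouping to keep the example short.

```python
from typing import Dict

# Hypothetical robots.txt using the assumed Content-Signal syntax; ai-input is left unset.
ROBOTS_TXT = """\
User-Agent: *
Content-Signal: search=yes, ai-train=no
Allow: /
"""


def parse_content_signals(robots_txt: str) -> Dict[str, bool]:
    """Collect Content-Signal key=value pairs (simplified: ignores user-agent groups)."""
    signals: Dict[str, bool] = {}
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop trailing comments
        field, sep, value = line.partition(":")
        if not sep or field.strip().lower() != "content-signal":
            continue
        for pair in value.split(","):
            key, eq, flag = pair.partition("=")
            flag = flag.strip().lower()
            if eq and flag in ("yes", "no"):
                signals[key.strip().lower()] = flag == "yes"
    return signals


def crawler_may_use(signals: Dict[str, bool], purpose: str,
                    default_if_unset: bool) -> bool:
    """An unset signal falls back to whatever default this crawler chose."""
    return signals.get(purpose, default_if_unset)


signals = parse_content_signals(ROBOTS_TXT)
# Two hypothetical crawlers read the same file but pick different defaults.
permissive = crawler_may_use(signals, "ai-input", default_if_unset=True)
cautious = crawler_may_use(signals, "ai-input", default_if_unset=False)
print(signals)               # {'search': True, 'ai-train': False}
print(permissive, cautious)  # True False
```

Cloudflare's policy text appears to state that an absent signal neither grants nor restricts permission. If so, the default is left to each crawler, and two compliant crawlers can reach opposite conclusions from the same file.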

4. Implications and Strategic Risks

The update could give publishers a temporary sense of security, potentially delaying more robust solutions. Economic implications include continued revenue loss from unauthorized AI scraping. Cyber risks include AI platforms exploiting loopholes in the updated protocol. Geopolitically, the lack of enforceability might prompt calls for regulatory intervention, affecting international tech relations.
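One concrete loophole: robots.txt rules are matched against the user-agent token a crawler reports about itself. A rule aimed at one named crawler therefore says nothing about an undeclared or renamed one. The minimal sketch below uses Python's `urllib.robotparser`, where `ExampleAIBot` and `UndeclaredFetcher` are hypothetical crawler names.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical policy: the publisher blocks the one AI crawler it knows by name.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text supplied directly rather than fetched over HTTP."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
url = "https://publisher.example/articles/story"

# The named crawler is blocked...
declared = parser.can_fetch("ExampleAIBot", url)
# ...but the same scraper reporting a different name falls through to the "*" group.
undeclared = parser.can_fetch("UndeclaredFetcher", url)

print(declared, undeclared)  # False True
```

Because the protocol has no way to verify who is asking, a block keyed on a name only holds for crawlers that announce that name.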

5. Recommendations and Outlook

- Encourage collaboration among publishers to develop a unified standard for AI crawler compliance.

- Advocate for regulatory frameworks that enforce AI platform adherence to publisher preferences.

- Scenario Projections:

  - Best Case: Industry-wide adoption of enforceable standards leads to a significant reduction in unauthorized content scraping.

  - Worst Case: AI platforms continue to bypass protocols, leading to increased unauthorized content use and publisher revenue loss.

  - Most Likely: Partial compliance by AI platforms, with ongoing challenges in enforcement and content protection.

6. Key Individuals and Entities

– Justin Wohl

– Eric Hochberger

– Cloudflare

– Google

– Reddit

– Fastly

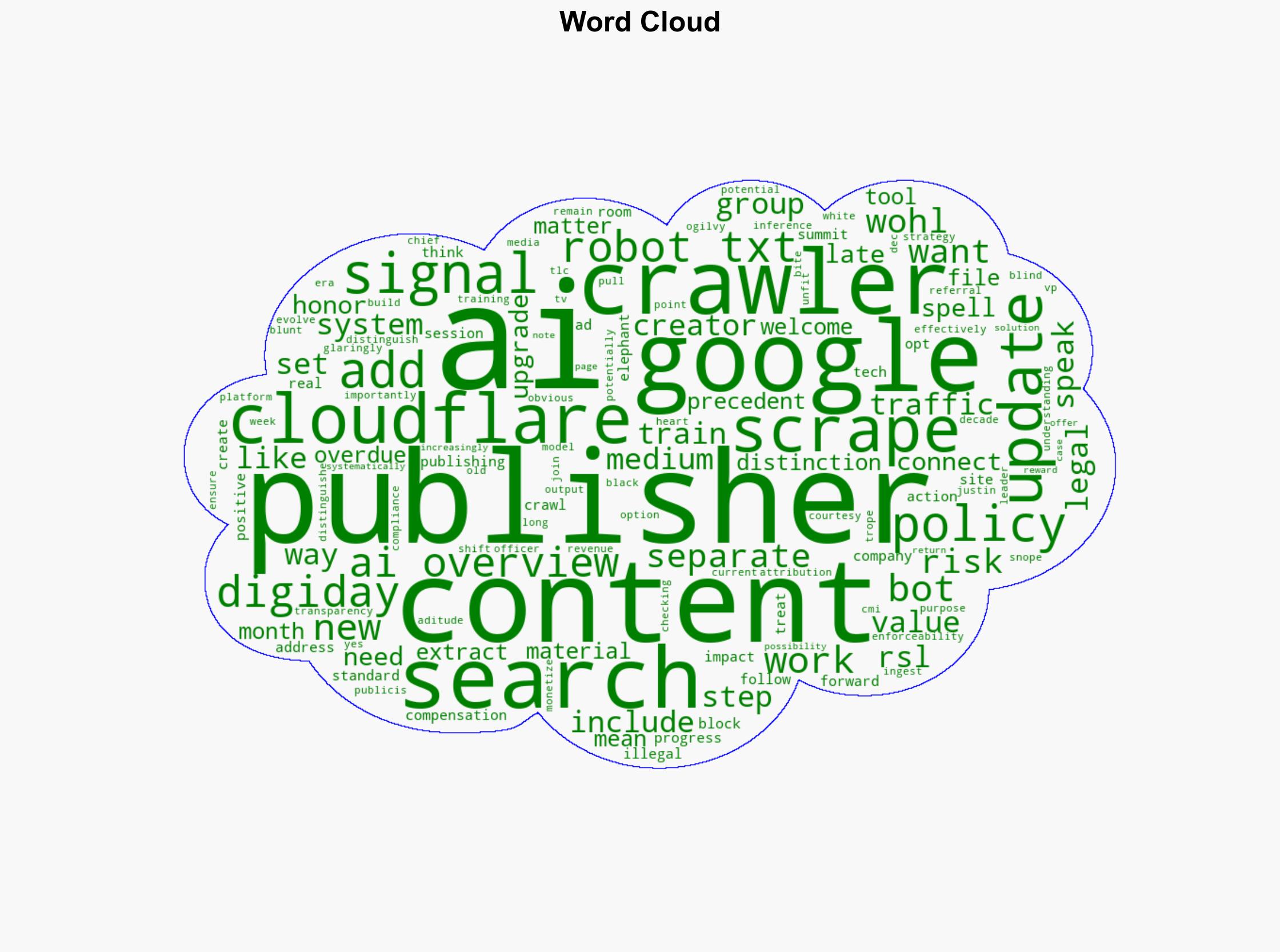

7. Thematic Tags

cybersecurity, digital rights management, AI compliance, content monetization, regulatory frameworks