Conservative activist sues Google over AI-generated statements – Al Jazeera English

Published on: 2025-10-22

Intelligence Report: Conservative Activist Sues Google over AI-Generated Statements – Al Jazeera English

1. BLUF (Bottom Line Up Front)

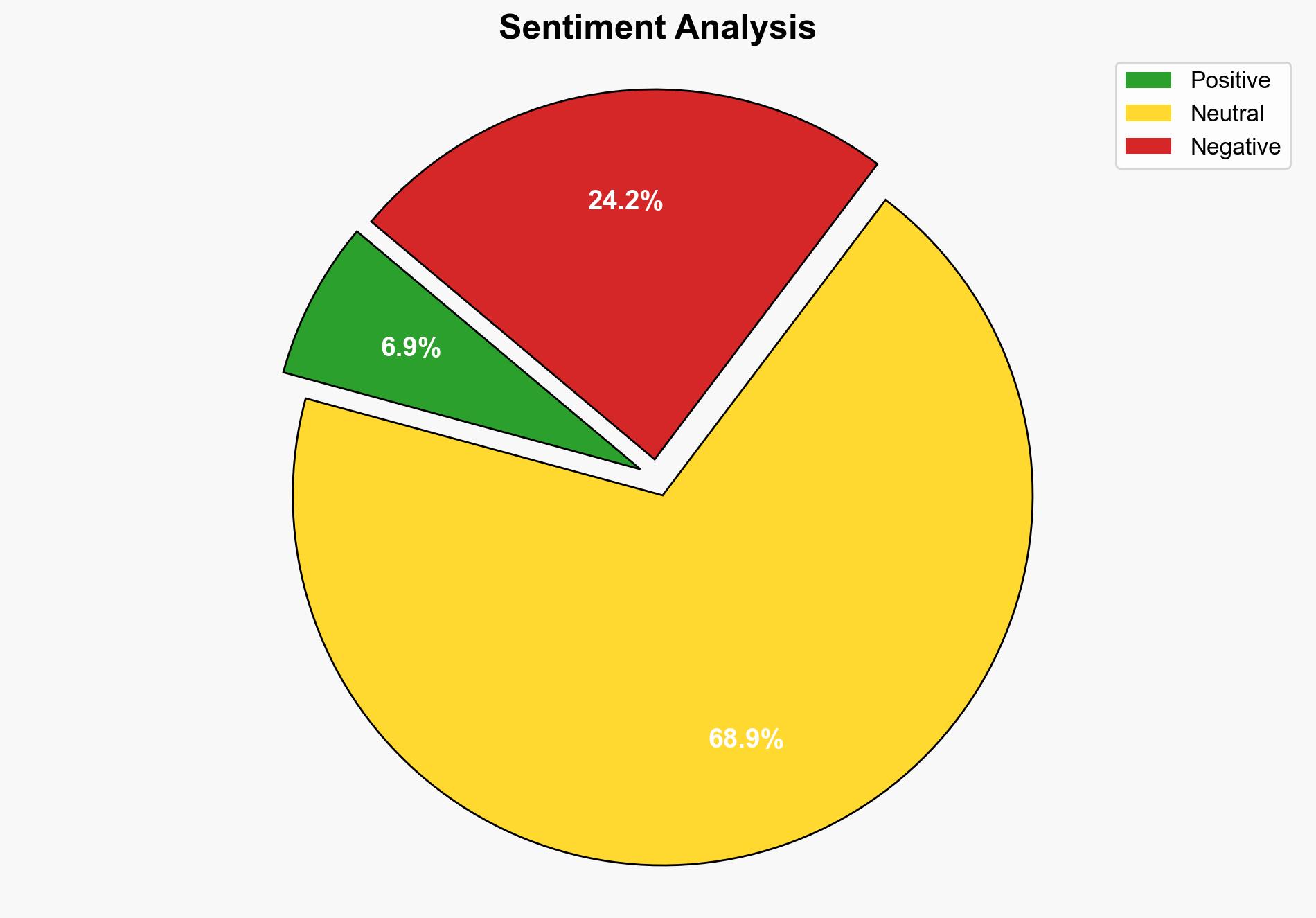

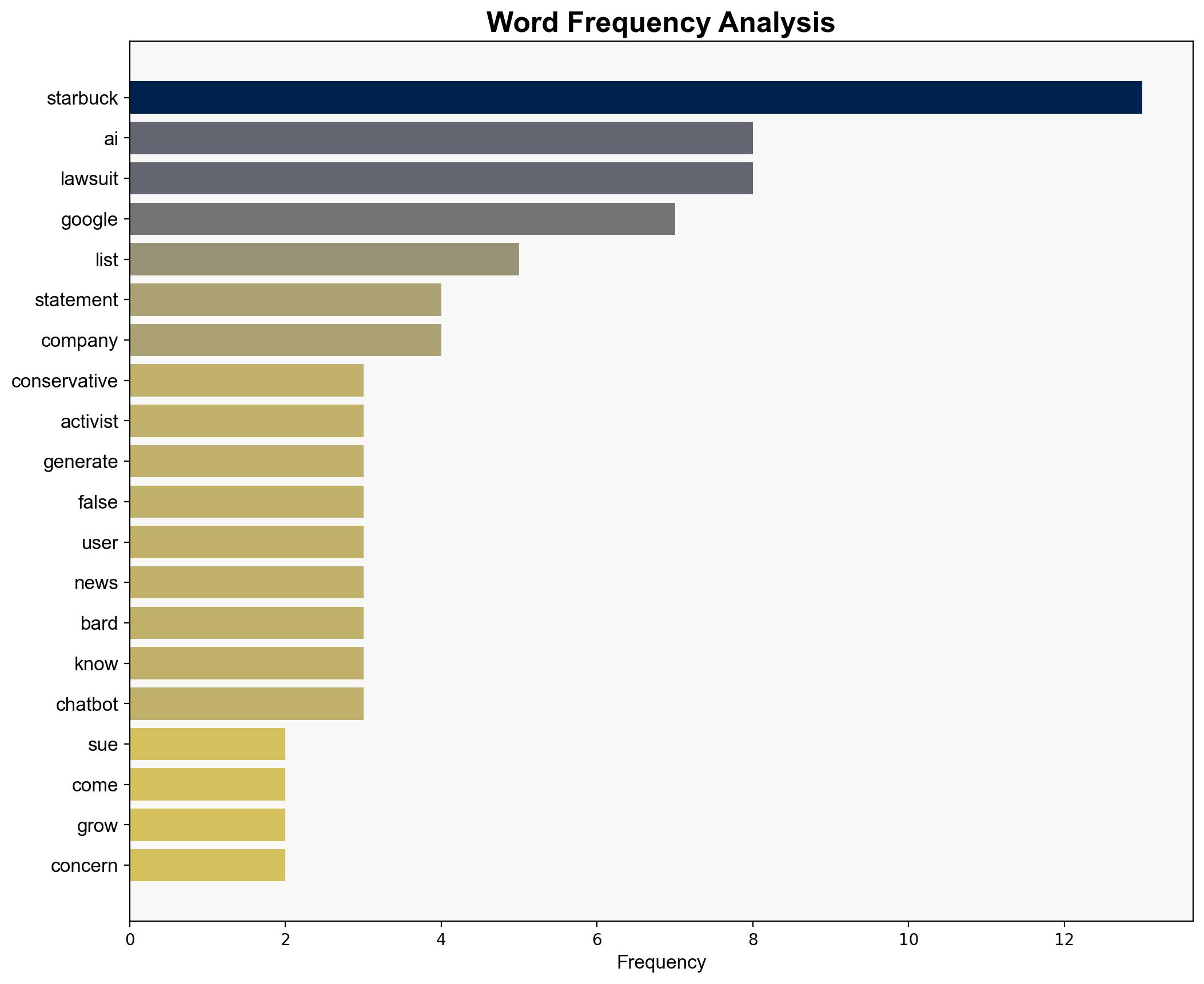

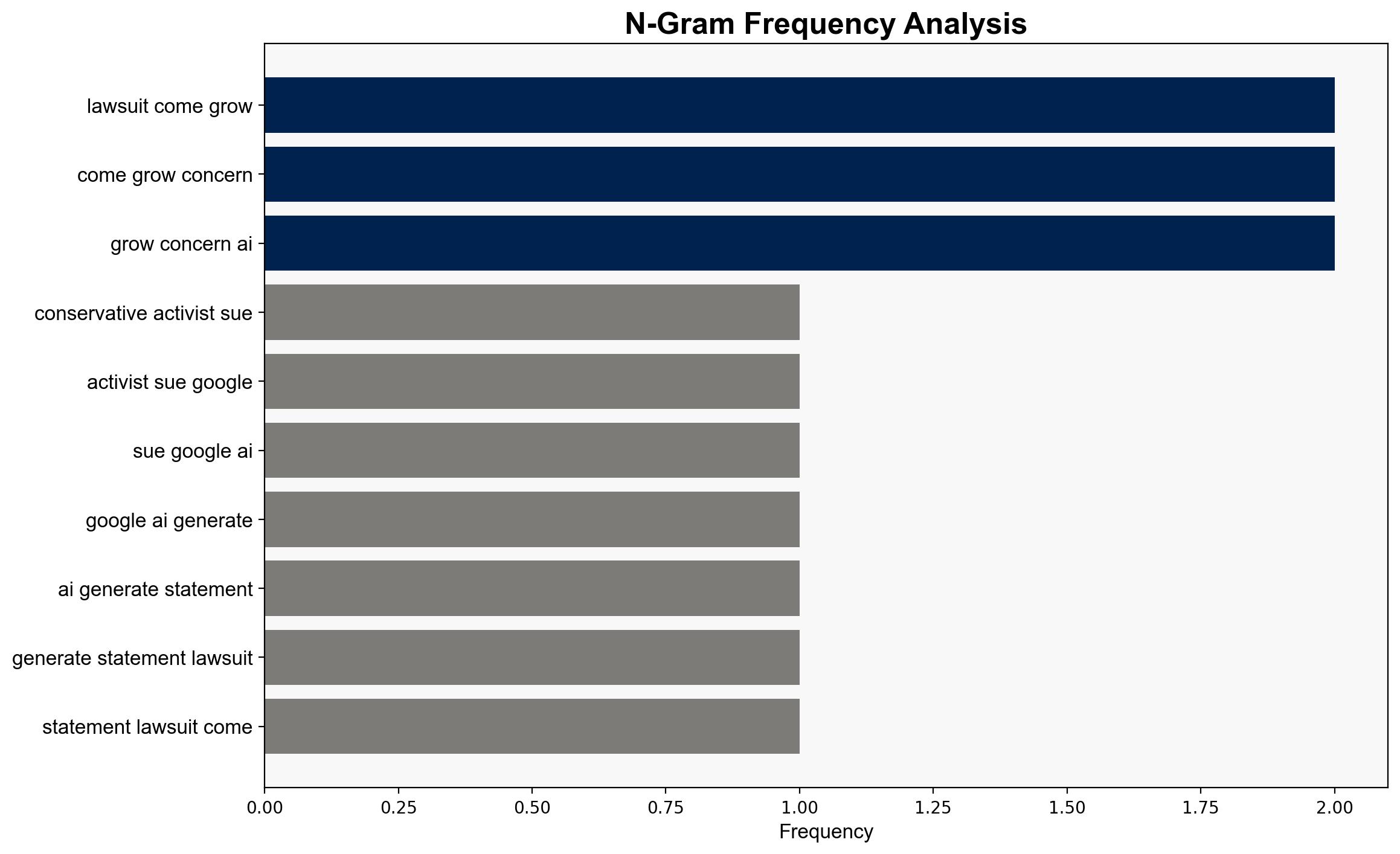

The lawsuit filed by conservative activist Robby Starbuck against Google highlights significant concerns about AI-generated misinformation and its potential to damage reputations and provoke threats. The best-supported hypothesis is that AI systems such as Google's Bard are prone to generating false information because of inherent technical limitations, posing reputational and legal risks. Confidence level: Moderate. Recommended action: Strengthen AI oversight and transparency to reduce misinformation risks.

2. Competing Hypotheses

1. **AI System Limitations**: Google's AI models, such as Bard, inherently struggle with accuracy, producing false and defamatory outputs because of technical limitations and insufficient training data.

2. **Intentional Manipulation**: External actors may exploit AI systems to deliberately spread misinformation, using creative prompts to generate harmful content.

Under ACH 2.0 (Analysis of Competing Hypotheses), the first hypothesis is better supported by the available evidence, including Google's acknowledgment of “hallucinations” in its AI models and its efforts to address them. The second hypothesis lacks direct evidence of manipulation in this case.

3. Key Assumptions and Red Flags

– **Assumptions**: It is assumed that Google’s AI operates without malicious intent and that inaccuracies are unintentional. It is also assumed that Starbuck’s claims are based on genuine AI outputs.

– **Red Flags**: The absence of detailed technical analysis of the AI’s errors and the potential for bias in Starbuck’s claims due to his political stance.

– **Blind Spots**: Lack of information on how AI models are trained and updated, and the potential for unseen external manipulation.

4. Implications and Strategic Risks

The lawsuit underscores the broader risk that AI-generated misinformation poses to public figures, along with the potential legal and reputational consequences for tech companies. Escalation could lead to increased regulatory scrutiny and demands for AI transparency. Economically, it could weaken investor confidence in AI-driven technologies. Psychologically, public trust in AI systems may erode, slowing adoption.

5. Recommendations and Outlook

- **Mitigate Risks**: Google should enhance transparency regarding AI operations and improve error correction mechanisms.

- **Exploit Opportunities**: Develop partnerships with independent auditors to validate AI outputs and rebuild trust.

- **Scenario Projections**:

  - **Best Case**: Improved AI accuracy and transparency lead to reduced misinformation and restored public trust.

  - **Worst Case**: Continued AI inaccuracies result in widespread misinformation, legal challenges, and regulatory crackdowns.

  - **Most Likely**: Incremental improvements in AI accuracy alongside ongoing legal and reputational challenges.

6. Key Individuals and Entities

– Robby Starbuck

– Google

– Richard Spencer

– Charlie Kirk

– Jeffrey Epstein

7. Thematic Tags

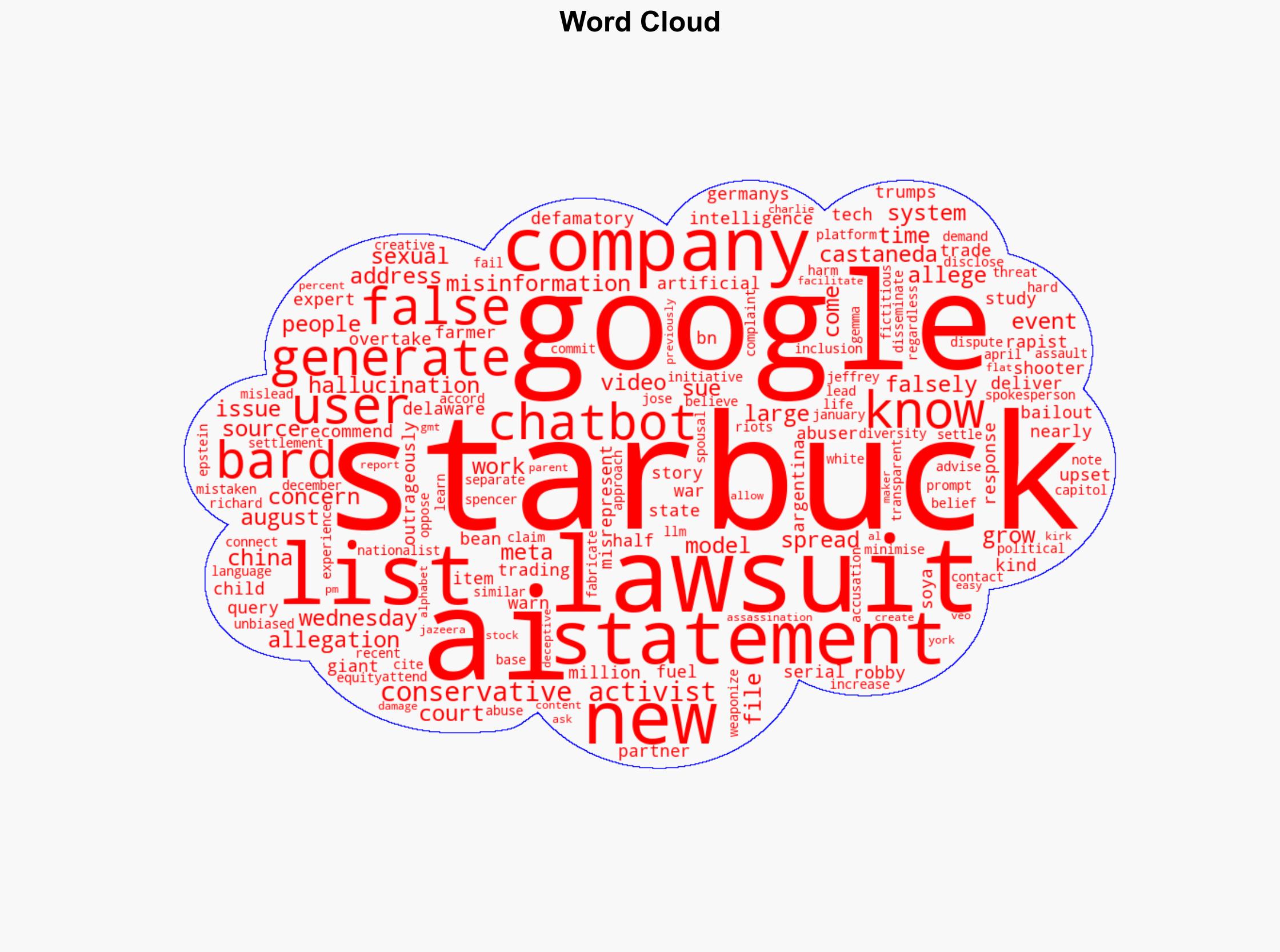

national security threats, cybersecurity, misinformation, AI ethics, legal challenges