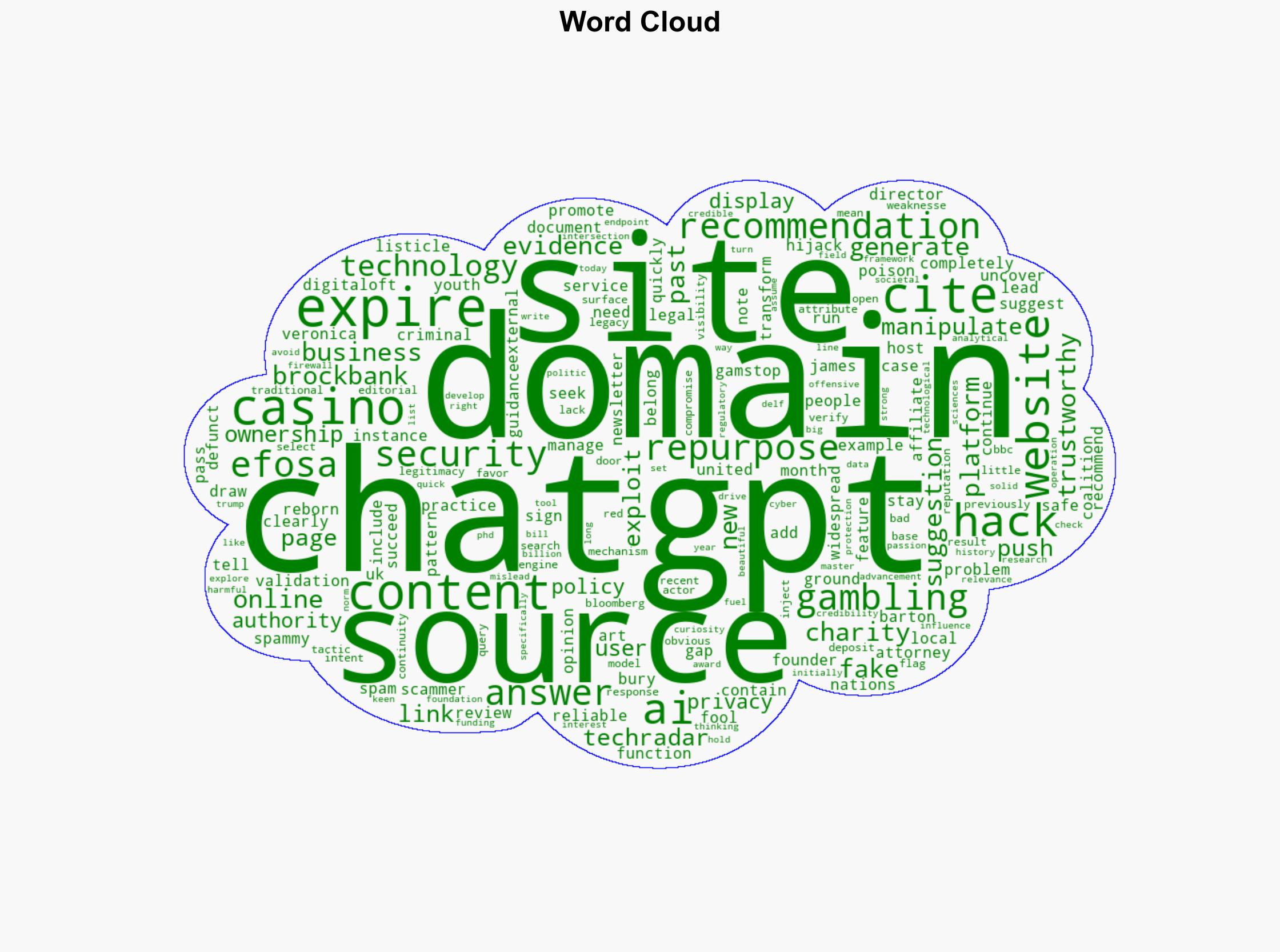

Criminals and scammers are using hacked websites and expired domain names to ‘poison’ ChatGPT with spammy recommendations – here’s how to stay safe – TechRadar

Published on: 2025-07-19

Intelligence Report: Criminals and Scammers Exploit Hacked Websites and Expired Domain Names to ‘Poison’ ChatGPT with Spammy Recommendations

1. BLUF (Bottom Line Up Front)

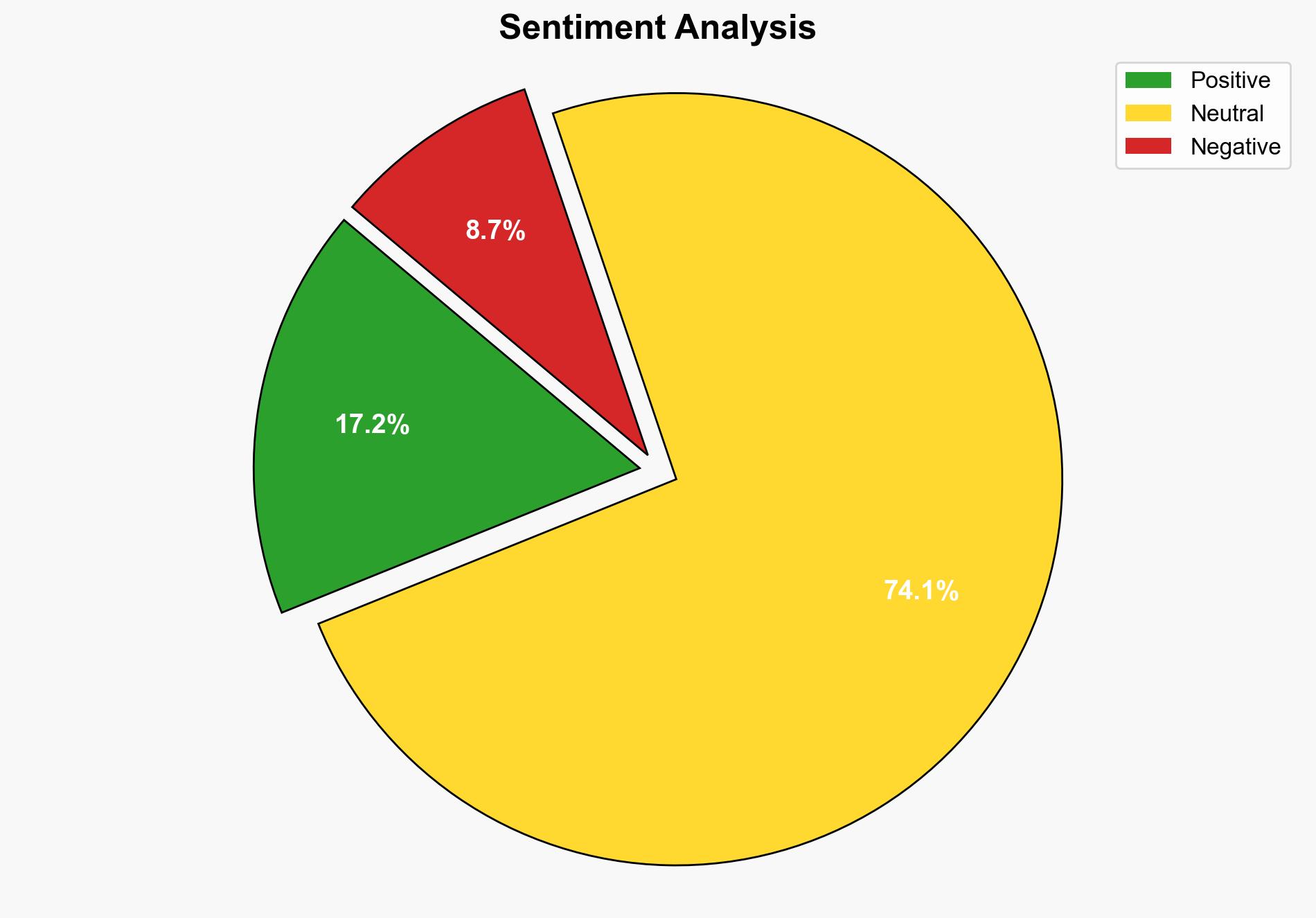

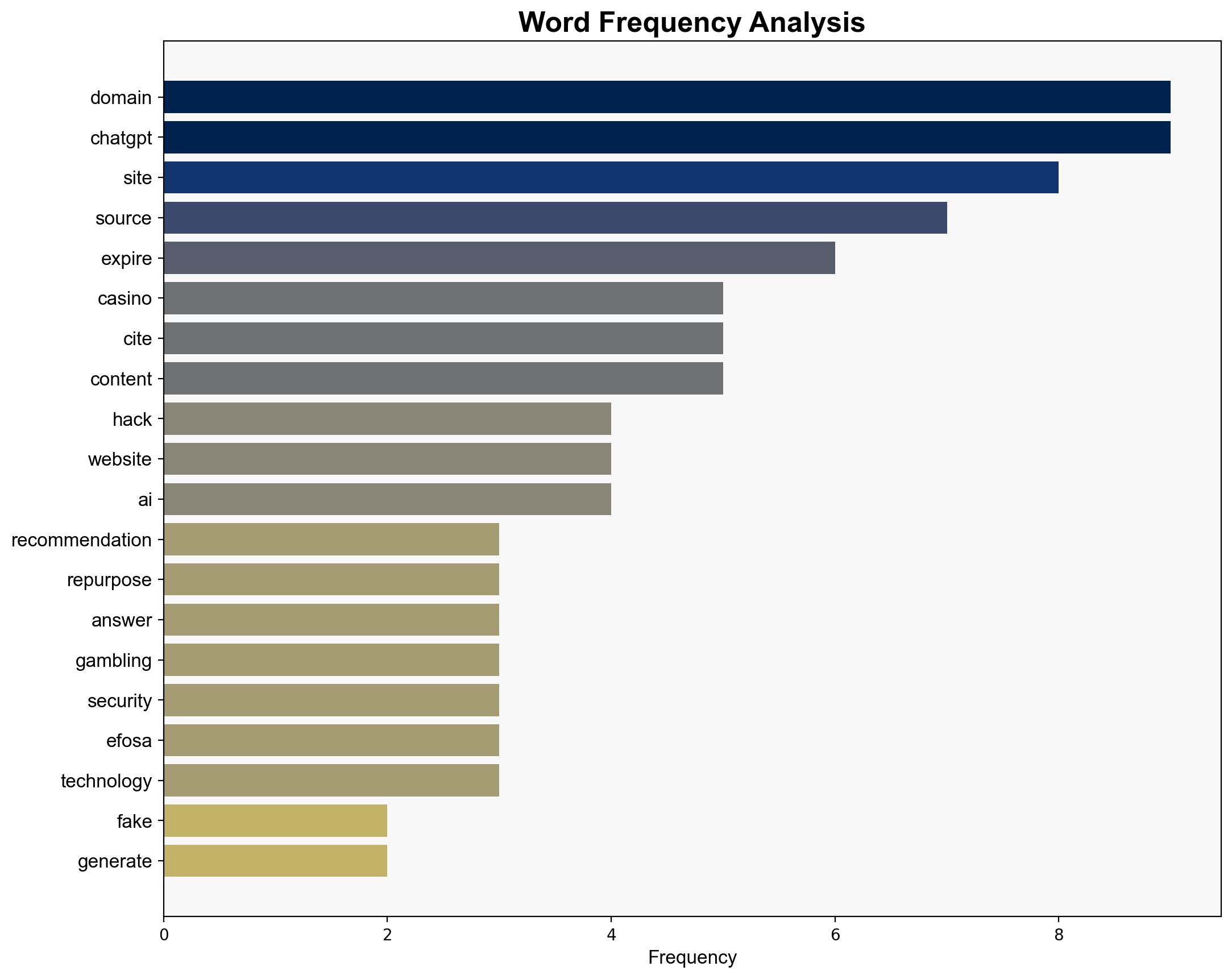

Criminals are using hacked websites and re-registered expired domain names to feed misleading, spammy recommendations into AI systems such as ChatGPT. The tactic undermines the reliability of AI-generated content and poses significant cybersecurity risks. Mitigating it requires stronger source validation in AI systems and better user awareness.

2. Detailed Analysis

The following structured analytic techniques have been applied to ensure methodological consistency:

Adversarial Threat Simulation

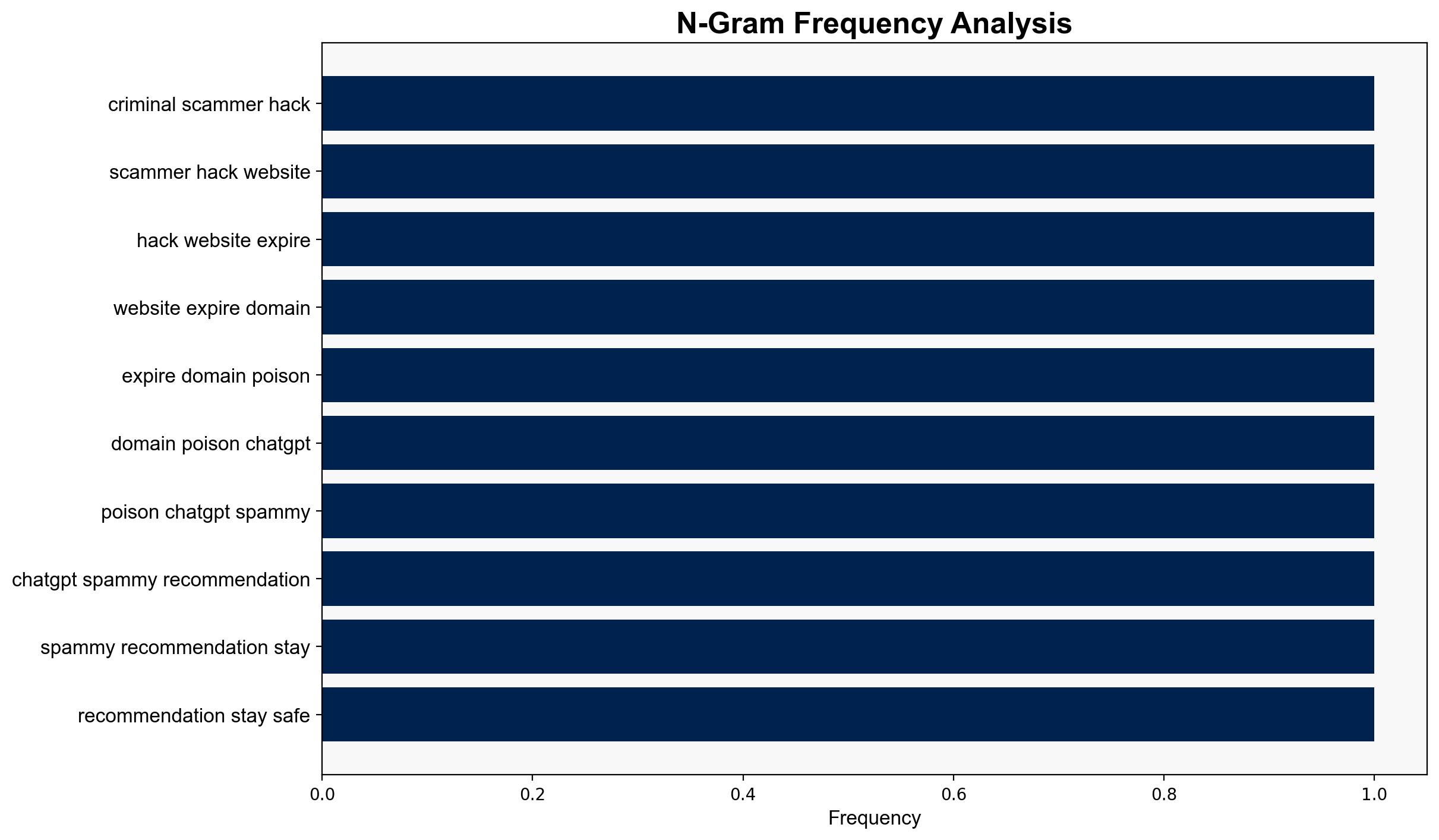

Cyber adversaries are repurposing expired domains, including those of defunct organizations, to manipulate AI outputs. Simulating this tactic helps anticipate where AI systems are vulnerable.

Indicators Development

Monitoring anomalies in AI-generated content and domain ownership changes can serve as early indicators of malicious activity.
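As one illustration of a domain-ownership indicator, the sketch below flags domains whose current registration started well after the domain was first observed, which suggests the name lapsed and was registered again. The `DomainRecord` type, its fields, the example domains, and the 30-day grace period are all assumptions made for this example; in practice the dates would have to come from registration data and crawl history.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class DomainRecord:
    """Hypothetical record; real dates would come from registration data and crawl logs."""
    name: str
    registered_on: date   # creation date of the current registration
    first_seen_on: date   # earliest date the domain was observed or cited


def is_likely_reregistered(record: DomainRecord, grace_days: int = 30) -> bool:
    """Return True when the current registration began well after first observation.

    A creation date later than the first sighting suggests the domain expired
    and was registered again, possibly by a different owner.
    """
    gap_days = (record.registered_on - record.first_seen_on).days
    return gap_days > grace_days


# Illustrative data only; these are not domains named in the report.
records = [
    DomainRecord("example-charity.org", date(2025, 3, 1), date(2012, 6, 15)),
    DomainRecord("example-news.com", date(2009, 1, 10), date(2009, 2, 1)),
]
flagged = [r.name for r in records if is_likely_reregistered(r)]
print(flagged)
```

A flag from this check is only an early indicator: legitimate owners also let domains lapse and renew them, so a flagged domain would still need review.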

Bayesian Scenario Modeling

Probabilistic models predict that without intervention, the frequency and sophistication of such attacks will increase, potentially affecting broader AI applications.
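The report does not describe its model, so the following is only a minimal sketch of how Bayesian updating could be applied here: it revises the probability that a cited source is compromised each time a warning signal is observed. The function name and every probability value are illustrative assumptions, not figures from the report.

```python
def posterior_compromised(prior: float,
                          p_signal_if_compromised: float,
                          p_signal_if_benign: float) -> float:
    """Apply Bayes' rule: P(compromised | signal observed)."""
    evidence = (p_signal_if_compromised * prior
                + p_signal_if_benign * (1.0 - prior))
    if evidence == 0.0:
        raise ValueError("signal has zero probability under both hypotheses")
    return p_signal_if_compromised * prior / evidence


# Assumed values: 5% of cited sources are compromised; a warning signal
# appears on 80% of compromised sources and 10% of benign ones.
belief = 0.05
for _ in range(2):
    # Two signals are applied in sequence, which treats them as
    # conditionally independent given the source's true state.
    belief = posterior_compromised(belief, 0.8, 0.1)

print(f"belief after two signals: {belief:.3f}")
```

With these assumed inputs a single signal leaves the source more likely benign than not, and only a second, independent signal pushes the belief past one half. This is one reason to combine several indicators rather than act on any single one.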

Narrative Pattern Analysis

Exploiting legacy domain reputations, adversaries craft narratives that appear credible, complicating threat detection and response.

3. Implications and Strategic Risks

The manipulation of AI systems through compromised sources presents a systemic vulnerability with potential cross-domain impacts, including misinformation dissemination and erosion of trust in AI technologies. This could lead to broader cybersecurity challenges, affecting both public and private sectors.

4. Recommendations and Outlook

- Enhance AI source validation protocols to ensure content credibility and integrity.

- Educate users on verifying the authenticity of AI-generated recommendations.

- Develop robust monitoring systems for detecting and responding to domain repurposing activities.

- Scenario Projections:

  - Best Case: Implementation of advanced validation mechanisms reduces the impact of such attacks.

  - Worst Case: Failure to address vulnerabilities leads to widespread misinformation and loss of trust in AI systems.

  - Most Likely: Incremental improvements in AI validation and user education mitigate some risks but do not eliminate them entirely.
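One way to picture the source-validation recommendation is a topic-drift check: compare what a domain used to publish with what it publishes now, and distrust sources whose subject matter has changed almost completely. The sketch below is a deliberately simple version under stated assumptions: the function names, the keyword heuristic, the 0.2 threshold, and the sample texts are all hypothetical, and a real system would need archived page content from some external source.

```python
import re


def keywords(text: str, min_len: int = 4) -> set[str]:
    """Lowercase alphabetic words of at least min_len characters."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if len(w) >= min_len}


def topic_overlap(archived_text: str, current_text: str) -> float:
    """Jaccard similarity between the keyword sets of two page snapshots."""
    old, new = keywords(archived_text), keywords(current_text)
    if not old or not new:
        return 0.0
    return len(old & new) / len(old | new)


def passes_validation(archived_text: str, current_text: str,
                      min_overlap: float = 0.2) -> bool:
    """Accept a source only if its current topic resembles its historical one.

    The threshold is an assumed value and would need tuning on real data.
    """
    return topic_overlap(archived_text, current_text) >= min_overlap


# Invented sample texts for illustration.
archived = "Community arts charity supporting local youth theatre programmes"
still_on_topic = "Our charity supports youth theatre and community arts workshops"
repurposed = "Best online casino bonus codes and betting offers"

print(passes_validation(archived, still_on_topic))
print(passes_validation(archived, repurposed))
```

Keyword overlap is a crude proxy, and a careful attacker could copy old content to defeat it, so a check like this would complement rather than replace the domain-ownership monitoring recommended above.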

5. Key Individuals and Entities

James Brockbank, Veronica Barton

6. Thematic Tags

cybersecurity, AI vulnerabilities, misinformation, domain exploitation