Cybercriminals Target Personal AI, Compromising Identity and Sensitive Data in New Malware Attack

Published on: 2026-02-19

AI-powered OSINT brief from verified open sources. Automated NLP signal extraction with human verification.

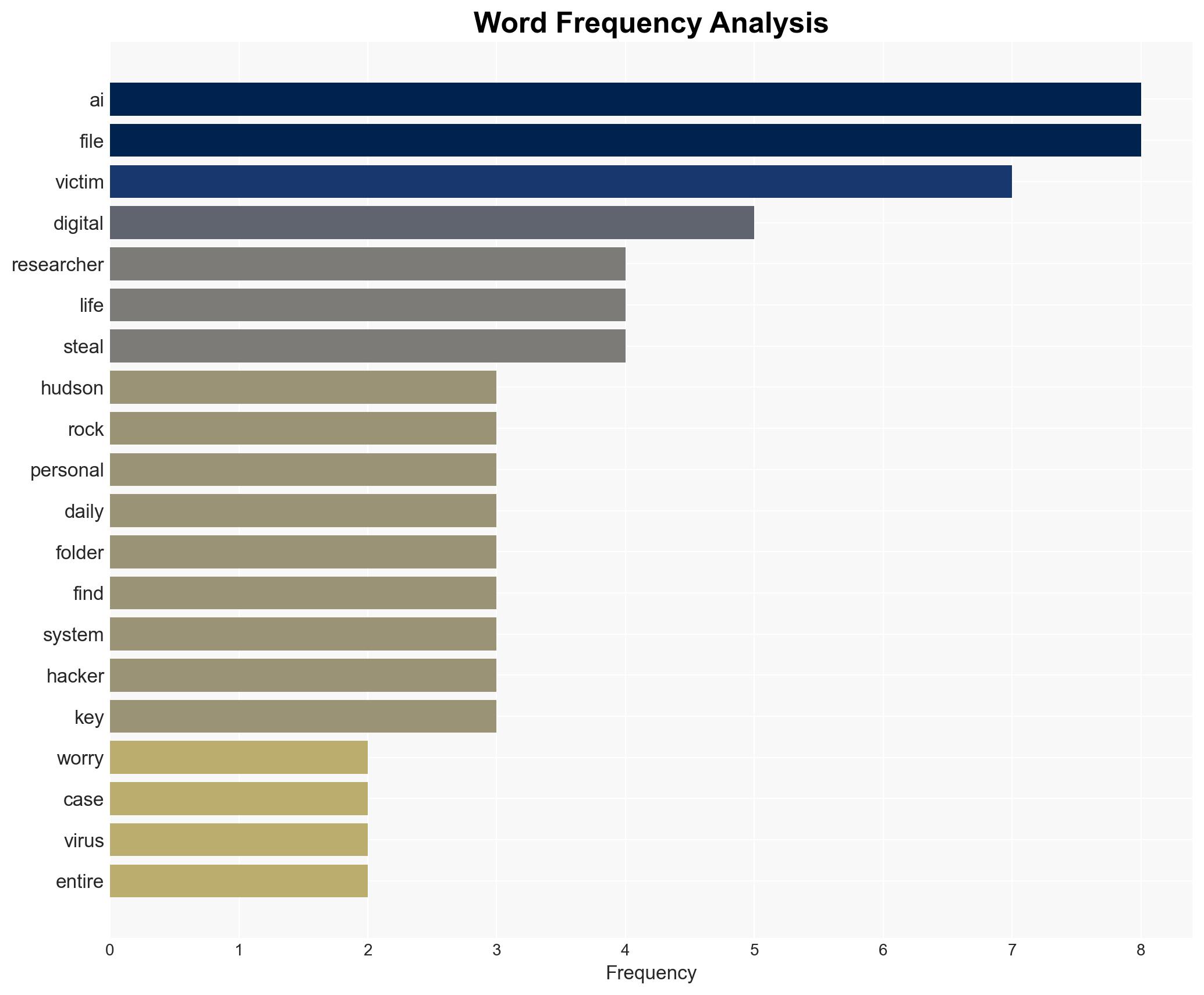

Intelligence Report: Infostealer Found Stealing OpenClaw AI Identity and Memory Files

1. BLUF (Bottom Line Up Front)

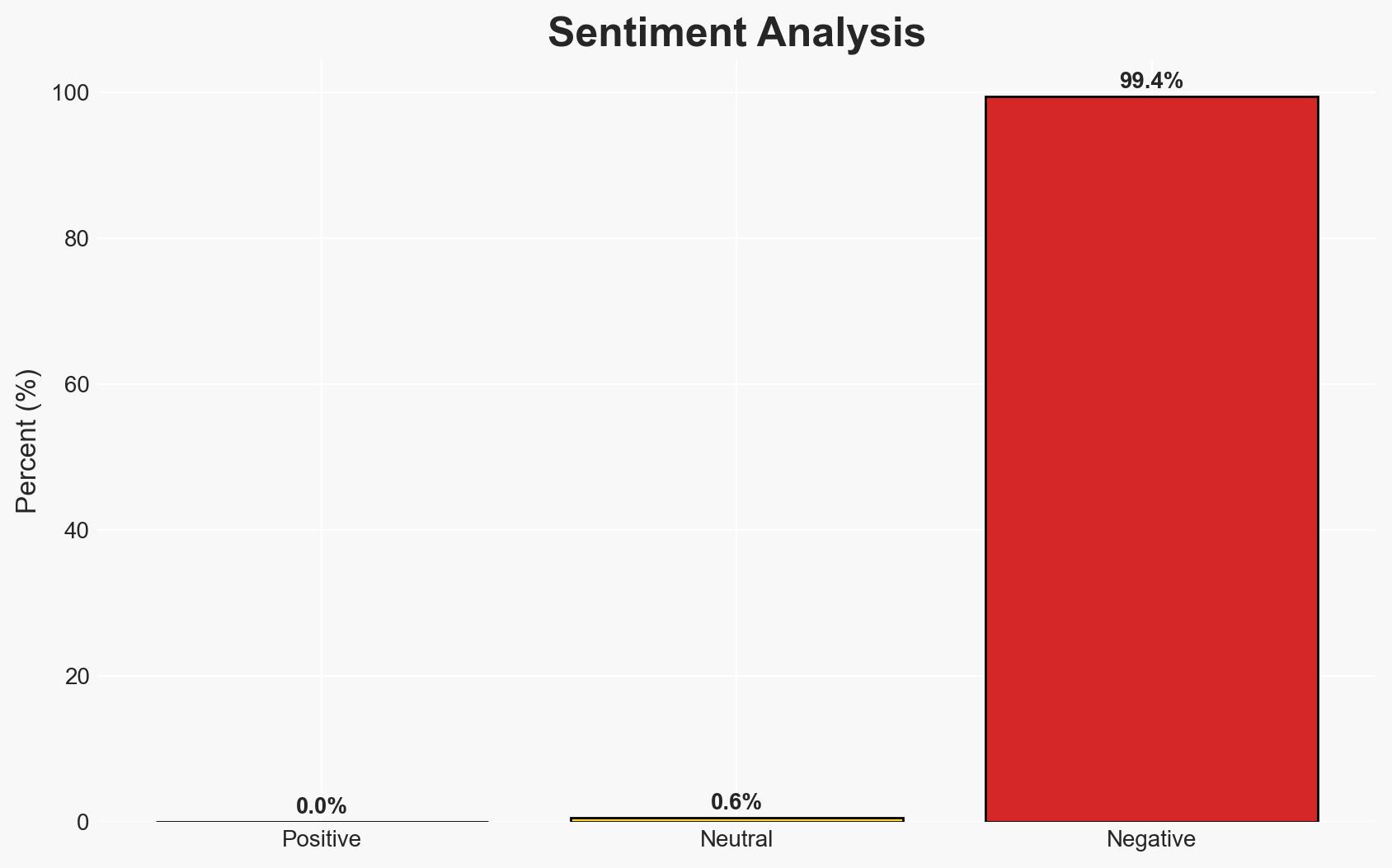

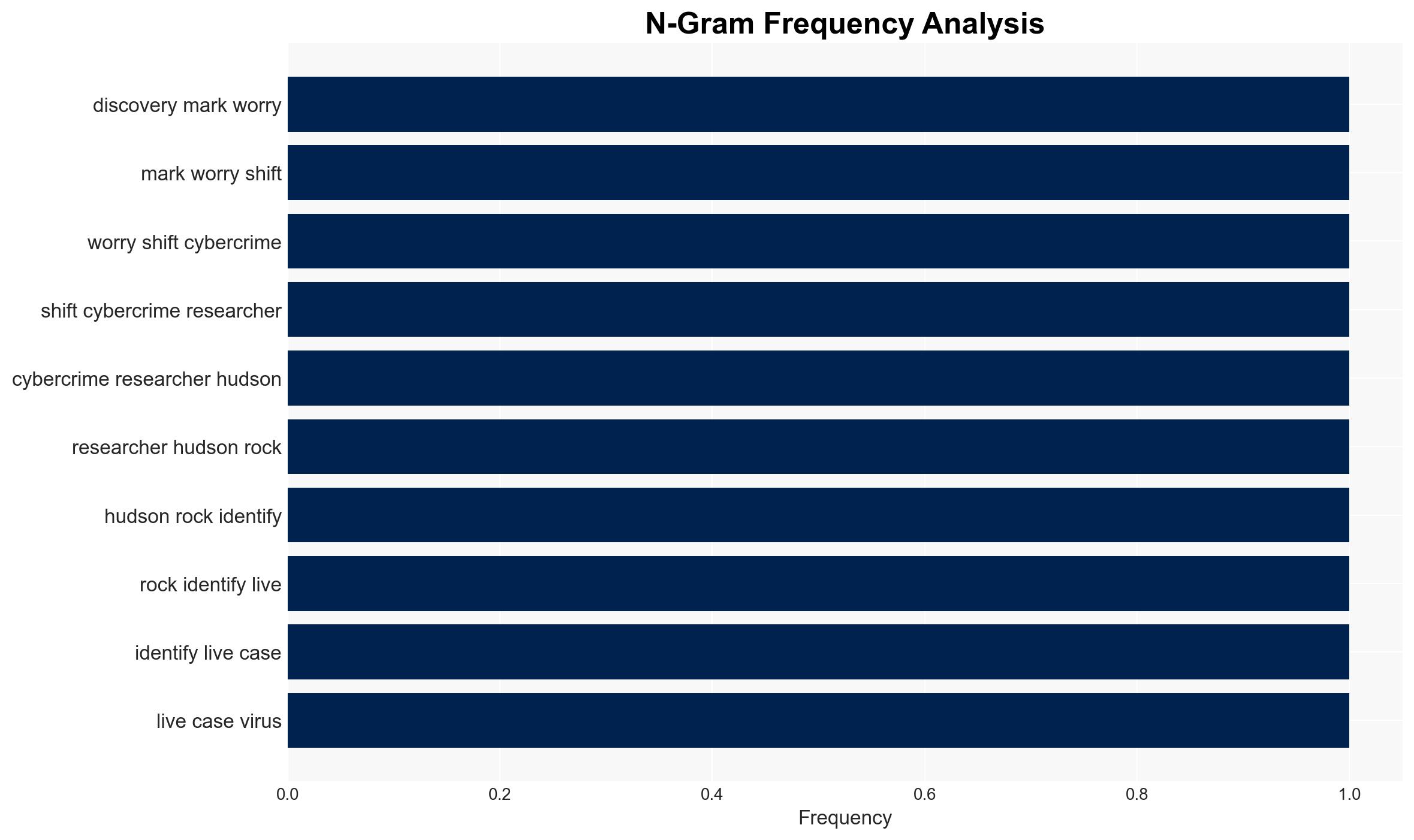

The discovery of malware targeting AI systems like OpenClaw represents a significant evolution in cybercrime, with personal AI assistants becoming prime targets for data theft. This incident highlights vulnerabilities in AI systems that store comprehensive personal data. The most likely hypothesis is that cybercriminals will increasingly exploit these vulnerabilities, posing a growing threat to digital identities. Overall confidence in this assessment is moderate.

2. Competing Hypotheses

- Hypothesis A: The incident was an opportunistic attack in which cybercriminals came across valuable AI data without specifically seeking it. Supporting evidence includes the malware's broad, non-targeted collection routine and the apparently accidental capture of the OpenClaw folder. However, the detailed and sensitive nature of the data extracted suggests some level of intent.

- Hypothesis B: The attack was a deliberate attempt to target AI systems, indicating a strategic shift in cybercriminal tactics. The detailed nature of the stolen data and the potential for significant exploitation support this hypothesis. Contradicting evidence includes the initial non-targeted nature of the malware.

- Assessment: Hypothesis B is currently better supported due to the specific and valuable nature of the data stolen, which suggests a growing trend of targeting AI systems. Indicators such as repeated attacks on AI systems or increased sophistication in malware could further support this hypothesis.
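The weighing of the two hypotheses above can be made explicit with a simple Bayesian update. The sketch below is illustrative only: the hypothesis labels, the prior of 0.5 each, and the likelihood values are assumptions chosen for the example and do not come from the report or from Hudson Rock. It shows how observing one of the indicators named in the assessment (a repeated attack on AI systems) would shift confidence toward Hypothesis B.

```python
from typing import Dict


def bayes_update(priors: Dict[str, float], likelihoods: Dict[str, float]) -> Dict[str, float]:
    """Return posterior probabilities from priors and P(evidence | hypothesis)."""
    unnormalized = {h: priors[h] * likelihoods[h] for h in priors}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("evidence has zero probability under every hypothesis")
    return {h: value / total for h, value in unnormalized.items()}


# Assumed starting point: no preference between the two hypotheses.
priors = {"A_opportunistic": 0.5, "B_deliberate": 0.5}

# Assumed likelihoods of seeing a repeated attack on AI systems
# under each hypothesis (hypothetical values, not measured data).
repeat_attack_likelihood = {"A_opportunistic": 0.2, "B_deliberate": 0.6}

posterior = bayes_update(priors, repeat_attack_likelihood)
for hypothesis, probability in posterior.items():
    print(f"{hypothesis}: {probability:.2f}")
```

With these assumed inputs, the update moves Hypothesis B from 0.50 to 0.75. The point is the direction and mechanics of the shift, not the specific values, which an analyst would need to justify from evidence.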

3. Key Assumptions and Red Flags

- Assumptions: AI systems will continue to store extensive personal data; cybercriminals have the capability to exploit AI vulnerabilities; the value of AI-stored data will increase over time.

- Information Gaps: The identity and motives of the attackers; the full extent of the data compromised across similar incidents; potential defensive measures in place for AI systems.

- Bias & Deception Risks: Potential confirmation bias in interpreting the sophistication of the attack; reliance on a single research source (Hudson Rock) could introduce source bias; possibility of attackers planting misleading data to obscure their true intent.

4. Implications and Strategic Risks

This development could lead to increased targeting of AI systems, influencing broader cybercrime strategies and necessitating enhanced security measures.

- Political / Geopolitical: Potential for state actors to exploit similar vulnerabilities for espionage or sabotage, escalating cyber tensions.

- Security / Counter-Terrorism: Increased risk of personal data exploitation could lead to identity theft and fraud, complicating law enforcement efforts.

- Cyber / Information Space: Growing focus on AI vulnerabilities could drive innovation in both offensive and defensive cyber capabilities.

- Economic / Social: Breaches of AI systems could undermine trust in digital services, impacting consumer behavior and economic stability.

5. Recommendations and Outlook

- Immediate Actions (0–30 days): Enhance monitoring of AI system vulnerabilities; issue alerts to users and organizations about potential risks; collaborate with cybersecurity firms to develop rapid response strategies.

- Medium-Term Posture (1–12 months): Invest in AI security research and development; establish partnerships with tech companies to improve AI resilience; develop regulatory frameworks for AI data protection.

- Scenario Outlook:

  - Best Case: Rapid development of security measures mitigates risks, restoring trust in AI systems.

  - Worst Case: Widespread exploitation of AI vulnerabilities leads to significant economic and social disruption.

  - Most-Likely: Incremental improvements in AI security reduce but do not eliminate risks, with ongoing incidents prompting continued vigilance.

6. Key Individuals and Entities

- Hudson Rock (research entity)

- OpenClaw (AI system)

- Attackers (not clearly identifiable from open sources)

7. Thematic Tags

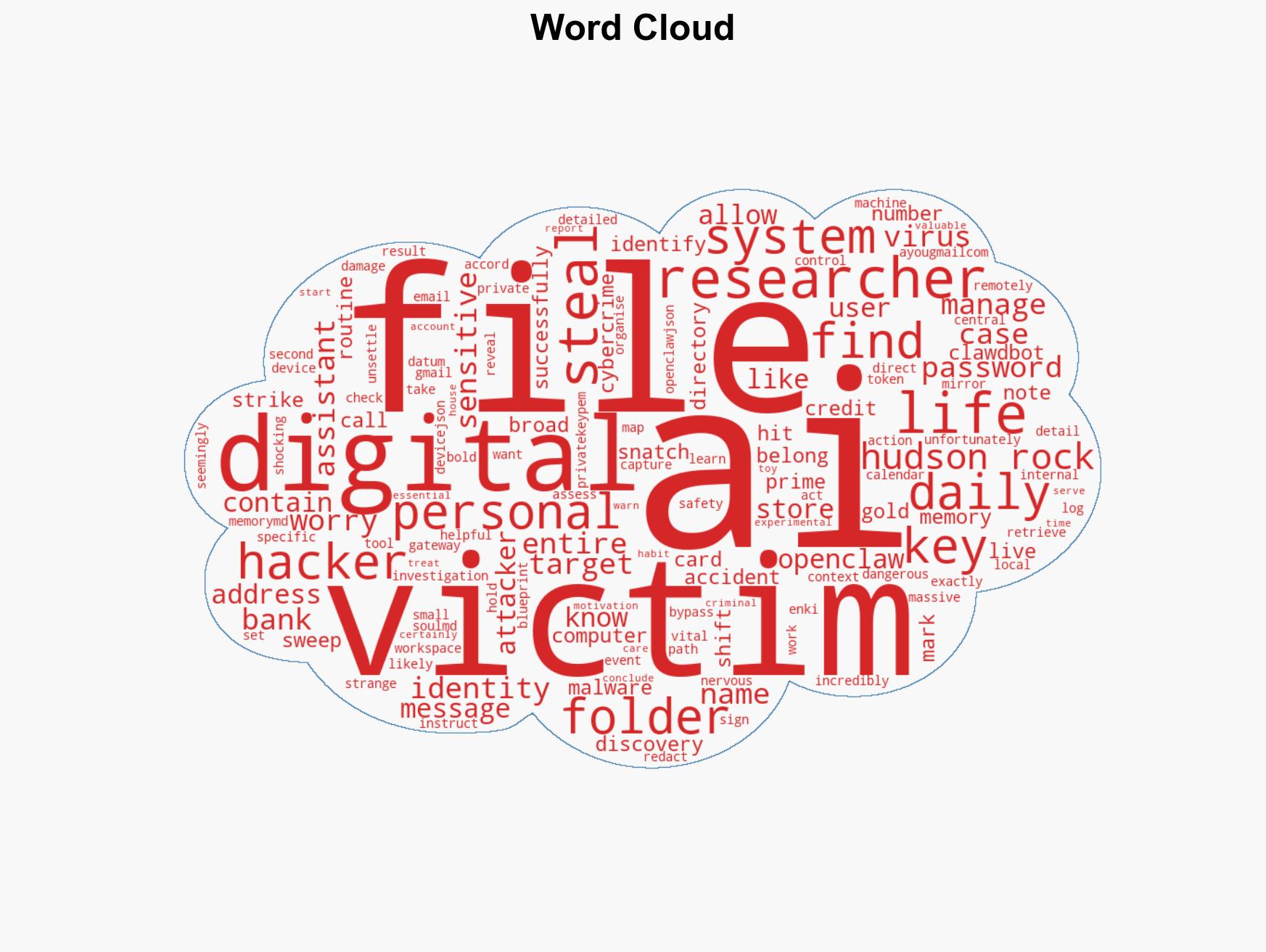

cybersecurity, AI vulnerabilities, data theft, digital identity, cybercrime evolution, personal data protection, malware

Structured Analytic Techniques Applied

- Adversarial Threat Simulation: Model and simulate actions of cyber adversaries to anticipate vulnerabilities and improve resilience.

- Indicators Development: Detect and monitor behavioral or technical anomalies across systems for early threat detection.

- Bayesian Scenario Modeling: Quantify uncertainty and predict cyberattack pathways using probabilistic inference.
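As a rough illustration of the Indicators Development technique listed above, the sketch below flags file-access events in which a process outside an allowlist reads from a watched AI assistant data directory. Everything in it is hypothetical: the `FileAccessEvent` type, the process names, and the `/home/user/.example-assistant` path are placeholders, not actual OpenClaw locations or real telemetry fields.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath
from typing import Iterable, List, Set


@dataclass(frozen=True)
class FileAccessEvent:
    """Hypothetical telemetry record: which process touched which file."""
    process: str
    path: str


def flag_suspicious(
    events: Iterable[FileAccessEvent],
    watched_dirs: Iterable[str],
    allowed_processes: Set[str],
) -> List[FileAccessEvent]:
    """Return events where a non-allowlisted process accessed a watched directory."""
    watched = [PurePosixPath(d) for d in watched_dirs]
    flagged = []
    for event in events:
        path = PurePosixPath(event.path)
        # True if the path is a watched directory or lies anywhere beneath one.
        in_watched = any(d == path or d in path.parents for d in watched)
        if in_watched and event.process not in allowed_processes:
            flagged.append(event)
    return flagged


# Placeholder events and paths for illustration only.
events = [
    FileAccessEvent("assistant-daemon", "/home/user/.example-assistant/memory.json"),
    FileAccessEvent("unknown.bin", "/home/user/.example-assistant/identity.json"),
    FileAccessEvent("unknown.bin", "/home/user/Documents/notes.txt"),
]

flagged = flag_suspicious(
    events,
    watched_dirs=["/home/user/.example-assistant"],
    allowed_processes={"assistant-daemon"},
)
for event in flagged:
    print(f"ALERT: {event.process} accessed {event.path}")
```

An allowlist is used here because a broad collection routine of the kind described in this brief would not match a known-bad signature, but would still show up as an unexpected process reading assistant data.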
