Faculty Lead AI Usage Conversations on Campus – Inside Higher Ed

Published on: 2025-11-11

AI-powered OSINT brief from verified open sources. Automated NLP signal extraction with human verification.

Intelligence Report: Faculty Lead AI Usage Conversations on Campus – Inside Higher Ed

1. BLUF (Bottom Line Up Front)

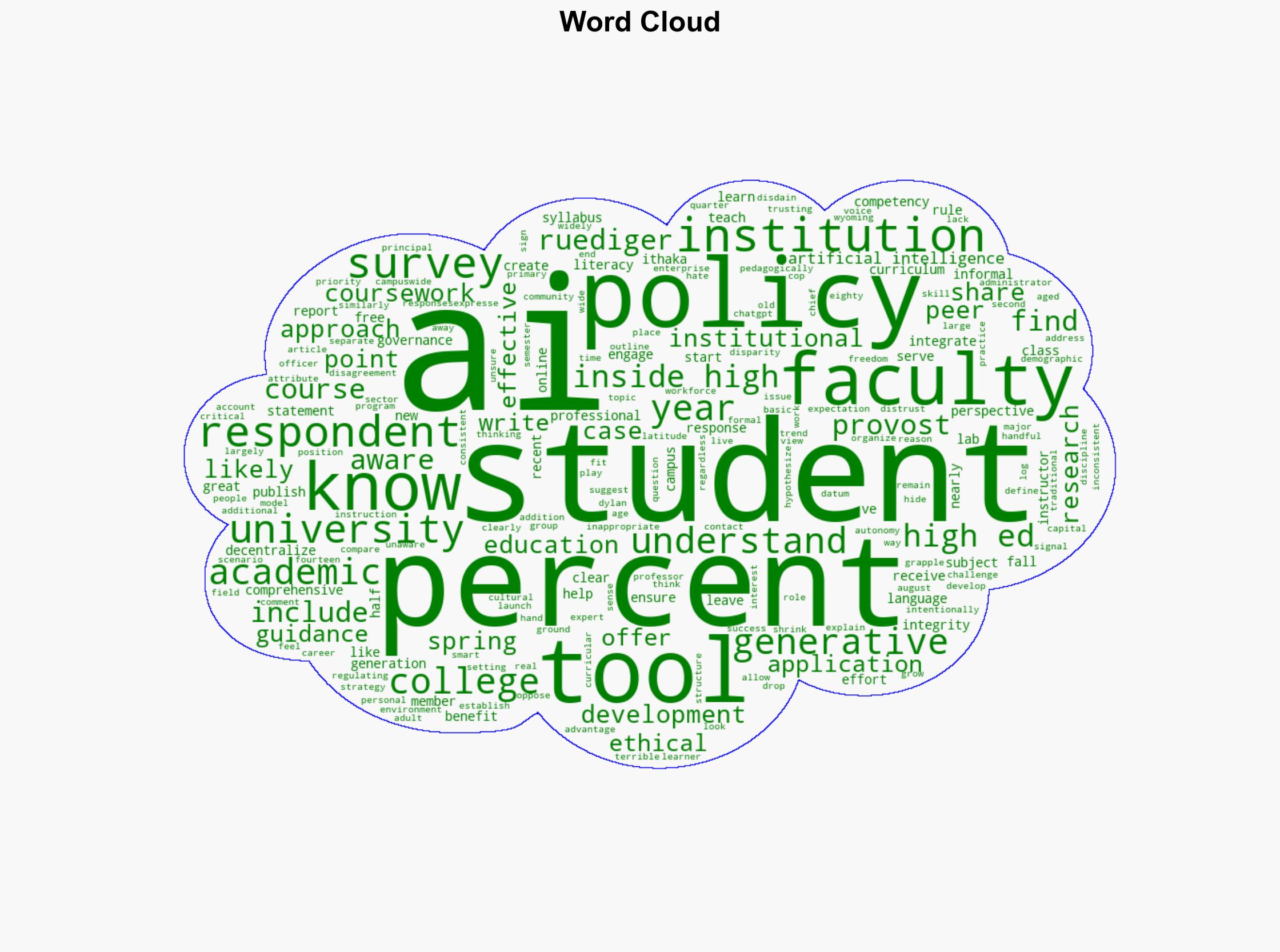

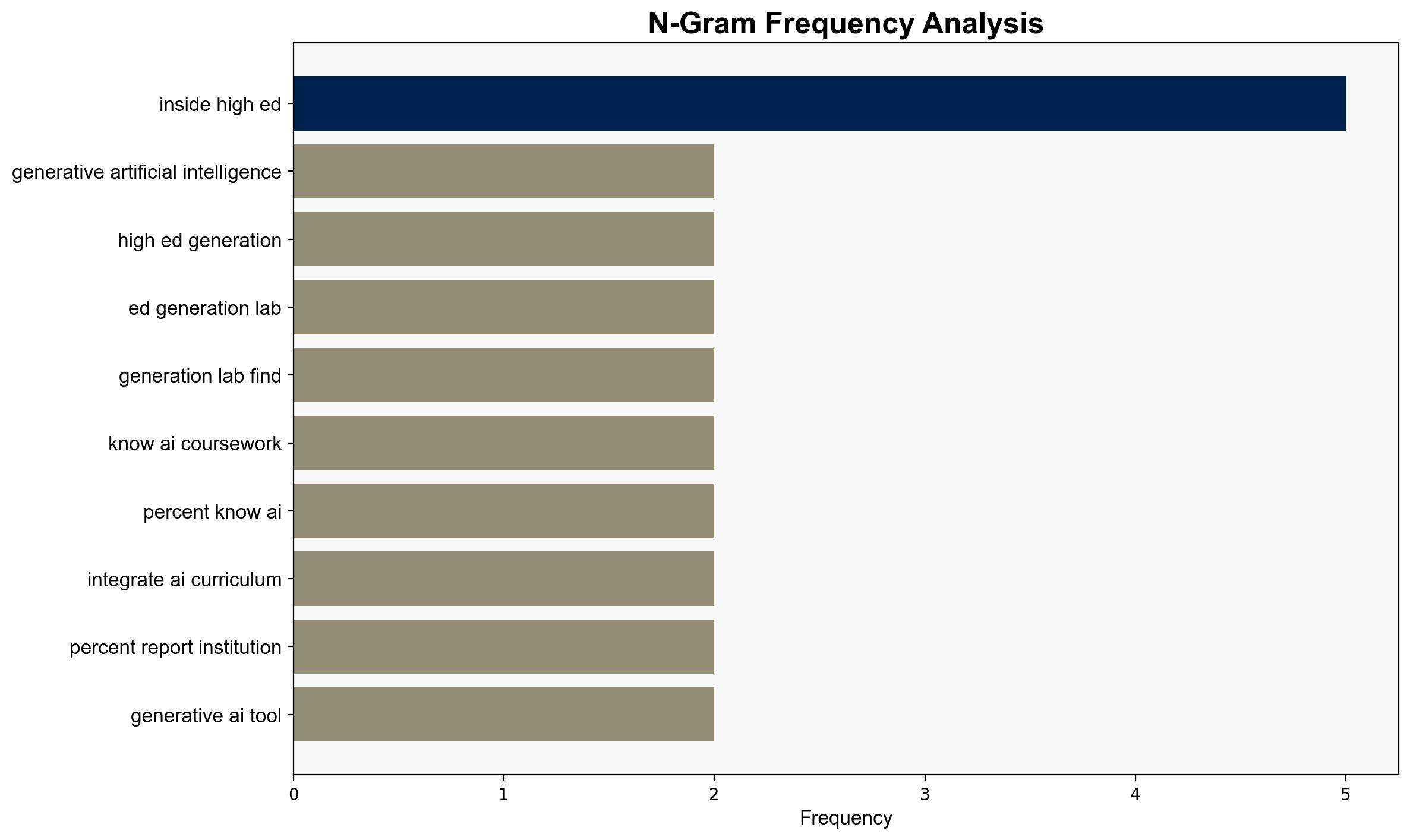

There is a significant gap in AI understanding and in AI policy implementation across higher education institutions, and faculty play a pivotal role in shaping how AI is used. The best-supported hypothesis is that decentralized AI policy development, which empowers faculty, integrates AI into curricula more effectively. Confidence Level: Moderate. Recommended action: foster faculty-led AI initiatives while developing a more cohesive institutional strategy that ensures consistent AI literacy and ethical standards.

2. Competing Hypotheses

Hypothesis 1: Decentralized AI policy development, allowing faculty autonomy, leads to more effective integration of AI into curricula and better prepares students for AI-related challenges.

Hypothesis 2: Centralized AI policy development is necessary to ensure consistent AI literacy and ethical standards across institutions, as decentralized approaches may lead to significant disparities in AI understanding among students.

The evidence better supports Hypothesis 1: faculty autonomy aligns with pedagogical flexibility and subject expertise, which can make AI integration more relevant and effective. However, without a centralized policy, inconsistencies and gaps in AI literacy could emerge.

3. Key Assumptions and Red Flags

Assumptions: Faculty have the expertise and resources needed to integrate AI effectively into their curricula. Students will engage with AI tools if those tools are adequately introduced.

Red Flags: Varying faculty approaches could produce significant disparities in AI understanding among students. The absence of comprehensive institutional policies could lead to ethical and academic integrity issues.

4. Implications and Strategic Risks

The decentralized approach could lead to uneven AI literacy, disadvantaging students whose instructors are less proactive about AI. This could produce graduates ill-prepared for AI-driven industries and, in turn, weaken economic competitiveness. Additionally, inconsistent AI policies could expose institutions to cyber and informational risks, because students may misuse AI tools in the absence of clear guidelines.

5. Recommendations and Outlook

- Encourage faculty-led AI initiatives while establishing a baseline institutional AI policy to ensure consistency in AI literacy and ethical standards.

- Implement faculty development programs to enhance AI teaching capabilities.

- Best-case scenario: Faculty autonomy is harmonized with institutional guidelines, producing comprehensive AI literacy and consistent ethical standards.

- Worst-case scenario: Disparities in AI understanding widen, leading to academic integrity issues and workforce unpreparedness.

- Most-likely scenario: AI integration improves gradually, while achieving uniformity across institutions remains an ongoing challenge.

6. Key Individuals and Entities

Dylan Ruediger (Principal Researcher, Ithaka)

7. Thematic Tags

Cybersecurity, Higher Education, AI Policy, Faculty Autonomy, Academic Integrity

Structured Analytic Techniques Applied

- Adversarial Threat Simulation: Model and simulate actions of cyber adversaries to anticipate vulnerabilities and improve resilience.

- Indicators Development: Detect and monitor behavioral or technical anomalies across systems for early threat detection.

- Bayesian Scenario Modeling: Forecast futures under uncertainty via probabilistic logic.
