Gemini Can Now Tell You If An Image Was Made With AI – BGR

Published on: 2025-11-20

AI-powered OSINT brief from verified open sources. Automated NLP signal extraction with human verification.

Intelligence Report:

1. BLUF (Bottom Line Up Front)

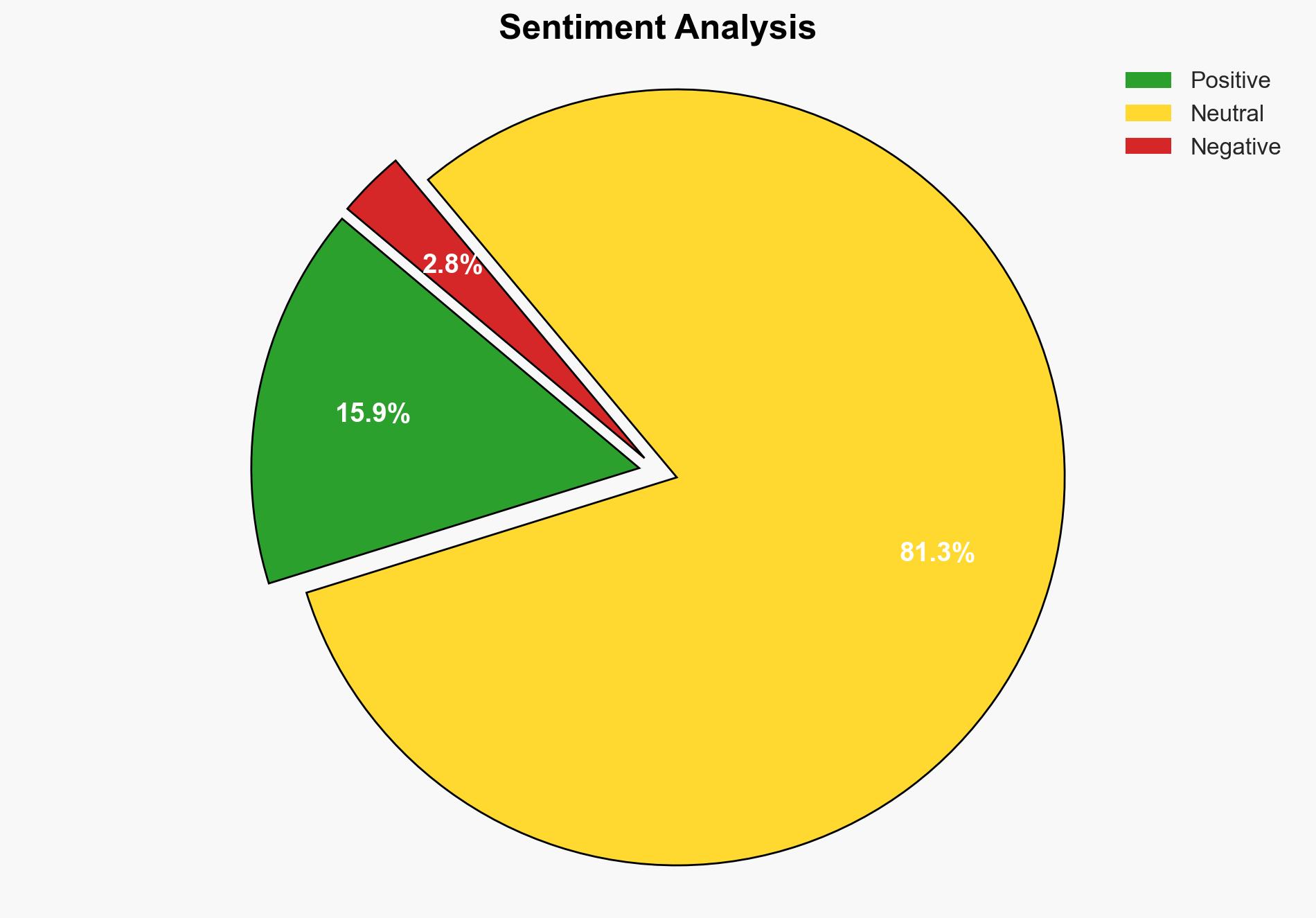

With moderate confidence, the best-supported hypothesis is that Gemini's new ability to detect AI-generated images will improve digital content authenticity and security, while also creating privacy and misuse risks. Recommended actions include monitoring the technology's adoption and developing regulatory frameworks to mitigate misuse.

2. Competing Hypotheses

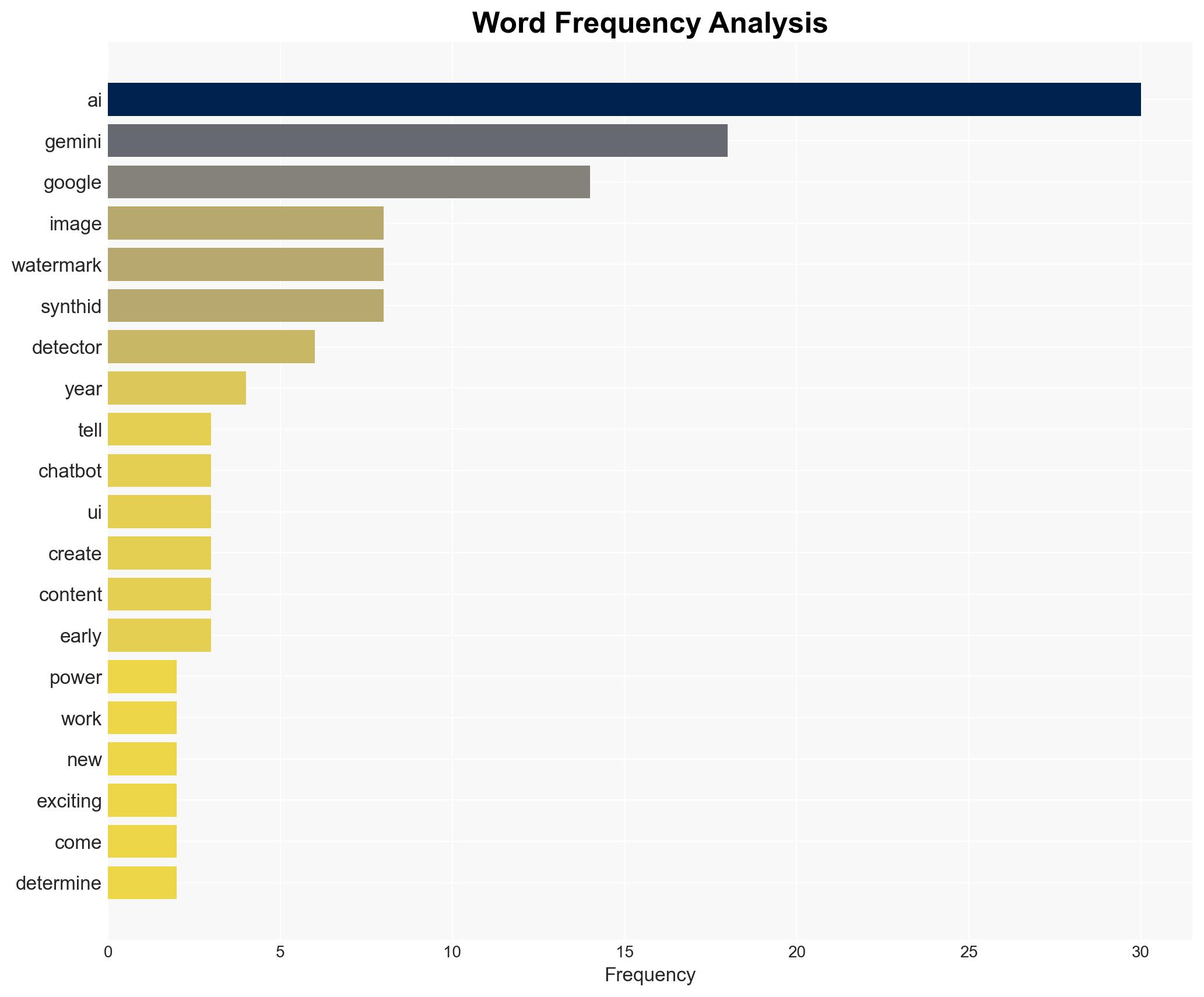

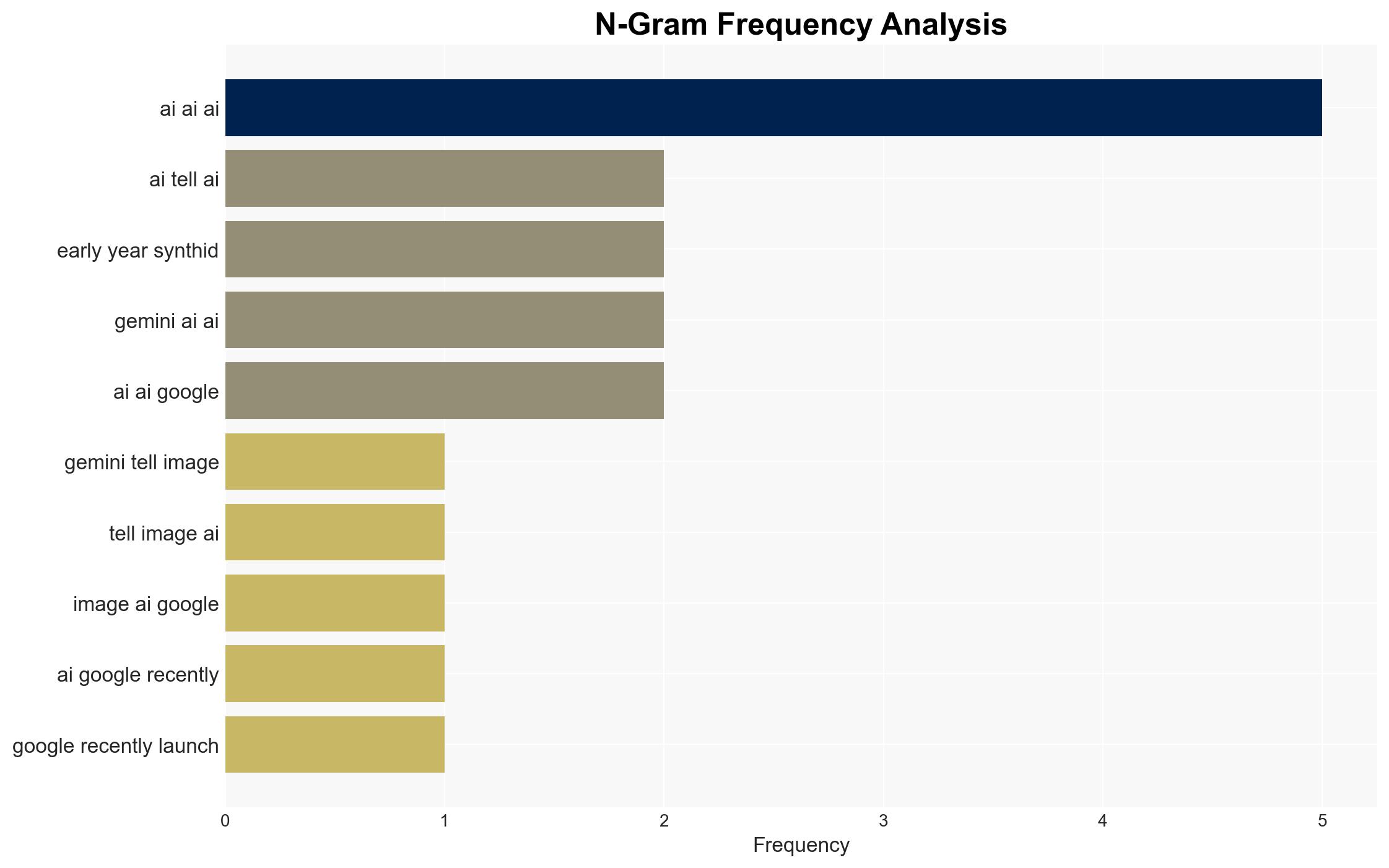

Hypothesis 1: Google’s Gemini AI will significantly enhance the ability to authenticate digital content, reducing misinformation and enhancing trust in digital media.

Hypothesis 2: The introduction of Gemini AI’s detection capabilities will lead to new privacy concerns and potential misuse by malicious actors seeking to circumvent detection.

Hypothesis 1 is judged more likely because demand for tools that verify digital content authenticity is growing as misinformation rises. However, Hypothesis 2 remains plausible given the historical pattern of new technology being exploited for malicious purposes.

3. Key Assumptions and Red Flags

Assumptions: The technology will be widely adopted and effectively integrated into existing platforms, and it will remain robust against circumvention attempts.

Red Flags: The potential for rapid development of countermeasures by adversaries to bypass detection. Limited transparency from Google regarding the technology’s limitations and potential biases.

4. Implications and Strategic Risks

Implications: The widespread adoption of Gemini AI could lead to a reduction in AI-generated misinformation, enhancing digital trust. However, it could also trigger a technological arms race between detection and evasion techniques.

Strategic Risks: Potential escalation in cyber warfare tactics as adversaries develop more sophisticated methods to bypass detection. Economic risks include potential impacts on industries reliant on AI-generated content, such as digital marketing and entertainment.

5. Recommendations and Outlook

- Monitor the adoption and effectiveness of Gemini AI in various sectors.

- Develop regulatory frameworks to ensure ethical use and prevent misuse.

- Encourage collaboration between tech companies and governments to share best practices and detection methodologies.

- Best-case scenario: Gemini AI becomes a standard tool for verifying digital content, significantly reducing misinformation.

- Worst-case scenario: Malicious actors develop effective countermeasures, undermining the technology’s effectiveness.

- Most-likely scenario: Gemini AI is adopted with moderate success, leading to incremental improvements in digital content verification.

6. Key Individuals and Entities

Google (entity responsible for developing Gemini AI)

7. Thematic Tags

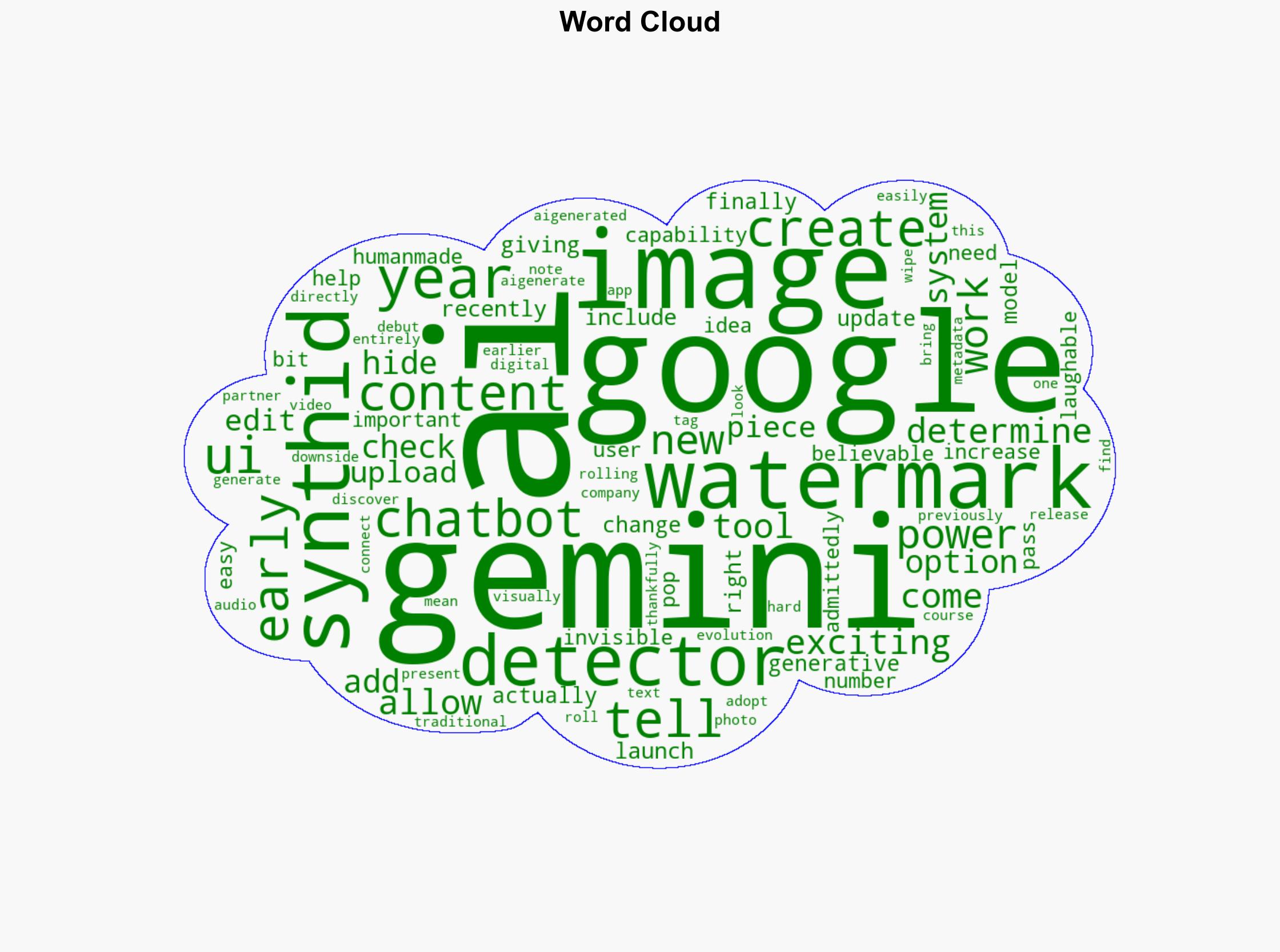

Cybersecurity, Digital Authentication, AI Technology, Misinformation

Structured Analytic Techniques Applied

- Adversarial Threat Simulation: Model and simulate actions of cyber adversaries to anticipate vulnerabilities and improve resilience.

- Indicators Development: Detect and monitor behavioral or technical anomalies across systems for early threat detection.

- Bayesian Scenario Modeling: Quantify uncertainty and predict cyberattack pathways using probabilistic inference.
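As an illustration of the Bayesian technique listed above, the following minimal sketch updates the probabilities of the two competing hypotheses after a single indicator is observed. Every number here is a hypothetical placeholder, not a figure from this brief, and the hypotheses are treated as mutually exclusive and exhaustive purely to keep the arithmetic simple. The function name `bayes_update` and the indicator itself are assumptions made for the example.

```python
def bayes_update(priors, likelihoods):
    """Return posteriors P(H | E) given priors P(H) and likelihoods P(E | H)."""
    # Unnormalized posterior for each hypothesis: P(H) * P(E | H)
    unnormalized = {h: priors[h] * likelihoods[h] for h in priors}
    # Total probability of the evidence, used to normalize
    evidence = sum(unnormalized.values())
    return {h: value / evidence for h, value in unnormalized.items()}


# Hypothetical priors (illustrative only, not taken from the brief)
priors = {"H1": 0.6, "H2": 0.4}

# Hypothetical indicator: a public technique for evading detection is reported.
# Assumed probability of seeing that indicator under each hypothesis.
likelihoods = {"H1": 0.3, "H2": 0.7}

posteriors = bayes_update(priors, likelihoods)
for hypothesis, probability in posteriors.items():
    print(f"{hypothesis}: {probability:.3f}")
```

With these placeholder inputs, the indicator shifts weight toward Hypothesis 2. In practice an analyst would repeat the update for each new indicator, using the previous posteriors as the next priors.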
