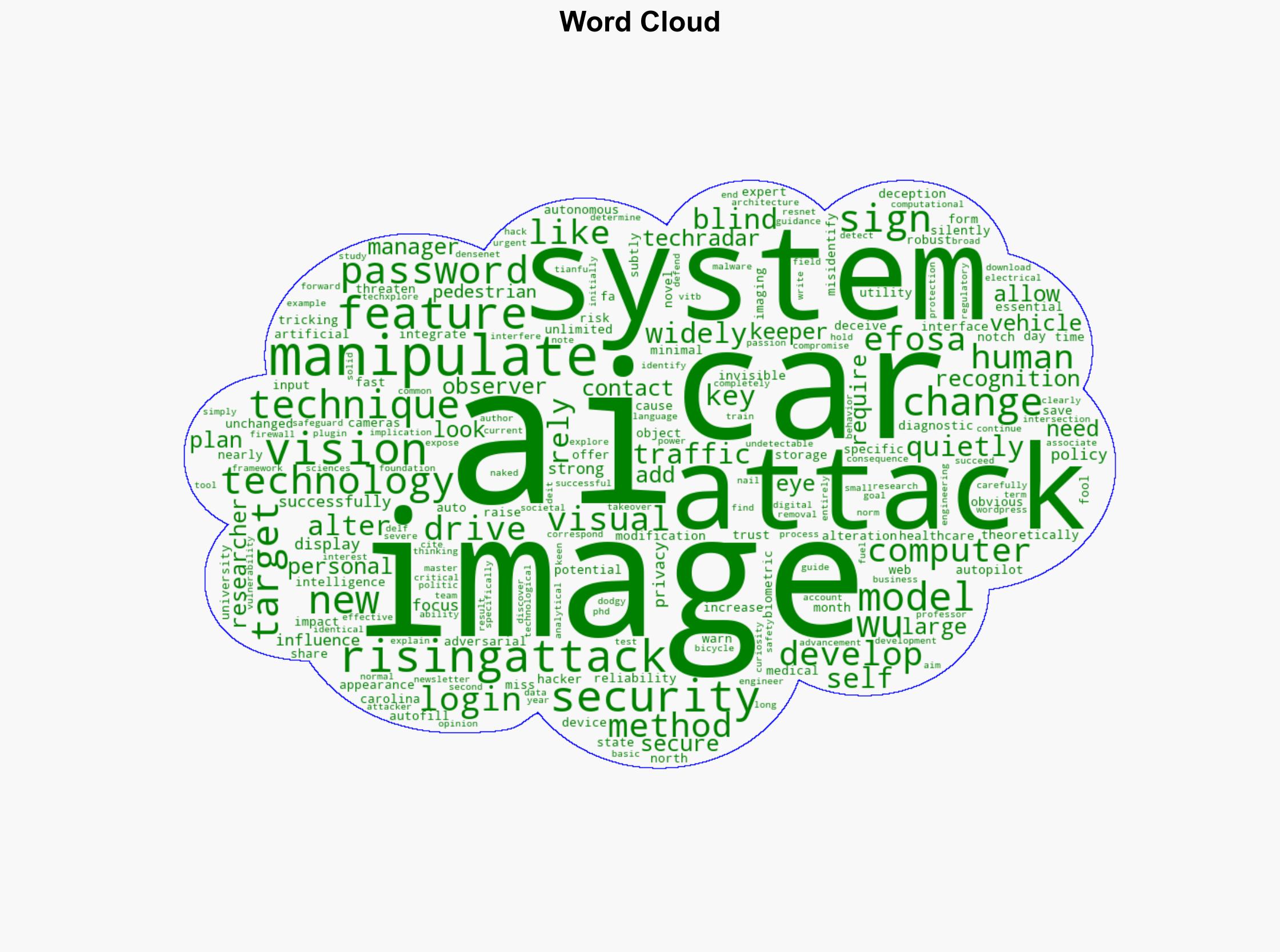

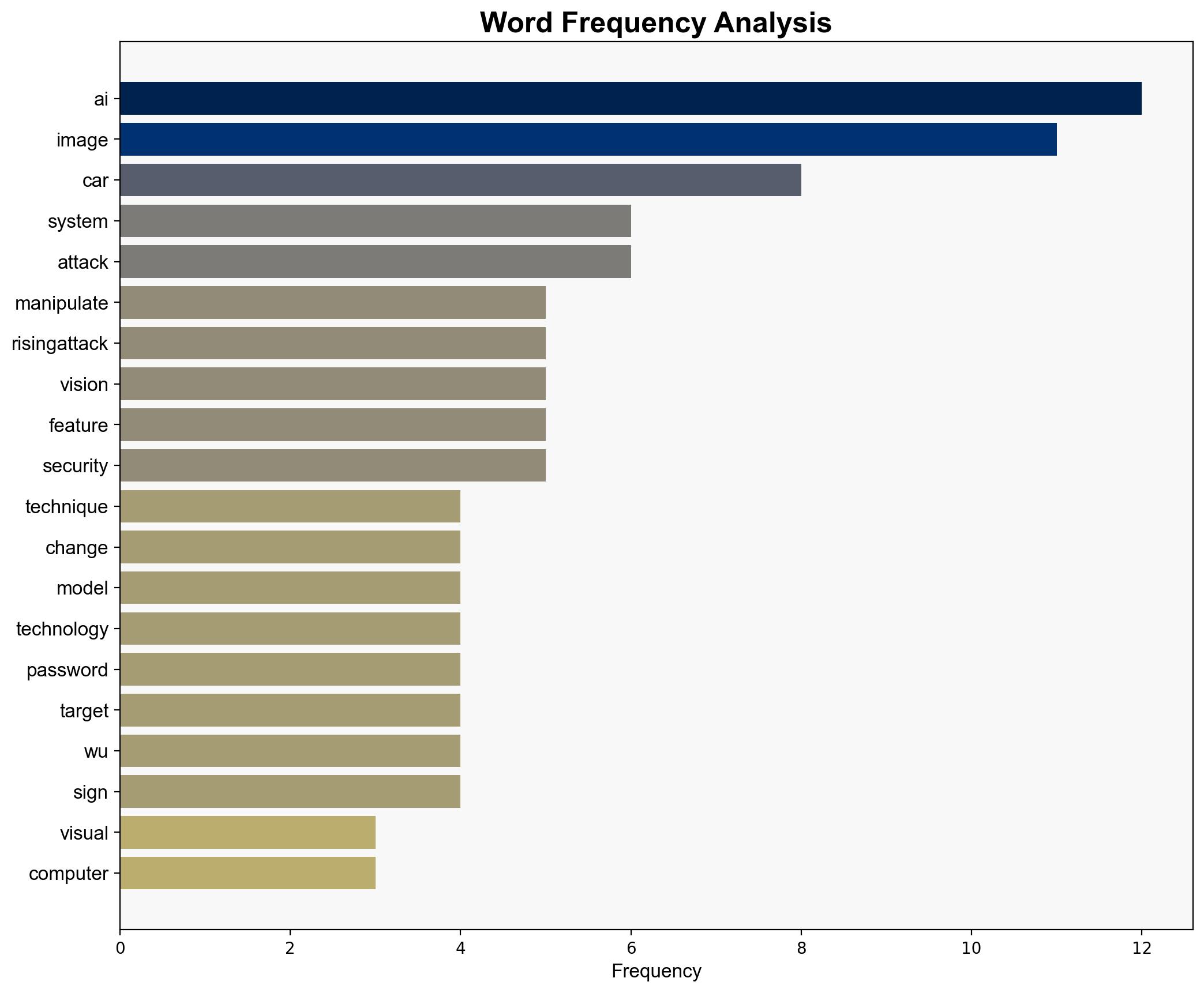

Hackers could one day use novel visual techniques to manipulate what AI sees – RisingAttacK impacts ‘most widely used AI computer vision systems’ – TechRadar

Published on: 2025-07-07

1. BLUF (Bottom Line Up Front)

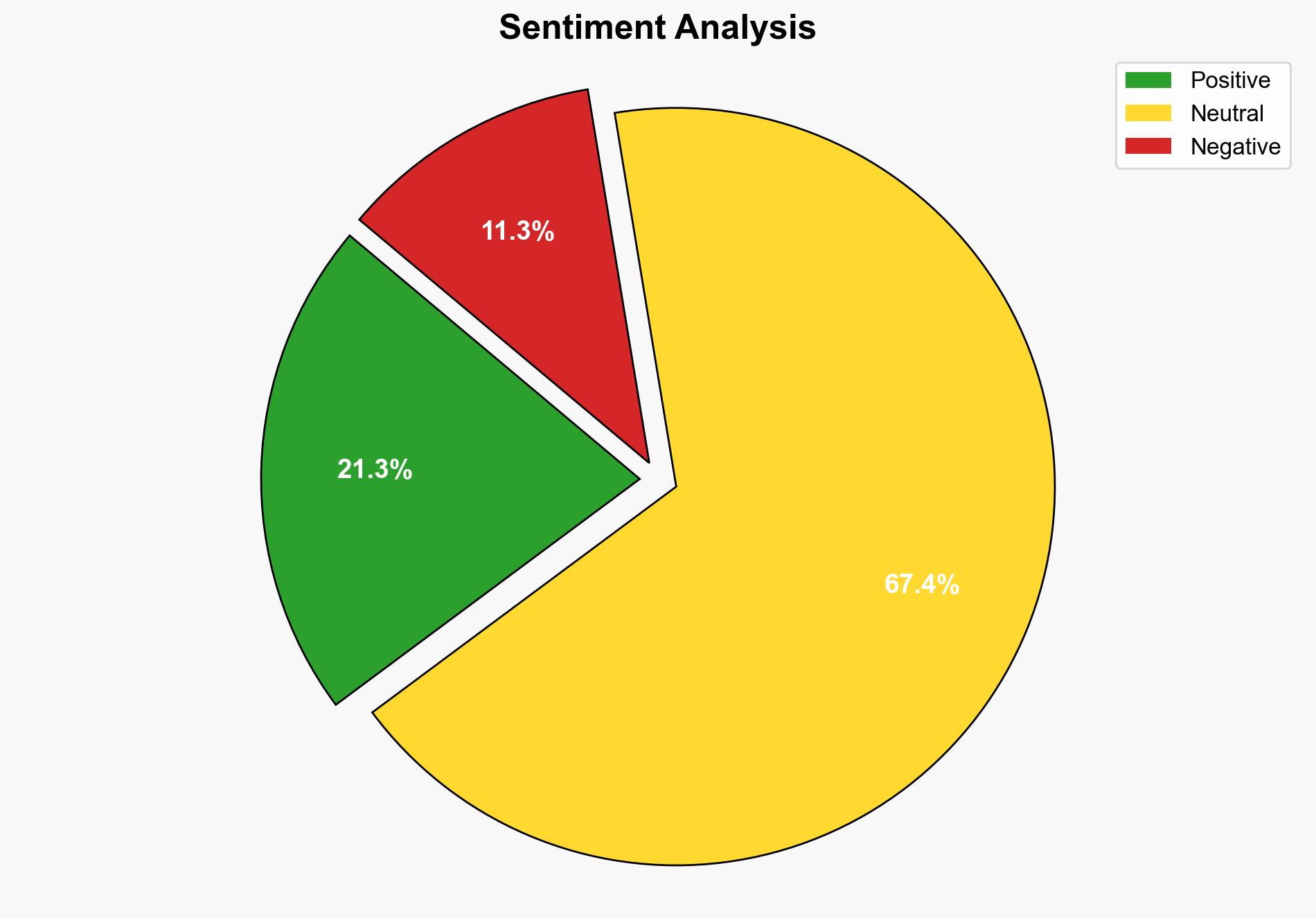

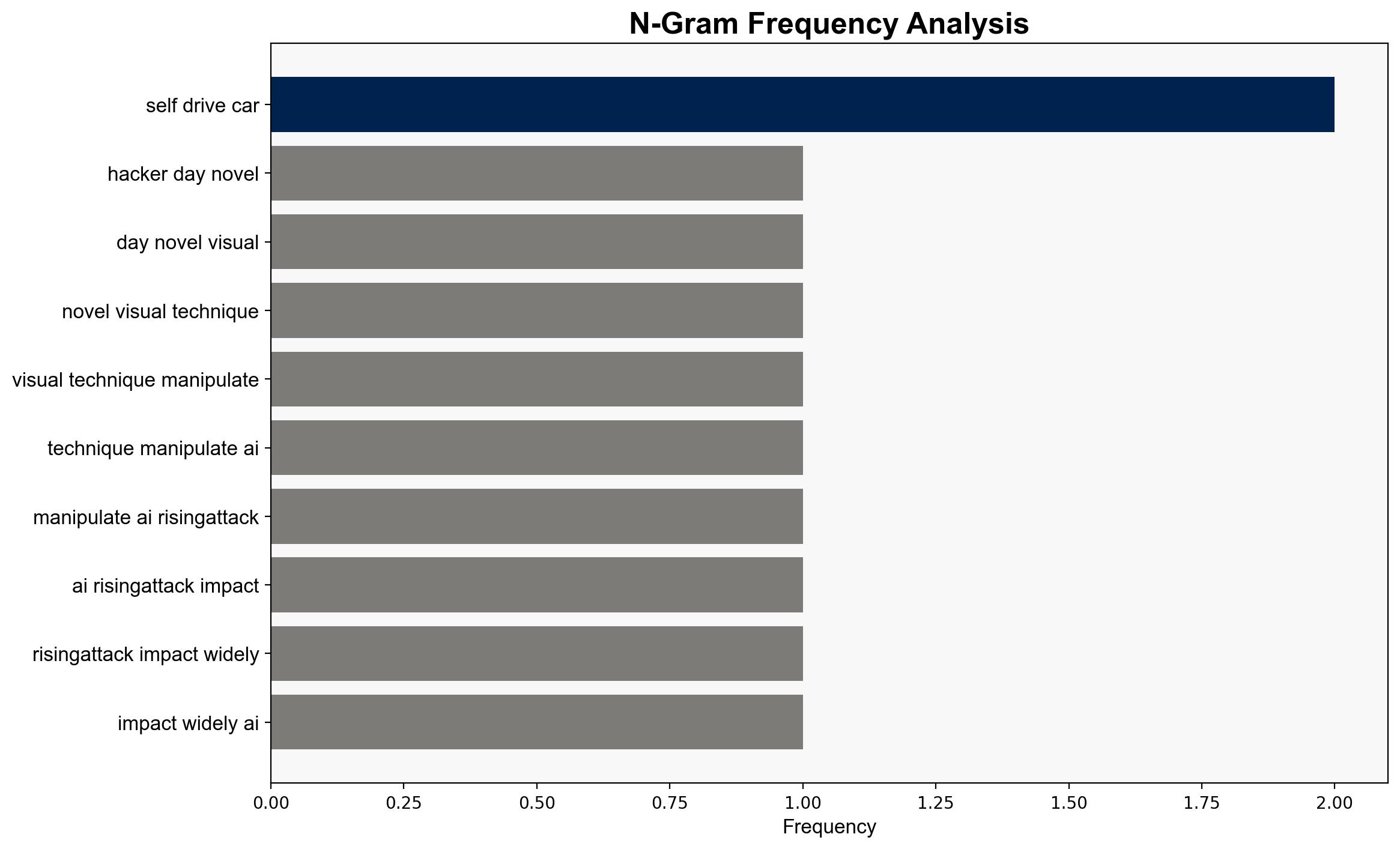

RisingAttacK, a novel adversarial technique, poses a significant threat to AI computer vision systems by subtly altering visual inputs to deceive AI models. This method can compromise critical systems such as autonomous vehicles and healthcare diagnostics, leading to severe safety and security risks. Immediate attention is required to develop robust defenses against such attacks.

2. Detailed Analysis

The following structured analytic techniques have been applied to ensure methodological consistency:

Adversarial Threat Simulation

Simulated potential adversarial behaviors to identify vulnerabilities within AI systems, focusing on subtle image modifications that can deceive AI without human detection.
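To make the idea of a subtle, hard-to-notice modification concrete, here is a minimal sketch under stated assumptions. It uses a hypothetical four-pixel linear classifier and a generic sign-of-gradient perturbation. It is not the RisingAttacK algorithm, whose details this report does not describe, and the weights, bias, pixel values, and `epsilon` are invented for illustration.

```python
# Toy illustration only: a hypothetical linear "classifier" over 4 pixel values.
# This is a generic sign-of-gradient perturbation, NOT the RisingAttacK method.

def score(weights, pixels, bias):
    """Linear decision score; a positive score means the target class is detected."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias

def perturb(weights, pixels, epsilon):
    """Shift each pixel by at most epsilon in the direction that lowers the score."""
    def sign(x):
        return (x > 0) - (x < 0)
    # Clamp to [0, 1] so the result is still a valid pixel intensity.
    return [min(1.0, max(0.0, p - epsilon * sign(w)))
            for w, p in zip(weights, pixels)]

weights = [0.9, -0.6, 0.4, -0.8]   # hypothetical model weights
bias = -0.05                       # hypothetical bias term
image = [0.50, 0.40, 0.45, 0.35]   # hypothetical pixel intensities in [0, 1]

adversarial = perturb(weights, image, epsilon=0.03)

original_label = score(weights, image, bias) > 0
adversarial_label = score(weights, adversarial, bias) > 0
max_change = max(abs(a - b) for a, b in zip(adversarial, image))

print(original_label, adversarial_label, round(max_change, 3))
```

In this toy setup no pixel moves by more than 0.03 on a 0 to 1 scale, yet the decision flips. Real vision models are nonlinear and far larger, so this only illustrates the principle.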

Indicators Development

Established indicators to detect and monitor anomalies in AI behavior, emphasizing early threat detection in systems reliant on visual recognition.
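One possible shape for such an indicator is sketched below, assuming the monitored model exposes a per-input confidence value. The class name `ConfidenceDropIndicator`, the window size, and the drop threshold are hypothetical choices for illustration, not indicators named in the report.

```python
from collections import deque

class ConfidenceDropIndicator:
    """Flags an observation whose confidence falls well below a rolling baseline."""

    def __init__(self, window=5, max_drop=0.25):
        self.history = deque(maxlen=window)  # recent non-flagged confidences
        self.max_drop = max_drop             # hypothetical alert threshold

    def observe(self, confidence):
        """Return True if confidence is more than max_drop below the rolling mean."""
        flagged = False
        # Only judge once the window is full, so the baseline is meaningful.
        if len(self.history) == self.history.maxlen:
            baseline = sum(self.history) / len(self.history)
            flagged = (baseline - confidence) > self.max_drop
        # Keep flagged values out of the baseline so one anomaly does not mask the next.
        if not flagged:
            self.history.append(confidence)
        return flagged

indicator = ConfidenceDropIndicator(window=3, max_drop=0.25)
stream = [0.93, 0.91, 0.95, 0.94, 0.52, 0.92]  # hypothetical confidence values
flags = [indicator.observe(c) for c in stream]
print(flags)
```

A confidence drop is only a weak signal on its own, since adversarial inputs can also produce confidently wrong outputs. In practice it would likely be combined with other indicators.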

Bayesian Scenario Modeling

Utilized probabilistic models to predict potential pathways for cyberattacks, assessing the likelihood and impact of RisingAttacK on AI systems.
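The report does not give its model or its numbers, so the following is only a sketch of the kind of Bayesian update such modeling relies on. The prior and the two alert rates are hypothetical values chosen for illustration.

```python
def posterior(prior, p_evidence_given_h, p_evidence_given_not_h):
    """Bayes' rule: P(H | E) = P(E | H) P(H) / P(E)."""
    numerator = p_evidence_given_h * prior
    evidence = numerator + p_evidence_given_not_h * (1.0 - prior)
    return numerator / evidence

# Hypothetical inputs: none of these figures come from the report.
prior_attack = 0.05          # assumed prior that a given incident is an attack
p_alert_given_attack = 0.80  # assumed chance an indicator fires during an attack
p_alert_given_benign = 0.10  # assumed false-alarm rate

updated = posterior(prior_attack, p_alert_given_attack, p_alert_given_benign)
print(f"P(attack | alert) = {updated:.3f}")
```

With these assumed inputs, a single alert raises the estimated attack probability from 5% to roughly 30%. This shows why likelihood assessments depend heavily on the false-alarm rate of the underlying indicators.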

Narrative Pattern Analysis

Analyzed the underlying narratives and motivations behind adversarial attacks to enhance threat assessment and response strategies.

3. Implications and Strategic Risks

The RisingAttacK technique introduces significant risks across multiple domains. In the cyber domain, it threatens the integrity of AI systems and could disrupt autonomous vehicles and healthcare diagnostics. There are political and economic risks as well: compromised AI systems could undermine public trust and create regulatory challenges. These vulnerabilities could also cascade into national security concerns, which calls for a comprehensive response strategy.

4. Recommendations and Outlook

- Develop and implement advanced detection mechanisms to identify and neutralize adversarial attacks on AI systems.

- Enhance collaboration between government agencies, technology firms, and research institutions to strengthen AI security frameworks.

- Scenario-based projections:

  - Best Case: Successful development of robust defenses against RisingAttacK, minimizing potential impacts.

  - Worst Case: Widespread exploitation of AI vulnerabilities, leading to significant disruptions and loss of public trust.

  - Most Likely: Gradual improvements in AI security, with ongoing challenges in keeping pace with evolving attack techniques.

5. Key Individuals and Entities

Tianfu Wu, a key researcher involved in the study of RisingAttacK, has been instrumental in identifying the vulnerabilities within AI systems.

6. Thematic Tags

national security threats, cybersecurity, AI vulnerabilities, adversarial attacks, autonomous vehicles, healthcare diagnostics