How attackers use patience to push past AI guardrails – Help Net Security

Published on: 2025-11-18

AI-powered OSINT brief from verified open sources. Automated NLP signal extraction with human verification.

Intelligence Report: How Attackers Use Patience to Push Past AI Guardrails

1. BLUF (Bottom Line Up Front)

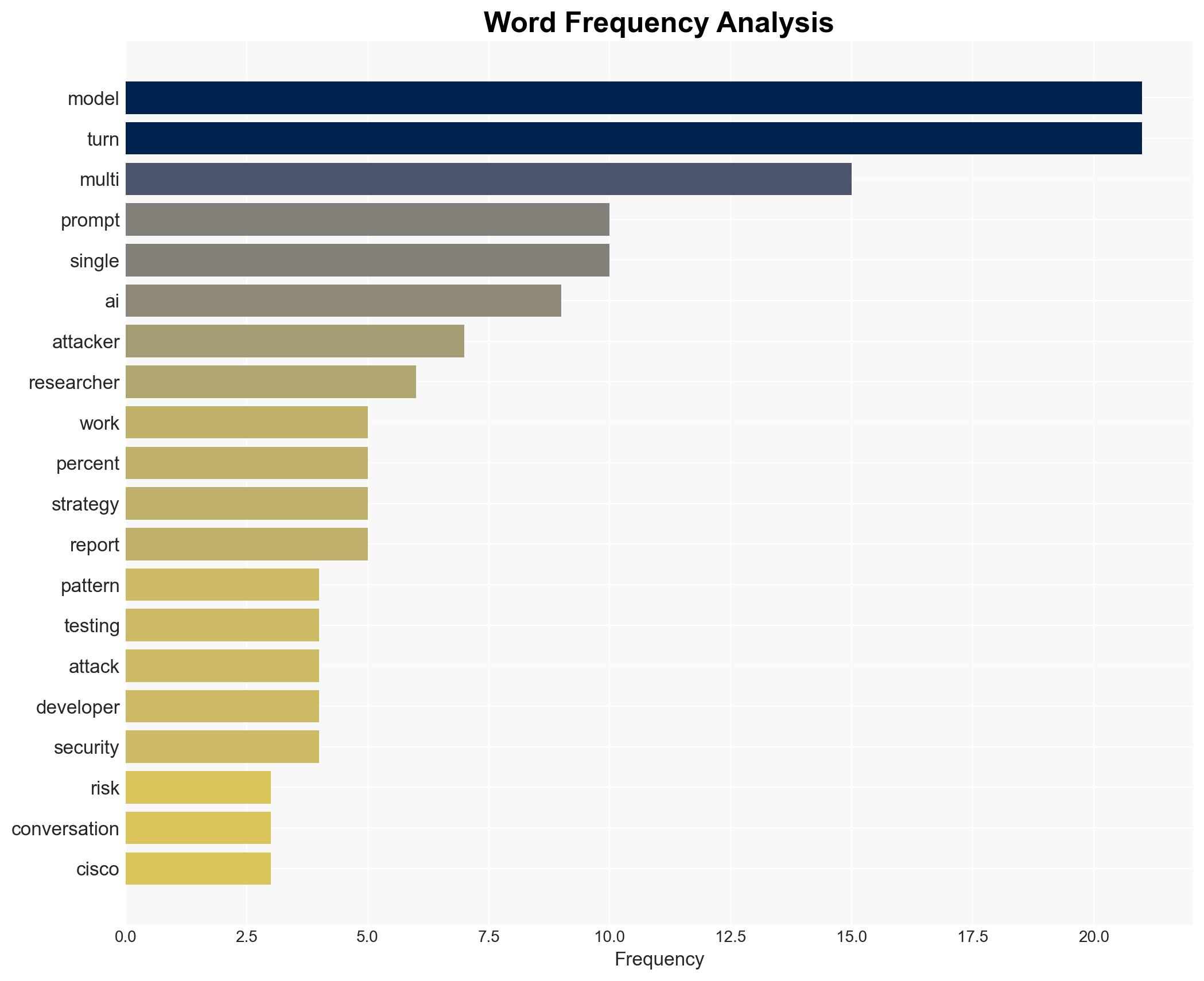

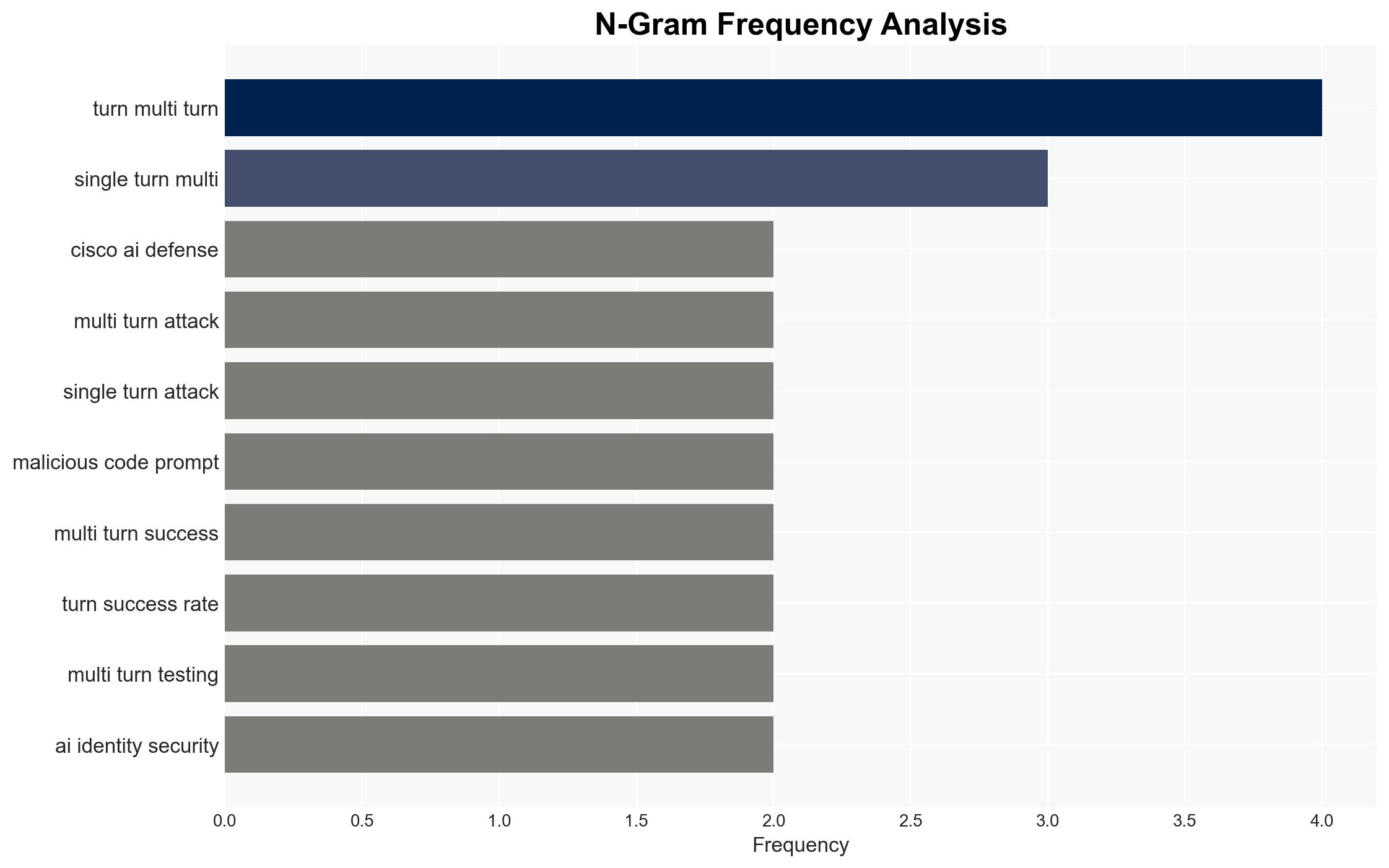

With moderate confidence, the best-supported hypothesis is that attackers are increasingly using multi-turn interactions to bypass AI guardrails, exploiting models that have not been adequately tested against prolonged engagements. Organizations should implement layered security measures, including context-aware filters and ongoing multi-turn simulations, to mitigate these evolving threats.

2. Competing Hypotheses

Hypothesis 1: Attackers are primarily using multi-turn interactions to exploit vulnerabilities in AI models, because these models are less robust in extended dialogues than in single-turn interactions.

Hypothesis 2: The perceived increase in multi-turn attack success is due to inadequate initial testing and security measures, rather than an inherent vulnerability in the AI models themselves.

Assessment: Hypothesis 1 is more likely, given evidence of higher success rates for multi-turn attacks and the structured nature of these interactions, which suggests deliberate exploitation. Hypothesis 2, while plausible, does not account for the consistency observed across different models and scenarios.
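The assessment weighs the same evidence against both hypotheses, which is the logic that Bayesian updating, one of the techniques listed at the end of this brief, makes explicit. The sketch below is a minimal illustration assuming equal priors and conditionally independent evidence; every probability in it is a hypothetical placeholder, not an estimate drawn from the source reporting.

```python
"""Illustrative Bayesian comparison of the two competing hypotheses.

All priors and likelihoods are HYPOTHETICAL placeholders that show the
mechanics only; they are not estimates from the source reporting.
"""

# Hypothetical equal priors: no initial preference between H1 and H2.
PRIORS = {"H1": 0.5, "H2": 0.5}

# Hypothetical likelihoods P(evidence | hypothesis) for the two observations
# named in the assessment. Evidence items are assumed conditionally independent.
LIKELIHOODS = {
    "higher multi-turn success rate": {"H1": 0.8, "H2": 0.6},
    "consistency across models and scenarios": {"H1": 0.7, "H2": 0.3},
}


def posterior(priors, likelihoods, observed):
    """Multiply each prior by the likelihood of every observed item,
    then normalize so the posteriors sum to 1 (Bayes' rule)."""
    scores = dict(priors)
    for item in observed:
        for h in scores:
            scores[h] *= likelihoods[item][h]
    total = sum(scores.values())
    return {h: s / total for h, s in scores.items()}


if __name__ == "__main__":
    result = posterior(PRIORS, LIKELIHOODS, list(LIKELIHOODS))
    for h, p in result.items():
        print(f"{h}: {p:.2f}")  # prints H1: 0.76, then H2: 0.24
```

With these placeholder values, the "consistency across models" item does most of the discriminating work (a likelihood ratio of about 2.3, versus about 1.3 for the success-rate item), which mirrors the assessment's argument that Hypothesis 2 does not explain that consistency.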

3. Key Assumptions and Red Flags

Assumptions: It is assumed that AI models are not uniformly tested for multi-turn interactions, and that attackers have the capability to conduct such sophisticated attacks.

Red Flags: Attackers are adapting rapidly to AI defenses, and successful multi-turn attacks may be underreported because detection capabilities are lacking.

4. Implications and Strategic Risks

The strategic risk lies in the potential for widespread exploitation of AI systems, leading to compromised data integrity and security breaches. This could escalate into significant economic and reputational damage for organizations relying on AI for critical operations. Politically, there could be increased regulatory scrutiny and pressure to enhance AI security standards.

5. Recommendations and Outlook

- Implement layered security measures, including context-aware filters and ongoing multi-turn simulations, to identify and mitigate vulnerabilities.

- Conduct comprehensive testing of AI models for both single-turn and multi-turn interactions to ensure robust defenses.

- Best-case scenario: Organizations successfully adapt to the evolving threat landscape, significantly reducing the success rate of multi-turn attacks.

- Worst-case scenario: Attackers continue to outpace defensive measures, leading to widespread AI system breaches and significant economic losses.

- Most-likely scenario: A gradual improvement in AI defenses as organizations adopt recommended strategies, with a moderate reduction in successful multi-turn attacks.
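The first two recommendations can be illustrated with a toy contrast between a stateless filter, which judges each message in isolation as single-turn testing does, and a context-aware filter that also tracks accumulated risk over recent turns. Both are replayed against a few scripted conversations as a minimal multi-turn simulation. This is a sketch under stated assumptions: per-turn risk scores are taken as given from some upstream classifier, and the thresholds, window size, and scenarios are hypothetical values chosen only for illustration.

```python
"""Toy comparison of a stateless per-message filter and a context-aware
(sliding-window) filter, replayed against scripted multi-turn conversations.

Assumptions (hypothetical, not from the source): each turn already has a
risk score in [0, 1] from an upstream classifier; thresholds, window size,
and scenarios are illustrative values only.
"""
from collections import deque
from typing import Iterable, List, Optional, Tuple

PER_TURN_THRESHOLD = 0.8  # hypothetical: block any single turn at/above this
WINDOW_SIZE = 4           # hypothetical: number of recent turns considered
WINDOW_THRESHOLD = 1.5    # hypothetical: block when the windowed sum reaches this


def stateless_filter(scores: Iterable[float]) -> Optional[int]:
    """Return the index of the first blocked turn, or None if none is blocked.
    Each turn is judged in isolation, as in single-turn testing."""
    for i, s in enumerate(scores):
        if s >= PER_TURN_THRESHOLD:
            return i
    return None


def context_aware_filter(scores: Iterable[float]) -> Optional[int]:
    """Block on a single high-risk turn OR on accumulated risk across the
    most recent WINDOW_SIZE turns."""
    window: deque = deque(maxlen=WINDOW_SIZE)  # oldest score drops off automatically
    for i, s in enumerate(scores):
        window.append(s)
        if s >= PER_TURN_THRESHOLD or sum(window) >= WINDOW_THRESHOLD:
            return i
    return None


# Hypothetical scripted conversations (per-turn risk scores) for the simulation.
SCENARIOS = {
    "single-turn request": [0.9],
    "gradual escalation": [0.2, 0.3, 0.4, 0.5, 0.6],
    "benign chat": [0.1, 0.0, 0.2, 0.1, 0.1],
}


def run_simulation() -> List[Tuple[str, Optional[int], Optional[int]]]:
    """Replay every scenario through both filters."""
    return [
        (name, stateless_filter(s), context_aware_filter(s))
        for name, s in SCENARIOS.items()
    ]


if __name__ == "__main__":
    for name, stateless, contextual in run_simulation():
        print(f"{name:20} stateless={stateless} context-aware={contextual}")
```

In this run the gradual-escalation script is never blocked by the stateless filter but is stopped at its fifth turn (index 4) by the windowed check, while the benign chat passes both. A windowed sum is only one possible design; a deployed filter might instead re-classify the whole transcript or down-weight older turns.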

6. Key Individuals and Entities

No specific individuals are mentioned in the source text. Key entities include AI model developers, cybersecurity teams, and organizational decision-makers responsible for AI deployment and security.

7. Thematic Tags

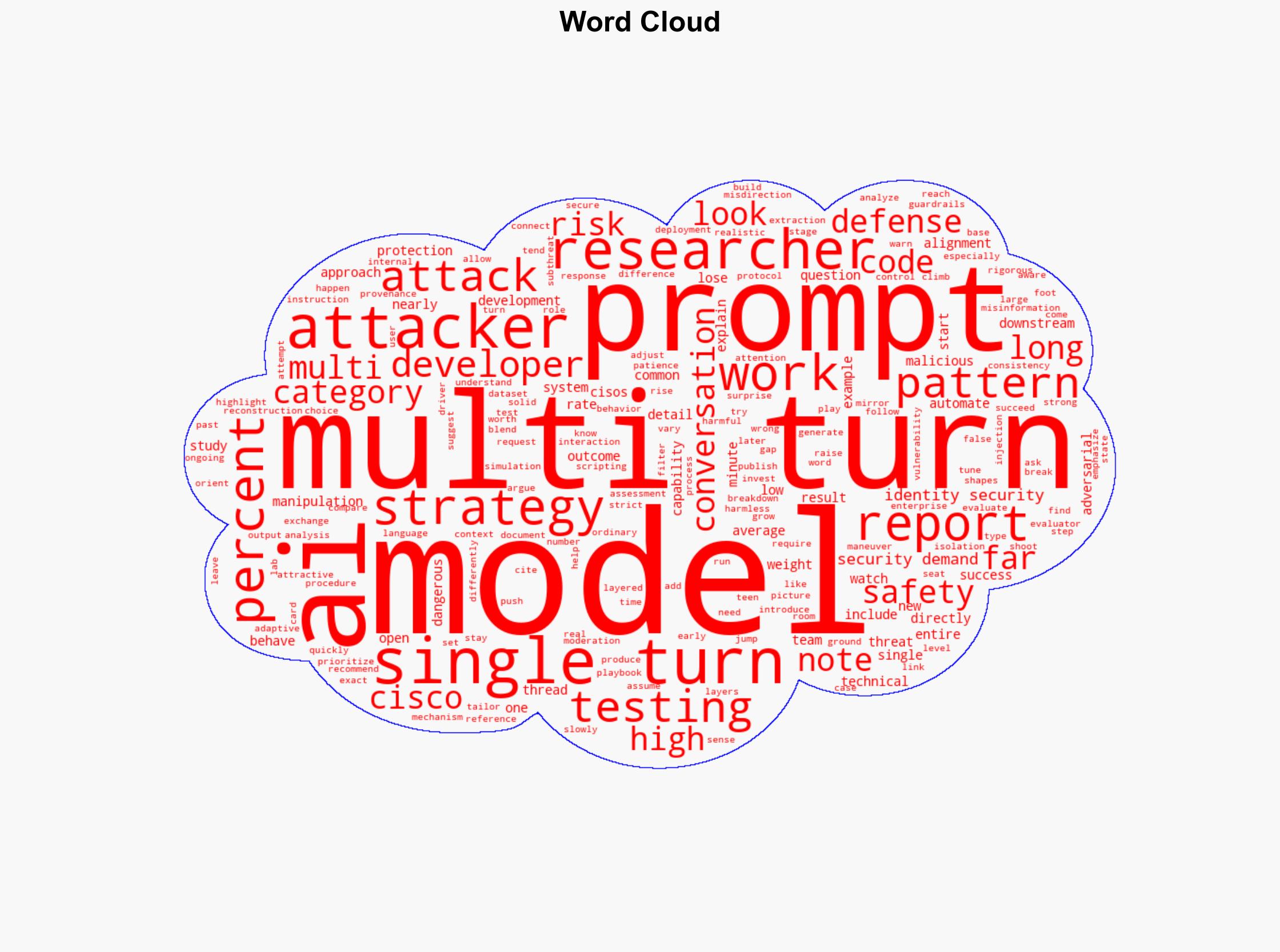

Cybersecurity, AI Vulnerabilities, Multi-turn Interactions, Adversarial Testing

Structured Analytic Techniques Applied

- Adversarial Threat Simulation: Model hostile behavior to identify vulnerabilities.

- Indicators Development: Detect and monitor behavioral or technical anomalies across systems for early threat detection.

- Bayesian Scenario Modeling: Quantify uncertainty and predict cyberattack pathways using probabilistic inference.
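As an example of indicator development, one behavioral signal for the patient, multi-turn pattern described in this brief is a steady upward trend in per-turn risk, which an ordinary least-squares slope can capture even when no single turn looks alarming. The sketch assumes per-turn risk scores are already available; MIN_TURNS and SLOPE_ALERT are hypothetical tuning values, not recommendations.

```python
"""Sketch of one behavioral indicator for multi-turn escalation.

Assumptions (hypothetical): per-turn risk scores come from an upstream
classifier; MIN_TURNS and SLOPE_ALERT are illustrative tuning values.
"""
from typing import Sequence

MIN_TURNS = 4       # hypothetical: ignore very short conversations
SLOPE_ALERT = 0.05  # hypothetical: risk increase per turn that raises an alert


def risk_slope(scores: Sequence[float]) -> float:
    """Ordinary least-squares slope of risk score against turn index."""
    n = len(scores)
    if n < 2:
        return 0.0  # a trend needs at least two points
    mean_x = (n - 1) / 2
    mean_y = sum(scores) / n
    cov = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(scores))
    var = sum((i - mean_x) ** 2 for i in range(n))
    return cov / var


def escalation_indicator(scores: Sequence[float]) -> bool:
    """Flag a conversation whose risk trends steadily upward, even if no
    single turn would trip a per-message filter."""
    if len(scores) < MIN_TURNS:
        return False
    return risk_slope(scores) >= SLOPE_ALERT


if __name__ == "__main__":
    print(escalation_indicator([0.2, 0.3, 0.4, 0.5, 0.6]))  # prints True
    print(escalation_indicator([0.1, 0.0, 0.2, 0.1, 0.1]))  # prints False
```

For the scores 0.2, 0.3, 0.4, 0.5, 0.6 the slope is 0.1 per turn, so the indicator fires; for a flat, low-risk conversation the slope is about 0.01 and it does not. In practice such an indicator would be monitored across many sessions rather than used to block a single one.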
