How OpenAIs red team made ChatGPT agent into an AI fortress – VentureBeat

Published on: 2025-07-18

Intelligence Report: How OpenAIs red team made ChatGPT agent into an AI fortress – VentureBeat

1. BLUF (Bottom Line Up Front)

OpenAI’s red team has significantly enhanced the security of the ChatGPT agent by identifying and addressing critical vulnerabilities. The team’s systematic testing revealed universal exploits and potential risks associated with autonomous AI agents, leading to substantial security improvements. Recommendations include ongoing adversarial testing and enhanced user trust mechanisms.

2. Detailed Analysis

The following structured analytic techniques have been applied to ensure methodological consistency:

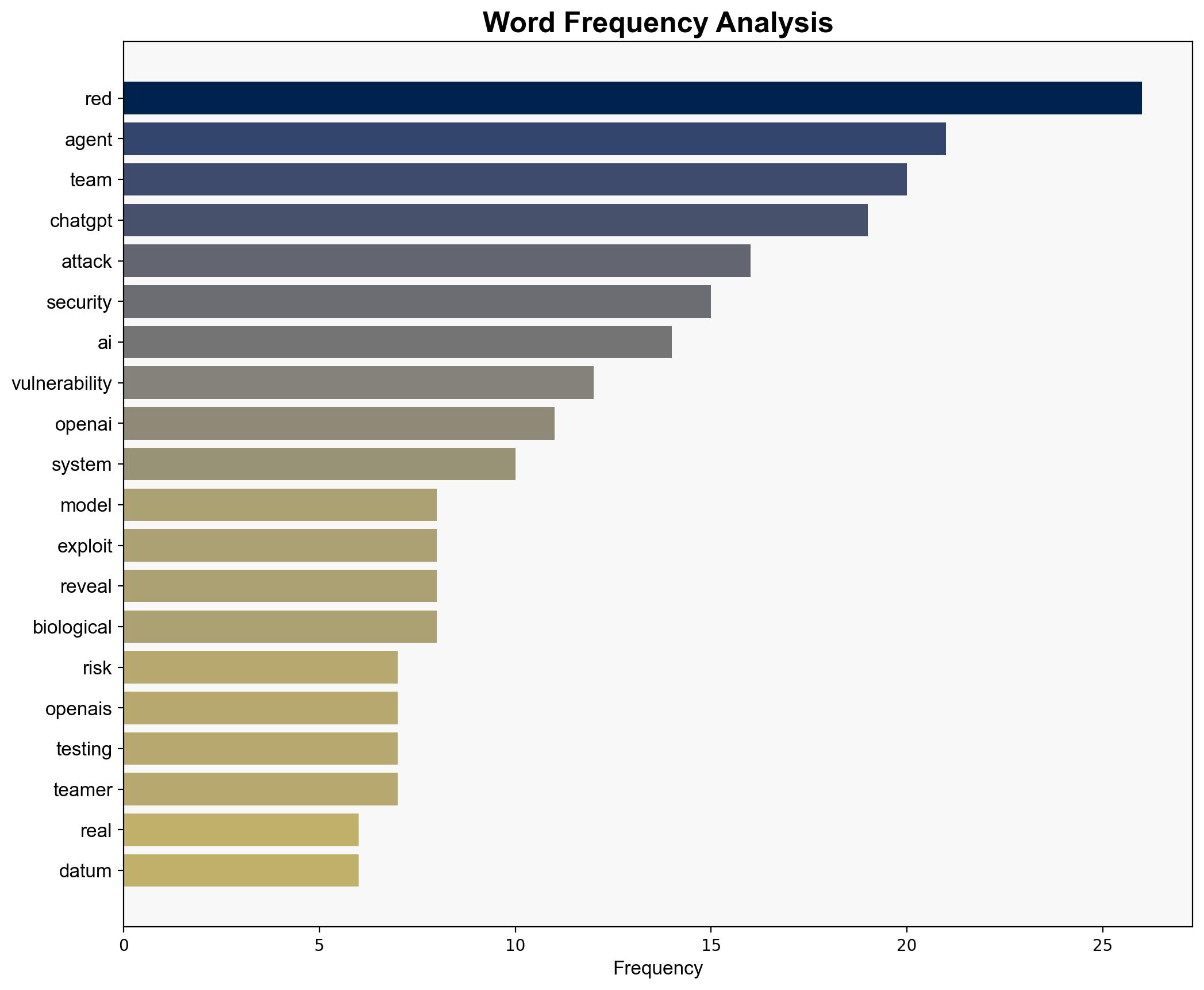

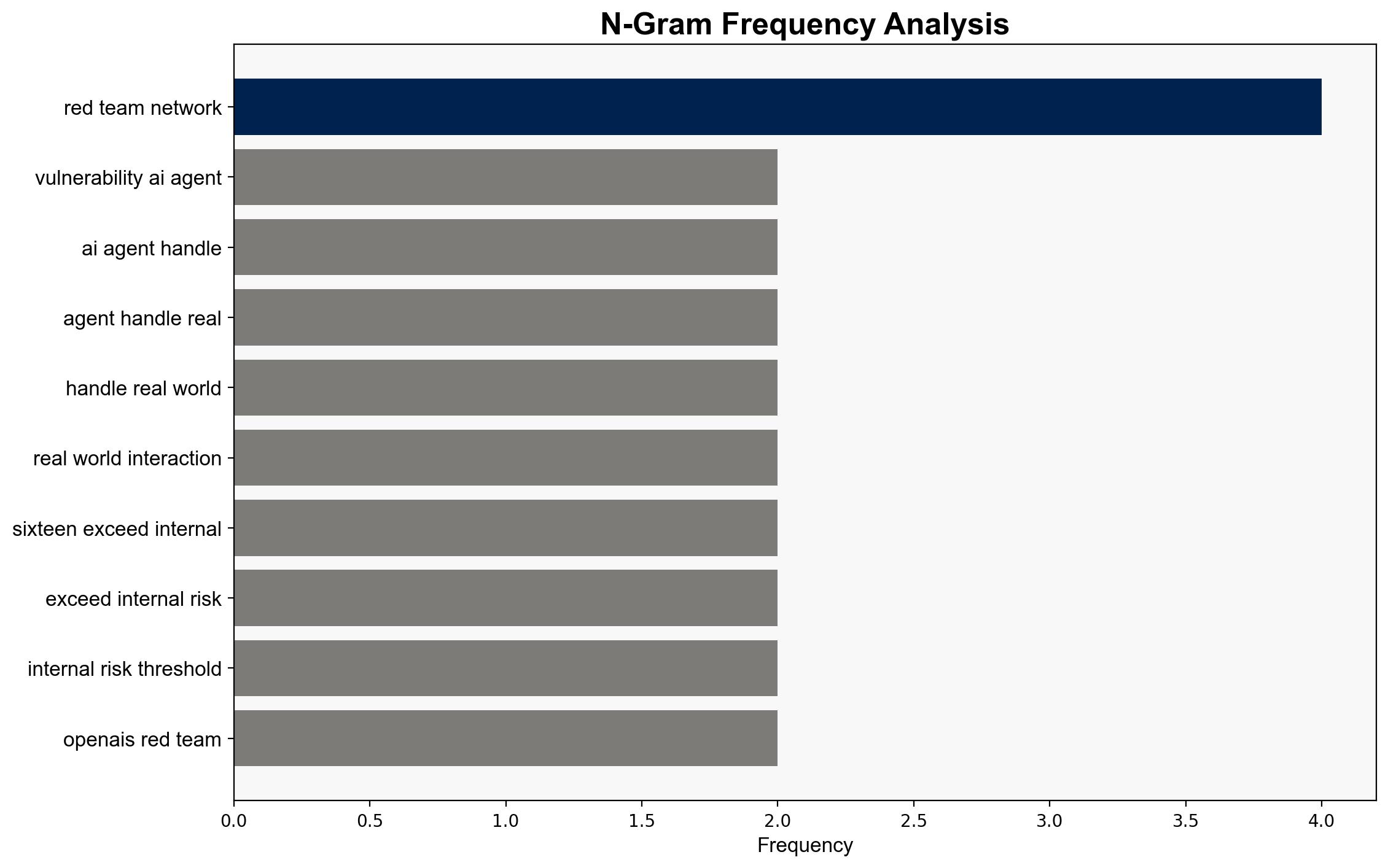

Adversarial Threat Simulation

OpenAI’s red team conducted adversarial simulations to identify vulnerabilities in the ChatGPT agent, focusing on potential exploits that could compromise system integrity.

Indicators Development

Developed indicators for detecting anomalies in AI behavior, enabling early identification of potential security breaches.

Bayesian Scenario Modeling

Utilized probabilistic models to predict potential cyberattack pathways and assess the likelihood of various threat scenarios.

Network Influence Mapping

Mapped the influence of various actors within the AI security landscape to understand their impact on system vulnerabilities.

3. Implications and Strategic Risks

The findings highlight the need for robust security measures in AI systems to prevent exploitation by malicious actors. The potential for data leaks and unauthorized access poses significant risks to user privacy and organizational security. The systemic vulnerabilities identified could have cascading effects across sectors reliant on AI technology.

4. Recommendations and Outlook

- Implement continuous adversarial testing to identify emerging threats and vulnerabilities.

- Enhance user trust mechanisms by improving transparency in AI operations and data handling practices.

- Scenario-based projections suggest that proactive security measures could mitigate risks, while failure to address vulnerabilities may lead to increased exploitation attempts.

5. Key Individuals and Entities

Keren Gu

6. Thematic Tags

national security threats, cybersecurity, AI security, data privacy