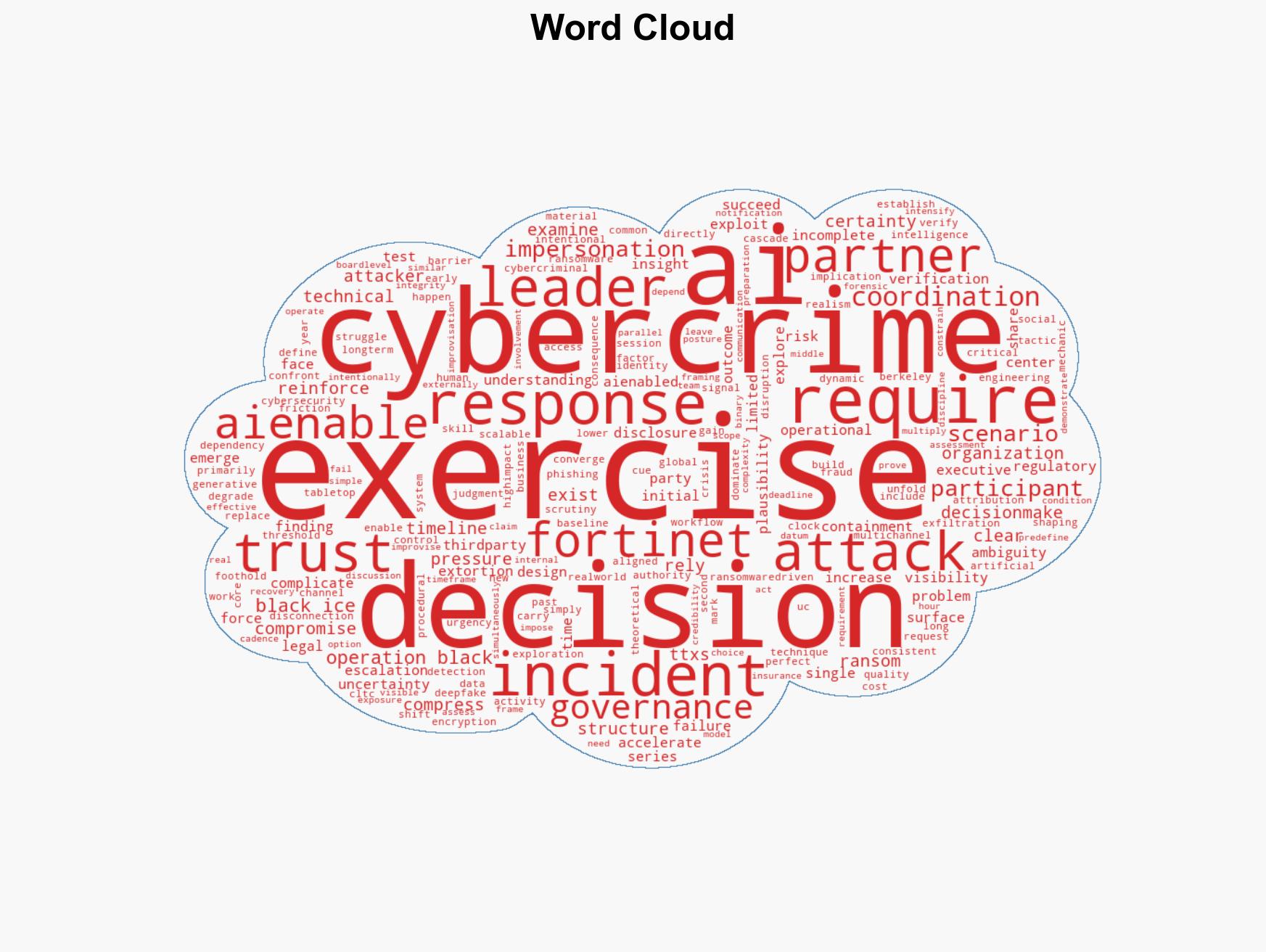

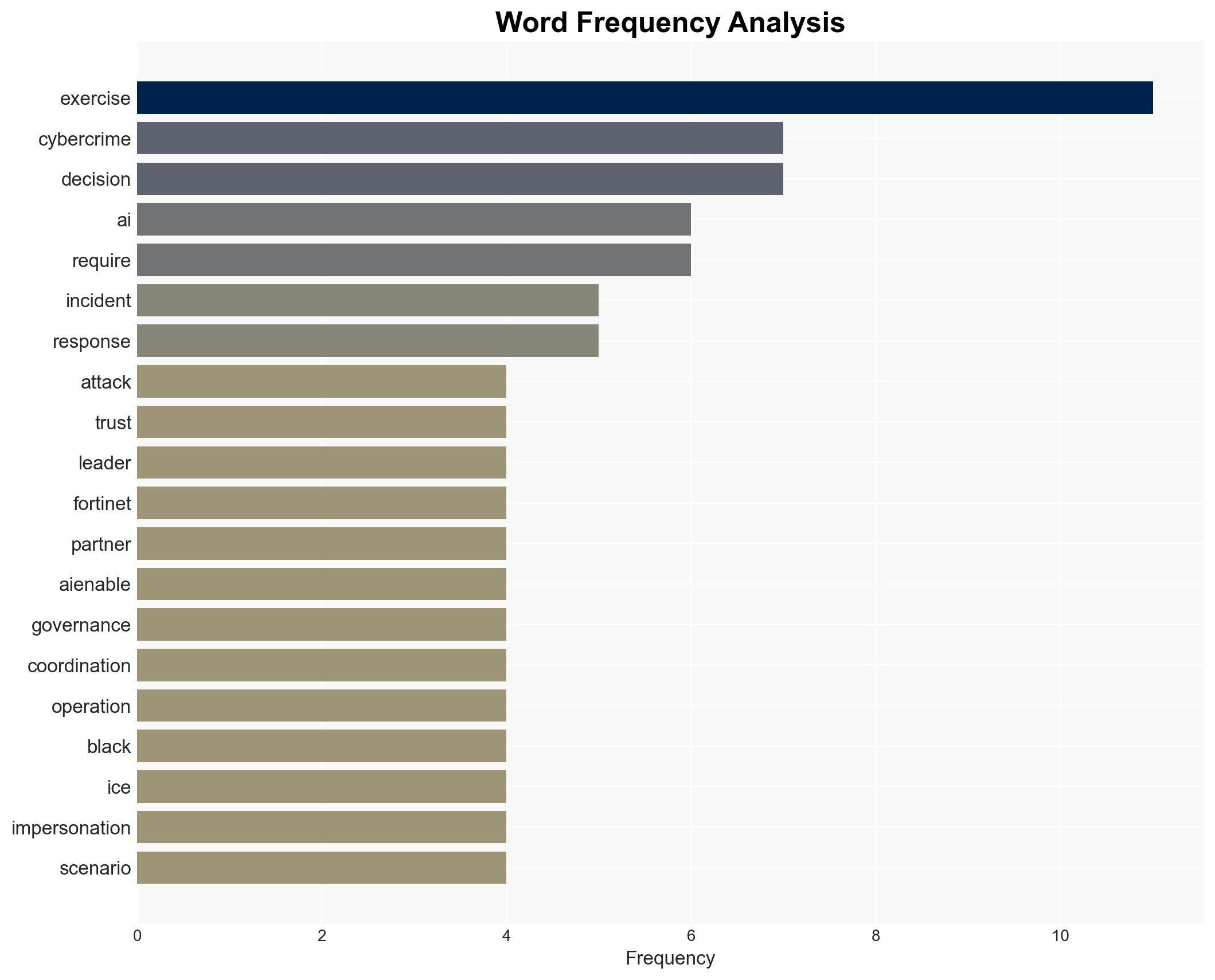

Insights from Operation Black Ice: AI’s Role in Cybercrime and Organizational Decision-Making Challenges

Published on: 2026-02-10

AI-powered OSINT brief from verified open sources. Automated NLP signal extraction with human verification.

Intelligence Report: From Theory to Pressure: What the Third AI-Enabled Cybercrime Tabletop Exercise Revealed

1. BLUF (Bottom Line Up Front)

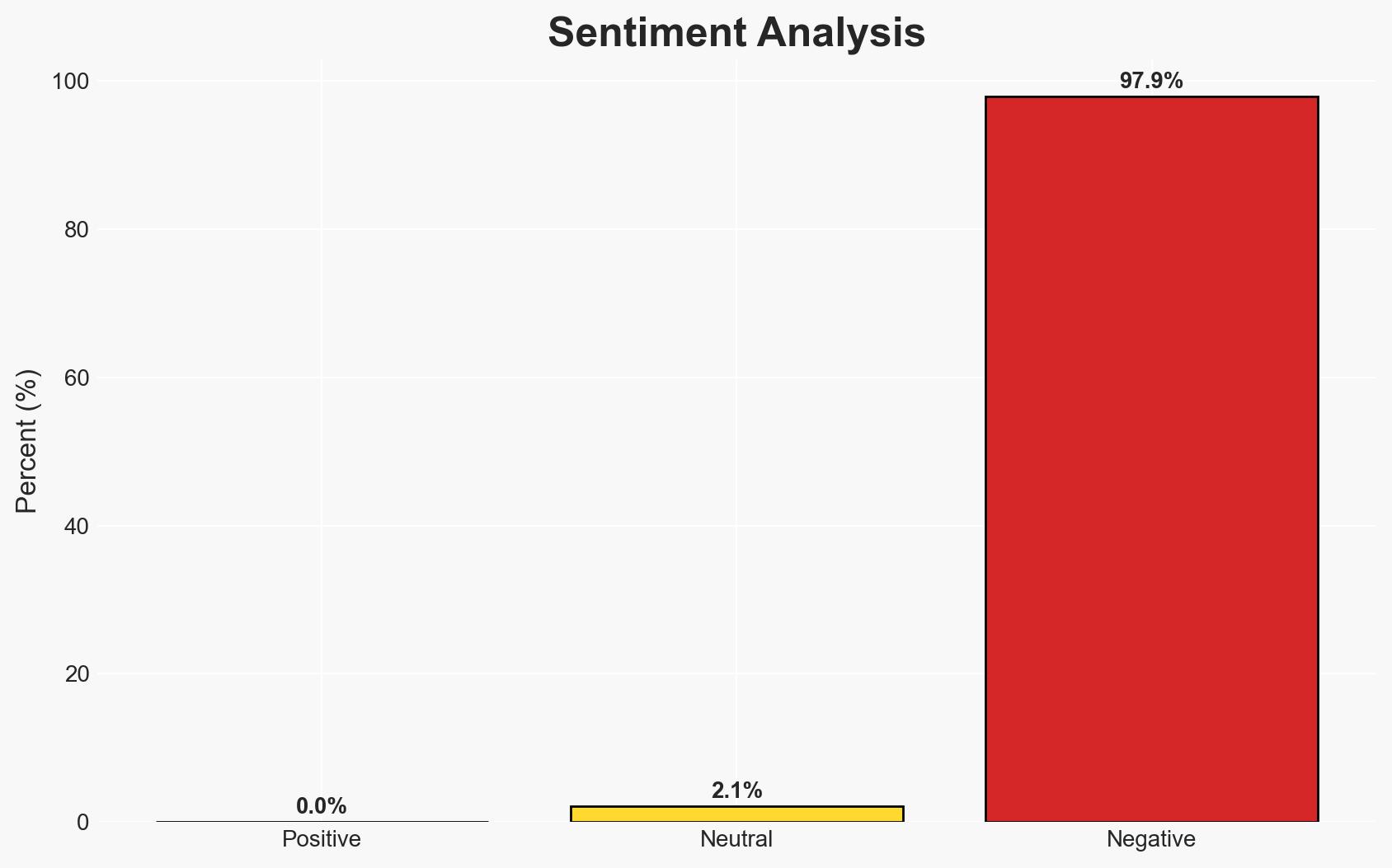

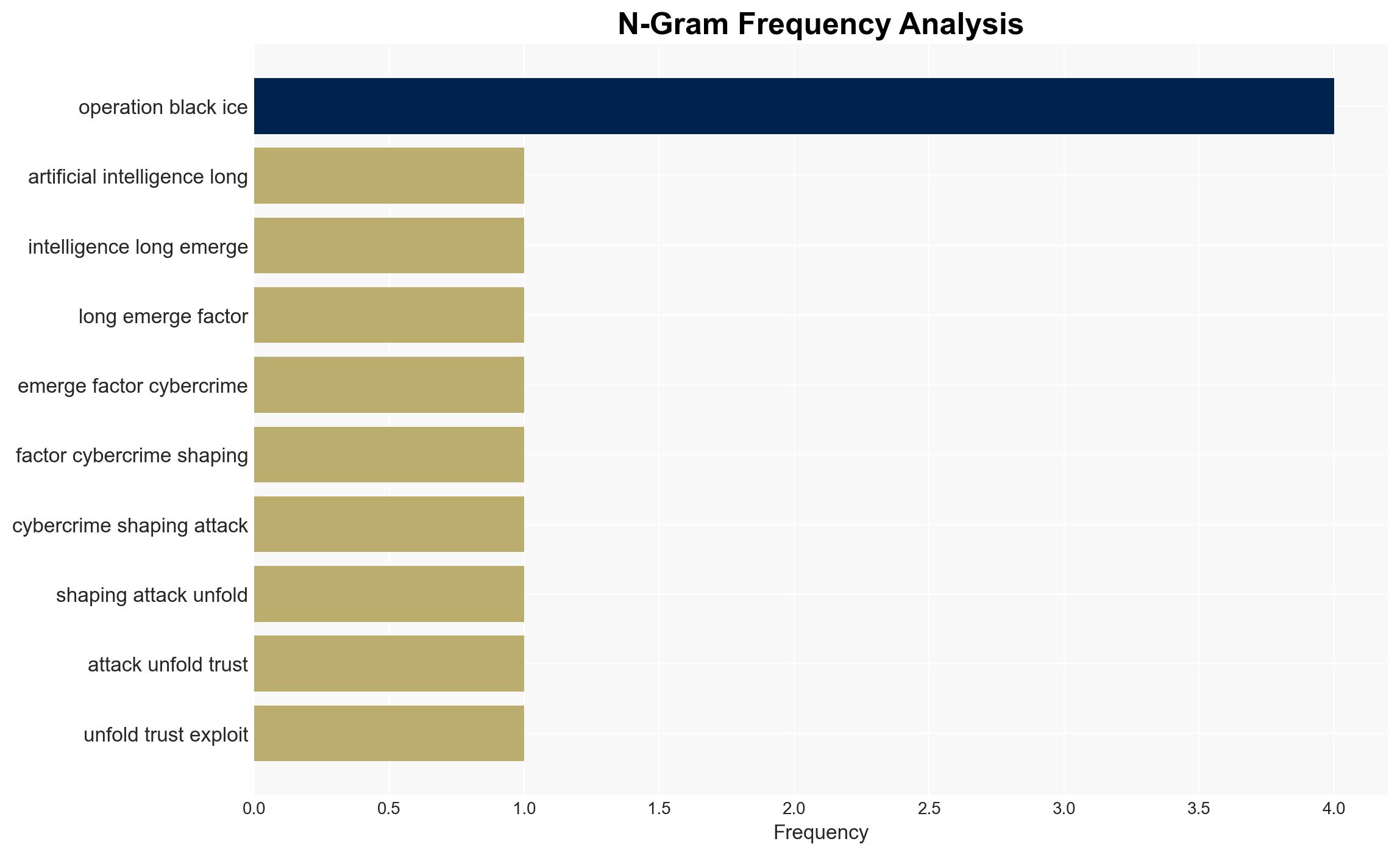

The third AI-enabled cybercrime tabletop exercise, Operation Black Ice, highlighted the increasing role of AI in compressing decision-making timelines and complicating governance structures. The exercise revealed that AI-enabled impersonation, when combined with ransomware and third-party compromise, poses significant challenges to organizational trust and decision-making. The primary affected parties include organizational leaders and cybersecurity teams. Overall confidence in these findings is moderate.

2. Competing Hypotheses

- Hypothesis A: AI-enabled cybercrime primarily accelerates existing attack vectors, making it difficult for organizations to respond effectively within compressed timelines. This hypothesis is supported by the exercise’s findings that AI lowers barriers for cybercriminals and complicates attribution. The lack of new attack techniques in the exercise is consistent with it rather than contradictory, since the hypothesis concerns speed and scale, not novelty.

- Hypothesis B: AI-enabled cybercrime introduces fundamentally new attack paradigms that require entirely new defense mechanisms. This is less supported by the exercise, which focused on the acceleration of existing tactics rather than the introduction of new ones.

- Assessment: Hypothesis A is currently better supported, as the exercise demonstrated the acceleration of existing tactics rather than the emergence of new paradigms. Indicators that could shift this judgment include the development of novel AI-driven attack techniques.

3. Key Assumptions and Red Flags

- Assumptions: AI will continue to lower barriers for cybercriminals; organizations will struggle with compressed decision-making timelines; current governance structures are insufficient for AI-enabled threats.

- Information Gaps: Specific details on the effectiveness of multi-channel verification and escalation thresholds in real-world scenarios are lacking.

- Bias & Deception Risks: Potential bias in the exercise design towards existing known threats; possible underestimation of novel AI capabilities.

4. Implications and Strategic Risks

The integration of AI into cybercrime is likely to evolve, increasing the pressure on organizational decision-making and governance structures. This could lead to broader systemic vulnerabilities if not addressed.

- Political / Geopolitical: Increased cyber threats could strain international relations and lead to calls for new regulatory frameworks.

- Security / Counter-Terrorism: Enhanced AI capabilities could be leveraged by state and non-state actors, complicating threat detection and response.

- Cyber / Information Space: AI-driven attacks may increase misinformation and disinformation campaigns, impacting public trust.

- Economic / Social: Ransomware and data breaches could lead to significant economic losses and erode consumer trust in digital services.

5. Recommendations and Outlook

- Immediate Actions (0–30 days): Enhance monitoring of AI-driven threats, implement multi-channel verification processes, and conduct regular cybersecurity drills.

- Medium-Term Posture (1–12 months): Develop partnerships with AI research institutions, invest in AI threat detection capabilities, and update governance frameworks to address AI-specific risks.

- Scenario Outlook: Best: Organizations adapt quickly to AI threats; Worst: AI-driven attacks outpace defensive measures; Most-Likely: Incremental improvements in defense, with ongoing challenges in governance and decision-making.
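The multi-channel verification and escalation threshold recommended above can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `Request` class, the `decide` function, the channel names, and the default of two independent confirmations are hypothetical and do not come from the exercise. The idea is only that a high-risk request is approved once it has been confirmed over enough channels other than the one it arrived on, and is escalated to a human decision-maker otherwise.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class Request:
    """A high-risk request, e.g. a payment change (hypothetical model)."""
    requester: str
    action: str
    origin_channel: str  # channel the request arrived on
    confirmations: Set[str] = field(default_factory=set)

    def confirm(self, channel: str) -> None:
        # Record that the request was confirmed over the named channel.
        self.confirmations.add(channel)


def decide(request: Request, required_independent: int = 2) -> str:
    """Return 'approve' or 'escalate' for the request.

    Confirmations on the originating channel are ignored, because an
    impersonator who controls that channel could confirm their own request.
    The threshold of 2 is an assumed default, not a recommended value.
    """
    independent = request.confirmations - {request.origin_channel}
    if len(independent) >= required_independent:
        return "approve"
    return "escalate"


# Usage: an emailed wire-transfer request needs two non-email confirmations.
req = Request(requester="cfo", action="wire_transfer", origin_channel="email")
req.confirm("email")            # same channel as the request: does not count
first = decide(req)
req.confirm("phone_callback")   # one independent channel: still not enough
second = decide(req)
req.confirm("in_person")        # second independent channel
third = decide(req)
```

Discounting the originating channel reflects the exercise's concern with AI-enabled impersonation: a convincing voice or email on a compromised channel should not be able to vouch for itself. The appropriate threshold and channel list would depend on the organization.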

6. Key Individuals and Entities

- Fortinet

- Center for Long-Term Cybersecurity (CLTC) at UC Berkeley

- Global partners involved in the exercise

- Individuals: not clearly identifiable from open sources.

7. Thematic Tags

cybersecurity, cybercrime, artificial intelligence, ransomware, governance, decision-making, threat detection

Structured Analytic Techniques Applied

- Adversarial Threat Simulation: Model and simulate actions of cyber adversaries to anticipate vulnerabilities and improve resilience.

- Indicators Development: Detect and monitor behavioral or technical anomalies across systems for early threat detection.

- Bayesian Scenario Modeling: Forecast futures under uncertainty via probabilistic logic.
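To make the Bayesian scenario modeling technique concrete, the sketch below shows how a single new indicator could shift the balance between Hypothesis A and Hypothesis B from Section 2. All numbers are illustrative placeholders chosen for this example, not estimates from the exercise, and the function name `bayes_update` is hypothetical.

```python
def bayes_update(priors, likelihoods):
    """Return posterior probabilities via Bayes' rule.

    priors:      dict mapping hypothesis -> P(hypothesis)
    likelihoods: dict mapping hypothesis -> P(evidence | hypothesis)
    """
    unnormalized = {h: priors[h] * likelihoods[h] for h in priors}
    total = sum(unnormalized.values())
    return {h: value / total for h, value in unnormalized.items()}


# Assumed prior: Hypothesis A (acceleration of existing tactics) is
# currently better supported than Hypothesis B (new attack paradigms).
priors = {"A": 0.7, "B": 0.3}

# Assumed evidence: a novel AI-driven attack technique is observed.
# Such an observation is taken to be more likely under B than under A.
likelihoods = {"A": 0.2, "B": 0.8}

posterior = bayes_update(priors, likelihoods)
for hypothesis, probability in sorted(posterior.items()):
    print(f"Hypothesis {hypothesis}: {probability:.2f}")
```

With these placeholder inputs the posterior moves to roughly 0.37 for A and 0.63 for B, which mirrors the assessment's note that novel AI-driven attack techniques are the indicator that could shift the judgment.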
