NSB Warns of Potential Cybersecurity Risks in China-Made Generative AI Language Models – Globalsecurity.org

Published on: 2025-11-17

AI-powered OSINT brief from verified open sources. Automated NLP signal extraction with human verification.

Intelligence Report:

1. BLUF (Bottom Line Up Front)

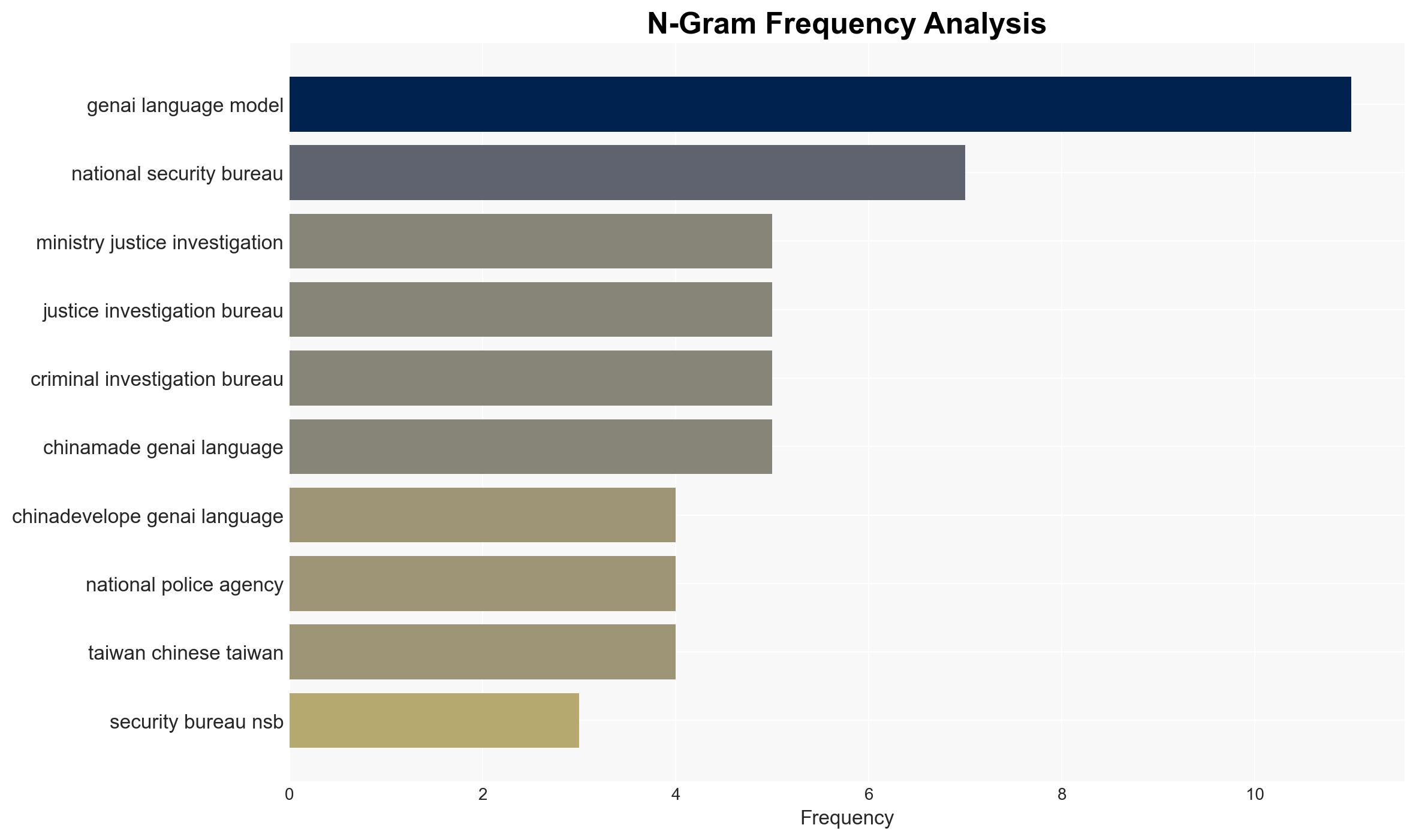

The strategic judgment, held with high confidence, is that China-made generative AI language models pose a significant cybersecurity and informational risk. The best-supported hypothesis is that these models are deliberately aligned with Chinese state interests and could serve as tools for cyber and informational influence operations. The recommended actions are to strengthen cybersecurity measures, inspect AI applications thoroughly, and foster international cooperation to address these risks.

2. Competing Hypotheses

Hypothesis 1: China-made generative AI language models are intentionally designed to align with Chinese state interests, serving as tools for cyber and informational influence operations.

Hypothesis 2: The cybersecurity and informational risks associated with China-made generative AI language models are primarily due to inadequate security protocols and oversight, rather than intentional state-driven objectives.

Hypothesis 1 is more likely, because the generated content aligns with official Chinese positions and shows indicators of political censorship. The systematic nature of the content bias and keyword filtering points to deliberate design rather than a failure of oversight.

3. Key Assumptions and Red Flags

Key assumptions are that China-made AI models are subject to state influence and that the identified risks are not coincidental. Red flags include AI-generated content that aligns with Chinese political narratives and the models' potential to generate malicious code. Possible deception indicators include efforts to obscure the models' true capabilities or the intentions behind them.

4. Implications and Strategic Risks

The potential for these AI models to disseminate disinformation and generate malicious code poses significant cyber and informational threats. Politically, this could heighten cross-strait tensions and shape international perceptions of Taiwan. Economically, compromised data security could harm businesses and trade relations. Cyberattacks that use AI-generated scripts could also escalate into broader incidents affecting multiple countries.

5. Recommendations and Outlook

- Enhance cybersecurity protocols and conduct regular inspections of AI applications, focusing on those developed in China.

- Foster international cooperation to establish standards and share intelligence on AI-related risks.

- Engage in public awareness campaigns to educate users on potential biases and risks associated with AI-generated content.

- Best-case scenario: International collaboration leads to improved AI security standards, mitigating risks.

- Worst-case scenario: Unchecked AI risks lead to significant cyber incidents and geopolitical tensions.

- Most-likely scenario: Incremental improvements in AI security, with ongoing challenges in managing state-driven influence operations.

6. Key Individuals and Entities

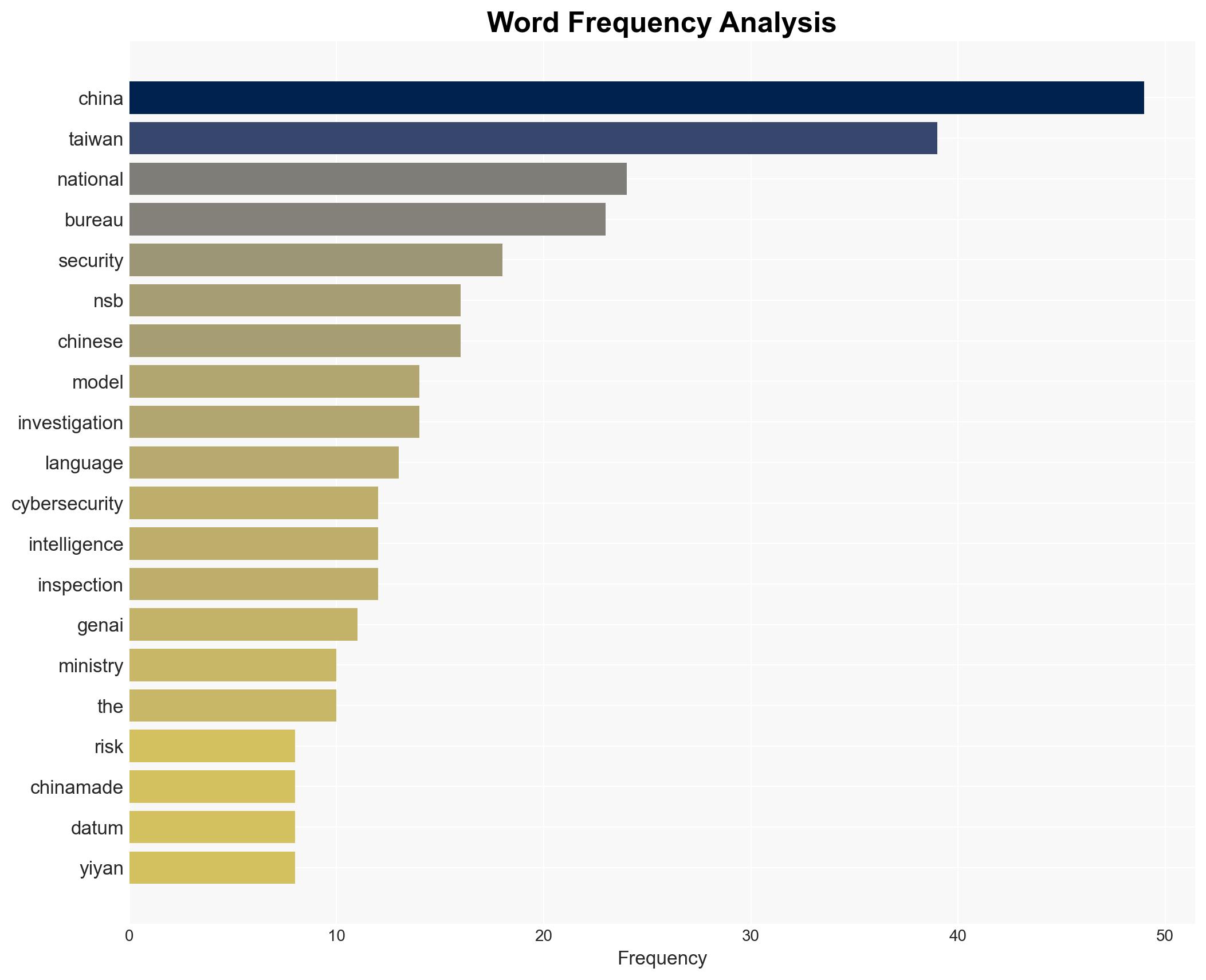

No specific individuals are mentioned. Key entities include Taiwan's National Security Bureau (NSB), Taiwan's Ministry of Justice Investigation Bureau (MJIB), and the Chinese government.

7. Thematic Tags

Cybersecurity, AI, China, Information Warfare, National Security

Structured Analytic Techniques Applied

- Adversarial Threat Simulation: Model and simulate actions of cyber adversaries to anticipate vulnerabilities and improve resilience.

- Indicators Development: Detect and monitor behavioral or technical anomalies across systems for early threat detection.

- Bayesian Scenario Modeling: Quantify uncertainty and predict cyberattack pathways using probabilistic inference.

- Cognitive Bias Stress Test: Structured challenge to expose and correct biases.

- Network Influence Mapping: Map influence relationships to assess actor impact.

- Narrative Pattern Analysis: Deconstruct and track propaganda or influence narratives.
