Perplexity is giving you wrong answers on purpose – MakeUseOf

Published on: 2025-11-19

AI-powered OSINT brief from verified open sources. Automated NLP signal extraction with human verification.

Intelligence Report:

1. BLUF (Bottom Line Up Front)

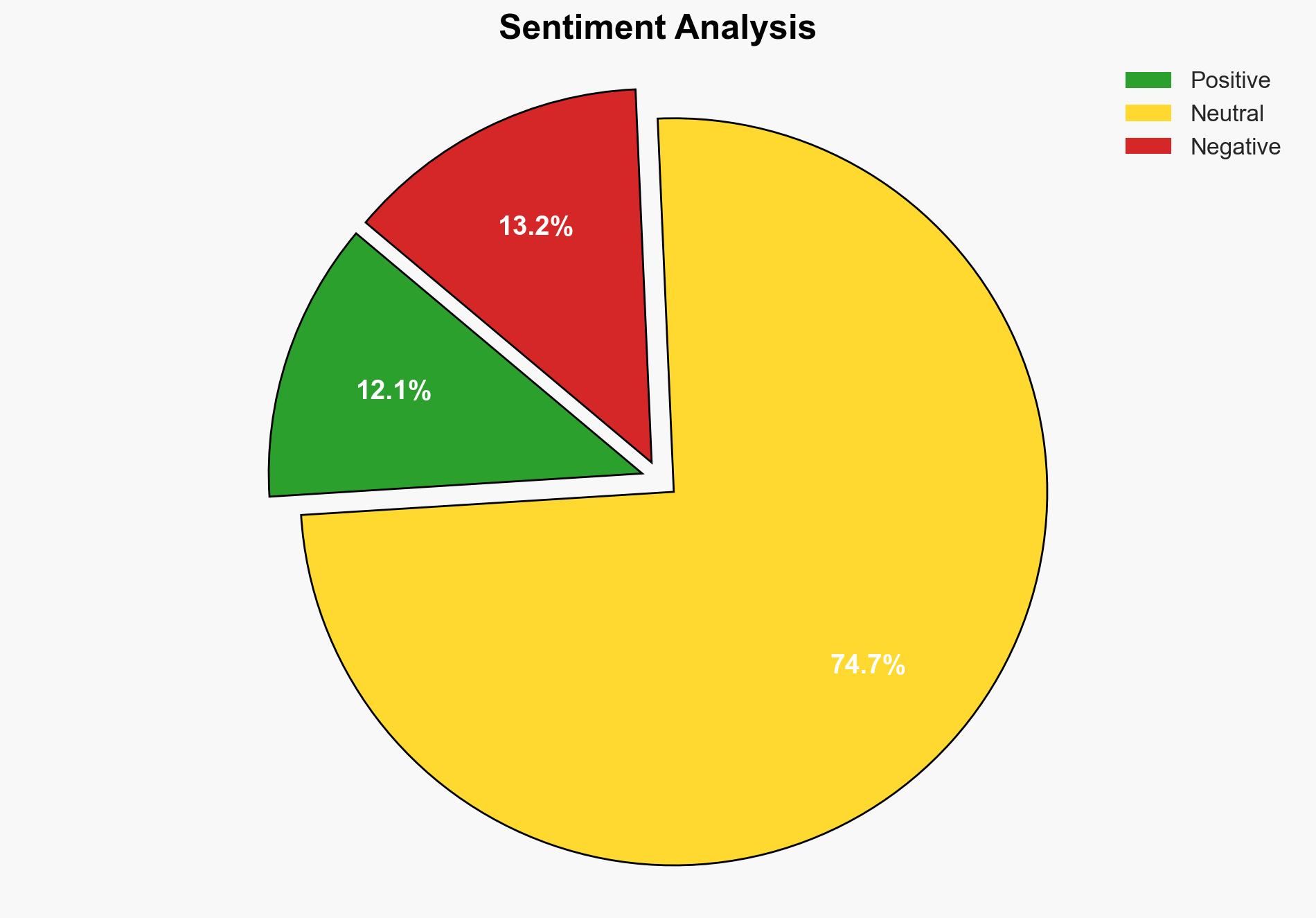

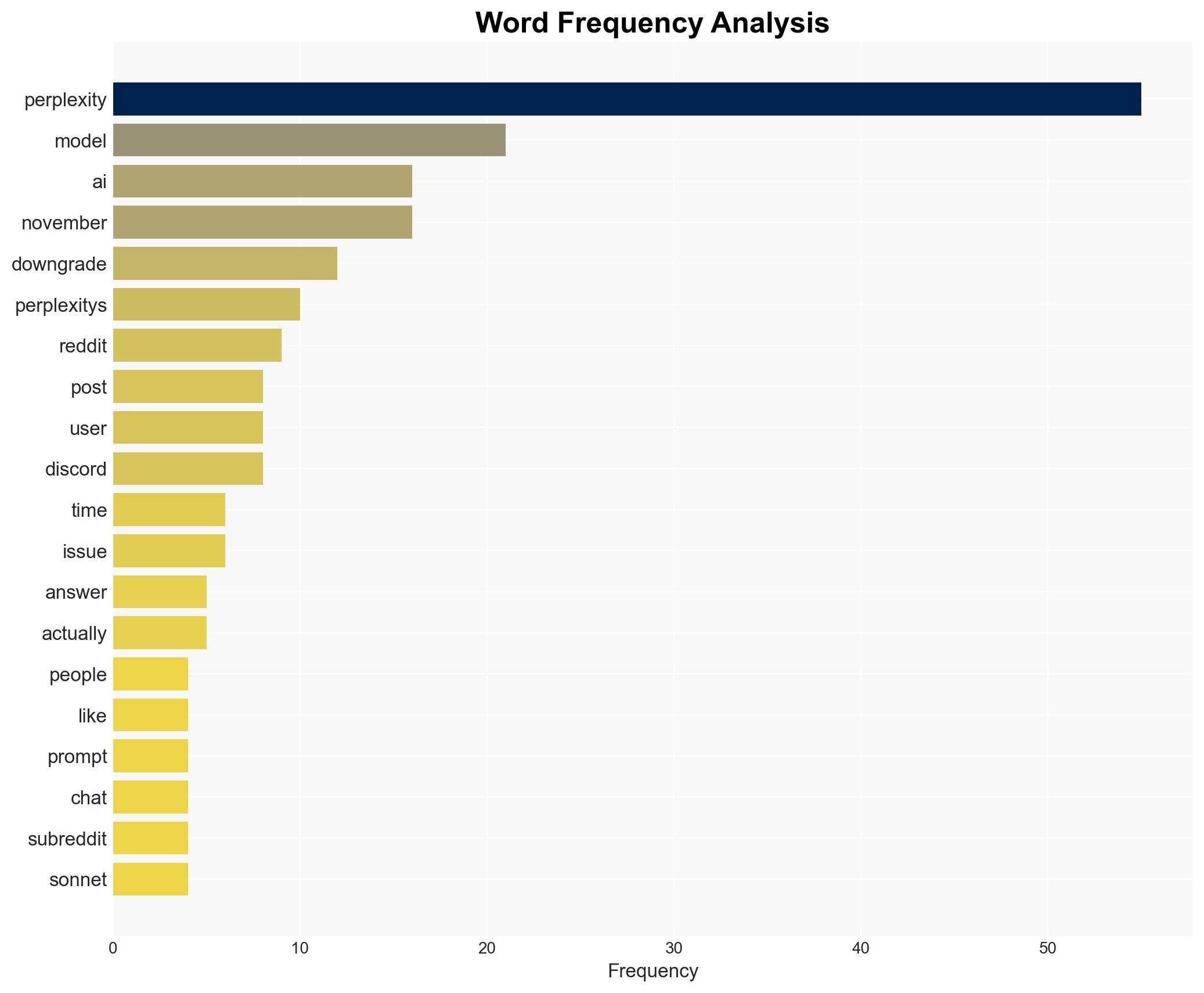

We assess with medium confidence that the reported downgrades of Perplexity's AI models stem primarily from operational challenges rather than deliberate deception. The best-supported hypothesis is that the downgrades result from demand management and technical bugs. Perplexity should improve transparency and communication with users to rebuild trust and counter negative perceptions.

2. Competing Hypotheses

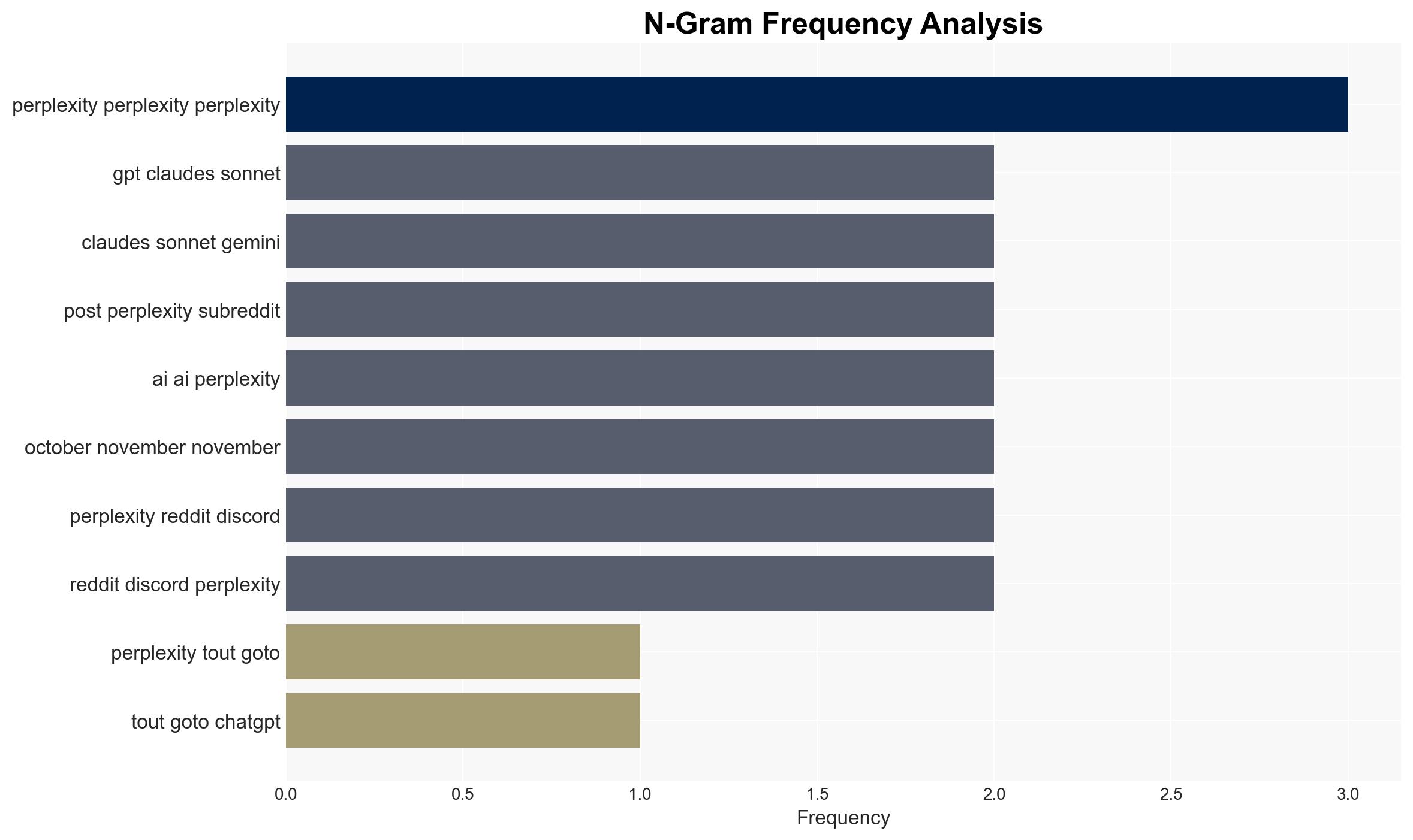

Hypothesis 1: Perplexity’s AI model downgrades are due to operational challenges, including demand management and technical bugs.

Hypothesis 2: Perplexity is intentionally downgrading AI models to manage costs or for other undisclosed strategic reasons.

The evidence better supports Hypothesis 1, including statements from Perplexity's CEO and the company's acknowledgment of technical issues. Hypothesis 2 lacks direct evidence but is fueled by user dissatisfaction and a perceived lack of transparency.

3. Key Assumptions and Red Flags

Key assumptions are that Perplexity's communications are truthful and that technical bugs plausibly explain the downgrades. Red flags include the lack of advance notice to users about potential downgrades and the negative user feedback, either of which could point to deeper problems or mismanagement. Attributing the downgrades to operational challenges without weighing possible strategic motives carries a risk of confirmation bias.

4. Implications and Strategic Risks

The primary risk is reputational damage to Perplexity, leading to user attrition and possible regulatory scrutiny if users escalate complaints to authorities such as the FTC. Perplexity also risks losing market share to competitors if users migrate to alternative AI services. If transparency does not improve, the situation could grow into a broader trust problem across the AI services industry.

5. Recommendations and Outlook

- Implement a communication strategy that notifies users in advance of potential model downgrades and technical issues.

- Develop a transparent reporting mechanism that lets users track model changes in real time.

- Conduct a thorough internal review to confirm that technical issues are resolved and to prevent recurrence.

- Best-case scenario: Perplexity resolves technical issues, improves transparency, and regains user trust.

- Worst-case scenario: Continued user dissatisfaction leads to significant subscriber loss and potential regulatory action.

- Most-likely scenario: Perplexity addresses immediate technical issues but faces ongoing challenges in fully restoring user confidence.

6. Key Individuals and Entities

Aravind Srinivas (CEO of Perplexity)

7. Thematic Tags

Cybersecurity, AI Trust, User Experience, Operational Management

Structured Analytic Techniques Applied

- Adversarial Threat Simulation: Model and simulate actions of cyber adversaries to anticipate vulnerabilities and improve resilience.

- Indicators Development: Detect and monitor behavioral or technical anomalies across systems for early threat detection.

- Bayesian Scenario Modeling: Forecast futures under uncertainty via probabilistic logic.
