Saturday Night Live Perfectly Lampoons AI-Generated Photos – PetaPixel

Published on: 2025-11-17

AI-powered OSINT brief from verified open sources. Automated NLP signal extraction with human verification.

Intelligence Report:

1. BLUF (Bottom Line Up Front)

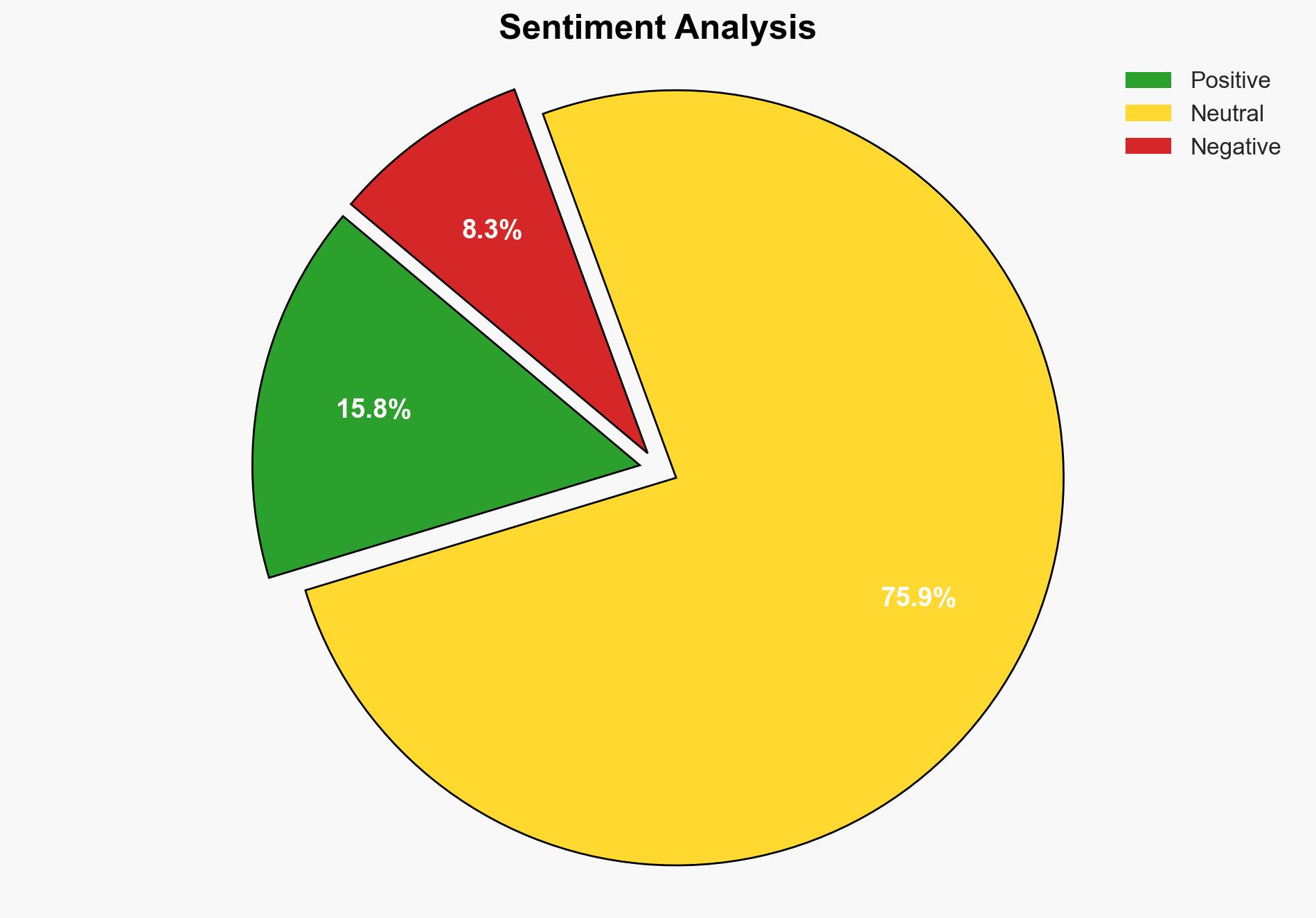

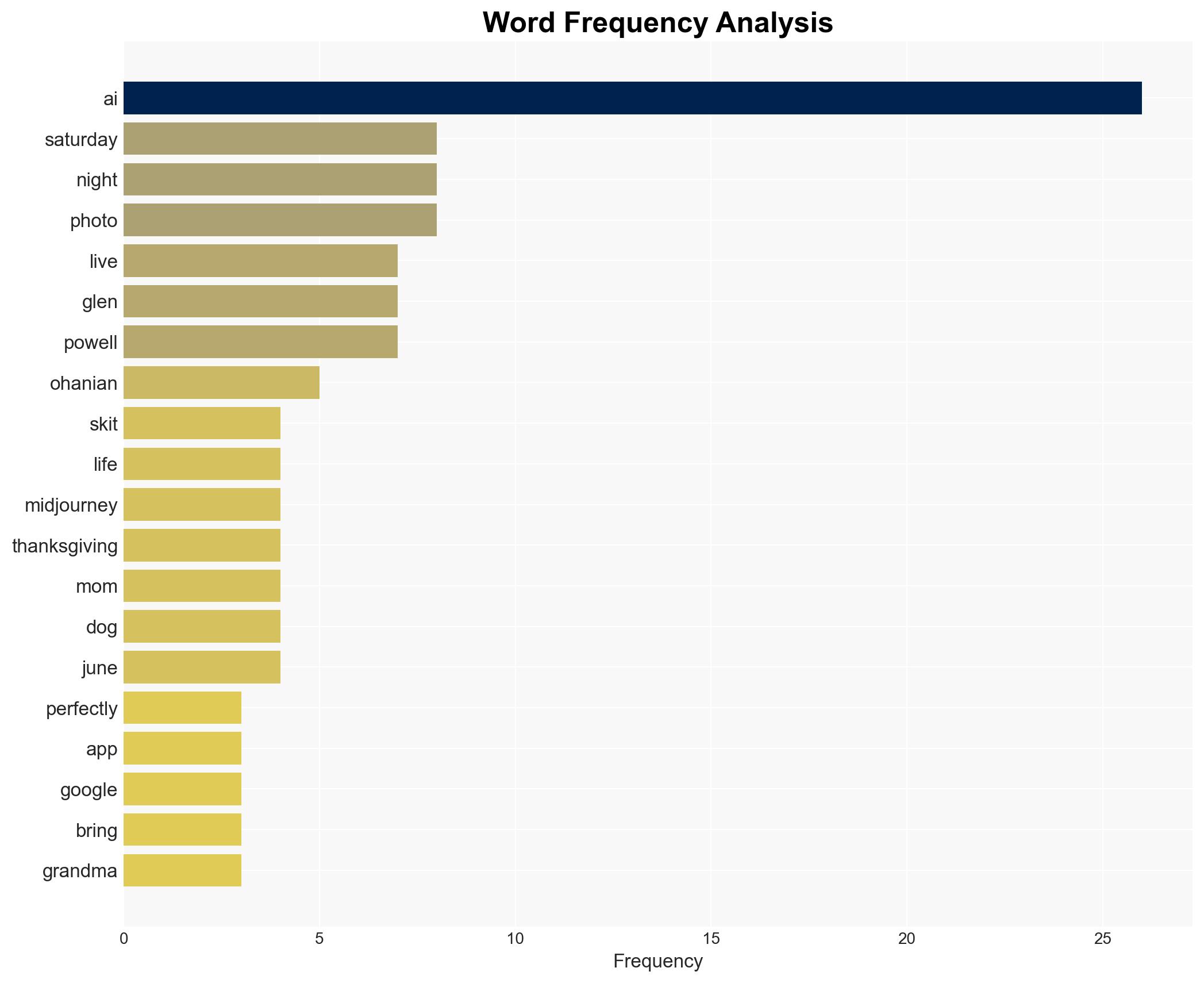

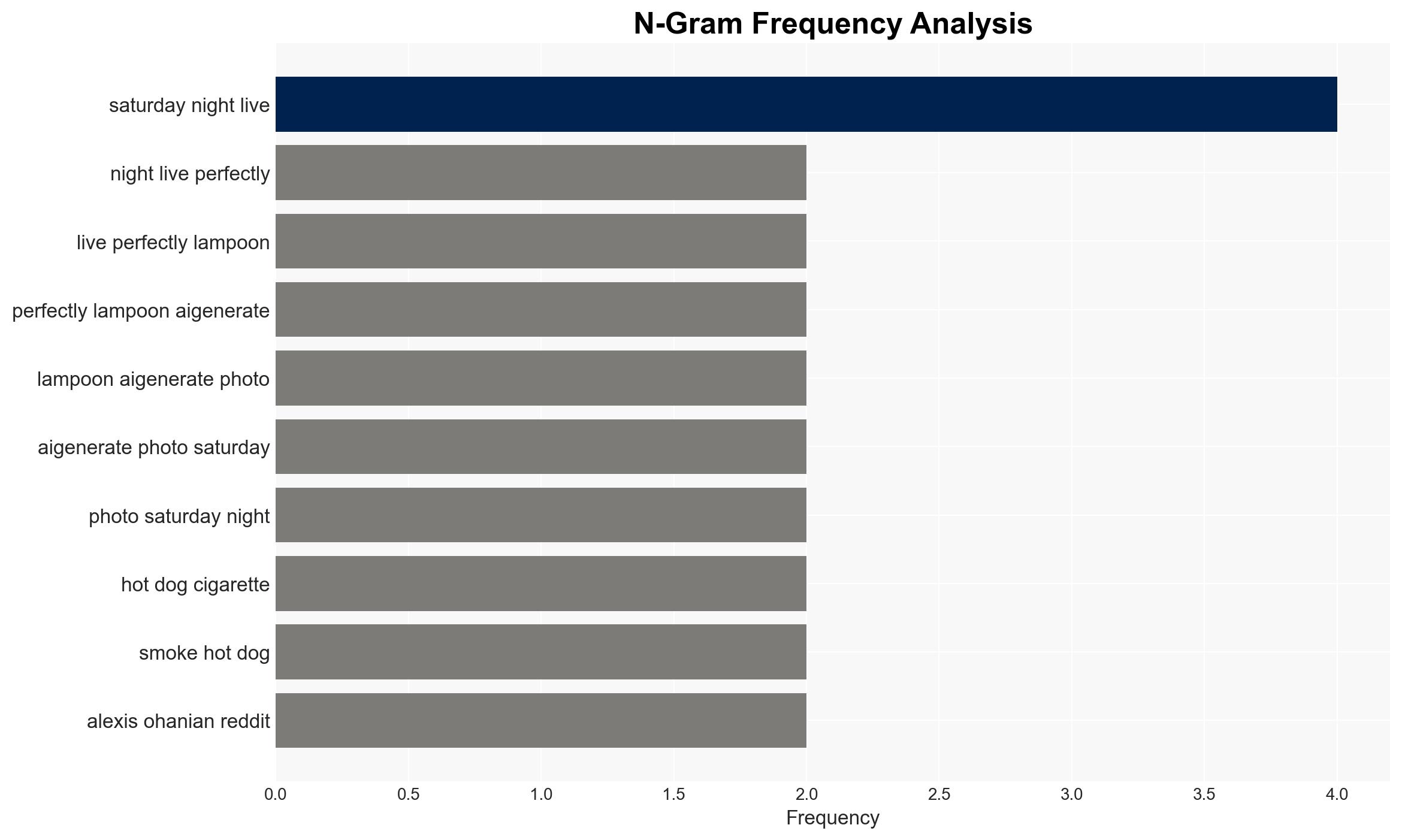

Strategic judgment: the SNL skit reflects growing public skepticism toward AI-generated content, and a readiness to mock it, particularly given its current imperfect state. The most supported hypothesis is that such portrayals will increase pressure on AI developers to improve accuracy and reliability while also shaping public perception and policy discussions. Confidence level: Moderate.

2. Competing Hypotheses

Hypothesis 1: The SNL skit will lead to increased public scrutiny and regulatory pressure on AI companies to address flaws in AI-generated content.

Hypothesis 2: The skit will be perceived merely as entertainment, having minimal impact on public perception or regulatory actions regarding AI technology.

Hypothesis 1 is judged more likely because media coverage increasingly shapes public discourse and policy on emerging technologies, and because the skit's portrayal of AI errors aligns with existing concerns about AI reliability.

3. Key Assumptions and Red Flags

Assumptions: The public is influenced by media portrayals of technology; AI companies are responsive to public and regulatory pressure.

Red Flags: Potential bias in media portrayal of AI; overestimation of media influence on policy-making.

Deception Indicators: None identified in the skit itself, but AI companies may downplay issues to mitigate negative perceptions.

4. Implications and Strategic Risks

The skit could deepen public distrust of AI technologies, potentially slowing adoption and innovation. If regulatory bodies respond to public pressure, AI companies might face stricter compliance requirements, raising their operational costs and lengthening development timelines. Conversely, companies that fail to address the flaws the skit lampoons risk reputational damage and loss of consumer trust.

5. Recommendations and Outlook

- AI companies should proactively address known flaws in their technologies and communicate improvements transparently to the public.

- AI companies should engage with media to provide balanced perspectives on AI capabilities and limitations.

- Best Case: AI companies use the opportunity to enhance product reliability, boosting consumer trust and market growth.

- Worst Case: Persistent negative portrayals lead to stringent regulations, stifling innovation and market expansion.

- Most Likely: A moderate increase in scrutiny leads to incremental improvements in AI technology and communication strategies.

6. Key Individuals and Entities

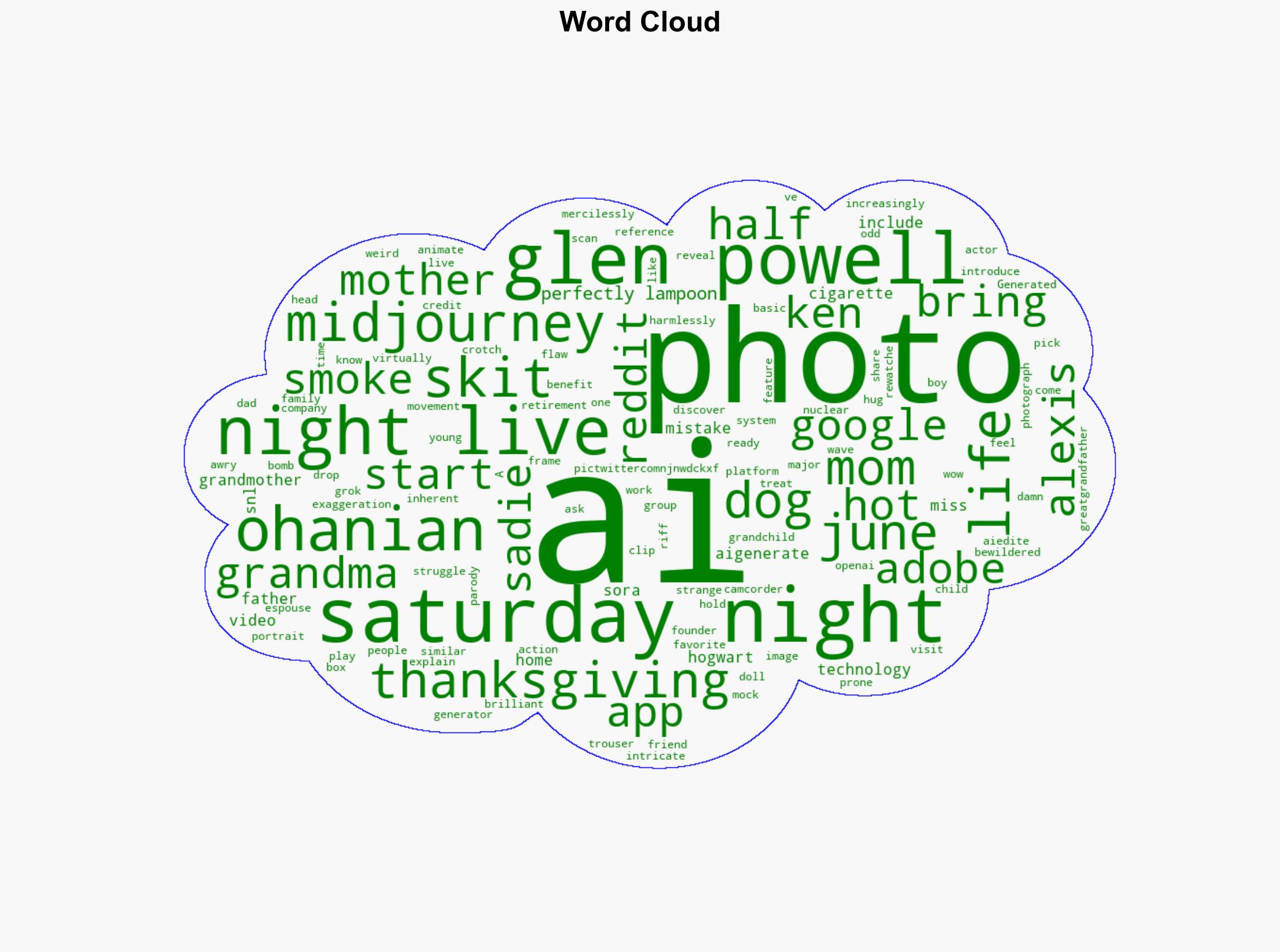

Glen Powell (Actor), Alexis Ohanian (Reddit Founder), Midjourney, OpenAI, Google, Adobe.

7. Thematic Tags

Cybersecurity, AI Reliability, Media Influence, Public Perception, Regulatory Impact

Structured Analytic Techniques Applied

- Adversarial Threat Simulation: Model and simulate actions of cyber adversaries to anticipate vulnerabilities and improve resilience.

- Indicators Development: Detect and monitor behavioral or technical anomalies across systems for early threat detection.

- Bayesian Scenario Modeling: Quantify uncertainty and predict cyberattack pathways using probabilistic inference.
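
As a rough illustration of the probabilistic inference behind Bayesian scenario modeling, the sketch below applies Bayes' rule to the two competing hypotheses from Section 2. It is a minimal example only: the function name `bayes_update` and every number in it are hypothetical placeholders chosen for illustration, not estimates produced by or taken from this brief.

```python
def bayes_update(priors, likelihoods):
    """Return posterior probabilities for each hypothesis via Bayes' rule.

    priors:      dict mapping hypothesis label -> prior probability
    likelihoods: dict mapping hypothesis label -> P(evidence | hypothesis)
    """
    # Multiply each prior by the likelihood of the observed evidence.
    unnormalized = {h: priors[h] * likelihoods[h] for h in priors}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("Evidence has zero probability under every hypothesis")
    # Normalize so the posteriors sum to 1.
    return {h: p / total for h, p in unnormalized.items()}


# Hypothetical inputs: equal priors, and an assumed likelihood of observing
# follow-on public debate about AI flaws under each hypothesis.
priors = {"H1": 0.5, "H2": 0.5}        # placeholder values
likelihoods = {"H1": 0.6, "H2": 0.4}   # placeholder values

posterior = bayes_update(priors, likelihoods)
for hypothesis, probability in posterior.items():
    print(f"{hypothesis}: {probability:.2f}")
```

With real inputs, an analyst would repeat the update as each new indicator is observed, feeding the previous posterior back in as the next prior.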
