Trump Supporters Are Using OpenAI’s Sora to Generate AI Videos of Soldiers Assaulting Protesters – Futurism

Published on: 2025-10-12

Intelligence Report: Trump Supporters Are Using OpenAI’s Sora to Generate AI Videos of Soldiers Assaulting Protesters – Futurism

1. BLUF (Bottom Line Up Front)

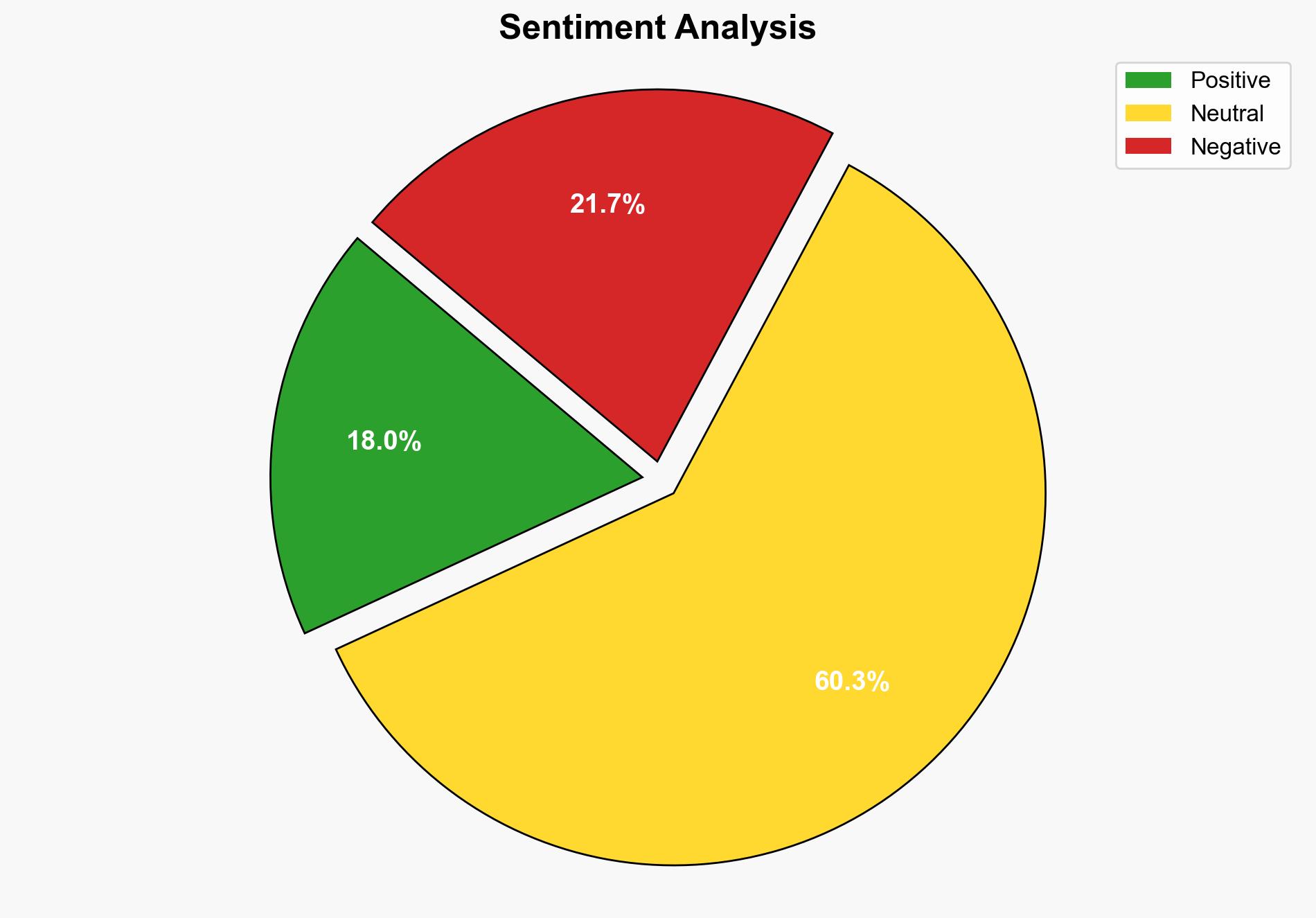

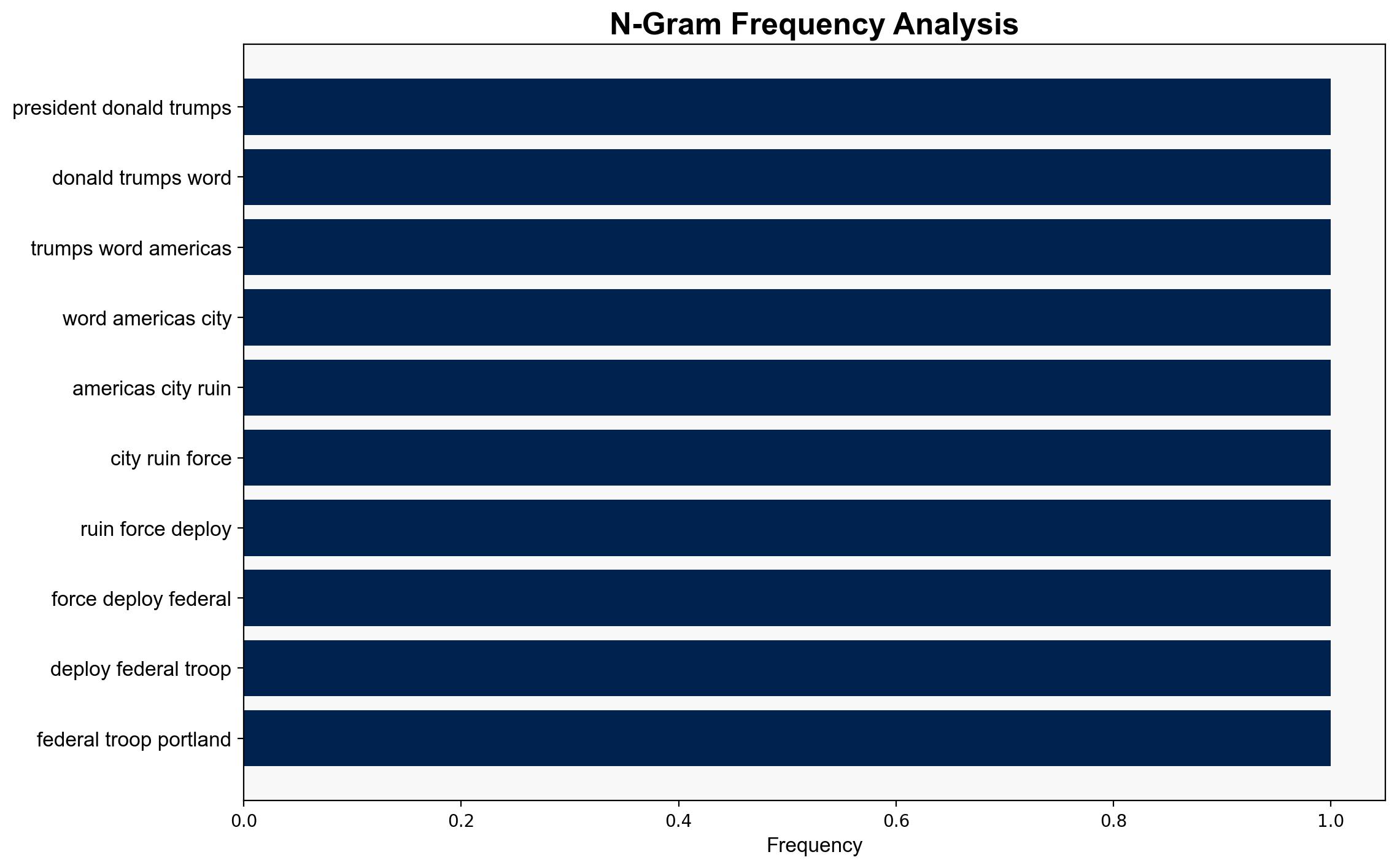

The use of AI-generated videos by Trump supporters to depict violent confrontations between soldiers and protesters represents a significant information manipulation threat. The best-supported hypothesis is that these videos form part of a coordinated effort to influence public perception and justify increased militarization of urban areas. Confidence level: Moderate. Recommended action: Enhance monitoring of AI-generated content and develop counter-narratives to mitigate misinformation.

2. Competing Hypotheses

1. **Hypothesis A**: The AI-generated videos are part of a deliberate campaign by Trump supporters to create a false narrative of widespread violence, thereby justifying the deployment of federal troops and gaining political support.

2. **Hypothesis B**: The videos result from isolated actions by individual Trump supporters acting without a coordinated strategy, reflecting personal biases rather than an organized campaign.

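Using the Analysis of Competing Hypotheses (ACH) method, Hypothesis A is judged better supported, given the scale at which the videos are being disseminated and their alignment with political narratives that favor increased militarization. This judgment rests on circumstantial indicators rather than direct evidence of coordination.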

3. Key Assumptions and Red Flags

- **Assumptions**: Hypothesis A assumes a degree of coordination and intent that available evidence may not fully substantiate. Hypothesis B assumes that individual actions are not influenced by broader strategic goals.

- **Red Flags**: The lack of direct evidence linking the videos to a coordinated campaign raises questions about their true origin and intent. Interpreting the videos as part of a larger conspiracy also carries a risk of cognitive bias.

4. Implications and Strategic Risks

The proliferation of AI-generated misinformation could exacerbate social tensions and deepen polarization. If viewers perceive these videos as credible, they may shape public opinion and policy, potentially leading to an expanded military presence in urban areas. The possibility that other groups or nations adopt similar tactics poses a broader cybersecurity threat.

5. Recommendations and Outlook

- Enhance AI content monitoring systems to detect and flag potentially manipulative videos.

- Develop strategic communication plans to counter misinformation and educate the public on AI-generated content.

- Scenario Projections:

  - Best Case: Effective countermeasures reduce the impact of misinformation, maintaining public trust.

  - Worst Case: Misinformation leads to increased civil unrest and policy shifts toward militarization.

  - Most Likely: AI-generated content continues to circulate, with a moderate impact on public perception.

6. Key Individuals and Entities

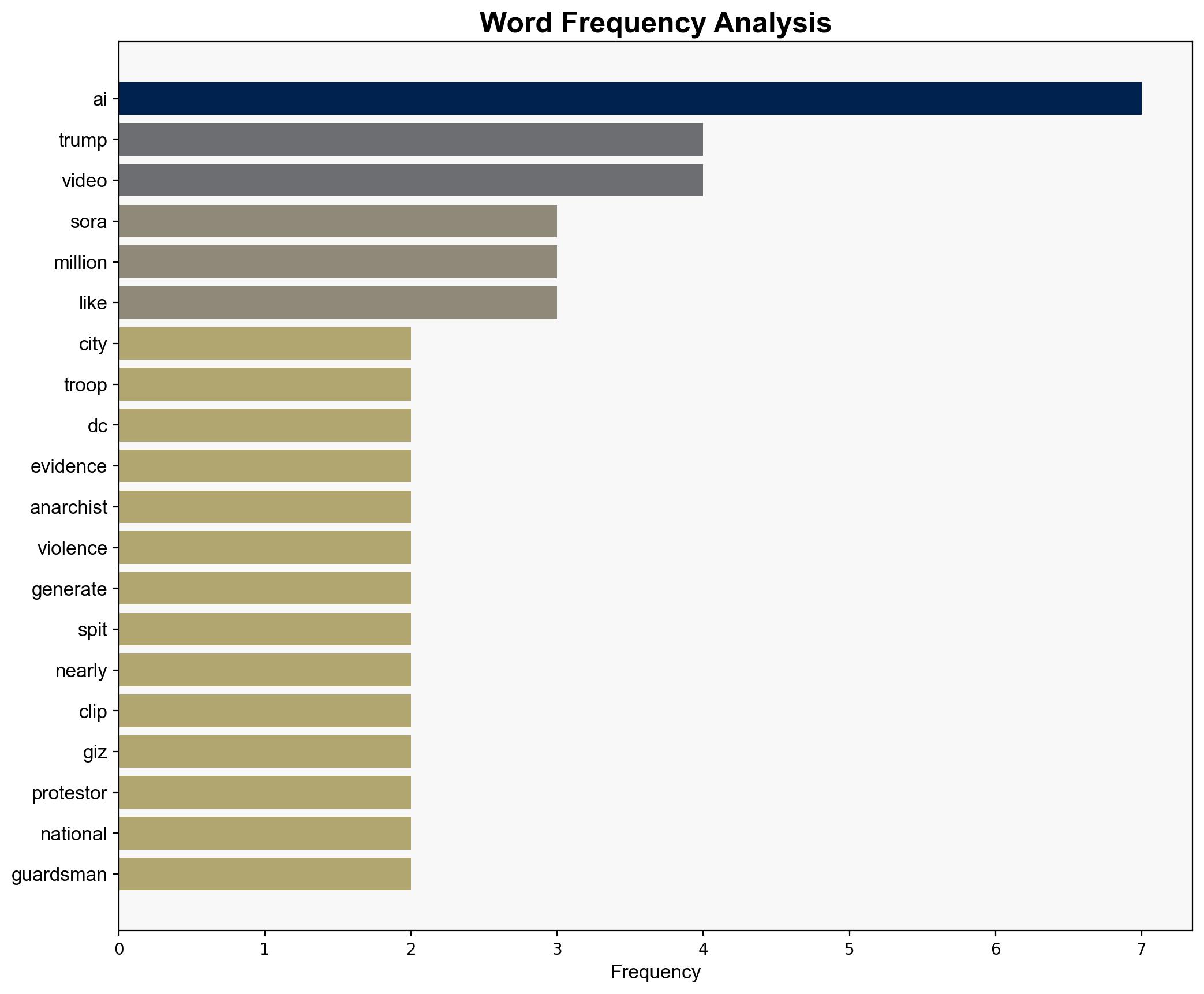

No specific individuals are named in the source text. The focus is on Trump supporters and the use of OpenAI’s Sora AI video generator.

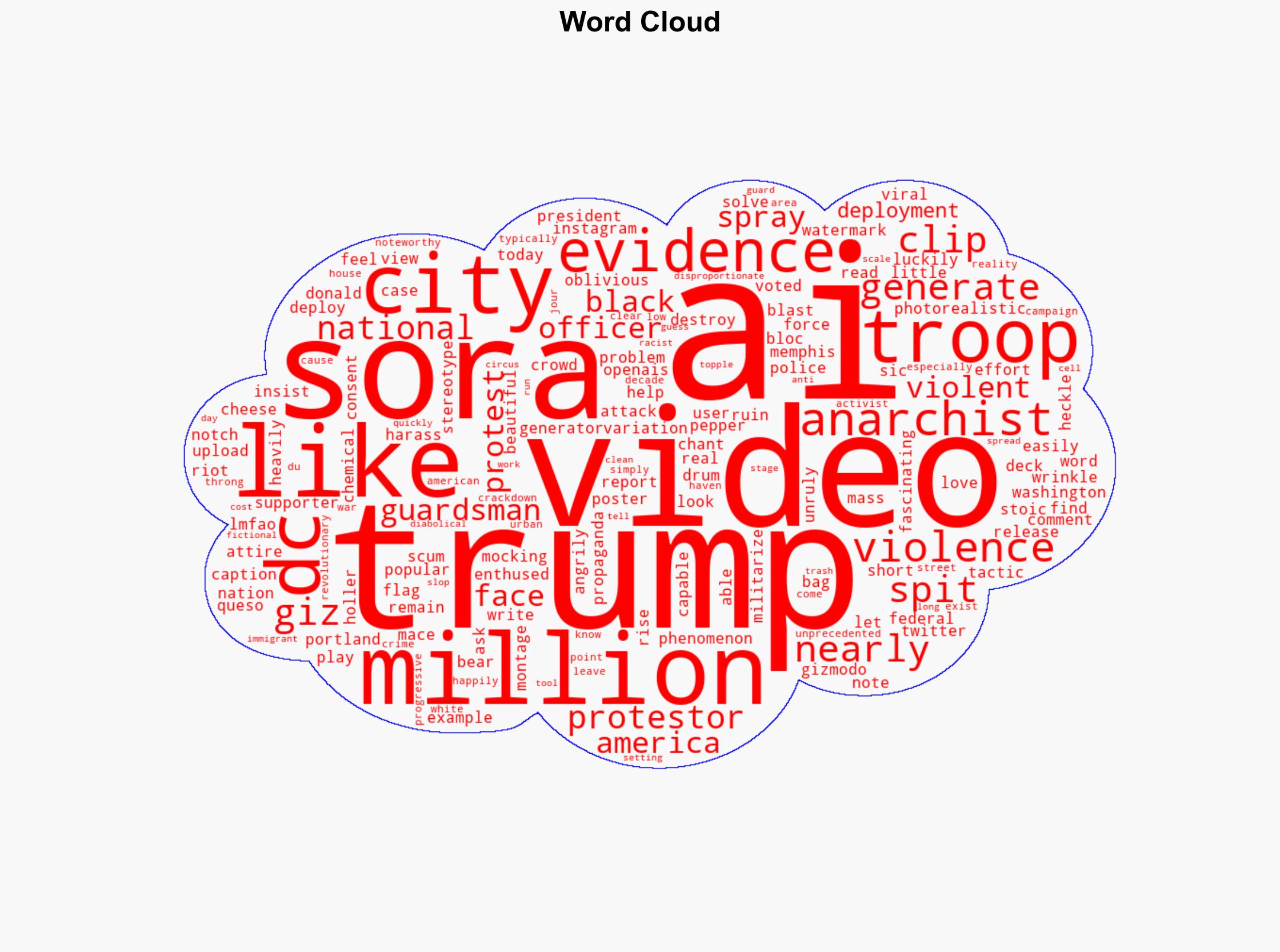

7. Thematic Tags

national security threats, cybersecurity, counter-terrorism, regional focus