Trump's Draft Executive Order Targets States Enacting AI Transparency Laws – PetaPixel

Published on: 2025-11-20

AI-powered OSINT brief from verified open sources. Automated NLP signal extraction with human verification.

Intelligence Report:

1. BLUF (Bottom Line Up Front)

The best-supported hypothesis is that the draft executive order aims to establish federal preemption of state AI transparency laws in order to create a unified national framework, potentially favoring tech companies' interests. This approach could centralize AI governance, but it may also undermine state-level innovation and public trust in AI oversight. Confidence Level: Moderate.

2. Competing Hypotheses

Hypothesis 1: The draft executive order is primarily intended to prevent a fragmented regulatory environment that could hinder AI innovation and competitiveness by establishing a uniform federal standard.

Hypothesis 2: The draft executive order is a strategic move to align with technology companies and their interests, potentially at the expense of state autonomy and public trust in AI governance.

The first hypothesis is more likely, given the draft's emphasis on preventing a "patchwork" of state laws and the strategic importance of AI to national competitiveness. However, the second hypothesis cannot be dismissed, given the order's apparent alignment with tech companies' interests and its potential to undermine state-level regulatory efforts.

3. Key Assumptions and Red Flags

Assumptions: It is assumed that a federal standard would indeed streamline AI development and not stifle innovation. It is also assumed that states’ AI transparency laws are significantly divergent and burdensome.

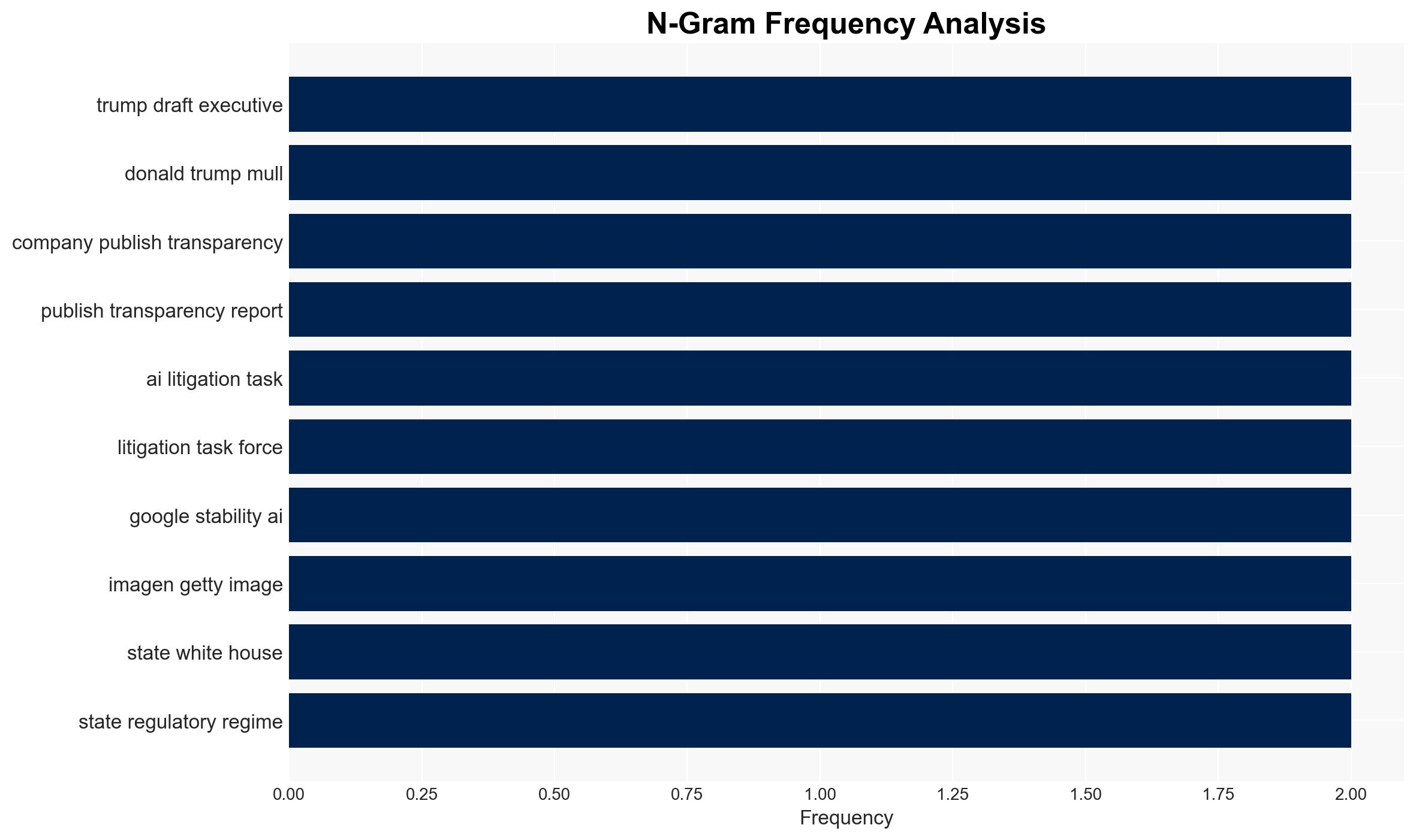

Red Flags: The involvement of Attorney General Pam Bondi and the creation of an AI Litigation Task Force point to potentially aggressive legal challenges against states, which may indicate a prioritization of federal over state authority. The lack of official confirmation from the White House raises questions about the draft's finality and intent.

4. Implications and Strategic Risks

Politically, centralizing AI regulation could heighten tensions between federal and state governments, potentially resulting in legal battles that delay AI policy implementation. Economically, a federal standard may benefit large tech companies but could disadvantage smaller firms unable to influence federal policy. Informationally, public trust in AI oversight may erode if the federal framework is perceived as favoring corporate interests over transparency and accountability.

5. Recommendations and Outlook

- Engage with state governments to understand their concerns and incorporate their insights into a balanced federal AI framework.

- Facilitate transparent discussions with tech companies and civil liberties advocates to address public trust issues.

- Best-case scenario: A cohesive federal AI policy that enhances innovation and competitiveness while maintaining public trust and state collaboration.

- Worst-case scenario: Prolonged legal disputes and loss of public confidence in AI governance, hindering technological advancement.

- Most-likely scenario: A contentious but eventual establishment of a federal standard with ongoing state-federal negotiations.

6. Key Individuals and Entities

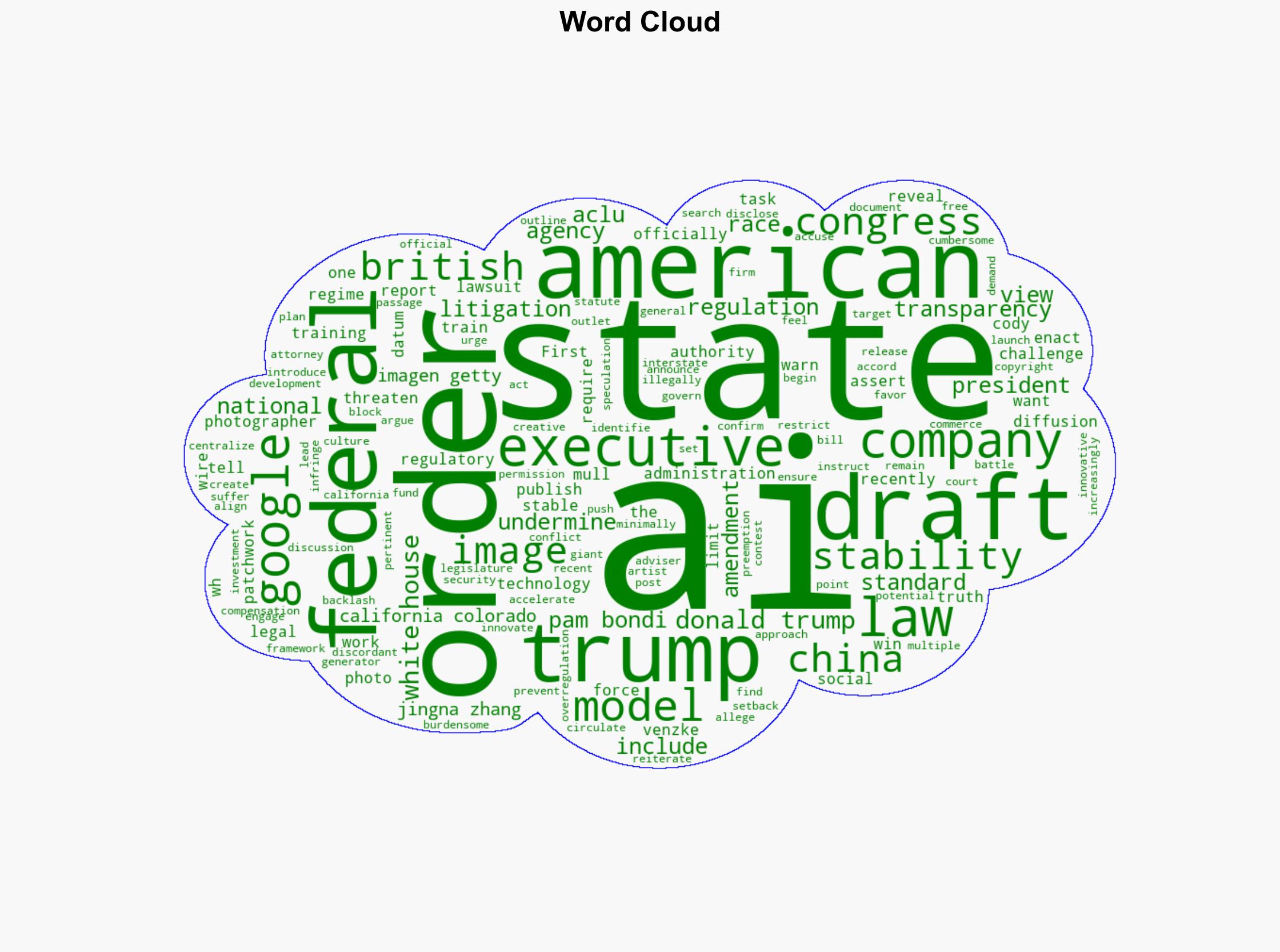

Donald Trump, Pam Bondi, Cody Venzke, Google, Stability AI, Getty Images

7. Thematic Tags

National Security Threats, AI Regulation, Federal vs. State Authority, Technology Policy

Structured Analytic Techniques Applied

- Cognitive Bias Stress Test: Expose and correct potential biases in assessments through red-teaming and structured challenge.

- Bayesian Scenario Modeling: Use probabilistic forecasting for conflict trajectories or escalation likelihood.

- Network Influence Mapping: Map relationships between state and non-state actors for impact estimation.

- Narrative Pattern Analysis: Deconstruct and track propaganda or influence narratives.
