“We haven’t figured that out yet”: Sam Altman explains why using ChatGPT as your therapist is still a privacy nightmare – TechRadar

Published on: 2025-07-28

Intelligence Report: We haven’t figured that out yet – Sam Altman explains why using ChatGPT as your therapist is still a privacy nightmare – TechRadar

1. BLUF (Bottom Line Up Front)

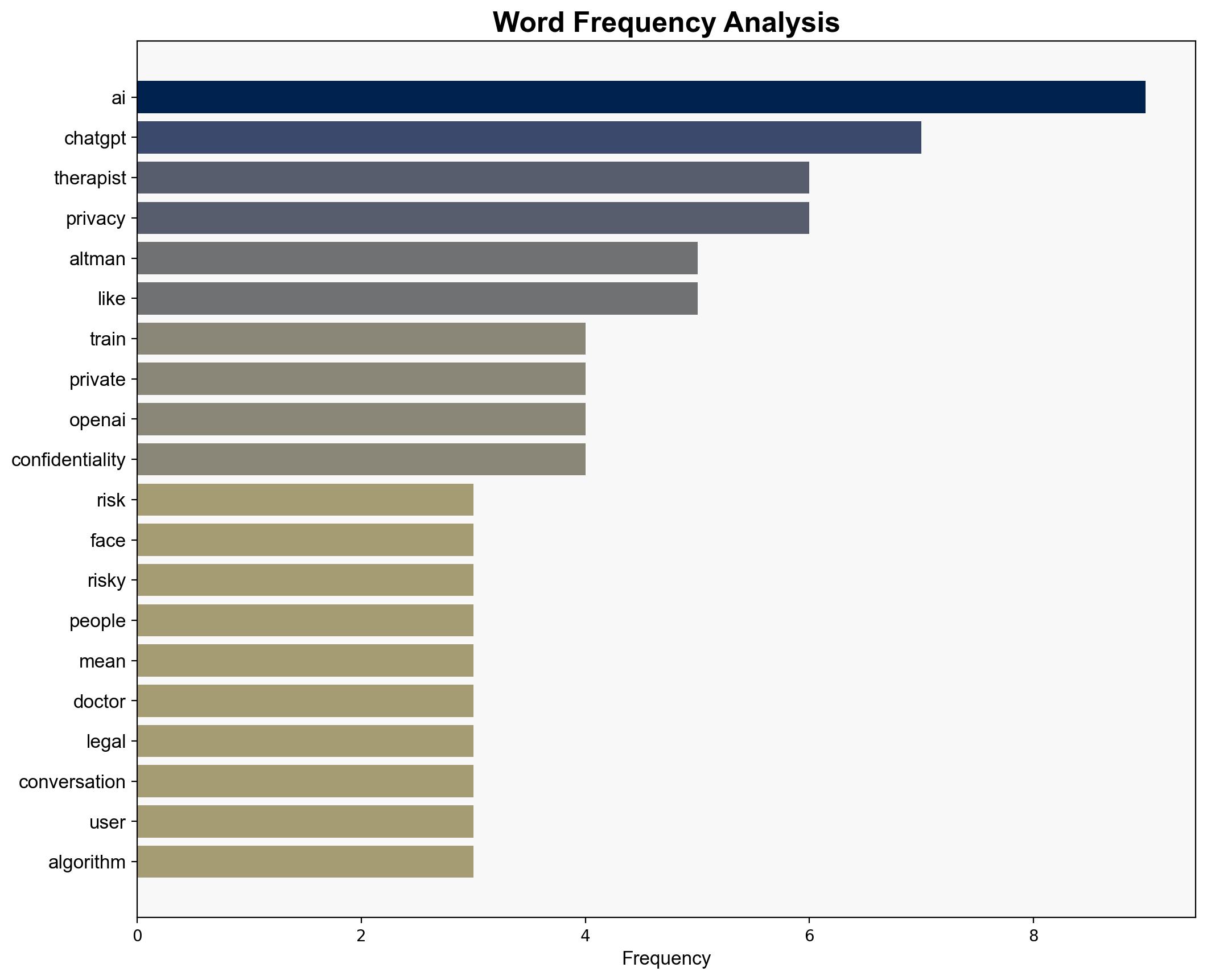

The best-supported hypothesis is that using AI tools like ChatGPT for therapy poses significant privacy risks, because conversations lack legal confidentiality and sensitive data may be exposed. Confidence Level: High. Recommended actions include developing stringent privacy protocols and exploring secure AI alternatives for sensitive applications.

2. Competing Hypotheses

Hypothesis 1: AI tools like ChatGPT are inherently risky for therapeutic use due to privacy vulnerabilities, as they lack legal confidentiality protections and may expose sensitive data.

Hypothesis 2: The privacy risks associated with AI therapy are overstated, and with proper safeguards and encryption, AI can be a viable support tool for mental health.

Assessed with structured analytic techniques, Hypothesis 1 is better supported by the evidence presented, which highlights the lack of legal protections and the potential for data exposure.

3. Key Assumptions and Red Flags

Assumptions:

– AI systems like ChatGPT cannot guarantee the kind of confidentiality that human therapists provide.

– Users may not fully understand the privacy implications of using AI for therapy.

Red Flags:

– Lack of transparency in AI data handling processes.

– Potential for AI systems to inadvertently leak sensitive information.

– Over-reliance on AI without understanding its limitations.

4. Implications and Strategic Risks

The use of AI in therapy without robust privacy measures could lead to significant personal data breaches, undermining trust in AI technologies. This could escalate to legal challenges and reputational damage for AI developers. Economically, it may deter investment in AI health applications. Psychologically, users may suffer if sensitive information is exposed.

5. Recommendations and Outlook

- Develop and implement comprehensive privacy protocols for AI applications in sensitive areas.

- Explore partnerships with privacy-focused tech companies to enhance data security.

- Scenario Projections:

  - Best Case: AI therapy tools are equipped with robust privacy measures, gaining user trust and expanding their use in mental health support.

  - Worst Case: A major data breach occurs, leading to widespread distrust and legal repercussions for AI developers.

  - Most Likely: AI privacy measures improve gradually, with cautious adoption in sensitive fields.

6. Key Individuals and Entities

– Sam Altman

– OpenAI

– TechRadar

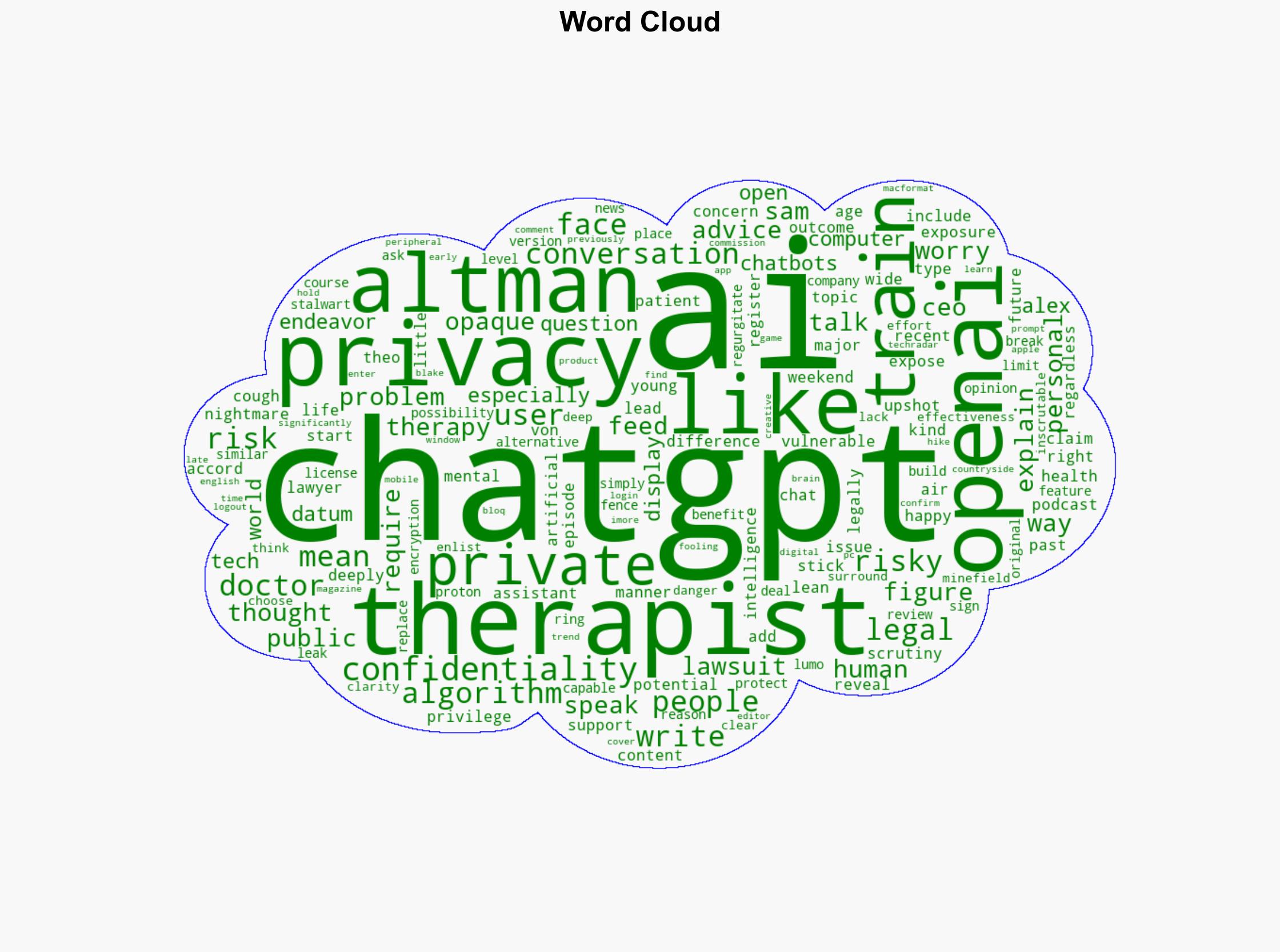

7. Thematic Tags

cybersecurity, privacy risks, AI ethics, mental health technology