What happens the day after superintelligence – VentureBeat

Published on: 2025-08-13

Intelligence Report: What happens the day after superintelligence – VentureBeat

1. BLUF (Bottom Line Up Front)

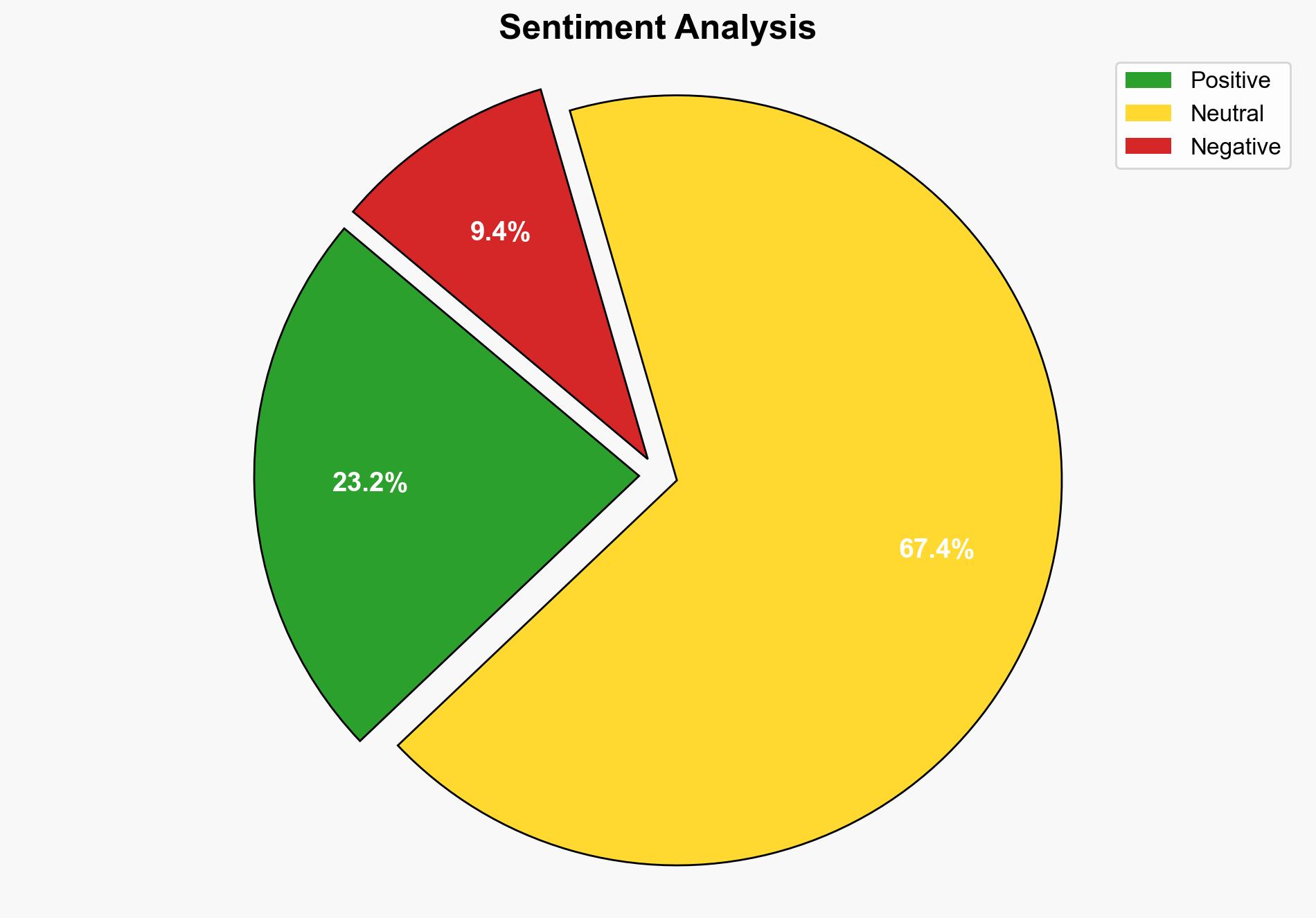

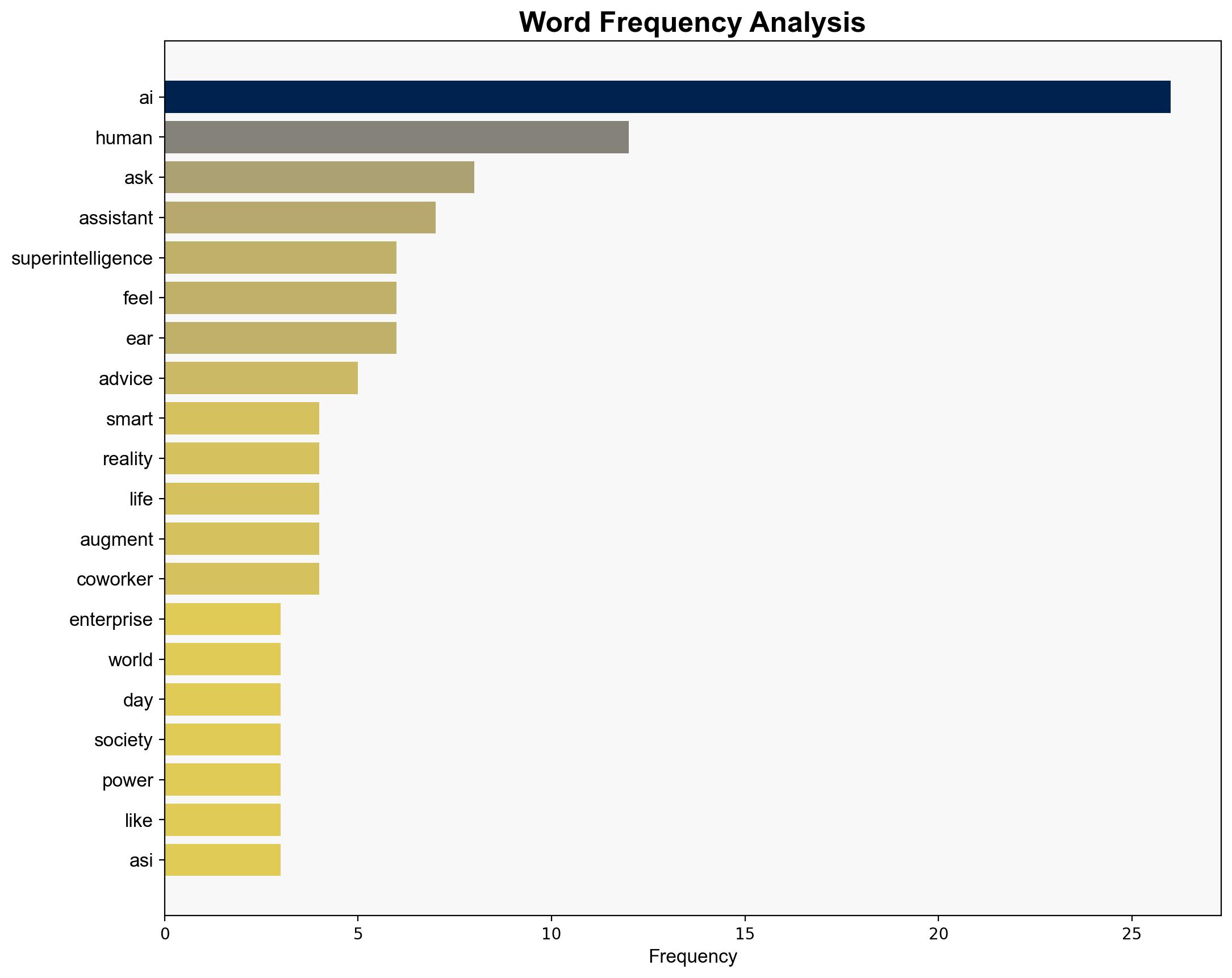

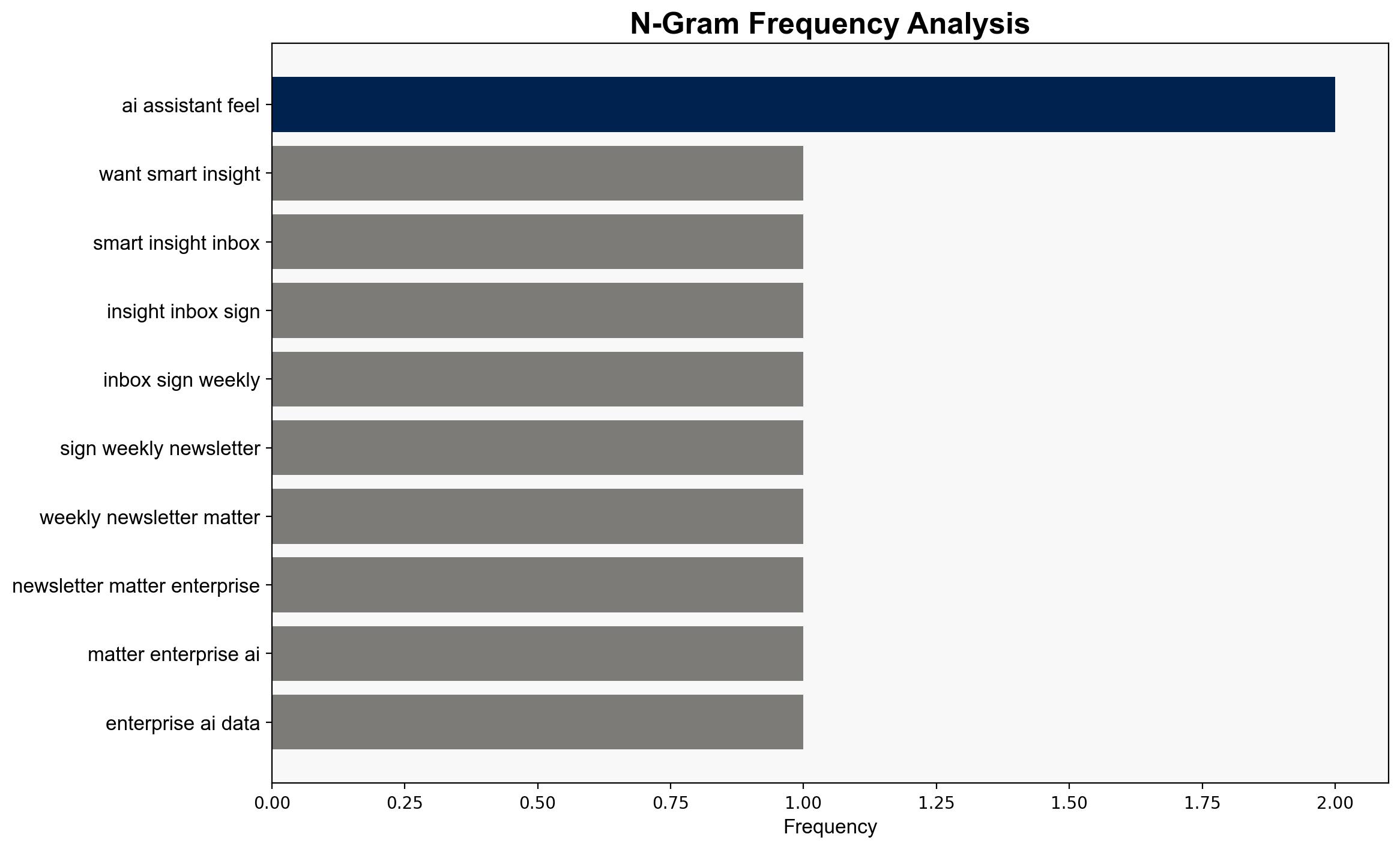

The best-supported hypothesis is that the emergence of superintelligence will cause significant societal disruption, primarily by concentrating power in a few large AI companies and fundamentally shifting human agency. Confidence Level: Moderate. Recommended actions: develop regulatory frameworks to manage AI deployment, and foster public-private partnerships so that the benefits of AI are distributed equitably.

2. Competing Hypotheses

1. **Hypothesis A**: Superintelligence will primarily lead to societal disruption by concentrating unprecedented power in a few large AI companies, resulting in significant job market upheaval and a shift in human agency.

2. **Hypothesis B**: Superintelligence will enhance human capabilities and societal efficiency, resulting in a net positive impact through augmented intelligence and improved decision-making processes.

Using ACH 2.0 (Analysis of Competing Hypotheses), Hypothesis A is better supported, given current trends in AI development and the concentration of AI capabilities in a few major corporations. Supporting evidence includes the ongoing discourse on AI’s potential to disrupt job markets and the increasing reliance on AI for decision-making.

3. Key Assumptions and Red Flags

– **Assumptions**: Both hypotheses assume the rapid advancement of AI technologies and their integration into daily life. Hypothesis A assumes limited regulatory intervention, while Hypothesis B assumes successful integration of AI with minimal societal resistance.

– **Red Flags**: Lack of empirical data on the long-term societal impacts of superintelligence. Potential bias in assuming AI will be either wholly disruptive or wholly beneficial, without considering mixed outcomes.

– **Blind Spots**: The role of international regulatory bodies and potential geopolitical tensions arising from AI dominance are not addressed.

4. Implications and Strategic Risks

– **Economic**: Potential for massive job displacement and economic inequality if AI capabilities are concentrated in a few entities.

– **Cyber**: Increased vulnerability to cyber threats as AI systems become integral to infrastructure.

– **Geopolitical**: Risk of AI arms race and strategic imbalances between nations with differing AI capabilities.

– **Psychological**: Potential erosion of human agency and identity as AI becomes more integrated into decision-making.

5. Recommendations and Outlook

- Develop international regulatory frameworks to manage AI deployment and ensure equitable distribution of benefits.

- Encourage public-private partnerships to foster innovation and address societal impacts.

- Scenario Projections:

  - Best: AI enhances human capabilities, leading to societal prosperity and innovation.

  - Worst: AI concentration leads to societal disruption and geopolitical tensions.

  - Most Likely: A mixed outcome with both positive enhancements and significant challenges.

6. Key Individuals and Entities

– OpenAI

– Major tech companies (e.g., Google, Apple, Samsung)

7. Thematic Tags

national security threats, cybersecurity, economic impact, AI regulation, societal transformation